treat high

At some point I unknowingly downloaded adware called Top Deal onto my computer. It turns random words on websites into highlighted links, and I get pop-up ads for McAfee and other junk announcements. There is no extension for it in my preferences that I can remove.

Removing browser pop-up problems

Malwarebytes | Free Anti-Malware Detection and Removal Software

Adblock Plus 1.8.9, GlimmerBlocker, or AdBlock

Remove adware that displays pop-up ads and graphics on your Mac

How to remove FlashMall adware from OS X

Stop pop-up ads and windows in Safari - Apple Support

Useful links about malware problems

Open Safari and select Preferences from the Safari menu. Click the Extensions icon in the toolbar. Disable all extensions. If that stops your problem, re-enable them one by one until the problem returns. Then remove that extension, since it is the source of the problem.

The following comes from user stevejobsfan0123. I made minor changes to adapt it to this format.

Dealing with browser pop-ups in Safari

Common pop-ups include a message saying that the government has taken over your computer and you must pay to have it released (often called "Moneypak"), or a fake message saying that your computer has been infected and you need to call a tech support number (sometimes claiming to be Apple) to get it resolved. First of all, understand that these pop-ups are not caused by a virus and that your computer has not been compromised. This "hack" is limited to your web browser. Also understand that these messages are scams, so do not pay any money, call the number, or provide personal information. This article gives an overview of how to get rid of the pop-up window.

Quit Safari

Usually, these pop-ups will not go away when you click 'OK' or 'Cancel'. In addition, several menus in the menu bar may become disabled and appear greyed out, including the option to quit Safari. You will probably need to force quit Safari. To do this, press Command + Option + Esc, select Safari, and click Force Quit.

Relaunch Safari

If you relaunch Safari, the page will reopen. To avoid this, hold the Shift key while opening Safari. This prevents the windows from the last time Safari was running from reopening.

This will not work in every case. The Shift key must be held at the right moment, and in some cases, even when this is done correctly, the window reappears. In these circumstances, after force quitting Safari, turn off Wi-Fi or disconnect Ethernet, depending on how you connect to the Internet. Then relaunch Safari normally. It will try to reload the malicious web page, but without a connection it will not be able to. Navigate away from that page by entering a different URL, e.g. www.apple.com, and try to load it. Now you can reconnect to the Internet, and the page you entered will be displayed instead of the malicious one.

Tags: Notebooks

Similar Questions

-

Over recent weeks I have loaded several batches of images into Lightroom, star-rated them, and processed the highly rated ones to JPEG files for upload to my SmugMug account, as I have done for years. I just noticed that several batches from last week lost their star ratings. There was no change to the process. The batches from this week and from three weeks ago are fine. What should I do?

(1) How could the stars disappear?

One possibility: in Grid mode, typing 0 (zero) removes any star rating from the selected image, and if several or all of the images were selected when the 0 was typed, the stars would be removed from all selected images.

(2) Possible solution?

Depending on how often you back up the catalog, you may be able to go back to a point before the stars disappeared by opening an earlier catalog from your backups.

No certainty, but a good guess.

BobMc

-

Artifacts from high-ratio decimation filtering

Hello

I have a problem with artifacts in my data caused by a high-decimation-ratio filter.

I use the Digital Filter Design NStage MRate Filter Design VI to create a three-stage filter that achieves a decimation ratio of 2000. The first stage is a CIC. The other two are FIR. The first-stage decimation ratio is 500; the other two are 2 each. My incoming data rate is between 1 and 10 MS/s.

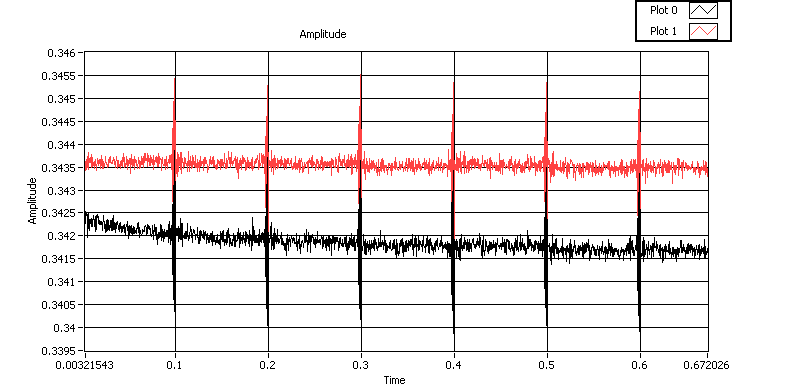

I'm acquiring a few seconds of data in blocks of 200k samples, and I'm filtering them block by block. I get artifacts at the boundaries between the blocks, as shown below at two different zoom levels:

When implementing this filter, I got the best results using the DFD NStage MRate Filtering VI with the symmetric extension type. I think that is supposed to avoid artifacts at the ends of the data.

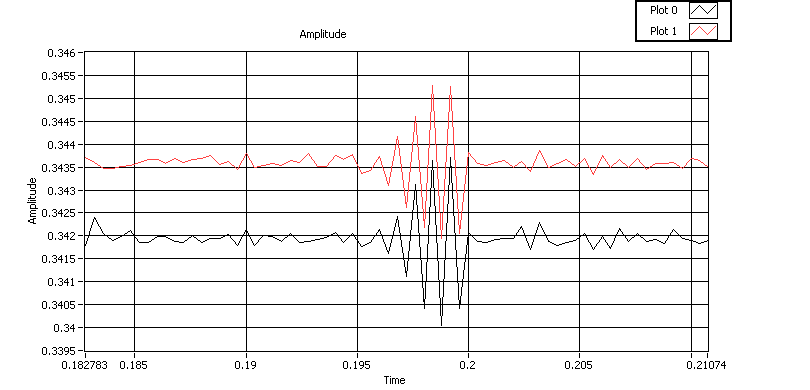

My application is shown below. Another loop acquires the data blocks and stores them in a queue. This loop processes each data block. The reason for the inner loop is that data are acquired from two channels simultaneously, so the waveform output is a 2 x 200000 array. The 'IQ Mix' VI mixes a wave of the equivalent frequency with the data to convert it into I and Q signals centered at DC.

Has anyone dealt with this problem before? It gets worse with higher decimation ratios and lower bandwidth.

Cody,

Thanks for the offer, but I think I have tracked this problem down.

Issue 1 was that if I want to process continuous data, my mixing waves must be continuous. I was mixing the same wave with each block. I made the phase of my mixing wave continuous from block to block, and that removed a lot of the artifacts.

Issue 2 was that I needed to use the right filtering VI. Above, I was using the VI for single-shot, non-continuous processing.

Issue 3 was that, when using the continuous processing VI, I need to make sure I use a separate VI instance for each channel I want to process. This means I can't use a For loop to iterate over the channels. A non-parallelized For loop uses the same instance for all iterations, and a parallelized For loop gives no guarantee about which iteration uses which instance.
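Issue 1 (keeping the mixing oscillator's phase continuous across blocks) can be illustrated with a minimal Java sketch. It is not the LabVIEW 'IQ Mix' VI: the class name `PhaseContinuousMixer` is hypothetical, and it assumes real-valued input, a fixed sample rate, and a fixed mixing frequency. The essential point is that the phase accumulator is a field, so it survives between calls instead of restarting at zero for every block.

```java
/**
 * Hypothetical down-converter whose oscillator phase persists between blocks,
 * so block boundaries do not introduce phase jumps.
 */
final class PhaseContinuousMixer {
    private static final double TWO_PI = 2.0 * Math.PI;
    private final double phaseIncrement; // oscillator advance per sample, in radians
    private double phase = 0.0;          // carried over from one mix() call to the next

    PhaseContinuousMixer(double mixFrequencyHz, double sampleRateHz) {
        this.phaseIncrement = TWO_PI * mixFrequencyHz / sampleRateHz;
    }

    /** Multiplies one real block by exp(-j*phase) and returns {I, Q}. */
    double[][] mix(double[] block) {
        double[] inPhase = new double[block.length];
        double[] quadrature = new double[block.length];
        for (int n = 0; n < block.length; n++) {
            inPhase[n] = block[n] * Math.cos(phase);
            quadrature[n] = -block[n] * Math.sin(phase);
            // Advance and wrap so the accumulator stays small during long acquisitions.
            phase = (phase + phaseIncrement) % TWO_PI;
        }
        return new double[][] {inPhase, quadrature};
    }
}
```

Creating a new mixer for every block would reset `phase` to 0 and reproduce the discontinuities described above; the sketch assumes one instance per channel for the whole acquisition.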

I'm not completely out of the woods yet, however. I still have problems with the first block of data that I process. I think that's a different problem for a separate thread, though.
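Issues 2 and 3 both come down to filter state: a continuous filter has to remember the tail of the previous block, and each channel needs its own copy of that memory. The Java sketch below shows the idea with a plain FIR decimator; it is not the CIC/FIR cascade or the NI DFD API, and `StatefulFirDecimator` is a hypothetical name.

```java
/**
 * Hypothetical FIR decimator that remembers its delay line and decimation phase
 * between calls, so filtering block by block matches filtering the whole record.
 * Use one instance per channel: the state must never be shared between channels.
 */
final class StatefulFirDecimator {
    private final double[] taps;
    private final int factor;
    private final double[] history; // last taps.length - 1 inputs seen so far (zeros at start)
    private int skip = 0;           // inputs to skip at the start of the next block

    StatefulFirDecimator(double[] taps, int factor) {
        if (taps.length == 0 || factor < 1) {
            throw new IllegalArgumentException("need at least one tap and factor >= 1");
        }
        this.taps = taps.clone();
        this.factor = factor;
        this.history = new double[taps.length - 1];
    }

    double[] process(double[] block) {
        // Work buffer = saved history followed by the new samples.
        double[] buf = new double[history.length + block.length];
        System.arraycopy(history, 0, buf, 0, history.length);
        System.arraycopy(block, 0, buf, history.length, block.length);

        int count = skip < block.length ? (block.length - 1 - skip) / factor + 1 : 0;
        double[] out = new double[count];
        int n = skip; // index (within block) of the newest input for the current output
        for (int j = 0; j < count; j++, n += factor) {
            double acc = 0.0;
            int newest = history.length + n; // the same sample, indexed in buf
            for (int k = 0; k < taps.length; k++) {
                acc += taps[k] * buf[newest - k];
            }
            out[j] = acc;
        }
        skip = n - block.length; // carry the decimation phase into the next block

        // Keep the newest taps.length - 1 inputs for the next call.
        System.arraycopy(buf, buf.length - history.length, history, 0, history.length);
        return out;
    }
}
```

Following issue 3, the sketch assumes an array such as `StatefulFirDecimator[] perChannel` with one entry per channel, because every call overwrites `history` and `skip`.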

-

Real-time display at a high data acquisition rate with continuous recording

Hi all

I have run into a problem and need help.

I am acquiring voltages and the corresponding currents with a PCI-6221 card. While acquiring data, I would like to see the values on an XY graph, so that I can check current vs. voltage and not only voltage/current vs. time. In addition, the data should be recorded during the acquisition.

First, I create analog input channels with the DAQmx Create Virtual Channel VI, then I set the sampling rate and mode and start the tasks. DAQmx Read is placed in a While loop. Because of the high noise-to-signal ratio, I want to average, for example, every 200 points of the acquired current and plot this against the averaged acquisition time or the averaged voltage. The data recording should also happen in the While loop.

My first idea was to run the acquisition in continuous mode with, for example, a 10 kS/s sampling rate. DAQmx Read is set to 1D Wfm NChan NSamp (there are 4 channels in total), and the number of samples per channel is, for example, 1000 to avoid buffer overflow errors. Each of these 1000-sample packets should be separated (I use Index Array at the moment). After extracting separate waveforms from the 1D array of waveforms, I extract the Y values to get the waveform components. The resulting array must then be processed to get average values.
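The averaging step itself is cheap. As a minimal Java sketch, assuming the Y values of one channel are already available as a `double[]` and that a partial group left at the end of one read is completed by the next read (the class name `BlockAverager` is hypothetical; in LabVIEW the same thing would be done with array functions):

```java
/** Hypothetical averager: returns the mean of every complete group of groupSize samples. */
final class BlockAverager {
    private final int groupSize;
    private double partialSum = 0.0; // running sum of the unfinished group
    private int partialCount = 0;    // samples already in the unfinished group

    BlockAverager(int groupSize) {
        if (groupSize < 1) throw new IllegalArgumentException("groupSize must be >= 1");
        this.groupSize = groupSize;
    }

    /** Consumes one read; an incomplete trailing group is carried over to the next call. */
    double[] push(double[] samples) {
        double[] out = new double[(partialCount + samples.length) / groupSize];
        int produced = 0;
        for (double s : samples) {
            partialSum += s;
            partialCount++;
            if (partialCount == groupSize) {
                out[produced++] = partialSum / groupSize;
                partialSum = 0.0;
                partialCount = 0;
            }
        }
        return out;
    }
}
```

With 1000 samples per read and groups of 200, each call returns 5 averages; one averager per channel (current, voltage, time) gives matching X and Y values for the XY graph.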

But how can I compute these averages without slowing down my loop?

My idea/concern is this: I have read 1000 samples after about 0.1 s. These are then divided into single waveforms, the time information is stripped off, some kind of averaging loop is used (I don't know exactly how), and the data are passed to an XY chart and saved to a .dat file. After all that has happened (I hope I understood the data flow within a While loop correctly), the code in the While loop runs again, and the next 1000 samples are read and processed.

But if the processing takes too long, DAQmx Read is called too late, and cycle by cycle the reading falls further behind the data generated by the PCI-6221 card.

Is this concern reasonable? And how can I get around it? Does anyone know a way to average and save the data?

I mean, the first thing I would consider is increasing the number of samples per channel, but this also increases the duration of the data processing.
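The usual way around this concern is a producer/consumer split: one loop does nothing but read from the card and enqueue each block, and a second loop dequeues, averages, plots, and saves. In LabVIEW this is built from two While loops and a queue; the Java sketch below only illustrates the same structure with `java.util.concurrent.BlockingQueue`. `readBlock` is a hypothetical stand-in for the DAQmx Read call, not a real driver API, and `ProducerConsumerSketch` is a hypothetical name.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;
import java.util.function.Supplier;

final class ProducerConsumerSketch {
    private static final double[] END = new double[0]; // sentinel: acquisition finished

    /**
     * readBlock: hypothetical stand-in for a driver read; returns null when acquisition ends.
     * process:   averaging, plotting, and saving; may occasionally take longer than one read.
     */
    static void run(Supplier<double[]> readBlock, Consumer<double[]> process, int queueCapacity)
            throws InterruptedException {
        BlockingQueue<double[]> queue = new ArrayBlockingQueue<>(queueCapacity);

        // Producer: only reads and enqueues, so it returns to the read call quickly.
        Thread producer = new Thread(() -> {
            try {
                double[] block;
                while ((block = readBlock.get()) != null) {
                    queue.put(block); // waits only if the consumer is a full queue behind
                }
                queue.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        // Consumer: runs on the calling thread and handles blocks in arrival order.
        while (true) {
            double[] block = queue.take();
            if (block == END) {
                break;
            }
            process.accept(block);
        }
        producer.join();
    }
}
```

The queue absorbs temporary slowdowns in the processing loop; on average, processing still has to keep up with the acquisition rate, otherwise the queue fills and the reader eventually falls behind again.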

The other question concerns timing. If I understand correctly, the timestamp is generated once when the task starts (with DAQmxStartTask), and the time difference between data points is then computed as 1 divided by the sampling rate. However, if the processing takes considerable time, how can I make sure that this error does not accumulate?
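On the timing question: as long as the hardware sample clock paces the acquisition and no samples are lost, the processing time does not enter the timestamps at all, because the time of sample k is t0 + k / fs, where k counts samples since the task started. Computing each timestamp from a running sample counter, rather than by repeatedly adding dt, also avoids floating-point drift. A minimal Java sketch (the class name `SampleClock` is hypothetical):

```java
/** Hypothetical timestamp generator driven by a sample counter, not by wall-clock time. */
final class SampleClock {
    private final double t0Seconds;
    private final double sampleRateHz;
    private long samplesSoFar = 0; // total samples handed out since the task started

    SampleClock(double t0Seconds, double sampleRateHz) {
        this.t0Seconds = t0Seconds;
        this.sampleRateHz = sampleRateHz;
    }

    /** Returns timestamps for the next block of n samples and advances the counter. */
    double[] nextBlock(int n) {
        double[] t = new double[n];
        for (int i = 0; i < n; i++) {
            // t_k = t0 + k / fs, computed directly from k so no error accumulates
            t[i] = t0Seconds + (samplesSoFar + i) / sampleRateHz;
        }
        samplesSoFar += n;
        return t;
    }
}
```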

I'm sorry for the long plain text!

You can find my example VI attached (only to show roughly what I was thinking; I know there are two averaging functions and the rates are not set correctly yet).

Best wishes and thank you in advance,

MR. KSE

PS: I should add: imagine the data acquisition running on a really old and slow PC, for example a Pentium III.

PPS: I don't know why, but I can't attach my VI...

-

Triggering at a high sampling rate

Dear community

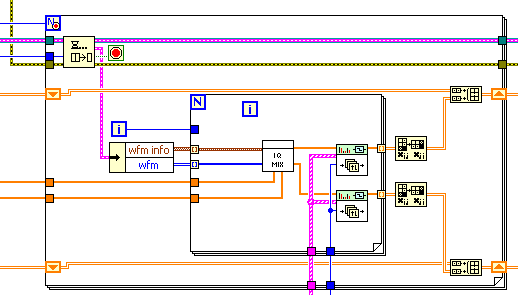

I am using a scanning tunneling microscope that feeds two voltage signals into my USB-6212 data acquisition card (LabVIEW 2013 SP1). One voltage signal is the voltage applied to a piezo in the microscope (AI0). This signal drifts slowly over time and is noisy. The other signal is the tunneling current converted into a voltage (AI2) (see attached photo):

Ideally, I would like to record both signals between the dotted lines to a .txt file whenever the spike event in the top image occurs. This should happen about once per second, over a whole day.

So far, I've written a VI that computes the moving average of the piezo signal, and if the piezo voltage exceeds a certain percentage of the running average, it fires a 'Save to file' command. The VI works well at a sampling rate of 100 Hz, but when I go to 20 kHz, the trigger does not work properly. Also, I am only looking at a chunk of a fixed number of samples (in this case, 1000) and checking whether there is a trigger event within those 1000 samples. So if an event occurs around sample 0 or 1000, it gets cut and split into two files, which I want to avoid.

I don't have much experience with LabVIEW and have probably broken every design rule in the book.

My question is whether there is a smarter way to automatically save the signal between the same dotted lines at high sampling rates?
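One common way to keep events from being split at the 0/1000 boundary is to make the detector stateful: keep a short history of the most recent samples, and once a trigger fires, keep collecting samples across as many subsequent reads as needed before saving. The Java sketch below illustrates that idea using the running-mean rule described above; the class `EventCapture`, the `factor` threshold, and the pre/post window lengths are assumptions for illustration, not what the attached VI does.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical stateful trigger: fires when a sample exceeds factor * (running mean)
 * and keeps preSamples before plus postSamples from the trigger on, even when the
 * event straddles two reads. No hold-off is applied after an event ends.
 */
final class EventCapture {
    private final int preSamples;
    private final int postSamples; // includes the trigger sample itself
    private final int avgWindow;
    private final double factor;

    private final ArrayDeque<Double> pre = new ArrayDeque<>();      // most recent samples
    private final ArrayDeque<Double> baseline = new ArrayDeque<>(); // running-mean window
    private double baselineSum = 0.0;
    private List<Double> capture = null; // non-null while an event is being recorded
    private int remaining = 0;

    EventCapture(int preSamples, int postSamples, int avgWindow, double factor) {
        if (preSamples < 0 || postSamples < 1 || avgWindow < 1) {
            throw new IllegalArgumentException("invalid window sizes");
        }
        this.preSamples = preSamples;
        this.postSamples = postSamples;
        this.avgWindow = avgWindow;
        this.factor = factor;
    }

    /** Feeds one read; returns the events completed during this read (possibly none). */
    List<double[]> process(double[] block) {
        List<double[]> finished = new ArrayList<>();
        for (double x : block) {
            boolean baselineReady = baseline.size() == avgWindow;
            if (capture == null && baselineReady && x > factor * (baselineSum / avgWindow)) {
                capture = new ArrayList<>(pre); // pre-trigger samples, oldest first
                remaining = postSamples;
            }
            if (capture != null) {
                capture.add(x);
                if (--remaining == 0) {
                    finished.add(capture.stream().mapToDouble(Double::doubleValue).toArray());
                    capture = null;
                }
            }
            // Update history after the decision so a spike is not part of its own baseline.
            pre.addLast(x);
            if (pre.size() > preSamples) pre.removeFirst();
            baseline.addLast(x);
            baselineSum += x;
            if (baseline.size() > avgWindow) baselineSum -= baseline.removeFirst();
        }
        return finished;
    }
}
```

Because all state lives in the object, the block size no longer determines where an event can be cut; each completed event could then be written to its own file.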

Thank you very much in advance!

Hi Mario,

I rewrote portions of your VI to (hopefully) improve performance. There is no need for three queues, and nothing is enqueued unless a trigger occurs.

I'm confused by the trigger, which seems to detect high-side edges of the piezo signal even though the spike points in the negative direction. I modified this logic to (possibly) trigger on a high-side threshold of the tunneling signal instead.

It is unclear what might happen at 20 kHz. The example shows a constant 1 kHz sampling rate and 1k samples processed per loop iteration. If the sampling rate is changed to 20 kHz, the loop will have to run at 20 Hz in order to keep up with the acquired data (at 1k samples per read).

I hope the attached VI helps (not tested).

-

High disk activity at startup - CbsPersist log + DataStore.edb

I am facing the same high disk activity during every boot, lasting several minutes.

I used Resource Monitor to see what is happening, and every time the same two items are at the top of the list when it is sorted in descending order by the Total (B/s) column:

- DataStore.edb in C:\Windows\SoftwareDistribution\DataStore, currently 1.13 GB in size.

- CbsPersist_DateTime.log in C:\Windows\Logs\CBS, several files, 0.5 GB to 4 GB in size.

I have several questions:

- Why do these files cause high disk activity even when no updates were installed before the computer was restarted?

- Is there any way to limit the disk activity caused by the DataStore.edb file, other than disabling updates (which are recommended to be enabled) or changing how updates are processed?

- Is it safe, and will it help, if I delete the contents of the C:\Windows\Logs\CBS folder?

Thanks in advance for your suggestions. I have Windows 7, and this is the one big issue I have.

Hi Jirina,

Since you are facing operating system performance problems caused by high disk usage, don't worry, we will help you with this problem.

Could you tell us exactly how you are observing the high disk usage on your device?

I would like to let you know that this folder is where Windows Update stores downloaded software updates. Opinions are divided on deleting this folder. When you run Disk Cleanup on your device, the temporary folders on the computer are deleted.

Refer to: Tips to free up drive space on your PC

Since you are facing operating system performance problems, I recommend consulting the Microsoft help articles below to improve performance, and checking whether they help.

And,

Ways to improve your computer's performance

I hope this helps. Get back to us with an update on the operating system's performance for further assistance.

Thank you.

-

Win 7 - Shut down button shows updates to install, but no updates are ever processed at shutdown.

Latest Windows 7

Windows Update works fine in the sense that regular updates are processed and installed correctly. But for the last 3 or 4 weeks, I have noticed the Shut down button displaying the exclamation symbol, and when I hovered over the button it displayed the following message: "Install updates and then shut down your computer."

I shut down, but no updates were processed. I turned the computer on again and checked for updates manually, but there was nothing unusual. A few days later a bunch of updates were processed when I turned it on (all installed properly), but I still got the message on the Shut down button indicating updates to be processed at shutdown. When I shut down, no updates were processed. Other than an annoying message on the Shut down button, there seems to be nothing wrong with my computer.

To try to address the issue, I ran the Windows Update troubleshooter from the Microsoft site. It reported a problem ("Service registration is missing or corrupt") and said that it had been fixed. However, the Shut down button still indicates that updates will be installed at shutdown, but the computer shuts down quickly without any updates ever being processed.

This isn't a big problem for me, just annoying. Can anyone help?

Windows Update behaves properly and installs updates when they become available. The only problem was that every time I went to shut down, the system told me that updates would be installed at shutdown. But there were never any updates to process, and the system would shut down after a very slight delay. So, on advice from Microsoft, I changed the Windows Update setting to "manual", checked for updates from time to time, and the issue was "resolved."

-

New HP 'High Performance' desktop systems have contradictory specifications; which is correct?

Take the HP Pavilion HPE h8qe, for example (Windows 7 64-bit): the specifications indicate 6 DIMM slots, yet the maximum amount of memory seems to be 16 GB. In the past I bought HP 'High Performance' desktop computers that had 6 DIMM slots and took up to 24 GB of memory. Sales said 4 DIMM slots and 16 GB maximum memory (4 GB DIMMs). This seems completely backwards to me. Why would desktop computers take a step backward? I would expect 4 or 6 DIMM slots, each able to take 8 GB DIMMs, for a system maximum of 32 GB or 48 GB. What is the truth? The offshore support seems to be reading conflicting documentation. I just want to buy another HP computer that moves me into the future rather than the past. Can you help me find the correct specifications?

Thank you

Hi Bob,

The link you provided is dead.

You're right that the picture and the specifications are different. I'll let HP know. Good find!

You could look at HP workstations if you need more than 16 GB. The price jumps significantly when using 8 GB DIMMs. Some workstation models have 8 DIMM slots, so you might be able to get up to 32 GB without using 8 GB DIMMs, which would keep the cost down.

-

High availability with two 5508 WLAN controllers?

Hi all

We are considering implementing a new wireless solution based on Cisco WLC 5508 controllers and 1262N access points. We intend to buy about 30 access points and have two options: either buy one WLC 5508-50 or, for redundancy, two WLC 5508-25 controllers.

Is it possible to configure two WLC 5508s as a high-availability solution, so that the access points are distributed across the two WLCs and, if one WLC fails, the other manages all the APs?

If we have 30 access points and one of the two WLC 5508-25s fails, then of course the remaining one can manage only 25 of the 30 access points. Is there some way to choose which access points are managed and which are not?

What does such a configuration look like in general? Is a two-controller installation complex or simple to implement?

Thank you!

Michael

Hi Michael,

Do not forget that the 5508 uses a licensing system. The hardware can support up to 500 APs, but the actual number depends on the license you install.

I think two 5508s with 25-AP licenses will be more expensive than one 5508 with a 50-AP license.

If you have 2 WLCs, it is best NOT to spread the access points across both WLCs. Doing so increases roaming complexity (the WLCs have to hand clients off to each other all the time). If your goal was to gain speed, it really doesn't matter, since the 5508 can have up to 8 Gbit/s of uplink bandwidth and has the CPU capacity to handle 50 APs with no problems at all. So I find it best to have all the access points on one WLC; then, if something goes wrong, all the APs migrate to the other WLC anyway.

If you have 50 APs failing over to a 25-AP WLC, yes, you can select which ones will join. The APs have a priority system, so you assign priorities. If the WLC sees that it is at full capacity but higher-priority APs are trying to join, it will drop lower-priority APs to allow the high-priority ones to connect.
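The admission rule described above can be modelled in a few lines. This is only a toy model of the decision as this answer describes it, not Cisco code or configuration; `ApAdmission`, `join`, and the convention that a larger number means a more important AP are all hypothetical.

```java
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Toy model of priority-based AP admission on a controller with a fixed AP license count. */
final class ApAdmission {
    static final class Ap {
        final String name;
        final int priority; // assumption for this model: larger number = more important

        Ap(String name, int priority) {
            this.name = name;
            this.priority = priority;
        }
    }

    private final int capacity;
    // Min-heap by priority: the least important joined AP is always at the head.
    private final PriorityQueue<Ap> joined =
            new PriorityQueue<>(Comparator.comparingInt((Ap ap) -> ap.priority));

    ApAdmission(int capacity) {
        this.capacity = capacity;
    }

    /**
     * Admits ap if a slot is free, or if it outranks the least important joined AP,
     * which is then dropped and appended to dropped. Returns true if ap was admitted.
     */
    boolean join(Ap ap, List<Ap> dropped) {
        if (joined.size() < capacity) {
            joined.add(ap);
            return true;
        }
        Ap lowest = joined.peek();
        if (lowest != null && ap.priority > lowest.priority) {
            dropped.add(joined.poll());
            joined.add(ap);
            return true;
        }
        return false; // equal or lower priority than every joined AP: rejected
    }

    int joinedCount() {
        return joined.size();
    }
}
```

In the 30-AP / 25-AP scenario from the question, this model means that the APs given the highest priorities win the contention for the surviving controller's licensed slots.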

WLC redundancy is not exactly "HA". Either 2 WLCs work together (for example, if you had 700 APs and needed 2 WLCs) and share the clients, or all APs sit on one WLC and, when it fails, they join the other available controller.

The only thing you have to do is put both WLCs in the same mobility group so that they know about each other.

-

Hi all

We have several QualityStage standardization jobs created to standardize the data from the source systems. Among them, 2 jobs cause CPU usage above 97% throughout the run. The remaining jobs, which use the same logic but different source-system data, do not use this much CPU.

We ran these 2 high-CPU jobs separately with 50k records and also with around 1 lakh (100,000) records, but the CPU usage stays the same, i.e. above 97%. Are there specific job settings that could cause this problem? For the other jobs, CPU usage averages only 55%. Performance-wise this is not accepted, and the performance test (PT) sign-off is blocked because of it.

Appreciate your suggestions!

Thank you

Amol 971-505343978

Hello

Why do you think high processor utilization is a bad thing? On the contrary, it means QualityStage is processing this job as fast as it can. The other jobs [the ones running at 55%] are probably I/O bound and would likely run a lot faster if you removed the I/O bottleneck.

Kind regards

Nick

-

Problem with 'Process Multiple Files' quality?

I have pictures for my online store that need to be zoomable to a really large size without distortion. So I leave the resolution at 300 dpi, and I ran several tests on my Mac, all with exactly the same starting picture and size.

1. I resize in PSE 14 without using Process Multiple Files.

2. I resize in PSE 14 using Process Multiple Files.

3. I resize in Preview and don't use PSE at all.

In all of these cases, the file is larger with PSE than with Preview (547 KB vs. 114 KB), which is a big problem when I have lots of images per page. The quality differences between these tests are minimal; they're negligible. However, the problems start when I add a watermark to the image using the 'Process Multiple Files' function; this is where it goes south for me! Whether the image was resized in Preview OR in PSE, as soon as it gets a watermark (and we are talking about just one line of tiny text at the bottom right), the quality gets worse.

After watermarking, the version resized in PSE and watermarked with PSE "Process Multiple Files" (test 1) goes from 547 KB to 79 KB, and there is a noticeable difference in photo quality as well. For the version resized in Preview and then watermarked with PSE "Process Multiple Files" (test 2), it goes from 114 KB to 83 KB. Not much difference in size, but honestly I would not have expected a big difference in either case, since I'm not actually resizing, only watermarking. But the quality of 'test 2' is still significantly different from the "Preview only" version without a watermark (the version without the watermark is hands down better).

I'm completely stumped on how to make good-quality, very "zoomable", watermarked images that stay small in size while maintaining quality. Any help is greatly appreciated!

Thank you

Sharon

gametrailgirl wrote:

Michel, what wonderful assistance you gave me. Now I need to play with the different save options and the bulk 'watermark' to see the quality.

Since I'm a Mac user, unfortunately the link you gave does nothing for me, because they provide only a Windows product. Also, I can't find any reference to Elements+ anywhere. Maybe Premiere Elements (?), which I don't have... I would like to be able to record actions, which could help with the logo, but honestly I have no idea how to put the logo on the images (or whether it would affect image quality when zooming). If you could explain these things to this relative Photoshop rookie, I'd be most grateful. In the meantime, I'll play with a few save, sizing, and "my watermark" options.

Thanks again for starting me off on the right track.

Sharon

There may be Mac-specific watermarking software; maybe other Mac users can give you suggestions.

For Elements+, see:

It's an incredible number of extra features for $12...

Since using and customizing the scripts is not so obvious, I should mention that the author, Andrei Doubrovski, helps out in the following forum:

Home page | Photoshop Elements & more

Your question would be of interest to many other users of that forum.

Adding a watermark logo .png file is easy and guarantees the best quality.

Premiere Elements is the video editor.

To check the maximum quality you can get in your current workflow while keeping the output file small, save only the first resizing step as PNG, TIFF, or PSD; otherwise you might not get the best results. For the second pass, watermarking with "Process Multiple Files", compare the final result across different compression options. Using "high quality" JPEG should not create a visible difference. The limit for users to "zoom out" is an image size of 1000 pixels.
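To see the JPEG size/quality trade-off outside PSE, you can encode the same image at several quality settings and compare the byte counts. A minimal Java sketch using the standard `javax.imageio` JPEG writer; the synthetic test image and the quality values are arbitrary choices, the class name `JpegQualityProbe` is hypothetical, and PSE's own "high quality" setting is not necessarily on the same scale.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.imageio.IIOImage;
import javax.imageio.ImageIO;
import javax.imageio.ImageWriteParam;
import javax.imageio.ImageWriter;
import javax.imageio.stream.ImageOutputStream;

final class JpegQualityProbe {
    /** Encodes img as JPEG at the given quality (0.0 = smallest, 1.0 = best) and returns the byte count. */
    static int encodedSize(BufferedImage img, float quality) throws IOException {
        ImageWriter writer = ImageIO.getImageWritersByFormatName("jpeg").next();
        ImageWriteParam param = writer.getDefaultWriteParam();
        param.setCompressionMode(ImageWriteParam.MODE_EXPLICIT);
        param.setCompressionQuality(quality);
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ImageOutputStream out = ImageIO.createImageOutputStream(bytes)) {
            writer.setOutput(out);
            writer.write(null, new IIOImage(img, null, null), param);
        } finally {
            writer.dispose();
        }
        return bytes.size(); // closing the stream above flushed everything into bytes
    }

    /** Builds a detailed synthetic RGB image so that compression differences show up. */
    static BufferedImage testImage(int width, int height) {
        BufferedImage img = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int r = (x * 7 + y * 3) & 0xFF;
                int g = (x * y) & 0xFF;
                int b = (x ^ y) & 0xFF;
                img.setRGB(x, y, (r << 16) | (g << 8) | b);
            }
        }
        return img;
    }
}
```

Running `encodedSize` on your own photos (loaded with `ImageIO.read`) at, say, 0.7, 0.85, and 0.95 shows how steeply the file size grows near the top of the scale, which is one reason a high but not maximum setting can be a reasonable compromise.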

I have a question: you say that with Preview you can resize, rename, and crop your images? Can you clarify this "cropping" method?

-

How do I make a high-quality photo look smooth and not grainy?

I transferred a picture from my camera, and when I edit it in Photoshop, it looks pretty grainy.

I'm doing an assignment for my photography class where I have to imitate a photographer's work, and I chose this picture: http://ic.pics.livejournal.com/_pinkpornstar_/1198725/322258/322258_original.jpg. However, even though the picture I took is quite high in quality, once I import it into Photoshop I can't find a way to make it as clear as the "professional" photos out there!

I know that if I zoom, it looks less grainy, but even after I save it and zoom, the picture is still not as smooth as the one I'm aiming for.

Is it because of the settings I used when taking the photo?

PS: Sorry for this basic question, I'm a noob at this.

JPEG quality is never as high as it can be. Shoot raw and process in Camera Raw, where you have full control over all processing and noise reduction settings.

JPEGs are processed in-camera behind closed doors, highly compressed, and throw away large amounts of data.

-

How do I export to PDF with crop marks *and* high-resolution images?

Hi. First, apologies: I have another query here, and this is a related issue. Because I have been unable to solve this problem, which is with InDesign CS5, I downloaded the InDesign CC trial, as I am working to a deadline.

OK, so I'm used to being able to export high-quality PDF files with crop marks from CS5. That's what I want to do with my file.

In InDesign CC, it seems that if I simply 'export', I am not given any option to add crop marks, even though the file is set up with a bleed.

If I instead 'print' to Adobe PDF, I *can* add crop marks. However, I then lose the option to output images at high resolution (300 dpi), and they come out pixelated.

Maybe I'm missing something, but for now it seems to me that I have a choice between high-quality images without crop marks, or crop marks with bad-quality images!

Any help is appreciated!

Thank you!

PDF presets are the same in InDesign CC as they are in InDesign CS5.

Choose File > Export > Adobe PDF (Print).

Simply choose a PDF preset that sets the image resolution high enough for your printing. There is also an option to include printer's marks and bleed.

-

Consumers consume slowly - high UnAck message count

I have GlassFish 2.1 with OpenMQ 4.3.

I have other posts related to the same project, but I'm seeing different symptoms, so I put them in different threads.

I'm running into an issue where consumers consume messages very slowly (200 RPM). It starts fine (3000 RPM), but after 20-25 hours it suddenly slows down. If anyone has suggestions on how to diagnose the exact problem, I'd appreciate it. I'm also trying OpenMQ 4.5 by simply replacing the bin/lib files, but a solution with OpenMQ 4.3 is certainly preferred...

I also see a high number of unacknowledged (UnAck) messages:

```
--------------------------------------------------------------------------------------------------------
Name                Type   State    Producers        Consumers        Msgs
                                    Total  Wildcard  Total  Wildcard  Count  Remote  UnAck  Avg Size
--------------------------------------------------------------------------------------------------------
BillingQueue        queue  running  0      -         0      -         0      0       0      0.0
testStatsQueue      queue  running  0      -         0      -         72     0       77     1441.6111
EsafQueue           queue  running  0      -         0      -         0      0       0      0.0
InitialDestination  topic  running  0      0         5      0         440    80      20     1328.0
Queue               queue  running  0      -         0      -         0      0       0      0.0
MQ.sys.DMQ          queue  running  0      -         0      -         0      0       0      0.0
```

Here is an excerpt of the consumer code:

I create the connection in @PostConstruct and close it in @PreDestroy, so that I don't have to do it every time.

private ResultMessage [] doRetrieve (String username, String password, String jndiDestination, String filter, int maxMessages, long timeout, type RetrieveType)

throws InvalidCredentialsException, InvalidFilterException, ConsumerException {}

// ******************************************************************

Resources

// ******************************************************************

A session = null;

try {}

If (log.isTraceEnabled ()) log.trace ("session creation treated with JMS broker.");

session = connection.createSession (Session.SESSION_TRANSACTED, true);

// **************************************************************

Locate the linked destination and create a consumer

// **************************************************************

If (log.isTraceEnabled ()) log.trace ("looking for a named destination:" + jndiDestination);

Destination destination = ic.lookup (jndiDestination) (Destination);

If (log.isTraceEnabled ()) log.trace ("Creation of consumer to destination named" + jndiDestination);

MessageConsumer = consumption (filter == null | filter.trim () .length () == 0)? session.createConsumer (destination): session.createConsumer (destination, filter);

If (log.isTraceEnabled ()) log.trace ("JMS connection starting.");

Connection.Start ();

// **************************************************************

Consume messages

// **************************************************************

If (log.isDebugEnabled ()) log.trace ("Creating recovery containers.");

The < ResultMessage > list processedMessages = new ArrayList < ResultMessage > (maxMessages);

BytesMessage jmsMessage = null;

for (int i = 0; i < maxMessages; i ++) {}

// **********************************************************

Retrieve message attempt

// **********************************************************

If (log.isTraceEnabled ()) log.trace ("recovery attempt:" + i);

switch (type) {}

BLOCKING of the case:

jmsMessage = consumer.receive () (BytesMessage);

break;

IMMEDIATE case:

jmsMessage = consumer.receiveNoWait () (BytesMessage);

break;

TIMED case:

jmsMessage = consumer.receive (timeout) (BytesMessage);

break;

}

// **********************************************************

Process of recovered messages

// **********************************************************

If (jmsMessage! = null) {}

If (log.isTraceEnabled ()) log.trace ("Message retrieved\n" + jmsMessage);

// ******************************************************

Extract the message

// ******************************************************

If (log.isTraceEnabled ()) log.trace ("" extraction result message the JMS message containing "");

Byte [] extracted = new byte [(int) jmsMessage.getBodyLength ()];

jmsMessage.readBytes (extracted);

// ******************************************************

Message to decompress

// ******************************************************

If (jmsMessage.propertyExists (COMPRESSED_HEADER) & & jmsMessage.getBooleanProperty (COMPRESSED_HEADER)) {}

If (log.isTraceEnabled ()) log.trace ("Decompressing message.");

excerpt = since (extracted);

}

// ******************************************************

Message from treatment

// ******************************************************

If (log.isTraceEnabled ()) log.trace ("Message added to the recovery container.");

String signature = jmsMessage.getStringProperty (DIGITAL_SIGNATURE);

processedMessages.add (new ResultMessage (excerpt, signature));

} else

If (log.isTraceEnabled ()) log.trace ("no message was available.");

}

// **************************************************************

Container package return

// **************************************************************

If (log.isTraceEnabled ()) log.trace ("packaging recovered to return messages.");

ResultMessage [] collectorMessages = new ResultMessage [processedMessages.size ()];

for (int i = 0; i < collectorMessages.length; i ++)

collectorMessages = processedMessages.get (i);

If (log.isTraceEnabled ()) log.trace ("Return" + collectorMessages.length + "messages.");

Return collectorMessages;

    } catch (NamingException ex) {
        sessionContext.setRollbackOnly();
        log.error("Unable to locate the named queue: " + jndiDestination, ex);
        throw new ConsumerException("Unable to locate the named queue: " + jndiDestination, ex);
    } catch (InvalidSelectorException ex) {
        sessionContext.setRollbackOnly();
        log.error("Invalid filter: " + filter, ex);
        throw new InvalidFilterException("Invalid filter: " + filter, ex);
    } catch (IOException ex) {
        sessionContext.setRollbackOnly();
        log.error("Message decompression failed.", ex);
        throw new ConsumerException("Message decompression failed.", ex);
    } catch (GeneralSecurityException ex) {
        sessionContext.setRollbackOnly();
        log.error("Message decryption failed.", ex);
        throw new ConsumerException("Message decryption failed.", ex);
    } catch (JMSException ex) {
        sessionContext.setRollbackOnly();
        log.error("Unable to consume messages.", ex);
        throw new ConsumerException("Unable to consume messages.", ex);
    } catch (Throwable ex) {
        sessionContext.setRollbackOnly();
        log.error("Unexpected error.", ex);
        throw new ConsumerException("Unexpected error.", ex);
    } finally {
        try {
            // Always release the JMS session, even after a failure
            if (session != null) session.close();
        } catch (JMSException ex) {
            log.error("Unexpected error.", ex);
        }
    }
}
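The fragment decompresses the body only when the `COMPRESSED_HEADER` property is true, but the helper it calls is not shown. As a minimal sketch, assuming the producer compressed the body with GZIP (the post does not name the codec), such a helper could look like this; `CompressionSketch`, `compress` and `decompress` are hypothetical names. A corrupt payload makes `GZIPInputStream` throw an `IOException` (specifically a `ZipException`), which the fragment's `catch (IOException ex)` branch would turn into a `ConsumerException`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical helper: assumes GZIP was used on the producer side.
final class CompressionSketch {

    private CompressionSketch() {}

    // Compress a payload, as a producer might do before setting COMPRESSED_HEADER.
    static byte[] compress(byte[] data) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(data);
        } // closing the GZIP stream writes the trailer before we read the buffer
        return buffer.toByteArray();
    }

    // Decompress a payload read with BytesMessage.readBytes(); throws IOException on corrupt input.
    static byte[] decompress(byte[] compressed) throws IOException {
        try (GZIPInputStream gzip = new GZIPInputStream(new ByteArrayInputStream(compressed));
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            byte[] chunk = new byte[4096];
            int n;
            while ((n = gzip.read(chunk)) != -1) {
                out.write(chunk, 0, n);
            }
            return out.toByteArray();
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] original = "hello JMS".getBytes(StandardCharsets.UTF_8);
        byte[] roundTrip = decompress(compress(original));
        System.out.println(Arrays.equals(original, roundTrip)); // prints true
    }
}
```

Keeping compression and decompression in one helper makes it easy to check that producer and consumer agree on the codec with a simple round trip.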

Edited by: vineet, May 9, 2011 19:53

The issues you have posted concerning message delivery to consumers in the above test case may all be related to bugs 6961586 and 6972137, which have been fixed in 4.4u2p2 and 4.5. The symptoms caused by these two bugs are not limited to those in the bug descriptions; for example, the cause of bug 6972137 directly affects the consumer 'busy' state notification. Please upgrade to, or try, 4.5. As your application runs in the GlassFish server, please follow the notes in the following thread to try 4.5 with GlassFish 2.x:

How to check the update number for OpenMQ

Can I mix down at 32-bit with a sample rate higher than 44.1 kHz?

When I'm using Ableton Live, it lets me choose 16-, 24- or 32-bit, and then I can choose a sample rate of up to 192000 Hz. Is this possible in Audition? I've been through all the preferences and all the tabs and I can't find this option. Everything I find is a conversion or an adjustment option, but that isn't what I want. I want to mix down this way.

The closest I've found is when I go to "Export Audio Mix Down": I can select 32-bit, and there is a box for the sample rate with all the different values, but it doesn't let me change it from 44100.


sleepwalk1000 wrote:

Thanks for the responses, guys. Hmm, well, the reason I'm asking is that I'm preparing a CD to send to a mastering studio. The engineer told me to mix down to 24-bit instead of 16-bit. I asked him why, since everything ends up at 16-bit on the CD anyway. He said that even if my session has 16-bit files, if I run them through effects buses with VSTs such as Altiverb (which I do), the effects will sound better in the mixdown if they are processed at a higher bit depth. That would give him a better mix to work with in the mastering session. I assumed this meant that a higher sample rate would also be beneficial. Maybe not? I use a lot of high-quality VST effects, so I want to make sure I get the best possible results. I mean, if you have the option and hard disk space isn't a problem, why wouldn't you use it?

It's interesting that Export Audio Mix Down lets me mix a session of 16-bit files down to a 32-bit file, but doesn't let me change the sample rate. Maybe I'm not understanding these terms exactly. I always thought that, for both, the higher the number, the better the sound quality.

SteveG, are you saying it would be a waste to mix at anything more than 32-bit 44.1 kHz? What about 48k or 96k? Is there no noticeable difference?

The sample rate only affects the highest frequency that can be resolved; it has no effect on quality at all once the rate is high enough to resolve everything humans can hear. You find the highest frequency that any given sample rate can resolve by halving it to get the "Nyquist" frequency, so at 44.1k the highest frequency that can be fully resolved is 22.05 kHz. Human hearing extends up to 20 kHz, but only in young children; by the time you reach your teenage years it starts to fall off, especially if you listen to a lot of loud music... so 44.1k already exceeds any human's high-frequency response. Has all this been proven? You bet it has!
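The Nyquist rule above is simple arithmetic: divide the sample rate by two. A small Java sketch of it follows; the class and method names are illustrative only, and `canRepresent` uses the strict "below Nyquist" condition of the sampling theorem, since a tone exactly at half the sample rate is ambiguous.

```java
// Nyquist frequency: the highest frequency a given sample rate can represent.
final class NyquistSketch {

    private NyquistSketch() {}

    // Half the sample rate, in Hz.
    static double nyquistHz(double sampleRateHz) {
        if (sampleRateHz <= 0) {
            throw new IllegalArgumentException("sample rate must be positive");
        }
        return sampleRateHz / 2.0;
    }

    // True if a tone at toneHz lies strictly below the Nyquist limit of sampleRateHz.
    static boolean canRepresent(double toneHz, double sampleRateHz) {
        return toneHz < nyquistHz(sampleRateHz);
    }

    public static void main(String[] args) {
        double[] rates = {44_100, 48_000, 96_000, 192_000};
        for (double rate : rates) {
            System.out.printf("%.0f Hz -> Nyquist %.0f Hz%n", rate, nyquistHz(rate));
        }
        // 20 kHz, the commonly cited upper limit of hearing, already fits at 44.1 kHz.
        System.out.println(canRepresent(20_000, 44_100));
    }
}
```

Running it for the rates mentioned in the thread shows that every option above 44.1 kHz only moves the limit further beyond 20 kHz.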

To discover the truth behind the hype about sound quality, as far as more bits and higher sample rates are concerned, you should first read this thread on AudioMasters, and the links it contains. It's high-quality academic research, and all attempts to discredit it have been thoroughly demolished.

Your mastering engineer is correct up to a point, but not really for the right reasons: 32-bit mixes are usually more accurate, simply because the summing is done more precisely and the signal's dynamic range is significantly improved. Bear in mind that the only place you will hear any appreciable difference from a higher bit depth is in the really quiet parts of reverb tails. The best way to do a mix in Audition is to do it all in 32-bit (which is a floating-point version of 24-bit anyway), and if he really wants a 24-bit file, you can convert the 32-bit mix into a 24-bit integer copy after you have created it. As far as the 16-bit CD is concerned, if you (or the engineer) dither it properly when mastering the final 32-bit mix down to 16-bit for CD, then the effective resolution is higher than 16-bit anyway. There is also a thread on AudioMasters explaining all this (it's complicated).
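To make the 32-bit to 24-bit or 16-bit step concrete, here is a hedged Java sketch of quantizing floating-point samples to integers with TPDF (triangular probability density function) dither. It is a generic textbook technique, not Audition's actual algorithm; the class name, the [-1.0, 1.0] sample convention and the scaling by 2^(bits-1) - 1 are assumptions. The usage feeds in a constant level of 0.3 LSB at 16-bit: plain rounding turns it into silence, while the dithered output preserves it on average, which is the sense in which properly dithered 16-bit audio can carry detail below one LSB.

```java
import java.util.Arrays;
import java.util.Random;

// Sketch: quantize floating-point samples in [-1.0, 1.0] to signed N-bit integers,
// optionally adding TPDF dither first. Not Audition's actual algorithm.
final class DitherSketch {

    private DitherSketch() {}

    // If rng is null, samples are simply rounded; otherwise TPDF dither is added first.
    static int[] quantize(float[] samples, int bits, Random rng) {
        if (bits < 2 || bits > 24) {
            throw new IllegalArgumentException("bits must be between 2 and 24");
        }
        int max = (1 << (bits - 1)) - 1;  // 32767 for 16-bit, 8388607 for 24-bit
        int min = -(1 << (bits - 1));     // -32768 for 16-bit
        int[] out = new int[samples.length];
        for (int i = 0; i < samples.length; i++) {
            double scaled = samples[i] * (double) max;  // after scaling, 1 LSB == 1.0
            if (rng != null) {
                // Two independent uniform values in [-0.5, 0.5) sum to a triangular PDF over (-1, 1) LSB.
                scaled += (rng.nextDouble() - 0.5) + (rng.nextDouble() - 0.5);
            }
            long rounded = Math.round(scaled);
            out[i] = (int) Math.max(min, Math.min(max, rounded));  // clip to the legal range
        }
        return out;
    }

    public static void main(String[] args) {
        // A constant level of 0.3 LSB at 16-bit: smaller than the smallest step plain rounding can keep.
        float[] quiet = new float[100_000];
        Arrays.fill(quiet, 0.3f / 32767f);

        int[] plain = quantize(quiet, 16, null);
        int[] dithered = quantize(quiet, 16, new Random(42));

        System.out.println("plain mean:    " + Arrays.stream(plain).average().orElse(0));
        System.out.println("dithered mean: " + Arrays.stream(dithered).average().orElse(0));
    }
}
```

Passing `24` instead of `16` gives the 24-bit integer copy described above; at that depth the dither is roughly 48 dB lower relative to full scale.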

What all of the above implies is that if you keep your mix as 32-bit 44.1k files and you later need to remaster at a higher sample rate, all you have to do is upsample the files in Audition. You don't gain a single thing by doing that, but then again you won't lose anything either, and nobody will be able to tell the difference! This isn't a case of "why wouldn't you use it?"; it's really a case of "why would you?"

Either way, it should be noted that, owing to misinformation put about long ago by people who really should have known better, most people's understanding of sampling is completely and totally wrong. Once again, a search around AudioMasters will give you a better understanding of this. If I get a chance later, I'll look up all the relevant threads.

Maybe you are looking for

-

HP Pavilion 23-b034: How can I enter the BIOS on an HP Pavilion b034-23 desktop PC?

Hello! I'm really looking to overclock my RAM, and to do this I have to enter the BIOS. I searched some tutorials but without success. I have an HP Pavilion b034-23 (All-in-One) PC, and here are my PC specs (according to CPU-Z): AMD

-

I updated to iOS 9.3 and am being forced to log in to iCloud. I don't know why iCloud is hidden when checking the device. Please help me...

-

Hi all, in my VI I used Write To Spreadsheet File and Read From Spreadsheet File to create or modify a file. Now I want to add one more operation, i.e. deleting a file. Requirement: I should have a combo box that lists all the files I have.

-

10.0000 SUPPLY MEMORY ERROR

New LaserJet P1102W. I installed the cartridge provided in the box. Connecting to the embedded web server and checking the status, I get: 10.0000 SUPPLY MEMORY ERROR. Unable to communicate with the cartridge. And a warning not to use non-HP cartridges. What

-

How to disable automatic copying of original images

I really need help on how to disable the automatic copying of original photos. I have thousands of duplicates, have spent hours on help topics and websites, and cannot find this option. I'm extremely frustrated because this should be simple. If yo