Writing accounts, customs and data (the full POV intersection) to a file from HFM rules

Hi all, I'm trying to write to a file to debug HFM rules. I am able to get the entity, scenario, year, period and a timestamp saved to a file (see the code below). Is it possible to also write the accounts, customs and data intersections to the file, so that I can analyze performance? Basically, how can I get the whole POV into a log file to test the rules?

f.WriteLine txtStringToWrite & "-for:-" & HS.Entity.Member & "-" & HS.Scenario.Member & "-" & HS.Year.Member & "-" & HS.Period.Member & " " & Now()

Thanks in advance. Looking forward to hearing from you.

Chavigny.

The account, ICP and custom dimensions are not part of the point of view in the way that entity, period, year, scenario and value are. The only way to write these dimensions to a file is to loop over them in an array, such as the one returned by HS.OpenDataUnit, or over a member list. That is the only way to get the context for the intersections.

If you write every data intersection to a file, you will end up with a file at least as large as the database itself, and the process could be very slow. If that is your intention, you would be better off analyzing the database directly. Writing to a file should be used only for debugging.

-Chris

Tags: Business Intelligence

Similar Questions

-

Page 79 of erpi_admin_11123200.pdf says that "accounting entity groups are used to extract data from several accounting entities in a single data rule execution." I use the standard EBS adapter to load data into an HFM application. I created an accounting entity group made up of several accounting entities, but I can't find a place in FDMEE where I can select or use this group.

When you define an import format, you type the name and select the source system (e.g. EBS); you can then select either the source adapter (for example the EBS11 I adapter) or the accounting entity (which is what I select to define data load mappings), but not both. Note that there is no place to select the accounting entity group. The Location screen has a check entity group drop-down, but it does not list my accounting entity group, which I believe is something different anyway, and creating a location that points to an import format with the source adapter selected does not help either.

I'm confused: is it possible to load data from several accounting entities in one data load rule, or am I misreading the documentation? Thank you in advance.

Leave the accounting entity field blank in the import format. If you also leave it empty in the location, then when you define the data load rule (for the location with EBS as the source system), you will be able to select either a single accounting entity or an accounting entity group.

You can see here

Please mark this as helpful or as the answer so that others can find it.

Thank you

-

Creating a rules file to extract data through the OCI interface

Hello.

I'm new to OCI; earlier I used the DSN method to extract data from the database.

Can someone give me the steps to extract data through the OCI interface?

My database is Oracle 11g R2 and I use the 11.1.2.2 Fusion release.

Regards,

Mahesh Balla

The format is DBSERVERNAME:PORT/SERVICENAME

for example ORACLE11G:1521/ORCL

Cheers

John

http://John-Goodwin.blogspot.com/

-

Copy data from parent to child with a business rule or a calc script

Consider a simple dimension hierarchy like this:

DimensionX

Parent1

Child1

Parent2

Child2

Parent3

Child3

etc...

How can I code a business rule or calculation script to copy the data stored at the parent level to the children? Each parent has only one child, and the dimension in this example is sparse.

Well, unless you explicitly tag the parent as "Never Share" or have configured the IMPLIED_SHARE setting, these members will automatically be implied shares and contain the same data anyway (because there is only one child); see the documentation on dimension and member properties.

Alternatively, you can write a calc script that fixes on the level 0 members of this dimension (or whatever subset of level 0 members you want) and then uses a formula that does something like this:

@CURRMBR ("DimensionX") = @PARENTVAL ("DimensionX");

Because the dimension is sparse, you may run into block creation issues, depending on which blocks you know already exist.

-

Controlling the rows of data written to Excel (Write To Measurement File)

Hi all

Objective:

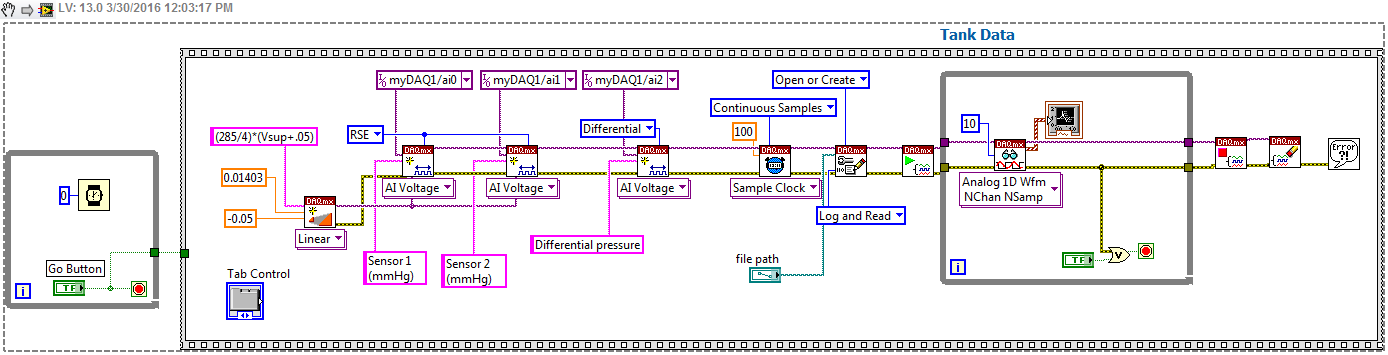

Acquire a live waveform from two pressure sensors and calculate the differential pressure. The LabVIEW VI is intended for students as an exercise in a module, in which the data are exported to an Excel file so that the students can perform further calculations. The Excel file must contain the data from the beginning to the end of a run.

Problem:

The pressure sensors work well and the waveform chart works well, but when you stop the data collection, the number of rows in the Excel output file only reflects the samples per channel setting of the DAQmx Read block (10 = 10 rows of data, 100 = 100 rows, etc.).

I would appreciate some advice on how to log the data to the worksheet from start to finish without affecting the student's visual experience (by that I mean that a samples per channel value greater than 10 makes the waveform laggy and jerky). I tried to resolve this with something along the lines of Tank1Solution.png, without success. I've included a picture of the original block diagram (Tank2Block.png) and the VI file I have been working on. Once more, any help would be much appreciated; I'm not well versed in LabVIEW and I do not know how to address this problem effectively.

Some guys are always doing things the hard way

Of course, you need a wire to ai0 - ai2 and ai1 - ai9. The DAQmx linear scale is of course very convenient! And hey, TDMS files import beautifully into Excel! The add-on is there, so why not just log the data to TDMS? TDMS is much more portable than xlsx.

A few additional remarks. (a) You can't stop this VI before you press Go; that needs a fix. (b) Create Scale is likely to return an error as is, because the name is illegal. I named it that way to show the source of the scale and offset values.

-

After downloading data from bank account

I have Windows 7 and I am considering upgrading to Windows 10. Currently, on Windows 7, I struggle when I download my account data from Wells Fargo bank with Quicken 2014. Often, the register shows a wrong posting date, as if the transaction had been posted days before the actual transaction date. Several requests have been made to Quicken and Wells Fargo customer support. Still no resolution. Would Windows 10 eliminate this annoyance?

Probably not. The problem seems to lie between Quicken and Wells Fargo. Changing the Windows version will not change that.

-

Import data from RCMP of PROD to TEST - can I exclude attachments?

Dear professionals,

I have an RCMP Oracle Content Server 7.8 application.

I need your expert advice on how to handle this situation. I need to update my TEST instance with data from PROD. To do this, I intend to import the content metadata and the database from the PROD server into TEST.

But I'm challenged by the fact that the PROD attachments are security sensitive and cannot be imported. Can I exclude attachments during the export/import process? Does the Archiver have an option to exclude attachments when I create an archive of the PROD data?

Thank you very much in advance.

As chance would have it...

Attachments stored using the ZipRenditionManagement component are stored only in the weblayout and NOT in the vault.

So if you want the attachments to be absent on the TEST server, when you create your Archiver export on PROD make sure you disable the "Copy Web Content" option (it is enabled by default) on the General tab of the export options.

This means that only the vault copy will move across in the archive batch file. The only fly in the ointment is that if you have PDF conversions or anything else funky going on with the weblayout copy, that processing will have to be redone in TEST, but this isn't normally a big headache.

Import this into TEST and you will not get any attachments.

Good luck and hope that helps

Tim

PS If this is what you need, please consider marking this as correct to help others with a similar problem in the future!

-

How to write data from the acquisition of data in NetCDF format?

I acquire a set of sensor data through the DAQ Assistant and want to write all of it in NetCDF format. I have the required plugins installed, but still cannot find out how to do this.

Or can LabVIEW only read NetCDF files and not write them? Please let me know if there is any other way. I have looked everywhere but could not find anything useful!

Thank you

Hey,

Sorry, the plugin indeed only allows reading NetCDF files, not writing to the NetCDF format.

Kind regards

-Natalia

-

How many channels can I write to a file with the Write Data module (DASYLab 12.00.00)?

I would like to write data from a large number of channels (up to 128) to the same file. With the Write Data module, I only get up to 16 channels! The only solution I have found is to save the data in 8 different files... Is there a solution for this problem? I use DASYLab V.12.00.00. Thank you

You can use the Multiplexer module; it lets you combine 16 channels into 1, which allows up to 256 channels to be saved in a single file.

In the Write Data module, you can then select Options and, under "Input Type", select mixed single values. You will then need to define how many channels each input will receive.

-

Functional global variable to read and write data from and to parallel loops

Hello!

Here is the situation: I have 3 parallel while loops, which I start at the same time. The first loop reads data from GPIB instruments. The second drives an analog output card with a PID (software-timed static output, updated roughly every 3 seconds) using DAQmx functions. The third saves the data to a TDMS file when certain conditions are met.

I created a functional global variable (FGV) with write and read options that holds the measured data (30 doubles in a cluster). So when I get a new reading in the GPIB loop, I put the new values in the FGV.

In the other parallel loops, I read the FGV when necessary. I know that I am creating a race condition this way, because while one of the loops reads or writes data in the FGV, no other loop can access it; they wait until the loop that won the race has finished its read or write.

In my case, losing some measured data is not a problem, and a few short stalls in some loops are also okay. (The measured data, including the temperature values, is used in the PID loop and the file-saving loop; the system also has long time constants, so it is not a problem if the PID loop sometimes reads older values from the FGV because it won the race.)

Is this a "barbaric" way to write such code? (Later, I want to give my code a good GUI, so I will probably have to use some sort of event handling...)

If you can recommend something more elegant, please give me some links where I can learn more.

I have started reading and learning to expand my limited LabVIEW knowledge, but it seems to me that I can only find really advanced examples and documents (http://expressionflow.com/2007/10/01/labview-queued-state-machine-architecture/ , http://forums.ni.com/t5/LabVIEW/Community-Nugget-2009-03-13-An-Event-based-messageing-framework/m-p/... ) or really simple ones, but nothing in the "middle range". This forum and other NI sources are really good, but I feel like I am swimming in a huge "info-ocean" without guidance...

I have completed the Core 1 and Core 2 courses; do you know of any free learning material that builds on these? (Something "intermediate", so to speak...)

Thank you very much!

I would use queues instead of a FGV in this particular case.

Short of adding a notifier that signals when data is ready, you could change how your FGV is read... and maybe hold an array of clusters so that several values can wait to be read, etc... Things get complicated...

A queue, however, will do nicely. You may already be familiar with producer/consumer. You may not even need the third loop if you implement a state machine that has (among other states): wait for data (that is where the queue is read), write to file, drive PID.

Your idle state would be "wait for data".

Does the PID depend on the data? If not, it should run on its own, and yes, you can have a loop for it. If it has to run at a different rate from the data-reading loop, you may need a different queue or some other means of passing data to that loop.

Another option would be to make the PID state the default state and check for new data at regular intervals, which reduces the design to 2 loops (producer/consumer). The new data would be carried along on the wires using a shift register.
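LabVIEW is graphical, so the following is only a text sketch of the queue idea, written in JavaScript under some loud assumptions: the names `createLatestValueStore` and `createQueue` are made up for illustration, the readings are sample numbers, and the code runs sequentially rather than in real parallel loops. It contrasts a single-slot store, which behaves like a basic FGV holding one value, with a FIFO queue that keeps every reading until the consumer takes it.

```javascript
// Single-slot store: a new write overwrites whatever was there before.
function createLatestValueStore() {
  var latest = null;
  return {
    write: function (v) { latest = v; },
    read: function () { return latest; }
  };
}

// FIFO queue: every enqueued reading stays until the consumer removes it.
function createQueue() {
  var items = [];
  return {
    enqueue: function (v) { items.push(v); },
    dequeue: function () { return items.length ? items.shift() : undefined; },
    size: function () { return items.length; }
  };
}

// Producer side (stands in for the GPIB read loop): three readings arrive
// before the consumer gets a chance to run.
var store = createLatestValueStore();
var queue = createQueue();
[20.1, 20.4, 20.9].forEach(function (reading) {
  store.write(reading);
  queue.enqueue(reading);
});

// Consumer side (stands in for the file-writing loop).
var seenFromStore = [store.read()];
var seenFromQueue = [];
while (queue.size() > 0) {
  seenFromQueue.push(queue.dequeue());
}

console.log(seenFromStore); // only the most recent reading survives
console.log(seenFromQueue); // all readings, in arrival order
```

With the single-slot store the consumer only ever sees the last value written, while the queue hands over every reading in order, which is the property the producer/consumer pattern relies on.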

There are many tricks. However, I would not recommend using a basic FGV as your solution. An Action Engine, would be okay if it includes a mechanism to flag what data has been read (ie index, etc) or once the data has been read, it is deleted from the AE.

There are many ways to implement a solution; you just have to pick the right one that will avoid losing data.

-

Filtering the data sent to Write To Measurement File

Hi everyone, I was wondering how to implement a "filter" that prevents data from being written by the "Write To Measurement File" function. Say the incoming data is a 0 (DBL): how do I prevent it from being written?

Use a case structure. Put your Write To Measurement File inside the case structure, probably in the True case.
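As a text analogue of that case structure, here is a minimal JavaScript sketch. It assumes the filter condition is simply "the sample is not 0"; `writeFiltered` and the `writeSample` callback are hypothetical stand-ins for the wiring around Write To Measurement File, not LabVIEW functions.

```javascript
// Hypothetical stand-in for the loop around Write To Measurement File.
function writeFiltered(samples, writeSample) {
  var written = 0;
  samples.forEach(function (sample) {
    // "True" case: only non-zero samples reach the writer.
    if (sample !== 0) {
      writeSample(sample);
      written += 1;
    }
    // "False" case: nothing is wired, so the zero is skipped.
  });
  return written;
}

// Sample data; the array `log` plays the role of the measurement file.
var log = [];
var count = writeFiltered([0, 1.5, 0, -2.25], function (s) { log.push(s); });
console.log(count, log);
```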

-

How can I write only the samples near the software trigger to the data file?

Hello everyone

I'm working from this example:

http://zone.NI.com/DevZone/CDA/EPD/p/ID/34#0requirements

I would like to save the data once the trigger is reached, together with some data around the impact.

Where should I put a "Write To Measurement File" block so that I can load and plot the shock test data?

Kind regards.

Celuti,

You're so close!

I am happy to see that you have understood what I recommended!

You must make sure that the data type you wire into the queue is the same as the one that comes out of the data acquisition.

Please see the amended version of the code you have posted.

Craig

-

Average computed in the VI differs from the Excel average of the .lvm file data

I am trying to build a VI to measure voltages on one channel of a pressure transducer over a period of 3 minutes. I would like the VI to write all the voltage samples to an .lvm file, with another .lvm file that holds the average voltage over the 3-minute period. I built a VI that does all of the above, or so I think... The problem I'm running into is that when I open the .lvm file of all voltage samples in MS Excel, take their average using the built-in Excel function (=AVERAGE(B23:B5022), for example), and compare it to the .lvm file that holds just the 3-minute average voltage from the VI, they do not match.

This makes me wonder whether I am using the averaging function in the VI correctly, or whether the VI is averaging different voltage data than what is written to the .lvm file.

Does anyone know why the two averages are different and how I can make them match?

I have attached a picture of my block diagram along with the VI file for clarity.

The Dynamic Data Type (DDT) you are using in LabVIEW is a special data type that can take many forms. It therefore requires the "From DDT" and "To DDT" functions to convert it to and from other data types. These conversion functions can be configured by double-clicking them and specifying the format you are converting from or to. You can find them on the Express > Signal Manipulation palette.

I've attached a screenshot of the code modified to use the DDT conversion, and the average comes out right.

Please mark this as the accepted solution and/or give Kudos if it works for you. We appreciate the feedback on our answers.

Thank you

Dan

-

I am using Windows 7 and have accounts in MSN Money 2005. I am trying to get the data from the Money 2005 file updated into a format that is compatible with the current Money, so that I can keep using Money.

See the information posted by SpiritX (MS MVP) in this thread: http://answers.microsoft.com/en-us/feedback/forum/user/microsoft-money-question/13ef1581-2dfd-45fc-85ba-92cbcade313a

-

Extract PDF form data using JavaScript and write in the CSV file

I received a PDF file with a form. The form is *not* formatted as a table. My requirement is to extract the form field values and write them into a CSV file that can be imported into Excel. I tried the "Merge data from spreadsheet files" menu item in Acrobat Pro, but the output includes both the labels and the values. I'm mostly just interested in the form field values.

I would like to use JavaScript to extract the data from the form and have JavaScript write the CSV file (since I know what the final spreadsheet should look like). Here is how far I got with extracting the form fields:

this.getField("Today_s_Date").value;

And following this post: http://StackOverflow.com/questions/17422514/how-to-write-a-text-file-in-Acrobat-JavaScript , I tried to write to CSV using:

var cMyC = "abc";

var doc = this.createDataObject({cName: "test.txt", cValue: cMyC});

but I get the following error:

SyntaxError: syntax error

1:Console:Exec

Ideally, I don't want to use a third-party tool online to make, because the data are sensitive. But please let me know if you have any suggestions. The ideal output is a CSV file that an end business user can open in Excel to see the format of spreadsheet of his choice.

Has anyone done this before? Open to hearing alternatives as well. Thanks in advance!

The code you have posted works fine for me in the JavaScript console, so I suspect the problem is something else. Where did you put the code, and did you enter any other code along with it?

In addition, if CSV is not a strong requirement, I would suggest that you use tab-delimited output instead. Fields normally cannot contain tab characters, so tab is a good delimiter to use. It will also be more reliable when you import into Excel. If you need to process field data that may contain quotes, you need to escape the string data correctly, and you can use a JavaScript library like this: https://github.com/uselesscode/ucsv
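To make the tab-delimited suggestion concrete, here is a minimal sketch in plain JavaScript. The helper names `escapeField` and `toDelimitedLine` are made up for this example, and the sample values stand in for what `this.getField(...).value` would return. The quoting rule used (wrap the value in double quotes and double any embedded quotes) is the common CSV convention, assumed here rather than taken from the Acrobat documentation.

```javascript
// Hypothetical helper: quote a value only when it contains the delimiter,
// a double quote, or a line break; embedded quotes are doubled.
function escapeField(value, delimiter) {
  var text = String(value);
  var needsQuotes =
    text.indexOf(delimiter) !== -1 ||
    text.indexOf('"') !== -1 ||
    text.indexOf("\n") !== -1 ||
    text.indexOf("\r") !== -1;
  return needsQuotes ? '"' + text.replace(/"/g, '""') + '"' : text;
}

// Hypothetical helper: join already-extracted field values into one line.
function toDelimitedLine(values, delimiter) {
  return values
    .map(function (v) { return escapeField(v, delimiter); })
    .join(delimiter);
}

// Sample values standing in for this.getField("...").value results.
var row = ["2014-07-01", 'He said "ok"', "plain text"];
var line = toDelimitedLine(row, "\t");
console.log(line);
```

Inside Acrobat, a string built this way could then be passed as `cValue` to `this.createDataObject`, as in the question; that step is left out here so the sketch also runs outside Acrobat.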

Maybe you are looking for

-

Hello. I need to switch my iMac 8.1 from US English to UK English. Please can you help me? Thank you.

-

Please help: insert a 1D array into a 2D array

Hello, I'm trying to insert a 1D array as a row in a 2D array, but it must be placed at a given index as the starting point. For example, row 1, column 2 = starting point. I have already written the program, but the result is not what I want. As shown in the p

-

32-bit Vista Home Premium: About 6 months ago, I ran a Check Disk on my C drive (using the Vista error checking tool) and checked the two boxes before starting (automatically fix file system errors, and scan for and attempt recovery of bad sectors). It ran throug

-

Unable to install Wi-Fi driver - Latitude 10

I reinstalled Windows 8 (not 8.1) on my Latitude 10, although of course most of the drivers were not installed because they are not on the default Windows installation disc, including the Wi-Fi driver. I tried to install it by downloading th

-

RAID 1 configuration on a 8700

I just got a new Dell 8700 with Windows 7 and a 2 TB Seagate drive. I'm trying to install and configure an identical Seagate drive for RAID 1. I read a similar post for the 8500 which was helpful, but I still have a problem. When I go into the app