Loading arbitrarily large amounts of binary data into a clip

It is easy to load external data in XML format into a clip. However, what I need is to load really large volumes of read-only binary data. In my case, a text representation is not an option. Is it possible to load an arbitrary array of bytes into memory and then read the individual bytes from it? I don't think that arrays like this

var data: Array = [1,2,3,...];

could be a solution to my problem either, because the VM stores so much additional information with each element of the array.

The only solution I have come up with is to pack the binary data into strings,

var data: String = "\u0000\u1234\uabcd";

two bytes per character. This avoids any storage overhead, and looking up an individual data element is trivial.
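As a rough illustration of the two-bytes-per-character packing described above, here is a minimal Python sketch (not ActionScript; the names `pack_bytes` and `byte_at` are hypothetical). It assumes big-endian byte pairs and pads odd-length input with a zero byte. Python strings accept any code point, but in a real Flash or text pipeline the zero character and code points in the surrogate range 0xD800 to 0xDFFF may not survive, so treat this as a sketch of the indexing arithmetic only.

```python
def pack_bytes(data: bytes) -> str:
    """Pack raw bytes into a string, two bytes per character (big-endian pairs)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    return "".join(chr((data[i] << 8) | data[i + 1]) for i in range(0, len(data), 2))


def byte_at(packed: str, index: int) -> int:
    """Return the byte at `index` without unpacking the whole string."""
    code = ord(packed[index // 2])
    # Even indices are the high byte of the character, odd indices the low byte
    return (code >> 8) if index % 2 == 0 else (code & 0xFF)
```

Lookup stays O(1): byte `i` lives in character `i // 2`, so no decoding pass over the whole string is needed.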

But is there a better solution?

I don't think there is any option other than loading it into a string and then decoding it internally in AS2. Note that if you have \u0000, as in the example above, you will find that it does not work:

var data: String = "\u0000\u1234\uabcd";

trace(data.length); // traces 0 (zero), as the first character is a string terminator

I think you need to encode the source data as base64 and use an equivalent decoder class inside Flash to decode it back to binary. I'm no expert on this stuff... others may know more, or it could be a starting point for your search.
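A minimal sketch of the base64 round trip suggested above, written in Python rather than ActionScript. The names `encode_for_text` and `decode_from_text` are hypothetical, and the standard `base64` module stands in for whatever decoder class you would use inside Flash. The encoded text contains no zero characters, at the cost of roughly 4/3 of the original size.

```python
import base64


def encode_for_text(data: bytes) -> str:
    # base64 output uses only A-Z, a-z, 0-9, '+', '/' and '=', so no NUL characters appear
    return base64.b64encode(data).decode("ascii")


def decode_from_text(text: str) -> bytes:
    # Reverse the encoding to recover the original bytes exactly
    return base64.b64decode(text)
```

For example, the five bytes `00 12 34 ab cd` encode to an 8-character ASCII string, and decoding that string returns the same five bytes.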

In the past, I used the classes from meychi.com for this sort of thing. I could not find them online now... but there is something else here that may be useful:

http://www.svendens.be/blog/archives/8

With AS3, if I understand correctly, this is not a problem because you can load binary data directly.

Tags: Adobe Animate

Similar Questions

-

Memory management when displaying a large amount of data

Hello

I have a requirement to display a large amount of data on the front panel, in a table and a graph, over a period of 100 hours for 3 channels. The data read as strings must be written to a binary file, then converted and displayed on the front panel for each of the 3 channels.

After conversion I get 36 samples for every hour. Up to 83 hours, the 2388 samples displayed in the table and the graph are fine and correspond exactly to the data.

After 90 hours, a lag of 45 minutes is observed compared with the theoretically calculated number of samples. What could be the problem?

I have a dual-core PXI-8108 controller with 1 GB of RAM.

As DFGray says, there is no problem with the RAM or the display; the problem was in the conversion (a timing issue). Converting a large amount of data at once takes longer than converting a smaller amount. So I modified the code so that each 1-second block of data points is converted at once, and the problem is solved.
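The fix above (converting one second's worth of data at a time rather than everything at once) can be sketched in Python as a generator that decodes a binary file chunk by chunk. This is not LabVIEW code; the function name, the 32-bit float sample format and the big-endian byte order are all assumptions.

```python
import struct
from typing import BinaryIO, Iterator, List


def convert_in_chunks(stream: BinaryIO, samples_per_chunk: int,
                      fmt: str = ">f") -> Iterator[List[float]]:
    """Yield decoded samples one chunk at a time instead of converting the whole file."""
    item = struct.Struct(fmt)                 # assumed: one big-endian float32 per sample
    chunk_bytes = item.size * samples_per_chunk
    while True:
        raw = stream.read(chunk_bytes)
        if not raw:
            break
        usable = len(raw) - len(raw) % item.size  # ignore a trailing partial sample
        yield [value[0] for value in item.iter_unpack(raw[:usable])]
```

Setting `samples_per_chunk` to the number of samples acquired per second mirrors the "1 second at a time" approach; the cost of each conversion then stays constant no matter how long the acquisition has been running.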

Thanks for your replies

-

Question on the NAS 200 and transferring a large amount of data to it

Hi all

I have a Linksys NAS 200 on my home LAN. It is connected via a WRT300N v1.1 wireless router. My computer is connected to the router via a cable (not wireless). I am running Windows XP Media Center SP3 on an HP dual-core computer.

My problem is that I'm trying to move a large amount of media from a failed drive on my computer to a drive on the NAS 200. There is about 1.3 TB of data, and when I try to copy it in Windows Explorer it takes forever and uses a lot of my computer's CPU time.

Other than copying small amounts of this data over time, say 3-5 GB each night, are there other options? Maybe a program that works like a web browser's download manager? I'm not too good with FTP solutions; maybe that would work, but I don't know how to set it up. Could this be related to how I set up my router? I use my router as my firewall, and my computer does not have a firewall. It has Spyware Doctor and Ad-Aware running for protection.

Any help would be appreciated.

Thank you

Steve

Robocopy has an option to limit network bandwidth; this will also limit CPU usage (both on your Windows machine and on the NAS).

For other options, try Googling "windows copy bandwidth limit" or "windows copy limit CPU" (without the quotes).
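Robocopy's own bandwidth option is not reproduced here. As a sketch of the underlying idea only, the Python function below caps the average throughput of a file copy by sleeping between chunks; `throttled_copy` and its parameters are hypothetical, and this is not how Robocopy implements it.

```python
import time


def throttled_copy(src_path: str, dst_path: str, max_bytes_per_sec: int,
                   chunk_size: int = 1 << 20) -> int:
    """Copy a file while keeping the average throughput under max_bytes_per_sec."""
    copied = 0
    start = time.monotonic()
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)
            copied += len(chunk)
            # Sleep until elapsed time catches up with the time this many bytes "should" take
            expected = copied / max_bytes_per_sec
            elapsed = time.monotonic() - start
            if expected > elapsed:
                time.sleep(expected - elapsed)
    return copied
```

Because the copy idles between chunks, both the network link and the CPU get breathing room, which is the same trade-off (slower copy, lighter load) that a bandwidth limit gives you.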

= Jac

-

Not sure if this should be in performance/maintenance or hardware/drivers.

Hello. I was wondering about USB 2.0, eSATA and a bit about USB 3.0. I have USB 2.0 and eSATA on my systems.

Someone I work with told me that data may be corrupted if you transfer large amounts of data via USB 2.0, and that it is best to break up your files when moving or copying them. My colleague said that anything over 30 or 40 GB may not be transferred correctly, due to external factors or for some other reason.

Do these issues apply to eSATA or USB 3.0? I guess not, since those interfaces are designed to transfer large amounts of data.

Is this true? Is this due to hardware limitations? What is the recommended transfer size? Are these Windows XP, Vista or 7 limits?

Any info or links are appreciated.

Thank you.

I have never heard of anything like this before, and I have done some fairly large data moves in the past. I would recommend using Robocopy, included in Windows Vista/Windows 7 (and available for Windows XP as a download add-on), instead of drag-and-drop or copy/move, since Robocopy includes a number of features and safeguards that those methods lack.
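If you want to check a large transfer yourself rather than rely on the copy tool, one approach is to hash the source and the copy and compare the digests. A minimal Python sketch (the function names are hypothetical; SHA-256 is an arbitrary choice). One caveat: reading the copy back immediately may be served from the operating system's file cache rather than the disk, so for a strict check you may need to re-read it after the cache has been flushed, for example after reconnecting the drive.

```python
import hashlib
import shutil


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in fixed-size chunks so large files never need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def copy_and_verify(src: str, dst: str) -> bool:
    """Copy src to dst, then compare the SHA-256 digests of both files."""
    shutil.copyfile(src, dst)
    return sha256_of(src) == sha256_of(dst)
```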

-

Unable to transfer amounts of data larger than 10 GB

Original title: Maximum data transfer size?

I recently installed an eSATA controller card in the faster PCIe x1 slot of my computer to get faster data transfers to and from my Fantom Drives GreenDrive 2 TB external hard drive. When I started copying files from that drive to a new internal hard drive I had recently installed, I could not transfer amounts of data larger than 10 GB. The pop-up message said it was preparing to copy the files, then nothing happened. When I copy or cut smaller amounts of data, everything works fine. Perplexed...

I think I figured out the problem. It seems that some of the files I was transferring were problematic. When I transferred them in small amounts, I was prompted for what I wanted to do about those files. Thanks for the reply!

-

Looking for ideas on how to enter large amounts of row data via APEX

Hi all

I am building a form that will be used to enter large amounts of row data. Only 1 or 2 columns per row, but potentially dozens or hundreds of rows.

I was initially looking at using a tabular form, but that feels like a heavy approach for more than a trivial number of rows.

So now I'm wondering what solutions others have used.

Theoretically, I could just provide a text area and have the user paste in a newline-delimited list, then use background code to parse it on submit.

Another method I've been considering is to have the user save and upload a CSV file that gets automatically imported by the form.
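Whichever input method you choose, the server-side step is the same: turn the submitted text into rows and validate them. In APEX that would be PL/SQL; purely as an illustration of the logic, here is a Python sketch of parsing both a pasted newline-delimited list and an uploaded CSV. The function names and the two-column default are assumptions based on the "1 or 2 columns per row" description.

```python
import csv
import io
from typing import List


def parse_pasted_lines(text: str) -> List[str]:
    """One value per line; blank lines and surrounding whitespace are dropped."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def parse_csv_upload(text: str, expected_columns: int = 2) -> List[List[str]]:
    """Parse CSV text and reject rows that do not have the expected column count."""
    rows = []
    for lineno, row in enumerate(csv.reader(io.StringIO(text)), start=1):
        if not row:
            continue  # skip empty lines
        if len(row) != expected_columns:
            raise ValueError(f"line {lineno}: expected {expected_columns} columns, got {len(row)}")
        rows.append([cell.strip() for cell in row])
    return rows
```

The CSV route handles quoted values containing commas for free, while the pasted-list route is simpler for a single column.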

Is there anything else? If not, can someone give me any indication of which of the above would be easier to implement?

Thank you very much

PT

Hi PT,

I would say that you need a Data Loading wizard to upload your data from a CSV file. See 17.13, "Creating Applications with Data Loading Capability".

It is available in APEX 4.0 and later releases.

Kind regards

Vincent

-

How can I return a large amount of data from a stored procedure?

How can I return a large amount of data from a stored procedure in an efficient way?

For example, without using a cursor to go through all the rows and then assign values to variables.

Thanks in advance!

Let the query define the object type for you. Declare a cursor in the package specification that gives you the result set you want, and declare a TYPE in the package specification as a table of that cursor's %ROWTYPE.

Then use this type as the function's return type. Here is a code example that shows how easy it is.

```sql
create or replace package pkg4 as
  -- Parameterized cursor so the function can filter by department
  cursor emp_cur (p_deptno number) is
    select empno, ename, job, mgr, deptno from emp where deptno = p_deptno;
  type pkg_emp_table_type is table of emp_cur%rowtype;
  function get_emp (p_deptno number) return pkg_emp_table_type pipelined;
end;
/

create or replace package body pkg4 as
  function get_emp (p_deptno number) return pkg_emp_table_type pipelined is
    v_emp_rec emp_cur%rowtype;
  begin
    open emp_cur(p_deptno);
    loop
      fetch emp_cur into v_emp_rec;
      exit when emp_cur%notfound;
      pipe row (v_emp_rec);  -- hand each row to the caller as soon as it is fetched
    end loop;
    close emp_cur;  -- the cursor is global to the package, so close it before returning
    return;
  end;
end;
/

select * from table(pkg4.get_emp(20));
```

```
     EMPNO ENAME      JOB              MGR     DEPTNO
---------- ---------- --------- ---------- ----------
      7369 DALLAS     CLERK2          7902         20
      7566 DALLAS     MANAGER         7839         20
      7788 DALLAS     ANALYST         7566         20
      7876 DALLAS     CLERK           7788         20
      7902 DALLAS     ANALYST         7566         20
```

If you are returning rows of an actual table (all of the table's columns), you don't need to create a cursor that duplicates the query; you can just declare the type as a table of the table's %ROWTYPE, like this:

```sql
create or replace package pkg3 as
  type emp_table_type is table of emp%rowtype;
  function get_emp (p_deptno number) return emp_table_type pipelined;
end;
/

create or replace package body pkg3 as
  function get_emp (p_deptno number) return emp_table_type pipelined is
  begin
    -- The implicit cursor loop opens and closes the cursor automatically
    for v_rec in (select * from emp where deptno = p_deptno) loop
      pipe row (v_rec);
    end loop;
    return;
  end;
end;
/
```

-

Transporting large amounts of data from a schema in one database to another

Hello

We have a large amount of data to move from a schema in a 10.2.0.4 database to another database running 11.2.0.3.

I am currently using Data Pump, but it is quite slow, and we have to do it in chunks.

Also, the Data Pump files are big enough that we have to compress them to move them across the network.

Is there a quicker/better way?

I have heard of transportable tablespaces but have never used them and do not know about their speed, i.e. whether they are faster than Data Pump.

tablespace names in the two databases.

Also, the source database is on the Solaris operating system on a Sun box, and the target database is on AIX on an IBM Power series box.

Any ideas would be great.

Thank you

Published by: user5716448 on 08-Sep-2012 03:30

Speed? You just copy the data files themselves at the OS level. Of course, you still use EXPDP to export the metadata for the tablespace, but that takes just seconds. See and try the example from Oracle-Base:

http://www.Oracle-base.com/articles/Misc/transportable-tablespaces.php

You can also run the first two steps listed there on your actual DB to see whether it is eligible for transport and what violations there might be.

```sql
SQL> EXEC DBMS_TTS.TRANSPORT_SET_CHECK(ts_list => 'TEST_DATA', incl_constraints => TRUE);

PL/SQL procedure successfully completed.

SQL> -- The TRANSPORT_SET_VIOLATIONS view is used to check for violations.
SQL> SELECT * FROM transport_set_violations;

no rows selected

SQL>
```

-

Hi all

I made an applet that receives data, signs it and returns the signed data. When the amount of data is too big, I break it into blocks of 255 bytes and use the Signature.update method.

OK, it works fine, but performance is poor due to the large number of blocks. Is it possible to increase the size of the blocks?

Thank you.

Hello

You cannot change the size of the block, but you can change what you send.

You can get better performance by sending data in multiples of your hash function's block size, so that the card does not have to do internal buffering.

You could also do as much of the work as possible in your host application and then send only the data that needs to be processed with the private key. Generating the hash of the message does not require the private key, so it can be done in your host application. You then send the resulting hash to the card, where it is signed with the private key. This will be the fastest method.
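A minimal Python sketch of the host side of both suggestions: splitting the message into pieces whose size is a multiple of the hash block size, and computing the digest on the host. It assumes SHA-256 (64-byte blocks, the same block size as SHA-1) and the 255-byte limit from the question, which gives 192-byte pieces. Whether your card can then sign a precomputed digest depends on the Java Card version and algorithms it supports, so check that before relying on the second approach. The names below are hypothetical.

```python
import hashlib
from typing import Iterator

SHA256_BLOCK = 64     # SHA-256 (and SHA-1) process input in 64-byte blocks
MAX_APDU_DATA = 255   # the 255-byte block size mentioned in the question


def aligned_chunks(message: bytes, block: int = SHA256_BLOCK,
                   limit: int = MAX_APDU_DATA) -> Iterator[bytes]:
    """Split message into pieces of the largest multiple of `block` that fits in `limit`."""
    size = (limit // block) * block   # 192 bytes for the defaults
    for i in range(0, len(message), size):
        yield message[i:i + size]


def host_digest(message: bytes) -> bytes:
    """Hash on the host; only this 32-byte digest would then be sent to the card."""
    return hashlib.sha256(message).digest()
```

Feeding the aligned chunks to an incremental hash produces exactly the same digest as hashing the whole message at once, so the chunk size only affects performance, not the result.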

Cheers,

Shane

-

Advice needed on how to store large amounts of data

Hi guys,

I'm not sure what the best way is to make large amounts of data available to my Android application on the local device.

For example, records of food ingredients, in the hundreds?

I have read this tutorial and managed to create a .db file using it.

http://help.Adobe.com/en_US/air/1.5/devappsflex/WS5b3ccc516d4fbf351e63e3d118666ade46-7d49.html

However, to populate the database, do I use Flash? That kind of defeats the purpose. There's no point in moving a massive array of data from Flash into an SQL database when I could access the AS3 array data directly, is there?

Then maybe I could create the .db with an external program? But then how do I include this .db in the APK file and deploy it to the Android user's device?

Or maybe I create an AS3 class that initializes an XML object and use it as a way to store data?

Any advice would be appreciated

You can populate your SQLite database any way you want, including using external programs, (temporarily) embedding a text file with SQL, executing SQL statements from AS3 code, etc.
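Since the database can be built by an external program, here is a Python sketch that prebuilds a SQLite file with the standard `sqlite3` module; the resulting .db file could then be bundled with the app. The table name `ingredients` and its columns are hypothetical placeholders for your own schema.

```python
import sqlite3
from typing import Iterable, Tuple


def build_ingredients_db(path: str, rows: Iterable[Tuple[str, float]]) -> int:
    """Create (or replace) a hypothetical 'ingredients' table, bulk-insert rows, return the count."""
    conn = sqlite3.connect(path)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("DROP TABLE IF EXISTS ingredients")
            conn.execute(
                "CREATE TABLE ingredients ("
                "id INTEGER PRIMARY KEY, name TEXT NOT NULL, kcal_per_100g REAL)"
            )
            conn.executemany(
                "INSERT INTO ingredients (name, kcal_per_100g) VALUES (?, ?)", rows
            )
        return conn.execute("SELECT COUNT(*) FROM ingredients").fetchone()[0]
    finally:
        conn.close()
```

Running the whole insert inside one transaction keeps bulk loading fast, which matters once the record count grows into the hundreds or thousands.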

Once your db is populated, deploy it with your project:

Cheers,

Jon

-

I am running a VI that saves a 1D array to a binary file on each loop iteration. I let the loop run thousands of times, but after I exported the binary data to .xls format, I noticed that the last few hundred loops were missing (I know what the data should have looked like). Basically, I was running a waveform, and when I let the waveform cycle 4 times, only 3 cycles showed up in Excel. Is there a reason for this?

Fixed! I used the example for reading a binary file as the skeleton for my VI, and in that skeleton the data size was set to 8 bytes. Apparently mine is 4 bytes, so with 8 it thought there was only half as much data as there actually was. I changed it and all the data is there! Thank you!
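A small Python sketch of the mismatch described above: the same bytes decoded with the wrong element size yield half as many samples. It assumes 4-byte single-precision samples written big-endian (which I believe is LabVIEW's default byte order for binary files); `read_samples` is a hypothetical helper, not a LabVIEW function.

```python
import struct
from typing import List


def read_samples(raw: bytes, element_size: int, byte_order: str = ">") -> List[float]:
    """Decode floats, assuming each sample is `element_size` bytes (4 = single, 8 = double)."""
    code = {4: "f", 8: "d"}[element_size]
    count = len(raw) // element_size
    return list(struct.unpack(f"{byte_order}{count}{code}", raw[:count * element_size]))


# 1000 single-precision samples, as the VI in the question apparently writes them
raw = struct.pack(">1000f", *(float(i) for i in range(1000)))
```

`read_samples(raw, 4)` returns all 1000 values, while `read_samples(raw, 8)` returns only 500, and those are meaningless because each pair of 4-byte floats is reinterpreted as one 8-byte double.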

-

Converting a large number of points (76 million lat/lon) to spatial objects...

Hello, I need help.

Platform: Oracle 11g 64-bit on Windows Server 2008 Enterprise 64-bit, with 64 GB of RAM and 2 processors for a total of 24 cores.

Does anyone know a quick way to convert a large number of points to spatial objects? I need to convert 76 million lat/lon pairs to ESRI ST_Geometry or Oracle SDO_GEOMETRY.

Currently I have set up code using parallel pipelined functions and several jobs that run at the same time. It still takes more than 2.5 hours to process all the points.

Pointers would be GREATLY appreciated!

Thank you

John

Hello

Where is the lat/long data at the moment? In an external text file, or in an existing database table as number attributes?

If they are in an external text file, then I'd probably use an external table to load them in as quickly as possible.

If they are in an existing database table, then you can just update the SDO_GEOMETRY column using:

```sql
update <table>
set <geometry_column> = sdo_geometry(2001, <srid>,
      sdo_point_type(<longitude_column>, <latitude_column>, null), null, null)
where <longitude_column> is not null
  and <latitude_column> is not null;
```

That should run very quickly for you. If you want to avoid the overhead of an update, you can use "create table ... as select ..." instead. This example, which creates 1,000,000 points, runs in 9 seconds for me:

```sql
create table sample_points (geometry) nologging as
  select sdo_geometry(2001, null,
           sdo_point_type(trunc(100000 * dbms_random.value()),
                          trunc(100000 * dbms_random.value()),
                          null),
           null, null)
  from dual
  connect by level <= 1000000;
```

> I have set up code using parallel pipelined functions and several jobs that run simultaneously

You shouldn't need PL/SQL for this task. If you find that you do, then provide a code example and we'll take a look.

Kind regards

John O'Toole

Binary data not fully read from the DB

I have a strange problem. I read binary data (PNG images) from a database through ColdFusion. Everything worked, but now it has stopped working. The exact same script works on another server, and the images appear correctly there, but on this server it no longer works (it changed from one hour to the next). The database is the same, and other data in the database is read correctly. But the binary data cannot be read, or not completely. The images remain blank because only a header is created.

I managed to convert the binary data that is read into a string and display it on both servers. Result: on the working server it fills more than a page and a half; on the non-working server it's more or less a single line of information.

Any ideas what the problem could be? Some kind of timeout due to the length of the data?

I don't know how or why, but after 5 days of research, and while writing this post, I looked in the ColdFusion Administrator and saw that 'Enable binary large object retrieval (BLOB)' had been disabled... it wasn't me...

So the problem is solved.

-

Binary data from the GPS VI example: RF recording/playback with NI USRP

Hello

In the demo video (http://www.ni.com/white-paper/13881/en), a u-blox receiver was used to record the GPS signal while driving. How is it possible to record with u-center in a binary data format that is usable within LabVIEW for playing back the GPS signal? u-blox uses the *.ubx data format; is there a converter?

Hello YYYs,

The file was not generated by u-blox but by the recording and playback VI. An active GPS antenna, with some Mini-Circuits amplifiers, was connected to the USRP, and the LabVIEW program created the file (with the USRP used as a receiver).

Later, the USRP plays back the file (generation), and the u-blox GPS receiver is fooled into thinking that its location is somewhere else.

-

Converting binary data into scaled data

Hello

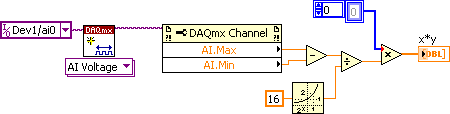

If I get binary data from DAQmx Read.vi (Analog 2D I16 NChan NSamp), how can I convert this data to scaled values?

Best,

Jay

See if this makes sense. There is probably a property for the number of bits the A/D converter has, but I can't think of it right now and can't spend too much time searching.
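A hedged Python sketch of two ways to scale raw I16 counts. I believe DAQmx can report per-channel device scaling coefficients, and applying those as a polynomial is the more accurate route; any real coefficient values must come from your device, not from this sketch. The linear version is an idealized fallback that maps the signed 16-bit range onto the input range and ignores calibration. Both function names are hypothetical.

```python
from typing import List, Sequence


def scale_polynomial(raw: Sequence[int], coeffs: Sequence[float]) -> List[float]:
    """Apply scaled = c0 + c1*x + c2*x**2 + ... to each raw count."""
    out = []
    for x in raw:
        y = 0.0
        for c in reversed(coeffs):  # Horner's method, highest-order coefficient first
            y = y * x + c
        out.append(y)
    return out


def scale_linear(raw: Sequence[int], v_min: float, v_max: float, bits: int = 16) -> List[float]:
    """Idealized linear mapping of signed counts onto [v_min, v_max); ignores calibration."""
    lsb = (v_max - v_min) / (2 ** bits)   # volts per count
    lowest = -(2 ** (bits - 1))           # most negative signed count, maps to v_min
    return [v_min + (x - lowest) * lsb for x in raw]
```

With a +/-10 V range, `scale_linear([-32768, 0], -10.0, 10.0)` gives `[-10.0, 0.0]`; the polynomial form reduces to the same kind of mapping when only `c0` and `c1` are non-zero.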

Maybe you are looking for

-

Permission issues with Preview.app

Hello, since I updated to macOS 10.12, I can't edit any photo on my desktop with Preview.app. When I take a screenshot or drag a picture from Mail.app to my desktop and start to edit it, I get a notice in Preview.app that the original document is not e

-

every word is marked as incorrect with no correction for any word.

-

Satellite L755-1NX repair and warranty

Two months ago, I bought a new Toshiba Satellite L755-1NX. After this short period of normal use, the connection between the charger and the computer was damaged by overheating. The laptop and the AC/DC charger seem to work well outs

-

Anti-virus AVG lights.

-

Hey guys, I was wondering if the vSphere installation must be on a formatted PC, or can I just install it on my PC with my existing operating system?