Overwrite the unread samples

I have to program a DAQ application with the following requirements:

- Sampling rate = 10 kS/s

- Buffer size = 200,000 samples

- Unread samples must be overwritten

- It must be possible at any time to read all available samples, no matter how many samples are currently available...

- ... If 1 second of data is available, then read the last 10,000 samples

- ... If I do not read for a minute, I want to be able to read the most recent 200,000 samples

- ... After a read, the buffer must be empty, so that the next read returns only new data that has not been read yet.
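The requirements above describe a circular buffer that drops the oldest unread samples and empties on every read. A minimal Python sketch of that behaviour, assuming nothing about DAQmx itself (the class `OverwritingBuffer` is hypothetical and only models the semantics; it does not talk to hardware):

```python
from collections import deque
from typing import Iterable, List


class OverwritingBuffer:
    """Hypothetical model of the requested buffer semantics (not a DAQmx API).

    - holds at most `capacity` samples
    - new samples overwrite the oldest unread ones once the buffer is full
    - read_all() returns every unread sample and leaves the buffer empty
    """

    def __init__(self, capacity: int) -> None:
        # A deque with maxlen silently discards the oldest item on overflow.
        self._data: deque = deque(maxlen=capacity)

    def write(self, samples: Iterable[float]) -> None:
        self._data.extend(samples)

    def available(self) -> int:
        return len(self._data)

    def read_all(self) -> List[float]:
        out = list(self._data)
        self._data.clear()
        return out


if __name__ == "__main__":
    rate, capacity = 10_000, 200_000   # values from the requirements above
    buf = OverwritingBuffer(capacity)
    buf.write(range(rate))             # 1 s of data
    print(len(buf.read_all()))         # 10000
    buf.write(range(60 * rate))        # one minute without reading
    newest = buf.read_all()
    print(len(newest), newest[0])      # 200000 400000
    print(buf.available())             # 0
```

After a one-minute pause only the newest 200,000 samples (indices 400,000 to 599,999) survive, and the buffer is empty afterwards, which is the behaviour the list asks for.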

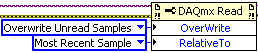

As you can imagine, I've spent days playing with the DAQmx Read property node. But if I set it up like this:

I set an absolute offset of 200,000. Then I cannot read until my buffer is full; if I try to read before that, I get error -200277. That is perfectly plausible, but let's say I wait 20 seconds for the buffer to fill...

...once the buffer is full and I try to read all available samples (-1 wired to the number-of-samples input of the DAQmx Read VI), the buffer is not empty after the read. With this setting, there are always 200,000 samples available.

I have not found a matching example, nor any solution in the forum.

Regards,

Madottati

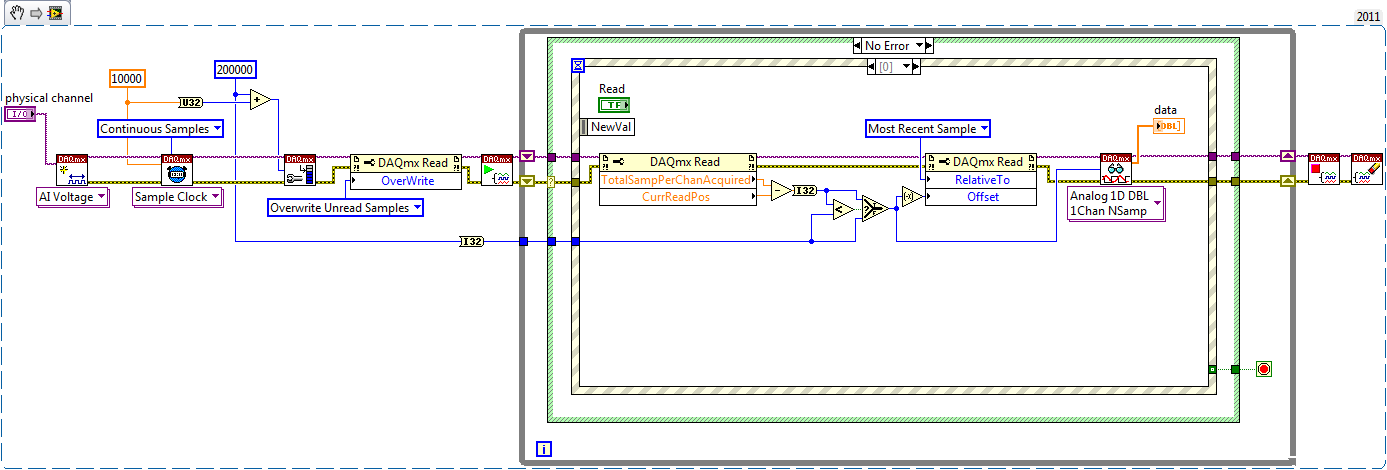

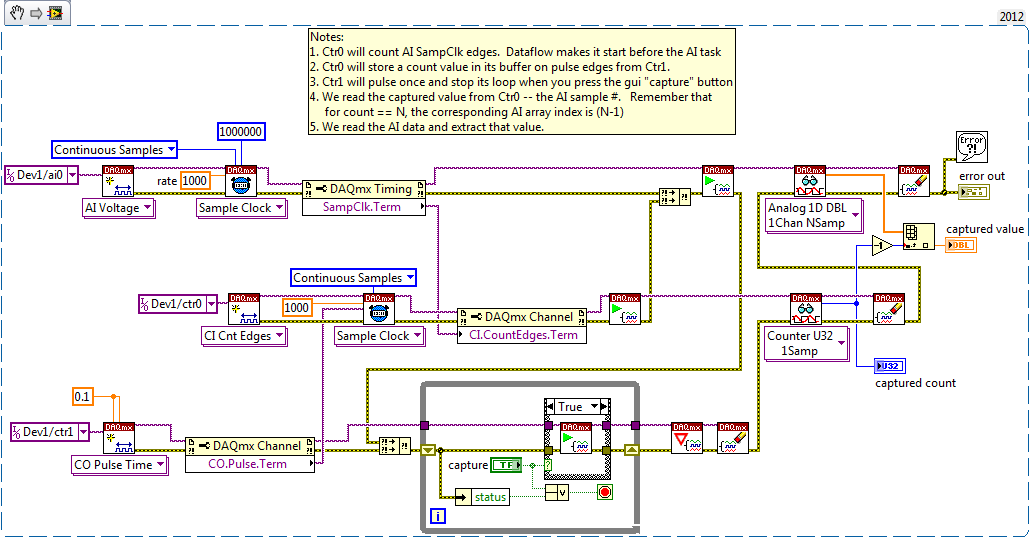

You can change those Read properties while the task is running. Something like this should work:

You must add a bit of extra time to your buffer (I went with 1 second). If you try to read a sample that has already been overwritten, you'll get an error, and if the amount of data you want is exactly the size of the buffer, that is almost certain to happen.
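The advice above (read only the newest samples when more than the wanted amount is unread, and size the buffer with extra time) can be checked with plain sample-index arithmetic. This is a hedged sketch: `plan_read` and `still_in_buffer` are hypothetical helpers that model the idea of switching the Read RelativeTo/Offset properties between the current read position and the most recent sample; they are not DAQmx calls.

```python
from typing import Tuple

RATE = 10_000                            # S/s, from the question
KEEP = 200_000                           # newest samples wanted after a long pause (20 s)
MARGIN_S = 1.0                           # extra buffer time, as suggested in the reply
BUFFER = KEEP + int(RATE * MARGIN_S)     # 210,000-sample buffer


def plan_read(total_acquired: int, next_unread: int, keep: int = KEEP) -> Tuple[int, int]:
    """Return (index of first sample to read, number of samples) for the next read."""
    unread = total_acquired - next_unread
    if unread <= keep:
        # Everything new still fits: read from the current read position.
        return next_unread, unread
    # Too much unread data: read only the newest `keep` samples
    # (conceptually RelativeTo = most recent sample, Offset = -keep).
    return total_acquired - keep, keep


def still_in_buffer(first: int, total_acquired: int, buffer_size: int = BUFFER) -> bool:
    """True if sample `first` has not yet been overwritten in a circular buffer."""
    return total_acquired - first <= buffer_size


if __name__ == "__main__":
    print(KEEP / RATE)                                     # 20.0 seconds of data
    first, n = plan_read(total_acquired=600_000, next_unread=0)
    print(first, n)                                        # 400000 200000
    late = 600_000 + 5_000                                 # 0.5 s passes before the read runs
    print(still_in_buffer(first, late))                    # True with the 1 s margin
    print(still_in_buffer(first, late, buffer_size=KEEP))  # False without it
```

Without the margin, any delay between deciding what to read and the read actually running pushes the first requested sample out of the buffer, which is the error the reply warns about.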

Madottati wrote:

I wrote 200.000 (two hundred thousand) samples -> holds 20 seconds of data.

You probably already know this, but in many countries a period is used as the decimal point. Putting periods or commas in numbers for anything other than a decimal separator may cause confusion on forums like this one.

Best regards


Similar Questions

-

Saving high-sampling-rate data

Hello!

I use an NI PXI-4462 (4 analog inputs, 204.8 kS/s sampling rate).

I want to collect data from a "load" sensor (channel 1) and "acceleration" sensors (channels 2, 3 and 4).

I also want to save the data to a text file.

So I made a front panel and block diagram; you can see them in the attached file.

The program works well at a low sampling rate.

However, when I increase the sample rate to 204,800 S/s, the program gives me error -200279.

I don't know what this error means, or why it happens at the high sampling rate.

I want to know how I can fix it.

Is there any problem in my diagram?

Is it possible to save high-sampling-rate data?

I really want to sample at more than 200,000 S/s.

I would appreciate it if you could help me.

Thank you.

NH,

You have provided excellent documentation. What is happening is that the time it takes to run the rest of the loop lets more samples be acquired than fit in the buffer you provided (I don't know exactly how large it is, but this will happen at high sampling rates), so samples are overwritten before you read them. You might be best served here by a producer-consumer architecture: keep your loop to acquire the data, but add a second loop that processes the data in parallel with the acquisition. The data would be passed from the producer to the consumer via a queue. One caveat: if the queue is unbounded and the consumer starts to fall behind, at the sampling rate you specify you will use more and more memory. In that case, you will need to find a way to optimize your calculations or allow lossy acquisition.
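The producer-consumer pattern described above is a LabVIEW design in this thread, but the idea carries over directly. A minimal Python sketch using `queue.Queue` and a thread, where the "acquisition" is simulated (block contents are placeholders, not DAQmx reads, and the function names are illustrative):

```python
import queue
import threading
from typing import List, Tuple


def producer(q: queue.Queue, n_blocks: int, block_size: int, lossy: bool) -> int:
    """Stand-in for the acquisition loop. Returns how many blocks were dropped."""
    dropped = 0
    for i in range(n_blocks):
        block = [i] * block_size           # placeholder for one read of block_size samples
        if lossy:
            try:
                q.put_nowait(block)        # never stall acquisition; drop if the consumer is behind
            except queue.Full:
                dropped += 1
        else:
            q.put(block)                   # waits for space if the queue is bounded
    q.put(None)                            # sentinel: acquisition finished
    return dropped


def consumer(q: queue.Queue, results: List[int]) -> None:
    """Stand-in for the processing/saving loop, running in parallel with acquisition."""
    while True:
        block = q.get()
        if block is None:
            break
        results.append(sum(block))         # placeholder for analysis or writing to a file


def run(n_blocks: int, block_size: int, maxsize: int = 0, lossy: bool = False) -> Tuple[List[int], int]:
    q: queue.Queue = queue.Queue(maxsize=maxsize)   # maxsize=0 means unbounded
    results: List[int] = []
    worker = threading.Thread(target=consumer, args=(q, results))
    worker.start()
    dropped = producer(q, n_blocks, block_size, lossy)
    worker.join()
    return results, dropped
```

An unbounded queue (`maxsize=0`) reproduces the growing-memory risk mentioned above; a bounded queue with `put_nowait` is one way to allow lossy acquisition instead.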

I hope this helps. Matt

-

Display flickers for 2-3 minutes after the Mac mini wakes up from sleep

If you have another Thunderbolt cable, plug it into the unused port on the Thunderbolt Display and into the Mini, and see if the same thing happens.

What you are seeing may be an early sign of a failure in the display's built-in cable.

At least, it could rule out a costly repair. Although the cable itself is not expensive, opening up the TB display to replace it could be.

Although mine was not connected to a Mini, I began to see similar behavior with my iMac, until the cable eventually failed completely.

-

How can I encrypt a drive when diskutil cs convert reports 'a problem' and leaves the disk unreadable?

I am trying to encrypt my Time Machine backup volume, but diskutil refuses and leaves the disk unreadable (though fixable using Linux). How can I proceed?

It is a 3 TB Western Digital Elements 107 USB 3 disk.

It has a single HFS+ partition and works very well.

diskutil verifyDisk and diskutil verifyVolume don't report any problems. I also used Alsoft DiskWarrior to repair inconsistencies, without problems.

If I try to convert the volume, diskutil reports 'a problem', like this:

rb@Silverbird$ /usr/sbin/diskutil cs convert /Volumes/RB3TB1/ Started CoreStorage operation on disk4s2 RB3TB1 Resizing disk to fit Core Storage headers Creating Core Storage Logical Volume Group Attempting to unmount disk4s2 Switching disk4s2 to Core Storage Waiting for Logical Volume to appear Mounting Logical Volume A problem occurred; undoing all changes Switching partition from Core Storage type to original type Undoing creation of Logical Volume Group Reclaiming space formerly used by Core Storage metadata Error: -69842: Couldn't mount disk

This leaves the disk unreadable. diskutil cs list shows the drive as a Core Storage volume that is "Online" and "Revertible", but it cannot be mounted or acted on, and neither can the parent physical volume. Disk Utility crashes at startup when the drive is connected. diskutil repairDisk on the parent volume reports:

Repairing the partition map might erase disk4s1, proceed? (y/N) y Started partition map repair on disk4 Checking prerequisites Problems were encountered during repair of the partition map Error: -69808: Some information was unavailable during an internal lookup

In fact, all access to the disk seems to be broken at this point.

rb@Silverbird$ sudo /usr/sbin/gpt show /dev/disk4 gpt show: unable to open device '/dev/disk4': Input/output error

You cannot read the device using hexdump. The kernel seems mightily confused.

I was able to recover the disk by booting Linux and using the 'gdisk' utility to change the partition type from AF02 (Core Storage) to AF00 (HFS+). After that, diskutil verifies both the disk and the volume as OK.

I think Apple has a bug where diskutil fails to restore the original partition type.

But this does not get me any closer to encrypting my backup volume.

Does anyone have any idea what the 'problem' might be and how I should proceed?

rb@Silverbird$ /usr/sbin/diskutil info /Volumes/RB3TB1 Device Identifier: disk5s2 Device Node: /dev/disk5s2 Whole: No Part of Whole: disk5 Device / Media Name: RB3TB1 Volume Name: RB3TB1 Mounted: Yes Mount Point: /Volumes/RB3TB1 File System Personality: Journaled HFS+ Type (Bundle): hfs Name (User Visible): Mac OS Extended (Journaled) Journal: Journal size 229376 KB at offset 0x8f07408000 Owners: Enabled Partition Type: Apple_HFS OS Can Be Installed: Yes Media Type: Generic Protocol: USB SMART Status: Not Supported Volume UUID: F096E831-F27D-3433-9BBE-6B65F4F69FA5 Disk / Partition UUID: FE09034E-6AA0-4490-82A1-1F7E894ACD91 Total Size: 3.0 TB (3000110108672 Bytes) (exactly 5859590056 512-Byte-Units) Volume Free Space: 78.1 GB (78142939136 Bytes) (exactly 152622928 512-Byte-Units) Device Block Size: 4096 Bytes Allocation Block Size: 4096 Bytes Read-Only Media: No Read-Only Volume: No Device Location: External Removable Media: No

According to Disk Utility, the disk contains about 732,000,000 files (in reality, directory entries).

This is OS X 10.11.2, updated from a fresh install of OS X 10.11 last month.

Hello RPTB1,

Thank you for using Apple Support Communities.

I see that you want to encrypt your Time Machine backup disk and are trying to do it through Terminal. Have you tried doing it through the Time Machine preferences instead? Take a look at this article and see whether it works for you.

OS X El Capitan: choose a backup drive, then set the encryption options

Best regards.

-

Edge count measurement error when using an external sample clock

Hello

I'm trying to count the rising edges of a 5 kHz square wave from a function generator on an NI PCIe-6363. I configured a counter edge-count channel with its input on the device's PFI8. I use an external sample clock provided by a counter output channel of an NI USB-6211. If I acquire for 10 s, I would ideally expect a total of 50,000 edges counted on the counter input channel. However, my reading is anywhere between 49,900 and 50,000.
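The numbers in the question can be put in perspective with simple arithmetic: over a fixed window the ideal count is the signal frequency times the duration, and the alignment of the window alone should only move it by about one edge. A short Python sketch (function names are illustrative):

```python
def expected_edges(signal_hz: float, duration_s: float) -> int:
    """Ideal number of rising edges of a square wave counted over a fixed window."""
    return round(signal_hz * duration_s)


def shortfall_pct(measured: int, expected: int) -> float:
    """How far a measured count falls below the expected count, in percent."""
    return 100.0 * (expected - measured) / expected


if __name__ == "__main__":
    n = expected_edges(5_000, 10)      # 5 kHz for 10 s
    print(n)                           # 50000
    print(shortfall_pct(49_900, n))    # 0.2
```

A shortfall of up to 100 edges (0.2 %) is far larger than the one-edge window quantization, which points to edges being missed rather than to timing resolution; later in this thread it turned out to be a signal-amplitude issue.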

When I use the internal timebase clock to measure the edges, the measurement is accurate and almost always exactly 50,000. I understand that when using an external sample clock, the measurement precision depends on the noise level of the clock signal. However, I checked that the clock signal is stable and not very noisy. Is there any reason for this measurement error, and what tolerance should I expect with an external sample clock compared with the internal timebase clock?

Also, what is the best clock frequency (relative to the input signal frequency) when using an external clock?

Thank you

Noblet

Hi all

Thanks for all your suggestions. I was using an input signal from a function generator with an 8 V amplitude. It turns out that reducing the amplitude to 5 V solves the problem; I was able to get accurate counts with the 6211 external clock.

Thank you

Noblet

-

Dividing the internal sample clock (VCXO)

Hi all

I want to divide down the internal sample clock source of my PXI-5122 high-speed digitizer.

The PXI-5122 has a 200 MHz internal sample clock source, and I want to sample my data at 10 MHz.

So I want to divide down the internal sample clock by a factor of 20.

I want to do it programmatically.

I have found no VI or property node for that (I did find a divider property, but it appears to apply only to the onboard reference clock).

Help me as soon as possible...

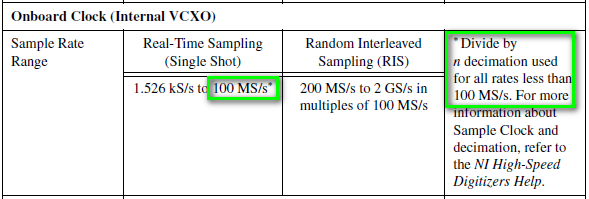

Hi Jirav,

I just wanted to clarify a few things. The 5122 has a maximum real-time sampling rate of 100 MS/s (not 200 MHz as you originally suggested). To obtain a rate of 10 MHz, you would divide down by a factor of 10. From page 13 of the NI 5122 specifications document:

Just to add to what Henrik suggested, the following help topic describes the specific VI he mentioned for configuring horizontal properties such as the sample rate:

http://zone.NI.com/reference/en-XX/help/370592P-01/scopeviref/niscope_configure_horizontal_timing/

Most of the shipping NI-SCOPE examples use the VI above, and it has a "min sample rate" input where you can simply specify "10 M" or '10000000' to make the device sample at a lower rate.

Note: because the digitizer allows only sample rates that are an integer divide-down of the maximum sample rate, the rate will always be coerced up to the next legal sample rate. For example, if you specify '9.8435 M', the rate is automatically coerced up to 10 MHz. To see the actual value the digitizer is using, you can query the "Actual Sample Rate" property node at any point in your code after the configuration has been committed to the digitizer. The help describes this property on the following page:

http://zone.NI.com/reference/en-XX/help/370592N-01/scopepropref/pniscope_actualsamplerate/
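The coercion rule in the note above (only integer divide-downs of the maximum rate, rounded up to the next legal rate) can be expressed in a few lines. A hedged Python sketch that assumes every integer divisor is legal, as the reply states; `divisor_for` and `coerced_rate` are illustrative names, and the driver's Actual Sample Rate property remains the authority:

```python
import math

MAX_RATE = 100e6   # NI 5122 maximum real-time sample rate, per the reply above


def divisor_for(requested: float, max_rate: float = MAX_RATE) -> int:
    """Largest integer n such that max_rate / n is still >= requested."""
    if requested <= 0:
        raise ValueError("requested rate must be positive")
    # Small tolerance so exact divisors such as 10 MS/s are not lost to rounding.
    return max(1, math.floor(max_rate / requested + 1e-9))


def coerced_rate(requested: float, max_rate: float = MAX_RATE) -> float:
    """Legal rate the digitizer would use, assuming rates are max_rate / n, coerced up."""
    return max_rate / divisor_for(requested, max_rate)


if __name__ == "__main__":
    for req in (10e6, 9.8435e6, 30e6):
        print(req, divisor_for(req), coerced_rate(req))
```

Both 10 MS/s and 9.8435 MS/s map to a divisor of 10, matching the example in the note.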

Kind regards

-

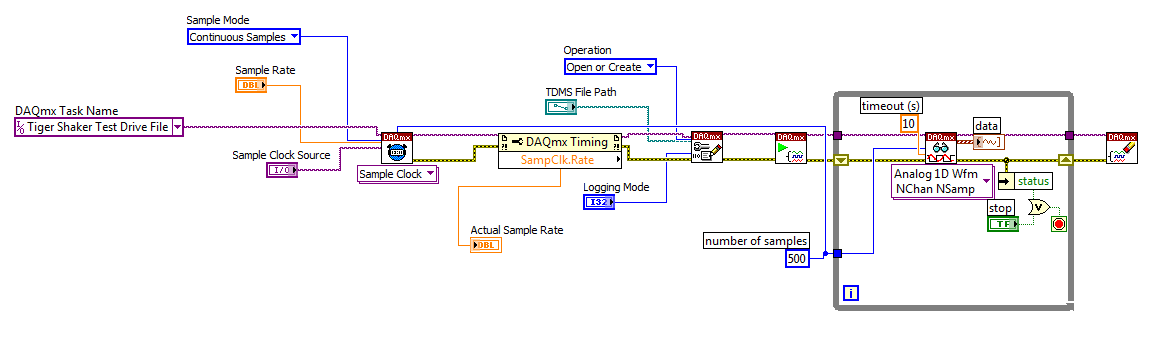

How to change the DAQmx sampling frequency

Hello

I'm trying to record streaming channels (21 acceleration and 1 voltage) using a DAQmx task, then convert the data to a TDMS file. The program runs and writes to the TDMS file very well. The issue I'm having is that I can't change the sampling rate. I want to record at 500 samples/s, but I cannot get the "actual sample rate" to change from 1651.61 samples/s. I am trying to use the sample clock to do this, without success. I also tried changing the "Timing" settings in the task, with no luck. Here is a screenshot of the VI I created; I've also attached a copy of the VI file. Any help would be greatly appreciated!

Thank you

Tony

You will need to tell us the model of your device. You can also check its datasheet/manual to see which sample rates are actually supported. Some devices support only a limited set of rates.
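The reported actual rate of 1651.61 S/s happens to equal 51,200 / 31, which is consistent with (but does not prove) a device whose rates are a base rate divided by an integer, as on some NI sound-and-vibration C Series modules such as the NI 9234. Assuming such a device, and assuming the driver coerces a request up to the next supported rate, this Python sketch shows why 500 S/s cannot be reached. `BASE_RATE` and `MAX_DIVISOR` are assumptions to check against the datasheet:

```python
from typing import List

BASE_RATE = 51_200.0   # assumed base rate in S/s; check your device's datasheet
MAX_DIVISOR = 31       # assumed largest divisor


def supported_rates(base: float = BASE_RATE, n_max: int = MAX_DIVISOR) -> List[float]:
    """Rates of the form base / n for n = 1..n_max, highest first."""
    return [base / n for n in range(1, n_max + 1)]


def coerce_up(requested: float, base: float = BASE_RATE, n_max: int = MAX_DIVISOR) -> float:
    """Smallest supported rate that is >= requested."""
    candidates = [r for r in supported_rates(base, n_max) if r >= requested]
    if not candidates:
        raise ValueError(f"{requested} S/s exceeds the maximum of {base} S/s")
    return min(candidates)


if __name__ == "__main__":
    print(round(coerce_up(500), 2))    # 1651.61: below the minimum, so the lowest rate is used
    print(coerce_up(2_000))            # 2048.0 (51200 / 25)
```

If the device does work this way, 500 S/s data would have to come from acquiring at a supported rate and resampling or averaging in software.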

-

DAQmx Wait For Next Sample Clock.vi causing a slowdown

Hello

I have a question about the proper use of DAQmx Wait For Next Sample Clock.

I read analog voltage channels on an NI PCIe-6259 card.

I would like to read single data points as fast as possible, with some processing between each of these points.

I would like an error to be generated if I miss a data point.

From reading the forums, I've gathered that the best way to do this is to use Hardware-Timed Single Point mode.

A simplified program that I use to test this is attached.

If I remove DAQmx Wait For Next Sample Clock.vi, my program seems to work.

I added a counter to check that the total time is as expected.

For example, the program seems to run at the appropriate speed at 120 kHz.

However, without that VI, the program does not seem to generate a warning if I miss a sample.

So I thought the Wait For Next Sample Clock VI could be used to determine whether a data point had been missed, using its "is late?" output.

However, when I add it inside the loop as shown in the attachment, the program slows down considerably.

At high rates such as 120,000 S/s, I get error -209802.

About 14 kHz is the maximum rate before I start getting errors.

My question is: is this the right way to check for a missed sample? I don't understand why Wait For Next Sample Clock.vi causes a slowdown. Without this VI, the program does exactly what I want, except that I do not have strict error checking.

My confusion may come from a lack of understanding of real-time systems. I don't think I am doing 'real time', since I run on an ordinary PC, so maybe I am using some features that I shouldn't.

Thank you

Mike

Mike,

You should be able to get Read to return late errors and warnings by setting the DAQmx Real-Time -> ReportMissedSamp property. I think that if you enable this, you will see errors or warnings (depending on DAQmx Real-Time -> ConvertLateErrorsToWarnings) in the case where you use Read only. I'm a little surprised that you measured your application working at 120 kHz without Wait For Next Sample Clock (WFNSC), although I'm not surprised that it would be significantly faster. I think if you call Read only, you'll read the last available sample regardless of whether you missed samples or not. When you call WFNSC, DAQmx will always wait for the next sample clock, whether you are late or not. In this case, you wait for an additional sample clock that you don't wait for in the Read-only case. Again, I would not expect your loop to keep up at 120 kHz in either case.

The DAQmx real-time features (Hardware-Timed Single Point - HWTSP) are a set of features optimized for single-point operation, and they also provide a mechanism for feedback on whether an application is keeping up with the acquisition. There is nothing inherently wrong with using this feature on a non-real-time OS. However, the scheduler of a non-real-time OS is not going to be deterministic. This means that your 'real-time' application may be interrupted for an unbounded period while the OS goes off to service other applications or whatever else it needs to do. DAQmx will always tell you whether your application is keeping up, but it can do nothing to guarantee that it will. Thus, your application *must* be tolerant of this type of interruption.

There are a few things to consider. If it is important that you perform the action at a given rate, then you should consider using a real-time operating system, or even an FPGA-based approach. If it is not essential to your system, you might consider using HWTSP without reporting missed samples (DAQmx simply gives you the most recent sample), or you could avoid hardware timing altogether and just use on-demand AI to acquire one sample at a time. What is appropriate depends on the requirements of your application.
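The point that a Read-only loop simply returns the latest sample, silently skipping any it was too slow for, can be illustrated with a small simulation. This Python sketch is a model, not DAQmx behaviour: it assumes samples arrive on a perfect clock, that each read returns the newest sample, and that a read waits for the next sample if none is new yet; `simulate_reads` is a hypothetical name.

```python
import math
from typing import List, Tuple


def simulate_reads(rate_hz: float, read_times_s: List[float]) -> Tuple[List[int], int]:
    """Return (sample index returned by each read, total samples never read)."""
    returned: List[int] = []
    skipped = 0
    last = -1
    for t in read_times_s:
        newest = math.floor(t * rate_hz)     # index of the most recent sample clock tick
        newest = max(newest, last + 1)       # nothing new yet: assume the read waits for the next tick
        skipped += newest - last - 1         # samples acquired but never returned
        returned.append(newest)
        last = newest
    return returned, skipped


if __name__ == "__main__":
    # 1 kHz clock; the third iteration is delayed (e.g. by the OS scheduler).
    print(simulate_reads(1_000, [0.0005, 0.0015, 0.0105]))
```

The returned data looks normal even though eight samples were skipped; per the reply, enabling ReportMissedSamp is what makes DAQmx report that kind of gap.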

Hope that helps,

Dan

-

Signal distortion caused by the number of sampled channels and the sampling rate

I am using a USB-6211 DAQ device (250 kS/s) and looking to sample 3 channels at 80 kS/s (LabVIEW 2012).

When I sample one channel, it looks fine (1 Channel_Sampled First_250kS), but when I add another channel, the signal is pulled down, and the effect depends on which channel is sampled first (2 channels (Different) _Sampled First_40kS and 2 Channels_Sampled First_30kS). Adding a third channel pulls it down even more. I also noticed that the sampling rate distorts the signal: the higher the sampling rate, the more the signal is pulled down.

The DAQ device IS sampling the signals "correctly", but when I run my LabVIEW VI, my external hardware begins to read incorrectly because of the signal distortion.

What is the cause, and is there a way to fix this?

I have attached the waveform captures above and can post more if necessary.

Thanks in advance,

WBrenneman

That's exactly what ghosting means: the measurement of later signals is affected by other signals. It usually happens when you have a high-impedance input signal. Adding buffers like you did can help solve this problem by presenting a lower-impedance signal.

Ghosting would probably look worse at higher sampling rates, just as you described, because there is less time between samples for the amplifier to settle to the new voltage level when the multiplexer switches between input signals.
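The settling argument can be made concrete with a first-order model: the source resistance and the amplifier's input capacitance form an RC circuit that must settle after every multiplexer switch. A Python sketch, where the 100 kΩ source and 100 pF capacitance are hypothetical values (not USB-6211 specifications) and conversions are assumed to be evenly spaced, which is the most favourable case:

```python
import math


def settling_time_s(r_source_ohm: float, c_input_f: float, bits: int) -> float:
    """Time for a first-order RC to settle a full-scale step to within 1/2 LSB of an N-bit ADC."""
    tau = r_source_ohm * c_input_f
    return tau * math.log(2 ** (bits + 1))


def time_per_conversion_s(per_channel_rate_hz: float, n_channels: int) -> float:
    """Time available per conversion if multiplexed conversions are spread evenly."""
    return 1.0 / (per_channel_rate_hz * n_channels)


if __name__ == "__main__":
    need = settling_time_s(100e3, 100e-12, 16)     # hypothetical source and input values
    have = time_per_conversion_s(80e3, 3)          # 3 channels at 80 kS/s each
    print(f"need {need * 1e6:.1f} us, have {have * 1e6:.2f} us")
```

With these assumed values the source needs far longer to settle than the multiplexer allows, so each reading is pulled toward the previous channel's voltage; a low-impedance buffer shrinks the time constant and the required settling time.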

-

When dividing the sample clock timebase by N, is the first sample on pulse 1 or pulse N?

I use an external source for the sample clock timebase of an analog input task, and I divide it down by 100 to get my sample clock. Could someone please tell me whether my first sample clock pulse will be generated on the first pulse of the external timebase source, or on the 100th?

I use an M Series device, but can't find a timing diagram in the manual that answers my question.

Thank you.

CAS

CAS,

I don't think it's possible to use the 3 kHz sample clock of the fast card as the sample clock timebase of the slow card and get the first samples to align. The fast card can sample on every pulse of the 3 kHz signal, while the slow card has to satisfy its initial-delay requirement before it can produce a sample clock. If you set this initial delay to the minimum of two timebase ticks, the slow card would end up sampling on clock edges 2, 102, 202, etc. You can set the initial delay to 100, which means the slow card would sample on edges 100, 200, 300..., but you would not get a slow-card reading on the first edge of your sample clock.
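The edge numbering in the reply above follows from a simple rule, sketched here in Python under the stated model (first tick after the initial delay, then one every `divisor` ticks). `sample_clock_edges` is an illustrative helper, not a DAQmx function, and the minimum delay of 2 ticks is taken from the reply:

```python
from typing import List


def sample_clock_edges(initial_delay: int, divisor: int, count: int) -> List[int]:
    """Timebase edge numbers on which a divided-down sample clock fires.

    Model assumption from the reply: the first sample clock comes after
    `initial_delay` timebase ticks, then one every `divisor` ticks.
    """
    if initial_delay < 0 or divisor < 1:
        raise ValueError("initial_delay must be >= 0 and divisor >= 1")
    return [initial_delay + k * divisor for k in range(count)]


if __name__ == "__main__":
    print(sample_clock_edges(2, 100, 3))     # [2, 102, 202]   minimum initial delay
    print(sample_clock_edges(100, 100, 3))   # [100, 200, 300] delay equal to the divisor
```

Under this model no choice of delay of at least two ticks lands the slow card's first sample on edge 1, which is why the first samples cannot be aligned.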

Hope that helps,

Dan

-

Efficiently changing from continuous sample mode to finite sample mode

cDAQ-9188 chassis, AI modules, LabVIEW 9

I want to continuously acquire (and display) data at a fast update rate, and then, when a 'trigger armed' state is detected, switch to a finite, hardware-triggered task with pre-trigger samples, with minimal loss in the perceived update rate.

What is the best way to do this?

Thank you

Collin

I started my answer a long time ago while waiting for a reboot and am only now getting back to it. I see that you got another answer in the meantime. On the subject of reference triggering, here is another link that might help: Acq suite w / Ref Trig

I haven't tinkered with reference triggers a lot, so I'm not sure what is meant in the link above about the task acquiring 'past the end'. Maybe the task continues to fill the buffer until it is just about to overwrite the first pre-trigger sample?

In any case, my original thinking was on a different track. If the reference trigger works for you, it'll be easier. But if the reference trigger causes the acquisition to stop *and* you really need to keep it running permanently, then consider the following:

-------------------------------------------------------------------------------------------

Switching from continuous to finite sampling will require stopping and reprogramming your task, and you will have a 'blind spot' of lost data while that is happening.

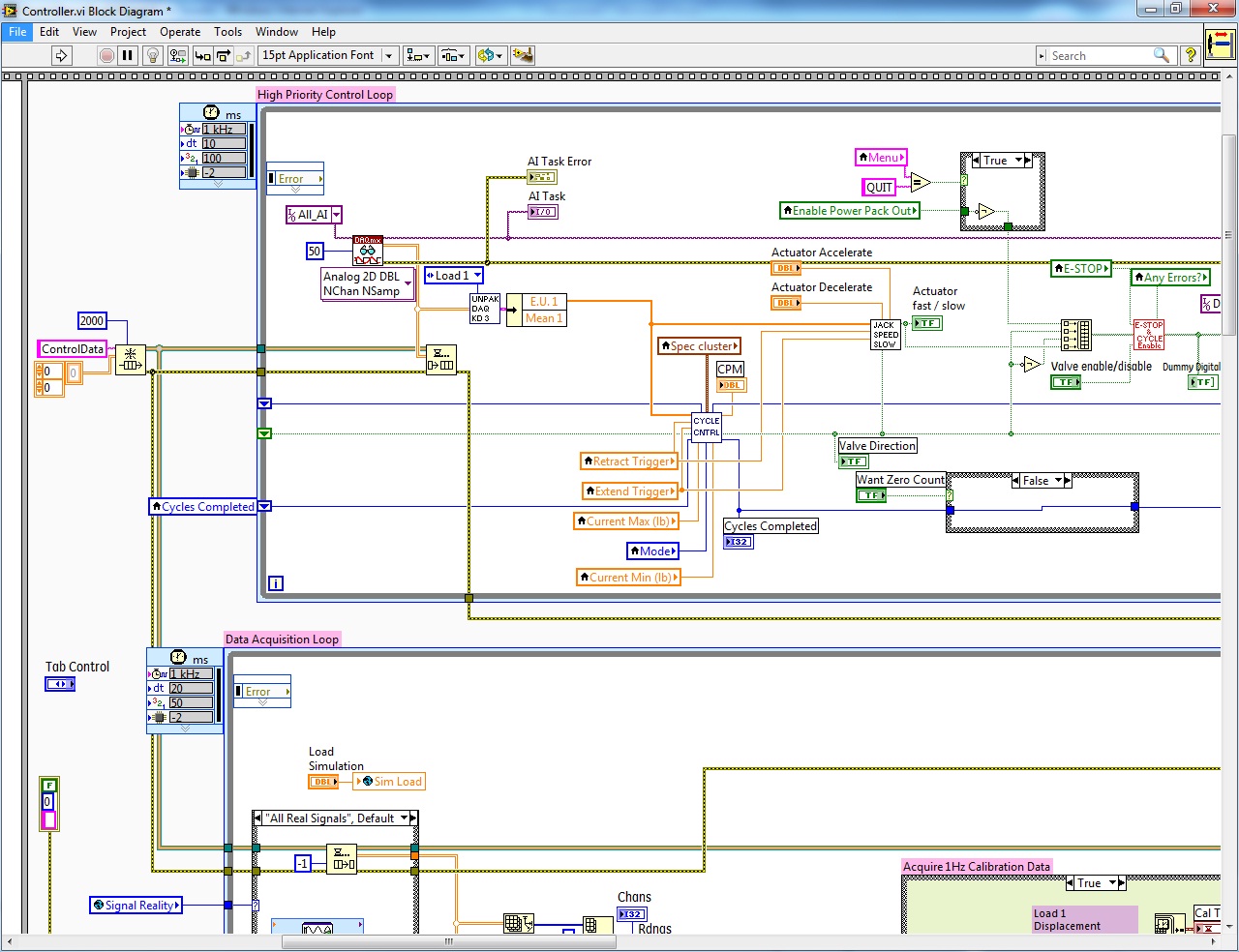

An approach I'd consider is to stick with continuous AI sampling, but also set up a counter task that counts the AI sample clock and captures the count on the trigger signal. The captured count value will be the AI sample number at which the trigger occurred, and you can then easily post-process your AI data to find the subset that represents your data before and after the desired trigger.
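The post-processing step above, cutting the pre- and post-trigger data out of the continuous record once the counter has latched the trigger's sample number, is simple indexing. A Python sketch; `trigger_window` is a hypothetical helper, and the data is assumed to be indexed by absolute sample number from the start of the task:

```python
from typing import List, Sequence


def trigger_window(data: Sequence[float], trigger_sample: int, pre: int, post: int) -> List[float]:
    """Return `pre` samples before the trigger and `post` samples starting at it.

    `trigger_sample` is the AI sample number latched by the counter when the
    trigger arrived. Assumption: a latched count of k means k sample clocks had
    occurred, so sample index k (0-based) is the first post-trigger sample.
    """
    start, stop = trigger_sample - pre, trigger_sample + post
    if start < 0 or stop > len(data):
        raise ValueError("requested window extends beyond the acquired data")
    return list(data[start:stop])


if __name__ == "__main__":
    samples = [float(i) for i in range(1_000)]     # stand-in for continuous AI data
    print(trigger_window(samples, 500, 3, 2))      # [497.0, 498.0, 499.0, 500.0, 501.0]
```

Whether the latched count marks the first post-trigger sample or the last pre-trigger one is worth confirming on the hardware, since it shifts the window by one sample.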

Here is a LabVIEW 2012 snippet of what I mean:

-Kevin P

-

Some or all of the requested samples are not yet acquired: API change responsible?

My Delphi program, which works very well under NI-DAQmx 8.9.0f4 on one set of hardware, fails under 9.0.0f2 on what I have been assured is equivalent hardware. The error is "Some or all of the requested samples are not yet acquired...". This happens even at slow scan rates, and I am starting to suspect that the API changed subtly in the transition from version 8 to 9. How likely is that? Now that I have access to the source code on the new hardware (NI PXI-1044 with an embedded PXI-8110 controller), is there something I can do to check this?

Hi Francis,

Does your program still call DAQmxCfgDigEdgeStartTrig() for all the slave tasks? If you do not do this, the devices will not start acquiring data at the same time, and since you pass 0 for the timeout parameter to DAQmxReadBinaryI16(), out-of-sync devices could certainly produce timeout errors.

In addition, you must make sure you start the slave devices before the master device, so that they are already waiting for the trigger when the master device starts.

Brad

-

Application freezes - "Some or all of the requested samples are not yet acquired"

Hello

I have a VI that controls a test bench. The VI was created using LabVIEW 8.6. It works fine and controls the test correctly when run on the PC where it was created, in the full development system (that is, not built into a stand-alone executable).

The problem occurs when I build an executable and try to run it on another PC. Initially the application seems to work OK, but then after a random time (anywhere between 30 seconds and 12 hours), the application starts to run slowly and then loses communication with the hardware (cDAQ-9178). After that happens, when I open MAX and try to run the task, I get an error message that says "Some or all of the requested samples are not yet acquired..."

When the application starts to slow down, I see a reduction in available physical memory, and the usage of one CPU increases to 100%.

I built the application using LabVIEW 2012 and use MAX 5.3.1 on the PC hosting the executable.

Below, I have included a screenshot of the area of the block diagram that is probably most relevant to this problem. In MAX, the task is set to continuous samples, 1k samples to read at a rate of 1k.

Does anyone have any suggestions for me to try, please?

Thank you

So now, you can try what BCL@Servodan suggested: create and start the DAQmx tasks before your loop and see if it helps. Removing the timed loop would also be advisable; set the sampling rate before a normal While Loop, as in the official example.

This would not require too much effort and can help...

-

In all other respects my XP system is up to date, except for SP3, which I never needed before; SP1 and SP2 were installed when they were new. Now SP3 is required to upgrade to some new programs. Will installing SP3 at this late date overwrite the latest system updates or interfere with programs like IE 8? I don't want my system to revert to older settings that are now outdated. If system settings are changed, how do I know which ones?

No, it will not interfere.

-

ATT00004

An e-mail of mine is stored in My Documents as the file ATT0004. Opening it shows text that I cannot read. How do I convert it to text?

This looks like an attachment from Outlook that was sent in RTF format. Try asking the sender to send it in HTML or plain text format rather than Rich Text format.

Otherwise, you will need to be much more detailed in your question.

Steve
