Does SQLFire require a separate memory allocation?

Hello

I have been trying out SQLFire and getting things set up. I have a question about how data is managed in memory, given the memory available to SQLFire.

First of all, does SQLFire have a default amount of memory allocated when we start a server? I didn't notice any setting during server startup to say it could use x amount of memory.

Also, assume that I have 2 members in my cluster (running with 4 GB of memory available) and millions of rows that cannot fit in the available memory. Would the data overflow to disk?

Cheers

-Imran

You can explicitly set the initial heap and max heap for the server at startup. See the sqlf server help.

By default, rows are held only in memory.

If you set the initial heap and max heap and there is no capacity left, you will get an exception. If you do not use these parameters, you run the risk of the server running out of memory.

To overflow your tables to disk, you must explicitly create the table with the overflow configuration. Keys and indexes remain in memory even when you overflow.

Tags: VMware

Similar Questions

-

Change the memory allocated for the graphics?

Hi, I have an Equium A60-181 with ATi Mobility Radeon 7000 graphics. At the moment this GPU uses 64 MB of my system RAM, which leaves only 448 MB of RAM (512-64) for Windows. For a video resolution of 1024 x 768 at 32 bit that is a ridiculous amount of memory, since it only really requires 4 MB. I'm not interested in games or 3D graphics, so my question is whether I can change the allocated memory to recover more system memory.

Cheers,

Richard S.

Hi Richard,

The ATi drivers include an enhanced display option (Devices > Display Manager) that lets you change the UMA value. My SA30 supports 16 MB, 32 MB, 64 MB and 128 MB.

The same UMA value can be adjusted in the BIOS setup.
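As a rough check on the figures in the question, the memory needed for a single frame can be worked out directly. This is only a sketch: `framebuffer_bytes` is a name made up here, and it counts one 2D frame, not anything else the driver may keep in its UMA share.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to hold one frame at the given resolution and colour depth."""
    return width * height * bits_per_pixel // 8


# Figures from the question: 1024 x 768 at 32 bit, 512 MB of RAM, 64 MB UMA share.
frame = framebuffer_bytes(1024, 768, 32)
frame_mib = frame / 2**20           # size of one frame in MiB
system_ram_left = 512 - 64          # MB left for Windows with a 64 MB share

print(f"one frame: {frame} bytes ({frame_mib} MiB)")
print(f"RAM left for Windows: {system_ram_left} MB")
```

One frame comes to 3 MiB, which is in line with the "about 4 MB" estimate in the question.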

HTH

-

Cannot install software due to lack of memory allocated

I use Fusion 4 on a MacBook Pro with 4 GB. When I try to install new software in Windows, only 1 GB of memory is allocated and the software requires 2 GB. How do I adjust the memory allocation?

Shut down the Windows virtual machine (Virtual Machine menu > Shut Down).

Click Virtual Machine > Settings > Processors & Memory.

Change the memory value to 2048 MB. Click Show All.

Close the settings window and start Windows.

-

Linux kernel parameters and Oracle memory allocations.

I'm currently creating an Oracle 11g R2 database on Linux (SLES 11). The OS and the DB software are both 64-bit.

I was wondering about the best way to allocate shared memory so that Automatic Memory Management can recognize 12G of memory (which leaves 4G for the operating system and other bits).

The kernel parameters I have set are:

SHMMAX: 8589934592 (the recommended half of physical memory).

SHMALL: 3145728 (giving a total of 12 GB of shared memory allocation, given that the page size is 4096).

The way I read the documentation, this should free up enough shared memory to allow a memory_max_target and memory_target of "11G". I'm going for 11G to make sure I'm not caught out by a few bytes (if it all works, I will grow it to 12G).

However, whenever I try a startup, I get the 'ORA-00845: MEMORY_TARGET not supported on this system' error.

It goes away if I create a big shmfs block using a mount command, but I was under the impression you no longer had to do that with a 64-bit system and Oracle 11g.

Could someone clarify a bit what I'm doing wrong and where I should be looking for the answer?

Cheers,

Rich

See Note ID 749851.1 and ID 460506.1:

With AMM, all SGA memory is allocated by creating files under /dev/shm. When the Oracle DB allocates the SGA that way, HugePages are not reserved or used. The use of AMM is absolutely incompatible with HugePages.

Essentially, AMM requires /dev/shm.
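To make the numbers in this thread concrete, here is a small arithmetic sketch. It uses the SHMMAX, SHMALL and page-size values from the question; the helper `dev_shm_large_enough` and the sample /dev/shm sizes are hypothetical, and the check assumes (per the reply above) that AMM needs /dev/shm to be at least as large as MEMORY_TARGET.

```python
GIB = 2**30
PAGE_SIZE = 4096            # bytes, as stated in the question

SHMMAX = 8589934592         # largest single shared memory segment, in bytes
SHMALL = 3145728            # total shared memory, in pages

shmmax_gib = SHMMAX / GIB                 # 8.0
shmall_gib = SHMALL * PAGE_SIZE / GIB     # 12.0
memory_target = 11 * GIB                  # the "11G" the poster is aiming for


def dev_shm_large_enough(dev_shm_bytes: int, memory_target_bytes: int) -> bool:
    """Hypothetical check: AMM backs the SGA with files under /dev/shm,
    so that filesystem has to be at least as big as MEMORY_TARGET."""
    return dev_shm_bytes >= memory_target_bytes


print(f"SHMMAX = {shmmax_gib} GiB, SHMALL = {shmall_gib} GiB")
print("8 GiB /dev/shm ok? ", dev_shm_large_enough(8 * GIB, memory_target))
print("12 GiB /dev/shm ok?", dev_shm_large_enough(12 * GIB, memory_target))
```

So the kernel parameters do add up to 12 GiB as the poster calculated; what matters for AMM is the size of the /dev/shm mount rather than those parameters alone.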

-

Get the DLL string (memory allocated for DLL)

Hi, I'm aware there are a lot of discussions around this topic, but there are a lot of variations, I've never used LabVIEW before, and I seem to be struggling at a very basic level. I hope someone can help me with the simple, specific test case below to put me on the right track before I pull out my remaining hair.

I've created a DLL with a single function, "GenerateGreeting". When it is called, it allocates enough memory for the string "Hello World!\0" at the pointer pGreeting, copies the string to that pointer, and sets the GreetingLength parameter to the number of bytes allocated (in the DLL I ultimately want to use, there is a DLL function to free memory allocated this way).

I created a header file to go with the DLL containing the following line.

extern __declspec(dllimport) int __stdcall GenerateGreeting(char* &pGreeting, int &GreetingLength);

I then imported the header file into LabVIEW using the Import Shared Library wizard. That created a "Generate Greeting.vi", and everything seems somewhat sensible to me (although that doesn't mean a lot right now). When I run the VI, "GreetingLength out" correctly displays 13, the length of the string, but "pGreeting out" shows only three or four junk characters (which vary on each run) instead of the expected string.

The pGreeting parameter is set to the 'String' type, with the string format "C String Pointer" and a Minimum size of currently 4095. I think the problem is that the DLL wants to allocate the memory for pGreeting: the caller is supposed to pass an unallocated pointer and let the DLL allocate the right amount of memory for the string, but LabVIEW expects the DLL to write into its preallocated buffer. How do I do this with LabVIEW? Most of the functions in the DLL I ultimately want to use work this way, so I hope it's possible. Or do I have to rewrite all my DLL functions to use buffers allocated by the caller?

The VI, header and DLL are attached; tips appreciated. Edit - cannot attach the DLL or the header.

tony_si wrote:

extern __declspec(dllimport) int __stdcall GenerateGreeting(char* &pGreeting, int &GreetingLength);

Well, char* &pGreeting is really a C++ thing (no C compiler I know would accept it), and it basically means that the char pointer is passed by reference. So technically it's a doubly referenced pointer; however, nothing in C++ specifies that reference parameters must be implemented as a pointer at the hardware level. A C++ compiler builder is therefore free to use some other mechanism that the target CPU architecture supports. However, for the C++ compilers I know, it really is just syntactic sugar and is implemented internally as a pointer.

LabVIEW has no data type that lets you configure this directly. You will have to configure it as a pointer-sized integer passed as a pointer to value, and then use a MoveBlock() call or the support VI GetValuePtr() to copy the data from the pointer into a LabVIEW string.

ALSO: You need to know how the DLL allocates the pointer so that you can deallocate it correctly after each call to this function. Otherwise you will probably create a memory leak; since you say the first 4 bytes in the returned buffer always change, the function seems to allocate a new buffer on every run, and you need to deallocate it properly. Unless the DLL uses a Windows API function such as HeapAlloc() for this, it should also export a matching function to deallocate the buffer. Functions like malloc() and free() from the C runtime are not necessarily linked to the same runtime version in the caller and the callee, so calling free() in the caller on a buffer that was allocated with malloc() in the DLL may not operate on the same heap and results in undefined behavior.

-

Change CE 6.0 memory allocation

I own a 7" Sylvania netbook with 128 MB of RAM and Windows CE 6.0. There is a slider to change the memory allocation between PROGRAM and STORAGE, but it won't budge. To get YouTube running on this thing, I need to go from 68 MB of RAM allocated to running programs to about 130 MB, taking it from storage (using an SD card to make up the difference - easy). Is there a way to unlock the slider or modify the default setting through the registry hive files? I have already made some adjustments with explore in this way and have managed to add a file to it. I understand hex. Thank you!

Hello GusCD6,

The best place to ask your question about Windows CE 6.0 is in the MSDN forums for Windows Mobile development. Click here for a link to the Windows Mobile Development Forum.

The experts are there and would be better able to solve your Windows CE problem and answer your questions.

Sincerely,

Marilyn

-

Maximum memory allocation for HP Pavilion dv7-7010us

HP Pavilion dv7-7010us

Windows 7 (64-bit)

Hello!

As the subject says, I'm looking for the maximum memory allocation for my new HP Pavilion dv7-7010us notebook.

I am aware that my computer has two memory slots, filled with one 2 GB DDR3 SDRAM module and one 4 GB DDR3 SDRAM module.

If anyone could also tell me the ideal combination of modules (2 x 4 GB, 2 x 16 GB, etc.) to get the maximum memory allocation, that would be much appreciated. Thank you!

-Angelo

Hi, Angelo:

Here is the link to the service manual for your laptop.

http://h10032.www1.HP.com/CTG/manual/c03221579.PDF

Supported memory configurations are in Chapter 1, page 4.

Paul

-

Total page file space shown in System Information after changing the virtual memory allocation?

I thought I understood that I could set up a pagefile.sys on any available partition in Windows if I wished, for example to create more virtual memory or to move the page file to another drive. However, the changes I make on my computer do not appear in System Information after a reboot. Why is this?

I have successfully configured an internal auxiliary drive to hold a pagefile.sys, using the advanced system settings in the computer's System Properties. The page file created on the auxiliary drive is one and a half times the size of the computer's physical memory.

As I had created a larger page file on the auxiliary drive, I then decided to reduce the page file on the boot drive to 300 MB. When I made the changes, I clicked Set, then OK, and then rebooted the computer.

After restarting the computer, I checked System Information for a summary of the changes I had made to the page file and virtual memory space, and I was disappointed to see that the page file on the auxiliary drive does not show up. System Information lists only the 300 MB page file space in C:\pagefile.sys, and there is no trace of the larger page file that I created on the auxiliary drive. Why is that?

If I go back to the Virtual Memory window in the advanced system settings, it clearly shows that I changed two page files. Yet System Information shows only the page file on the boot drive. Why is that?

I still use Internet Explorer with only 300 MB of page file space, and it actually runs faster than with the default virtual memory allocation. However, the system soon slows down after opening about 5 instances of Internet Explorer and starts asking me to close application windows to save memory. So why does the system not switch to using the page file on the auxiliary drive?

Hello

Thanks for posting your query in Microsoft Community.

I suggest you post this query in the TechNet forum for better support.

https://social.technet.Microsoft.com/forums/en-us/home

Be sure to visit Microsoft Community, if you have any questions in the future.

-

We use a desktop computer with an Intel i3 3220 CPU and Windows 7 Ultimate 32/64-bit. How much graphics memory will be allocated out of my 4 GB of RAM? Also, please let me know how to check and change the allocation.

Hi Brandon,

I suggest you see the following link on how memory allocation works.

Usable memory may be less than the memory installed on Windows 7 computers

-

Oracle 10g, how to determine the memory allocated is healthy or sufficient.

Hi guys,

I have a 10.2.0.5 production database.

Currently, my server has 8 GB of physical RAM.

3 GB is allocated to the SGA and 1 GB to the PGA.

Let's say that one day the application (for example, WebLogic) needs to increase its connection pool from 20 to 50.

How can we tell whether the allocated memory is sufficient for the existing load as well as the increased workload?

CPU is fairly straightforward, as we can monitor the load on the CPU. If the load is low, I assume it is quite safe to increase the connections.

Please share your experiences of dealing with this situation.

Thank you

Chewy wrote:

How are we able to know if the memory allocated is sufficient for the existing load as well as the increase in workload?

There is a set of memory advisor views that will tell you whether your memory structures are appropriately sized:

v$db_cache_advice

v$shared_pool_advice

v$java_pool_advice

v$sga_target_advice

v$pga_target_advice

--

John Watson

Oracle Certified Master s/n

http://skillbuilders.com

-

Virtual machine consumes all allocated memory

I have 4 ESXi 4.1 hosts on Dell PE R610 servers. I used the Dell installation ISO from their support site to install ESXi 4.1.

I noticed that all virtual machines consume all the memory allocated to them.

In other words, if I look at the resource allocation, all of the VM's memory is private.

None of it is shared.

I did not make reservations for the virtual machines.

Does anyone have any ideas as to why this could happen?

Thank you

This "problem" has been discussed a lot already, see threads like these:

http://communities.VMware.com/message/1487356#1487356

In short:

ESX 4.x uses large memory pages by default. Finding duplicate 2 MB pages for TPS is almost impossible. You can set the advanced setting Mem.AllocGuestLargePage to 0 if you want to force the use of small pages, but ESX breaks large pages down itself under memory contention.

-

Resizing of the memory allocated to the virtual machine

Hello

I use VMware Player on an XP host with an Ubuntu guest.

I was wondering whether I could resize the memory of my Ubuntu virtual machine by changing the value of the memsize variable from 512 to 1024 in the vmx configuration file, or whether something more complex needs to be done?

Thank you very much.

T bar

Yes, with the virtual machine shut down, not suspended, and it's best if you close the VMware product too.

Also, have you looked in the VMware Player help file? In VMware Player 2.5 (I haven't looked at VMware Player 3 yet), look under "Running VMware Player" for the topic on changing the memory allocation.
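For anyone who prefers to script the edit, here is a minimal sketch of changing the memsize entry in the text of a .vmx file. It assumes the usual `memsize = "512"` line format; the function name `set_memsize` and the sample file contents are made up for illustration, and the virtual machine should be shut down first, as noted above.

```python
import re

# Matches a line such as: memsize = "512"
MEMSIZE_LINE = re.compile(
    r'^([ \t]*memsize[ \t]*=[ \t]*)"\d+"[ \t]*$',
    re.IGNORECASE | re.MULTILINE,
)


def set_memsize(vmx_text: str, megabytes: int) -> str:
    """Return the .vmx text with memsize set to the given number of megabytes.

    If no memsize line exists, one is appended at the end.
    """
    new_text, count = MEMSIZE_LINE.subn(
        lambda m: f'{m.group(1)}"{megabytes}"', vmx_text
    )
    if count == 0:
        new_text = vmx_text.rstrip("\n") + f'\nmemsize = "{megabytes}"\n'
    return new_text


# Made-up sample contents, not a complete .vmx file.
sample = 'displayName = "Ubuntu"\nmemsize = "512"\nnumvcpus = "1"\n'
updated = set_memsize(sample, 1024)
print(updated)
```

Working on the text rather than the file keeps the sketch easy to test; in practice you would read the .vmx file, keep a backup copy, and write the result back.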

-

iOS app crashes on return from CameraUI - a memory allocation problem?

Hey all

I'm trying to finish my first application.

When running on iOS, the application SOMETIMES crashes after returning from CameraUI (on "Use" / MediaEvent.COMPLETE or on "Cancel" / Event.CANCEL).

When I close some of the other applications running on my iPhone 3GS (and not many are open), the problem goes away, which makes me think it is a memory allocation problem.

In that respect, can I trust iOS to shut down inactive applications in order to allocate more memory to my currently active AIR application?

(there is no memory leak)

This is an iPhone 3GS running iOS 4.3.5.

The app is made with Flash Pro 5.5 overlaid with the AIR 3.1 SDK and deployed using the "deploy to app store" type (which should be the most free).

(no crashes on Android or desktop versions)

Has anyone had this CameraUI problem, or a similar one where an application crashes if more than a certain number of applications are open?

thanx

Saar

Hello

Thanks for reporting the bug. This problem is known to us, and it is currently under investigation. As far as I know, there is no workaround.

Kind regards

Samia

-

We have a 32-bit 10.1.2.3 B2B instance on Linux. We have dedicated as much memory as possible to the JVM, and for some messages we receive memory allocation errors in native code via JNI (EDIFACT). Where does the native code allocate its memory?

My real question is whether we should allocate less memory to the Java virtual machine in order to leave more of the 32-bit address space available to the operating system for JNI allocations, or what we should do instead. There is plenty of physical memory on the machine (16 G, no more than 4 G), so it must have something to do with the 32-bit limitation.

My mistake. I'm not sure which API Oracle used, but for native code the memory must be allocated in the OS heap and not in the Java heap.

When a large chunk of memory is requested at run time, the OS allocator will try to find it in the OS heap, and if it cannot, a bad_alloc exception is thrown (as far as I know, bad_alloc is a class in the C++ standard library). It takes a lot of debugging to find the exact cause and a solution, and for this Oracle Support is certainly the right door to knock on.

There is plenty of physical memory on the machine (16 G, no more than 4 G), so it must have something to do with the 32-bit limitation.

This statement is interesting because, as far as I know, memory on Linux does not need to be defragmented, so if 4G of memory is available the bad_alloc exception should not occur. However, I still cannot comment, because there are other factors that should be considered as well, such as the size of the payload, environment settings, the memory limit of the user concerned, the maximum memory a process is allowed to allocate, etc.

Please check the above factors and try to find any pattern or commonality between the exceptions. I'm not a Linux expert, but again, it seems that the problem lies with the OS and its parameters.
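To illustrate the trade-off raised in the question, here is a rough arithmetic sketch of how the Java heap and native (JNI) allocations compete inside one 32-bit process. All figures are assumptions for illustration: a 3 GiB user address space (a common user/kernel split on 32-bit Linux), a hypothetical 256 MiB of other JVM overhead, and two made-up heap sizes. None of them come from the B2B installation discussed here.

```python
GIB = 2**30
MIB = 2**20

ADDRESS_SPACE = 2**32               # everything a 32-bit pointer can address
ASSUMED_USER_SPACE = 3 * GIB        # assumption: 3G/1G user/kernel split


def native_headroom(java_heap_bytes: int,
                    other_jvm_bytes: int = 0,
                    user_space_bytes: int = ASSUMED_USER_SPACE) -> int:
    """Address space left for native allocations after the Java heap and
    other JVM overhead (thread stacks, code, and so on) are taken out."""
    return user_space_bytes - java_heap_bytes - other_jvm_bytes


# Hypothetical settings: a very large heap versus a more modest one.
big_heap = native_headroom(2560 * MIB, other_jvm_bytes=256 * MIB)
small_heap = native_headroom(1536 * MIB, other_jvm_bytes=256 * MIB)

print(f"address space: {ADDRESS_SPACE // GIB} GiB")
print(f"headroom with 2560 MiB heap: {big_heap // MIB} MiB")
print(f"headroom with 1536 MiB heap: {small_heap // MIB} MiB")
```

Under these assumptions, shrinking the Java heap is what frees address space for native code; the 16 G of physical memory does not change the per-process limit.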

Kind regards

Anuj

-

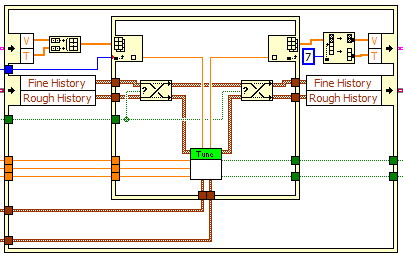

Buffer allocation and minimizing memory allocation

Hello

I am trying to minimize buffer allocation and memory activity in general. The code will run 'headless' on a cRIO, and our experience, and that of the industry as a whole, is that you should eliminate or minimize dynamic memory allocation and deallocation.

In our case we unfortunately do a lot of string manipulation, so eliminating all memory alloc/dealloc is a big task (impossible?).

Which leaves me with the "minimize" strategy.

I did some investigating and VI profiling, and played with the In Place Element structure (IPE) to see whether it helps.

For example, I have a few places where I transpose some 2D arrays. If I use the 'Show Buffer Allocations' tool, the attached screenshot would indicate that I am better off NOT using the IPE, both for the array transpose operation and for the index element operations. As that seems counter-intuitive to me, I must have some basic misunderstanding of the 'Show Buffer Allocations' tool, of the IPE, or of both... The tool shows that a buffer is allocated going into the IPE and again coming out of it, and the Transpose 2D Array has an allocation in and out even inside the IPE, giving twice as many allocations as not using the IPE.

As for indexing, using the IPE seems to result in 1.5 times more allocations (not to mention that I have to wire the index numbers individually, versus letting LabVIEW auto-index from 0 in the non-IPE version).

The example shows string conversions (not great from a memory alloc/dealloc point of view, because LabVIEW cannot easily determine the length of the string 'array'), but I have other sections of code that do much the same kind of thing while staying numeric throughout.

I would be grateful if someone could help me understand why the IPE seems to increase rather than decrease memory activity.

(PS: the 2D array is used in the 'incoming' orientation by the rest of the code, so building the data array differently to avoid the conversion does not seem useful either.)

QFang wrote:

-My reasoning (even if it was wrong) was to tell the compiler, "I do not need an extra copy of these arrays, I'm just going to index out certain values..." Because a fork in a wire is a fairly easy way to increase the chance of data duplication, I thought that with the IPE's index function, which by its nature eliminates the need to split or fork the array wire (there is an in and an out), I would avoid duplication, or at least have a better chance of avoiding it.

It is important to realize that buffer allocations occur at nodes, not on wires. Although it may seem that forking a wire makes a copy of the data, that is not the case. A fork only results in incrementing a reference count. LabVIEW is copy-on-write: no copy is made in memory until the data is actually modified, and even then the copy is made only if the original needs to be kept. If you fork an array to several Index Array functions, there is still only one copy of the array. In addition, the LabVIEW compiler tries to schedule operations to avoid copies, so if several branches read from a wire but only one modifies it, the compiler tries to schedule the modifying operation to run after all the reads are done.
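The reference-count and copy-on-write behaviour described above can be modelled in a few lines of text code. This is only a toy model in Python, not how LabVIEW is implemented; the class names `CowArray` and `_Buffer` and the `copies` counter are invented for the illustration.

```python
class _Buffer:
    """One block of data plus a count of how many 'wires' refer to it."""

    def __init__(self, data):
        self.data = list(data)
        self.refcount = 1


class CowArray:
    """Toy copy-on-write array: forking shares the buffer, writing may copy it."""

    copies = 0  # number of real buffer copies made so far

    def __init__(self, data=(), _buffer=None):
        self._buf = _buffer if _buffer is not None else _Buffer(data)

    def fork(self):
        # Forking a "wire" only bumps the reference count.
        self._buf.refcount += 1
        return CowArray(_buffer=self._buf)

    def __getitem__(self, index):
        # Reads never copy.
        return self._buf.data[index]

    def __setitem__(self, index, value):
        # Copy only if someone else still needs the original data.
        if self._buf.refcount > 1:
            self._buf.refcount -= 1
            self._buf = _Buffer(self._buf.data)
            CowArray.copies += 1
        self._buf.data[index] = value


a = CowArray([1, 2, 3])
b = a.fork()
c = a.fork()
reads = (b[0], c[1])        # several readers, still one buffer
copies_after_reads = CowArray.copies
b[0] = 99                   # first write to shared data triggers the only copy
print(copies_after_reads, CowArray.copies, a[0], b[0], c[0])
```

In the model, three handles share one buffer until the write, which matches the point that forking an array to several readers does not by itself duplicate data.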

QFang wrote:

After looking at several more cases (as I write this post), I can't find any operation using a table that I do in my code that reduces blackheads by including a preliminary International examination... As such, I must STILL understand IPE properly, because my conclusion at the present time, is that haver you 'never' in them for use. Replace a subset of a table? no need to use them (in my code). The indexing of the elements? No problem. .

A preliminary International examination is useful to replace a subset of the table when you're operating on a subset of the original array. You remove the items that you want, make some calculations and then put back them in the same place in the table. If the new table subset comes from somewhere other than the original array, then the POI does not help. If the sides of entry and exit of International preliminary examination log between them, so there no advantage in PEI.

I am attaching a picture of code I wrote recently that uses the IPEs with buffer allocations indicated. You can see that there is only one game of allowances of buffer after the Split 1 table D. I could have worked around this but the way I wrote it seemed easier and the berries are small and is not time-critical code so there is no need of any optimization. These tables is always the same size, it should be able to reuse the same allowance with each iteration of the VI, rather than allocate new arrays.

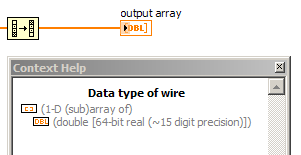

Another important point: pads can be reused. You might see a dot of distribution on a shift register, but that the shift register must be assigned only once, during the first call to the VI. Every following call to the VI reuses this very spot. Sometimes you do not see an allocation of buffer even if it happens effectively. Resizing a table might require copying the whole table to a new larger location, and even if LabVIEW must allocate more memory for it, you won't always a point of buffer allocation. I think it's because it is technically reassign an existing table instead of allocating a new, but it's always puzzled me a bit. On the subject of the paintings, there are also moments where you see a point to buffer allocation, but all that is allocated is a 'subfield' - a pointer to a specific part of an existing table, not a new copy of the data. For example 1 reverse D table can create a sub-table that points towards the end of the original with a 'stride' array-1, which means that it allows to browse the transom. Same thing with the subset of the table. You can see these subtables turning on context-sensitive help and by placing the cursor on a wire wearing one, as shown in this image.
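The sub-array idea (a view with a start point and a stride instead of a copy) has a close analogue in Python's `memoryview`, which may make it easier to picture. This is an analogy only, not LabVIEW's internal representation.

```python
data = bytearray([10, 20, 30, 40])      # the one real buffer

view = memoryview(data)
reversed_view = view[::-1]              # like Reverse 1D Array: same bytes, stride -1
subset_view = view[1:3]                 # like Array Subset: same bytes, offset 1

stride = reversed_view.strides          # step, in bytes, between consecutive elements
same_buffer = reversed_view.obj is data # the view still refers to the original buffer

data[0] = 11                            # change the original...
print(stride, same_buffer)
print(reversed_view.tolist())           # ...and the reversed view sees the change
print(subset_view.tolist())
```

Neither view allocates a second copy of the four bytes; each only records where to start and which way to step through the existing buffer.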

Unfortunately, there isn't much you can do about string allocations. Fortunately, I have never seen that be a problem, and I have had systems run continuously for months that used strings on limited hardware (Compact FieldPoint controllers), both for logging to disk and for TCP communication. I recommend moving string operations out of time-critical areas and into separate loops. For example, I put my TCP communication in a separate loop that also parses the incoming strings into specific data, which is then sent by queue (or RT-FIFO) to the time-critical loops so that those loops only handle fixed-size data. Same idea with logging: do all the string conversion and file handling in a separate loop.
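The loop separation recommended above looks roughly like this in text form. It is a Python sketch of the pattern rather than LabVIEW code: the "channel:value" message format, the function names and the sample messages are all invented for the example, and a thread-safe queue stands in for the queue or RT-FIFO.

```python
import queue
import threading


def comm_loop(lines, out_queue):
    """Non-critical loop: does all the string parsing, then forwards
    only fixed-size numeric data (an int and a float) to the other loop."""
    for line in lines:
        channel_text, value_text = line.split(":")
        out_queue.put((int(channel_text), float(value_text)))
    out_queue.put(None)  # sentinel: no more data


def control_loop(in_queue, latest):
    """Time-critical loop: never touches strings, only the parsed numbers."""
    while True:
        item = in_queue.get()
        if item is None:
            break
        channel, value = item
        latest[channel] = value


messages = ["0:1.5", "1:2.25", "0:3.0"]   # made-up incoming strings
q = queue.Queue(maxsize=8)                # bounded, like a fixed-size FIFO
latest = {}

producer = threading.Thread(target=comm_loop, args=(messages, q))
consumer = threading.Thread(target=control_loop, args=(q, latest))
producer.start()
consumer.start()
producer.join()
consumer.join()

print(latest)
```

All variable-length string work stays in `comm_loop`, so the loop standing in for the time-critical code only ever handles values of a fixed size.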
