Hash join plan with a very large inner table causes ORA-01652

Hello

We have a select with a dubious plan:

SELECT * FROM T1 WHERE T1.F1 IN (SELECT T2.F1 FROM T2)

Plan:

| Id | Operation            | Name | Rows | Bytes | TempSpc | Cost  |
| 0  | SELECT STATEMENT     |      | 59M  | 12G   |         | 1401K |
| 1  |  HASH JOIN SEMI      |      | 59M  | 12G   | 55G     | 1401K |
| 2  |   TABLE ACCESS FULL  | T1   | 260M | 52G   |         | 489K  |
| 3  |   TABLE ACCESS FULL  | T2   | 209K | 1843K |         | 84    |

As you can see, T1 has 260 million records (52 GB) and T2 has approximately 200,000 records (approximately 2 MB).

Oracle chooses the hash join with T1 as the inner table. Thus all of T1 has to be read into memory first.

When the memory is full, the TEMP tablespace is filled.

And because the TEMP tablespace is smaller than 55 GB, an error occurs:

ORA-01652: unable to extend temp segment by 128 in tablespace TEMP

Question:

Why does Oracle choose a hash join with such a big inner table?

And how can we solve this problem?

Other notes:

T1 and T2 were analyzed today.

Assume there are no indexes on either table.

The database is Oracle 9i Enterprise.

Description of the Oracle 9i hash join (as you can see, the smaller table is supposed to be read first):

http://download.Oracle.com/docs/CD/B10501_01/server.920/a96533/optimops.htm#76074

Thanks.

Hello

Oracle 9i cannot do a HASH JOIN RIGHT SEMI; that operation was introduced in 10g.

----------------------------------------------------------------------------------------------------------------------------
| Id | Operation | Name | Starts | E-Rows | A-Rows | A-Time | Buffers | Reads | OMem | 1Mem | Used-Mem |
----------------------------------------------------------------------------------------------------------------------------
| 0 | SELECT STATEMENT | | 1 | | 1 |00:00:55.73 | 162K| 162K| | | |
| 1 | SORT AGGREGATE | | 1 | 1 | 1 |00:00:55.73 | 162K| 162K| | | |
|* 2 | HASH JOIN RIGHT SEMI| | 1 | 8147 | 0 |00:00:55.73 | 162K| 162K| 988K| 988K| 1031K (0)|
| 3 | TABLE ACCESS FULL | T2 | 1 | 18 | 18 |00:00:00.01 | 3 | 0 | | | |
| 4 | TABLE ACCESS FULL | T | 1 | 11M| 11M|00:02:09.99 | 162K| 162K| | | |
----------------------------------------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):
---------------------------------------------------
2 - access("OBJECT_NAME"="USERNAME")
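
To make the difference between the two plans concrete, here is a minimal Python sketch of the two build strategies. It is only an illustration, not Oracle's implementation: the function names and the toy rows are hypothetical stand-ins for T1 and T2. The first function hashes the small input and streams the large one (the HASH JOIN RIGHT SEMI shape); the second hashes the large input, which is what forces the spill to TEMP in the 9i plan.

```python
def semi_join_build_small(t1_rows, t2_keys):
    """RIGHT SEMI shape: hash the small input, stream the large one."""
    build = set(t2_keys)             # hash table holds only the distinct T2 keys
    for row in t1_rows:              # T1 is probed row by row, never materialized
        if row["F1"] in build:
            yield row


def semi_join_build_large(t1_rows, t2_keys):
    """9i SEMI shape: hash the large input T1, then probe it with T2."""
    build = {}                       # hash table ends up holding every T1 row
    for row in t1_rows:
        build.setdefault(row["F1"], []).append(row)
    for key in set(t2_keys):         # each matching bucket is emitted once
        yield from build.pop(key, [])


# Toy data (assumption): 10 rows standing in for T1, 3 keys standing in for T2.
t1 = [{"F1": k % 5, "ID": k} for k in range(10)]
t2 = [1, 3, 3]

small = sorted(r["ID"] for r in semi_join_build_small(t1, t2))
large = sorted(r["ID"] for r in semi_join_build_large(t1, t2))
```

Both functions return the same rows; only the size of the structure held in memory differs, which is the point of building on the smaller input.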


Similar Questions

-

Power function with a very large number & HEX array

Hello

I have 3 problems and I would be grateful if anyone can help. I have attached

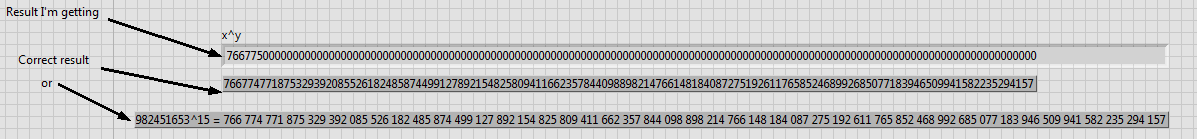

(1) I need to calculate 982451653 ^ 15. I used the "Power of X" function, but the result I receive is incorrect.

Is there a way to get the correct result?

(2) After that I need to do a calculation on the result, but I get nothing. I use the «Quotient & Remainder» function.

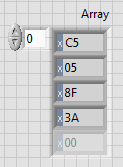

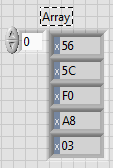

(3) I need to convert the number to HEX (982451653 --> 3A8F05C5) and write it to an array, grouped in pairs starting from the back, as shown below:

3A8F05C5 --> [3A] [8F] [05] [C5], written into the array from the back.

The array should be:

...and for the hex number 3A8F05C56 --> [03] [A8] [F0] [5C] [56]

Array:

Help, please!
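
As a language-neutral reference for the expected values, here is a minimal Python sketch. It assumes the incorrect result comes from double-precision floating point, which keeps only about 15 to 17 significant digits. The helper name `hex_byte_pairs` and the divisor are hypothetical, since the post gives neither.

```python
def hex_byte_pairs(n):
    """Return the hex digits of n grouped into two-character bytes."""
    digits = format(n, "X")
    if len(digits) % 2:              # odd length: pad on the left so pairs align from the back
        digits = "0" + digits
    return [digits[i:i + 2] for i in range(0, len(digits), 2)]


base, exponent = 982451653, 15
exact = base ** exponent             # Python integers are arbitrary precision
as_double = float(base) ** exponent  # what a 64-bit floating-point power gives

divisor = 1_000_000_007              # hypothetical divisor; the post does not state one
quotient, remainder = divmod(exact, divisor)

pairs = hex_byte_pairs(982451653)          # ['3A', '8F', '05', 'C5']
odd_pairs = hex_byte_pairs(0x3A8F05C56)    # ['03', 'A8', 'F0', '5C', '56']
reversed_pairs = pairs[::-1]               # last byte first, if the array is filled from the back
```

The exact power needs far more bits than a double carries, so any tool that computes it in floating point has to round. An exact result needs an arbitrary-precision integer type or a modular-exponentiation routine.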

-

Georaster: Import very large images with sdo_geor.importFrom

Hello

What is the best way to import very large images (> 50 GB) (GeoTIFF, TIFF, etc.) into a GeoRaster table? Actually, I don't have the image in a single file, but as 1 GB raster files.

Approach 1:

Import each 1 GB image with sdo_geor.importFrom, blocked into small tiles (blocking settings) within Oracle, and then merge the 50 rasters into one large raster object using SDO_GEOR.mosaic. Is this a recommended method?

Approach 2:

Create the actual tiles of all images with GDAL. Is it possible to import the tiles directly, with their meta-information, into a raster data table?

Thanks for your suggestions on this matter.

Yes, for Linux, you will need to compile it yourself. I did it without too much trouble on CentOS.

But the most straightforward approach is to download and install the latest QGIS for Windows. The bundled GDAL is up-to-date and includes the OCI hooks.

Cheers,

Paul

-

I use a network location with a drive mapped to an off-site FTP server; during the transfer of very large amounts of data the connection is always lost. The computer's power settings are configured never to sleep or shut anything down, so I'm assuming the FTP server may be enforcing a timeout. Is there a way for my computer and Windows to resume the file transfer?

Hello

Thanks for posting your question in the Microsoft Community forums. I see from the description that you have a networking problem with the FTP server. The question you posted would be better suited to the TechNet forums. I would post the query at the link below.

http://social.technet.Microsoft.com/forums/en/w7itpronetworking/threads

Hope this information helps you. If you need additional help or information on Windows, I'll be happy to help you.

-

Very large file upload > 2 GB with Adobe Flash

Hello, does anyone know how I can upload very large files with Adobe Flash, or how to use SWFUpload?

Thanks in advance, any help will be much appreciated.

If the error comes from PHP, it is a server limit, not a Flash limit.

You will need to change the upload limit in your php.ini or .htaccess file.

-

Dreamweaver CC on iMac very slow to open sites with a large number of files

In recent weeks, a site I've worked on regularly for several years began to take 10-15 minutes to open. A pop-up says it is checking the files, but the button that should let you cancel this process does not work. The site has about 120,000 files located in different folders, and this was never a problem in the past. The only way to access the site is to wait until the process ends and the spinning ball goes away. I read the thread on Skype port settings, but I think this may be a different problem because, even though I have Skype installed, I rarely run it and only one of my sites is affected.

Any ideas?

With a large number of files, it would be a good idea to disable the Dreamweaver cache for this site.

- Site > Manage Sites.

- Select the site, then click on the pencil icon to change site settings.

- Select Advanced Settings > Local Info in the list on the left of the Site Setup dialog box.

- Uncheck Enable Cache.

- Save > Done.

Another possibility is that your Dreamweaver cache is corrupted (quite likely with that many files). See "Remove a corrupt cache file".

-

Menus initially appear very large or very small

In the Firefox browser, if I click on a menu, it appears very large or very small. When I move the pointer over the menu, it changes to the right size. This has not happened before. Is there a solution?

Try Firefox Safe Mode to see how it works there.

It is a troubleshooting mode that disables most add-ons.

Troubleshoot Firefox issues using Safe Mode

When in Safe Mode...

- The State of plugins is not affected.

- Custom preferences are not affected.

- All extensions are disabled.

- The default theme is used, without a persona.

- userChrome.css and userContent.css are ignored.

- The layout of the default toolbar is used.

- The JIT Javascript compiler is disabled.

- Hardware acceleration is disabled.

- You can open Firefox 15.0+ in Safe Mode by holding the SHIFT key when you use the Firefox desktop or Start menu shortcut.

- Or use the Help menu item, "Restart with Add-ons Disabled...", while Firefox is running.

To exit safe mode of Firefox, simply close Firefox and wait a few seconds before using the shortcut of Firefox (without the Shift key) to open it again.

If it works well in Firefox Safe Mode, your problem is probably caused by an extension, and you need to figure out which one.

http://support.Mozilla.com/en-us/KB/troubleshooting+extensions+and+themes

Or it could be caused by hardware acceleration.

When you find out what is causing it, please let us know. It might help others who have this problem.

-

What is the purpose of several iTunes libraries? Is it because iTunes is slow with a large library?

I found the link on creating multiple iTunes libraries

Open a different iTunes library file or create a new one - Apple Support

while researching a way to make iTunes faster.

Normally a different iTunes library would be tied to a different user account.

Is this feature Apple's answer to iTunes being very slow with a large library? What is the solution?

xefned wrote:

Is this feature Apple's answer to iTunes being very slow with a large library? What is the solution?

No, and probably not. Some may choose several libraries as a way to manage multiple devices. Personally, I have my main library, another that I use only with iTunes Match, and several that I use to test iTunes behavior when I answer questions and want to get the details right. Most activities in iTunes cause the .itl file to be updated, and the .xml too if it is created. There is likely to be some kind of non-linear relationship between the size of the library and the time required to process updates. Each smart playlist must be updated when the data changes. The more smart playlists and playlist folders you have, the longer each activity takes. Avoid creating the .xml if you don't need it; the control is in iTunes > Preferences > Advanced.

TT2

-

Keeping two very large data stores synchronized

I am looking at options to keep a very large (potentially 400 GB) TimesTen (11.2.2.5) data store in sync between a production server and a standby.

Replication was ruled out because it does not support compressed tables, nor the table types that our closed-code application creates (without non-null PKs).

- I did some tests with smaller data stores to get indicative figures, and a 7.4 GB data store (according to dssize) resulted in a 35 GB backup set (using ttBackup -type fileIncrOrFull). Is this increase in size expected, and would it extrapolate to a 400 GB data store (a 2 TB backup set)?

- I have seen that there are incremental backups, but to keep our standby hot we would have to restore these backups, and from what I have read and tested only a ttDestroy/ttRestore is possible, that is, a full restore of the whole DSN every time, which takes time. Am I missing a smarter way to do this?

- Other than having our application keep the two data stores in sync as the data is loaded, are there any other tricks we can use to efficiently keep the two data stores synchronized?

- Random last question: I see "datastore" and "database" (and to a certain extent, "DSN") used apparently interchangeably. Are they the same thing in TimesTen?

Update: the 35 GB compresses down with 7za to slightly more than 2.2 GB, but takes 5.5 hours to do so. If I take a standalone fileFull backup, it is only 7.4 GB on disk and finishes faster too.

Thank you

rmoff.

Post edited by: rmoff - add additional details

This must be an Exalytics system, right? I ask because compressed tables are not allowed for use outside of an Exalytics system...

As you note, replication is currently not possible in an Exalytics environment, but that is likely to change in the future, and then it will certainly be the preferred mechanism for this. There is really no other viable way to proceed other than through the application.

With regard to your questions:

1. A backup consists mainly of the most recent checkpoint file, plus all the log files that are newer than that file. Thus, to reduce the size of a full backup, ensure that a checkpoint occurs (e.g. "call ttCkpt" in a ttIsql session) just before starting the backup.

2. No, only a full restore is possible from an incremental backup set. Also note that, due to the large amount of rollforward needed, restoring a large incremental backup set can take quite a long time. Backup and restore are not really intended for this purpose.

3. If you are unable to use replication, then some sort of application-level synchronization is your only option.

4. Data store and database mean the same thing: a physical TimesTen database. We prefer the term database these days; data store is a legacy term. A DSN is something different (Data Source Name) and should not be used interchangeably with data store/database. A DSN is a logical entity that defines the attributes of a database and how to connect to it. It is not the same as a database.

Chris

-

Values from a sequence are reaching a very large number

Hello

We have a table that has a column populated from a sequence. It is maintained by code in OWB, but it is growing at a higher rate than expected.

For example, say the sequence starts at 10000000000; we expect the next row to be created with 10000000001, but there is a big gap between the two.

We have a separate ticket raised with Oracle for this, as the code is maintained by the dimension operator.

However, I have two or three questions regarding sequences.

(1) How big can they get?

(2) If we query numbers that are over 14 digits, the tool that we use (PL/SQL Developer) starts to show them with an e (exponent notation). Is there a way to make sure that we see the whole integer (SQL*Plus?)

I'm assuming that if we had a very large number column, say 18 digits long, joined to another table's number column that is also 18 digits long, there would be no problem?

Thank you

If you take a look at the documentation for creating a sequence, you will see that the maximum value is 10 ^ 27, assuming that you do not specify a maximum. If you generated 10,000,000,000 (10 ^ 10) sequence values every second, you would not run out for 10 ^ 17 seconds, which is about 3 * 10 ^ 9 years (3,168,808,781, or roughly 3 billion years). The Earth is approximately 4.5 billion years old, so your sequence would not run out of values for about two-thirds of the current age of the Earth. For most systems, that is plenty.

In SQL * Plus, you can control the display of a column using the COLUMN command

SQL> ed
Wrote file afiedt.buf

  1* select 1e20 col from dual
  2  /

       COL
----------
1.0000E+20

SQL> column col format 999999999999999999999999999;
SQL> select 1e20 col from dual
  2  /

                         COL
----------------------------
       100000000000000000000

Justin
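
The back-of-the-envelope estimate in the answer above can be checked with a short Python sketch. The constant names are hypothetical, and the year length of 365.25 days is an assumption; the maximum value and the consumption rate are the figures quoted in the answer.

```python
MAX_SEQUENCE_VALUE = 10 ** 27        # default ceiling quoted in the answer
VALUES_PER_SECOND = 10 ** 10         # deliberately extreme consumption rate
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # assumption: Julian year

seconds_to_exhaust = MAX_SEQUENCE_VALUE // VALUES_PER_SECOND
years_to_exhaust = seconds_to_exhaust / SECONDS_PER_YEAR

EARTH_AGE_YEARS = 4.5e9              # approximate age used in the answer
fraction_of_earth_age = years_to_exhaust / EARTH_AGE_YEARS
```

Under these assumptions the sequence lasts a little over three billion years, so gaps caused by caching or skipped values are not a practical risk to the available range.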

-

BAD RESULTS WITH OUTER JOINS AND TABLES WITH A CHECK CONSTRAINT

Hi all,

Could anyone tell me when we would encounter this bug? Please help me with a simple example so that I can search for it in my DB.

Bug: 8447623

Bug description: BAD RESULTS WITH OUTER JOINS AND TABLES WITH A CHECK CONSTRAINT

I ran outer join queries against tables with a check constraint on 11g 11.1.0.7.0 and 10g R2, but the result is the same. I need to know the scenario in which I would hit this bug, from your experts and people who have already experienced it.

Version:

SQL> select * from v$version;

BANNER
--------------------------------------------------------------------------------
Oracle Database 11g Enterprise Edition Release 11.1.0.7.0 - 64bit Production
PL/SQL Release 11.1.0.7.0 - Production
CORE 11.1.0.7.0 Production
TNS for Solaris: Version 11.1.0.7.0 - Production
NLSRTL Version 11.1.0.7.0 - Production

Why do you not use the test case from the bug description in Metalink (we obviously can't post it here because it would violate Metalink's copyright)? Your test case is not a candidate for join elimination, so it does not hit the bug.

Have you actually read the bug description in Metalink rather than just looking at the bug title? The bug itself is quite clear that a query plan involving join elimination is a necessary condition. The title of a bug will never tell the whole story.

Are you trying to work through the tens of thousands of bugs in 11.1.0.7, many of which are not published, to determine whether your application would be affected by each one? Wouldn't it be orders of magnitude easier to upgrade the application to 11.1.0.7 in a test environment and test the application to see what, if anything, breaks? Understand that the vast majority of the problems that people experience during an upgrade are not the result of bugs; they are the result of documented changes in behaviour, such as changes in query plans. And among those who do encounter bugs, a relatively large fraction hit new ones. Even if you completed the Herculean task of checking every bug against your code base, that would not make the upgrade significantly easier. In addition, by the time you actually finished this analysis, Oracle would have released 3 or 4 new versions.

And at this stage, wouldn't it be wise to consider an upgrade to 11.2?

Justin

-

ADT does not work with a large number of files

Hello

I posted a similar question in the Flash Builder forum; it seems that these tools are not intended for anything too big. Here's my problem: I have an application that runs on the iPad very well, however I can't seem to package the resource files that it uses, such as flv, jpg, swf, and xml. There are a lot of these files, perhaps up to 1000. This app is not on the App Store and is only used internally in my company. I tried to package it using Flash Builder, but it could not do it, so now I have tried the adt command line tool. The problem is that it runs out of memory:

Exception in thread "main" java.lang.OutOfMemoryError

at java.util.zip.Deflater.init (Native Method)

at java.util.zip.Deflater.<init>(Unknown Source)

at com.adobe.ucf.UCFOutputStream.addFile(UCFOutputStream.java:428)

at com.adobe.air.ipa.IPAOutputStream.addFile(IPAOutputStream.java:338)

at com.adobe.ucf.UCFOutputStream.addFile(UCFOutputStream.java:273)

at com.adobe.ucf.UCFOutputStream.addFile(UCFOutputStream.java:247)

at com.adobe.air.ADTOutputStream.addFile(ADTOutputStream.java:367)

at com.adobe.air.ipa.IPAOutputStream.addFile(IPAOutputStream.java:161)

at com.adobe.air.ApplicationPackager.createPackage(ApplicationPackager.java:67)

at com.adobe.air.ipa.IPAPackager.createPackage(IPAPackager.java:163)

at com.adobe.air.ADT.parseArgsAndGo(ADT.java:504)

at com.adobe.air.ADT.run(ADT.java:361)

at com.adobe.air.ADT.main(ADT.java:411)

I tried to increase the Java heap size to 1400m, but that did not help. What is troubling is why, in this day and age, the adt tool is written only for 32-bit Java runtimes, when you can hardly buy a 32-bit computer anymore. Almost everything is now 64-bit. Being able to use a 64-bit Java runtime would eliminate the problem.

Has anyone else had to deal with a large number of files when packaging an iPad application?

Thank you

Hello

Could you post the bug number in the thread?

We fixed a few OOM bugs in AIR 2.7. Here's the release blog post: http://blogs.adobe.com/flashplayer/2011/06/adobe-air-2-7-now-available-ios-apps-4x-faster.html . Could you try to package your application with it and see if it works?

-Samia

-

How to get rid of the msg "... is very large and may take a long time to

Hello

Simple question:

How do I get rid of the msg "... is very large and might take a long time to load..."?

I tried to increase the number of cells under "App Settings -> Options -> Warn if data form larger than" to 5,000,000,000. I even tried '0'. Nothing works. Planning 9.3.1

Hello

This is how I have always changed the number of cells after which the message is displayed on data forms.

There are 2 levels of setting. You can configure it at the application level, but users must then select "Use Application Defaults" in their Planning user preferences. To do this, use File -> Preferences and select Planning.

Otherwise, you can set the number of cells at the user level for "Warn if data form larger than xxxx". That should solve the problem. This is the setting that applies if you have not selected the Use Application Defaults checkbox for the user in the Planning preferences.

If nothing else works, it is very difficult to guess; I would open an SR with Oracle.

Let me know if it helps.

Cheers

RS

-

Why does Firefox show very large print even after I customized it to be smaller?

I downloaded Firefox, and the fonts are very large and the screen is big too.

Check that you are not running Firefox in compatibility mode or with a reduced screen resolution.

You can open the properties of the Firefox desktop shortcut via the context menu and check under the "Compatibility" tab.

Make sure that all items are disabled in the "Compatibility" tab of the Properties window.

Try setting layout.css.devPixelsPerPx to 1.0 (default is -1) on the about:config page.

If necessary, adjust the value in steps of 0.1 or 0.05 (1.1 or 0.9) until icons and text look right.

You can open the about:config page via the address bar.

You can accept the warning and click on "I'll be careful" to continue.

You can also look at the Default FullZoom Level or NoSquint extension to set a default page zoom and font size on web pages.

- Default FullZoom Level: https://addons.mozilla.org/firefox/addon/default-fullzoom-level/

- NoSquint: https://addons.mozilla.org/firefox/addon/nosquint/

-

Web Acceleration Client error (513) - internal error

The Web Acceleration Client has detected an internal error that caused the connection between the Web Acceleration Client and the Web Acceleration Server to be broken. Retrying the web page may correct the problem.

I get this error all the time when working on the ancestry.com web site. I have to reload the page on almost every search I do on this site. It is the ONLY site where I get this error message; I can run uninterrupted for several hours on other sites and never get this message. I talked to the people at Ancestry.com support and they made 2 recommendations: turn off the antivirus (did not help) or switch to another web browser. I tried both IE 11 and Chrome Version 31.0.1650.63 m, and I do not get this error with either of these 2 browsers.

Is there a problem with the way Firefox and ancestry.com communicate?

Hello byron.lewis, many site problems can be caused by corrupted cookies or cache. To try to solve these problems, the first step is to clear cookies and cache.

Note: This will temporarily log you out of all sites you're logged in to.

To clear the cache and cookies, do the following:

- Go to Firefox > History > Clear Recent History or (if no Firefox button is displayed) go to Tools > Clear Recent History.

- Under "Time range to clear", select "Everything".

- Now, click the arrow next to Details to expand the Details list.

- In the list of details, check Cache and Cookies and uncheck everything else.

- Now click the Clear Now button.

More information can be found in the article "Clear your cache, history, and other personal information in Firefox".

Did this solve your problems? Please report back to us!

Thank you.
