Near real-time image processing

What is the best way to implement near "real-time" processing of images from two USB cameras? Capture, process, show the results, then capture, process, etc., until I choose to stop the program. I use LabVIEW with Vision Development Module 8.6. Which function can I use to capture the image?

With LabVIEW 8.6, you can get the NI-IMAQ for USB Cameras driver. It's very similar to the other IMAQ drivers, and examples are provided in this knowledge base article. To use several cameras, you just use two copies of the VIs.

I'm not sure whether you need more information than that, but let me know if you have any more specific questions I can help with.

Tags: NI Hardware

Similar Questions

-

Real-time audio processing: is it possible?

Hello, I am trying to port my existing synthesizer application to the PlayBook, and I can't seem to get audio streaming to work properly.

I created a separate thread for my audio processing that basically contains the code of the 'PlayWav' example, except that instead of reading the file it calls my process() function to generate the audio signal.

There are a few problems:

1. Constant underruns: if I just generate white noise with a simple rand() instead of calling my process() function, it works OK. As soon as I ask it to do a little work by running my synth code, it drops to roughly 10% sound and 90% stalls. The process function is demanding, but the same code runs fine at ~5-10% CPU load on an equivalent Android device.

I tried the following code to increase the priority of the audio thread. It didn't seem to do much, but it doesn't return any errors either.

    static pthread_attr_t s_AudioThreadAttr;
    int nPolicy;
    sched_param_t sch_prm;

    pthread_attr_init(&s_AudioThreadAttr);
    pthread_create(&s_AudioThread, &s_AudioThreadAttr, &myAudioThread, NULL);

    /* Raise the already-running thread to the highest SCHED_FIFO priority. */
    pthread_getschedparam(s_AudioThread, &nPolicy, &sch_prm);
    sch_prm.sched_priority = sched_get_priority_max(SCHED_FIFO);
    int nErrCheck = pthread_setschedparam(s_AudioThread, SCHED_FIFO, &sch_prm);
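The snippet above changes the priority after the thread is already running. A common alternative is to set the policy on the attribute object before pthread_create(). The sketch below is a minimal, general POSIX example and has not been checked against QNX/PlayBook specifics. `start_thread_with_policy` and `audio_worker` are hypothetical names, and real-time policies such as SCHED_FIFO usually need extra privileges.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stddef.h>

/* Hypothetical placeholder for the audio loop; it only records that it ran. */
static void *audio_worker(void *arg)
{
    *(int *)arg = 1;
    return NULL;
}

/* Create a thread whose policy and priority are set on the attributes
 * before it starts. Returns 0, or a pthread error code (for example EPERM
 * if the process may not use a real-time policy such as SCHED_FIFO). */
static int start_thread_with_policy(pthread_t *tid, void *(*fn)(void *),
                                    void *arg, int policy, int priority)
{
    pthread_attr_t attr;
    struct sched_param sp = { 0 };
    int err;

    assert(tid != NULL && fn != NULL);
    err = pthread_attr_init(&attr);
    if (err != 0)
        return err;

    /* With PTHREAD_INHERIT_SCHED (the Linux default, for instance) the new
     * thread copies the creator's scheduling and the values below are ignored. */
    err = pthread_attr_setinheritsched(&attr, PTHREAD_EXPLICIT_SCHED);
    if (err == 0)
        err = pthread_attr_setschedpolicy(&attr, policy);
    if (err == 0) {
        sp.sched_priority = priority;
        err = pthread_attr_setschedparam(&attr, &sp);
    }
    if (err == 0)
        err = pthread_create(tid, &attr, fn, arg);

    pthread_attr_destroy(&attr);
    return err;
}
```

For the audio thread you would pass SCHED_FIFO and, for example, sched_get_priority_max(SCHED_FIFO). Check the return value rather than assuming the request was honoured.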

2. Buffer sizes: I'm aiming for low-latency processing. The fragment size returned in the PCM plugin info is small (~15 ms), but the number of fragments returned by the plugin setup is very high. Here's the info printed by the sample code for my setup:

    SampleRate = 44100, channels = 2, SampleBits = 16
    Format Signed 16-bit Little Endian
    Frag Size 2820
    Total Frags 85
    Rate 44100
    Voices 2

If I'm reading this right, that's 85 x (2820 / 2 / sizeof(short)) = 59925 samples per channel across all the fragments. That's more than a second if playback waits for the complete buffer. I'm not 100% sure what that means, but if that is the delay between pressing a key and hearing the sound, I need to find another way.
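The arithmetic in that estimate can be written out as a small C helper. This is an illustrative sketch, not part of the PCM plugin API. `frames_per_fragment` and `buffer_latency_ms` are hypothetical names, and they assume interleaved samples and that all queued fragments must drain before a new sound is heard.

```c
#include <assert.h>
#include <stddef.h>

/* Audio frames (one sample per channel) held in one fragment. */
static size_t frames_per_fragment(size_t frag_bytes, unsigned channels,
                                  unsigned bytes_per_sample)
{
    assert(channels > 0 && bytes_per_sample > 0);
    return frag_bytes / (channels * bytes_per_sample);
}

/* Delay in milliseconds to play out `frags` full fragments at rate_hz. */
static double buffer_latency_ms(size_t frag_bytes, size_t frags,
                                unsigned channels, unsigned bytes_per_sample,
                                unsigned rate_hz)
{
    size_t frames = frames_per_fragment(frag_bytes, channels, bytes_per_sample) * frags;
    return 1000.0 * (double)frames / (double)rate_hz;
}
```

With the values printed above (2820-byte fragments, 2 channels, 16-bit samples, 44100 Hz), one fragment is 705 frames, about 16 ms. All 85 fragments come to 59925 frames, about 1.36 s, which matches the "more than a second" estimate.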

But there are apps that seem to play with little latency, so maybe I'm misreading the API.

Can someone help me please?

Sorry for wasting your time; the error was on my end. My process function still had timing synchronization code in it from the last device I ported the code to (it needed sync code there because of the terrible latency), and that was causing the unnecessary stalls.

The PlayWav example code now runs my synth very well, without any changes to scheduling or thread priority. As for latency, by changing the min/max number of fragments in that example from (1, -1) to (1, 4), I get 15 ms latency at 44 kHz stereo with no underruns, which is fine for now.

Apologies again and thanks for your help.

-

Best practices to increase the speed of image processing

Are there best practices for efficient image processing that will improve overall performance? I need to do near real-time image processing (thresholding, filtering, particle analysis/cleanup, and measurements) at 10 frames per second. So far I am not satisfied with my cycle time, so I wonder whether there are documented ways to speed up performance.

Hello

IMAQdx is only the driver; it is not directly related to image processing. IMAQ is the vision library. This function lets you use multiple cores for IMAQ functions to reduce processing time, since image processing is the most time-consuming task for your computer.
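To illustrate the general idea behind using multiple cores, here is a C sketch with POSIX threads. It is not IMAQ's implementation. It splits a binary threshold of an 8-bit grayscale image into horizontal bands, one per thread. The names `band_t` and `threshold_parallel`, the 16-thread cap, and the `>=` comparison are assumptions made for the example.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

/* One horizontal band of the image, handled by one worker thread. */
typedef struct {
    const uint8_t *src;
    uint8_t *dst;
    size_t width;
    size_t row_begin, row_end;   /* rows [row_begin, row_end) */
    uint8_t level;               /* pixels >= level become 255, others 0 */
} band_t;

static void *threshold_band(void *p)
{
    const band_t *b = p;
    for (size_t r = b->row_begin; r < b->row_end; ++r)
        for (size_t c = 0; c < b->width; ++c) {
            size_t i = r * b->width + c;
            b->dst[i] = (b->src[i] >= b->level) ? 255 : 0;
        }
    return NULL;
}

/* Threshold a width x height image using up to n_threads workers.
 * Returns 0, or a pthread error code (dst is then only partly written). */
static int threshold_parallel(const uint8_t *src, uint8_t *dst, size_t width,
                              size_t height, uint8_t level, unsigned n_threads)
{
    enum { MAX_THREADS = 16 };
    pthread_t tids[MAX_THREADS];
    band_t bands[MAX_THREADS];
    unsigned started = 0;
    int err = 0;

    assert(n_threads >= 1 && n_threads <= MAX_THREADS);
    size_t rows_per = (height + n_threads - 1) / n_threads;
    for (unsigned t = 0; t < n_threads; ++t) {
        size_t begin = t * rows_per;
        size_t end = (begin + rows_per < height) ? begin + rows_per : height;
        if (begin >= end)
            break;                       /* fewer rows than threads */
        bands[t] = (band_t){ src, dst, width, begin, end, level };
        err = pthread_create(&tids[t], NULL, threshold_band, &bands[t]);
        if (err != 0)
            break;
        ++started;
    }
    for (unsigned t = 0; t < started; ++t)
        pthread_join(tids[t], NULL);     /* wait for every band to finish */
    return err;
}
```

Each thread writes only its own rows of `dst`, so no locking is needed. At 10 frames per second, the whole chain (threshold, filtering, particle analysis) has to fit in about 100 ms per frame.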

Regards

-

Hello

I'm new to LabVIEW and trying to develop an eye-tracking system using LabVIEW 8.6. I have the Vision Development Module, and I was wondering whether this is enough for real-time image acquisition and processing, or whether I need other software tools.

Yes, but to acquire images from a webcam, you need the IMAQdx driver.

Take a look at this link:

http://digital.NI.com/public.nsf/allkb/0564022DAFF513D2862579490057D42E

Best regards

K

-

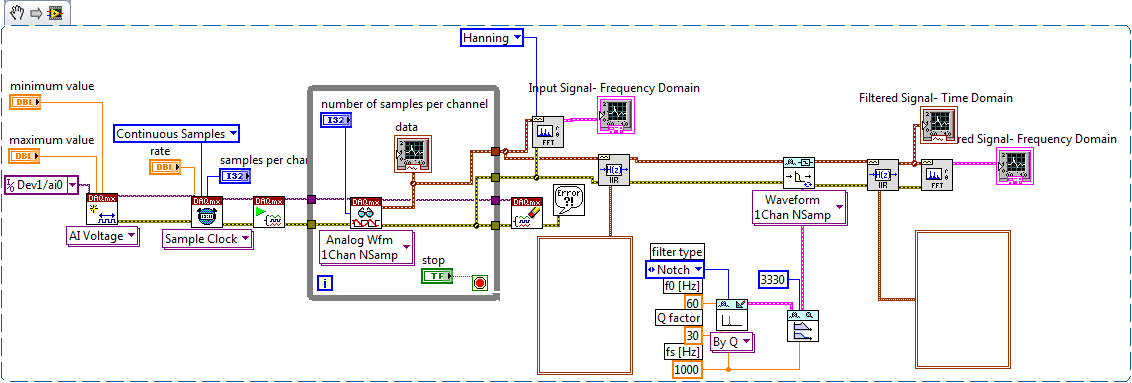

Continuous data acquisition and real-time analysis

Hi all

This is a VI for continuous acquisition of an ECG signal. As far as I understand, the DAQmx Read VI must be placed inside a while loop so it can acquire data continuously, and I need to filter and analyze the waveform in real time. The way I implemented the block diagram, the data stays in the while loop. AFAIK the data only passes out through the loop tunnels once the loop finishes executing, so this clearly isn't real-time processing.

The only way I can think of to fix this problem is to place another loop around the filtering VIs and use some sort of shift register to pass the data into the second while loop. My question is whether that would introduce some sort of delay, and whether it would still count as real-time processing. Wouldn't it be better to place all the VIs (acquisition and filtering) inside one while loop, or is that bad programming practice? Another feature I need is to save the data to a file, but only when the user wants to.

Any advice would be appreciated.

You have two options:

- A. As you said, you can place the processing code inside your current while loop. If you're careful, you won't need to put another while loop inside your existing one (nested loops). But it totally depends on the type of processing you do.

- B. Create a second parallel loop to do the processing. This separates the two processes so that the processing doesn't get in the way of your acquisition. For more information, see here.

Your choice really depends on the processing you plan to do. If it's very processor-intensive, it could introduce delays, as you said.

I would recommend that you start by putting everything in the first loop and checking whether your DAQ buffer overruns (you can monitor the buffer backlog while it runs). If it does, you should decouple the processing into a separate loop.
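Option B is essentially a producer/consumer design. The C sketch below uses POSIX threads, not LabVIEW, but shows its shape. The acquisition thread pushes samples into a bounded queue and the processing thread pops them, so processing can briefly fall behind without stalling the read. If it falls behind for longer than the queue can absorb, `q_push` blocks, which in a real DAQ loop would appear as a growing buffer backlog. `QCAP`, `N_SAMPLES`, the counting "samples" and the summing consumer are hypothetical placeholders, not DAQmx calls.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define QCAP 8          /* hypothetical queue capacity between the loops */
#define N_SAMPLES 100   /* hypothetical number of samples to acquire */

typedef struct {
    double data[QCAP];
    size_t head, count;
    int done;                         /* set when the producer stops */
    pthread_mutex_t lock;
    pthread_cond_t not_empty, not_full;
} queue_t;

static void q_push(queue_t *q, double v)
{
    pthread_mutex_lock(&q->lock);
    while (q->count == QCAP)          /* wait while the consumer lags */
        pthread_cond_wait(&q->not_full, &q->lock);
    q->data[(q->head + q->count) % QCAP] = v;
    q->count++;
    assert(q->count <= QCAP);
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
}

/* Returns 1 with a value, or 0 once the producer is done and the queue is empty. */
static int q_pop(queue_t *q, double *out)
{
    int ok;
    pthread_mutex_lock(&q->lock);
    while (q->count == 0 && !q->done)
        pthread_cond_wait(&q->not_empty, &q->lock);
    ok = q->count > 0;
    if (ok) {
        *out = q->data[q->head];
        q->head = (q->head + 1) % QCAP;
        q->count--;
        pthread_cond_signal(&q->not_full);
    }
    pthread_mutex_unlock(&q->lock);
    return ok;
}

static void q_finish(queue_t *q)      /* tell the consumer no more data is coming */
{
    pthread_mutex_lock(&q->lock);
    q->done = 1;
    pthread_cond_broadcast(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
}

/* Acquisition loop: stands in for the DAQmx read, producing 1..N_SAMPLES. */
static void *producer(void *arg)
{
    queue_t *q = arg;
    for (int i = 1; i <= N_SAMPLES; ++i)
        q_push(q, (double)i);
    q_finish(q);
    return NULL;
}

typedef struct { queue_t *q; double sum; } consumer_arg_t;

/* Processing loop: filtering/analysis would go here; this one just sums. */
static void *consumer(void *arg)
{
    consumer_arg_t *c = arg;
    double v;
    while (q_pop(c->q, &v))
        c->sum += v;
    return NULL;
}

/* Runs both loops to completion; returns the consumer's result or -1.0. */
static double run_pipeline(void)
{
    queue_t q = { .head = 0, .count = 0, .done = 0 };
    consumer_arg_t c = { &q, 0.0 };
    pthread_t prod, cons;
    int ok = 0;

    pthread_mutex_init(&q.lock, NULL);
    pthread_cond_init(&q.not_empty, NULL);
    pthread_cond_init(&q.not_full, NULL);
    if (pthread_create(&cons, NULL, consumer, &c) == 0) {
        if (pthread_create(&prod, NULL, producer, &q) == 0) {
            pthread_join(prod, NULL);
            ok = 1;
        } else {
            q_finish(&q);             /* no producer: release the consumer */
        }
        pthread_join(cons, NULL);
    }
    pthread_cond_destroy(&q.not_full);
    pthread_cond_destroy(&q.not_empty);
    pthread_mutex_destroy(&q.lock);
    return ok ? c.sum : -1.0;
}
```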

As for whether "it would be considered real-time processing", that is a trick question. Many people on these forums will say that your system is NEVER real time because you're using a desktop PC to do the processing (I assume this code runs on a laptop or desktop?). It is not a deterministic system, and your data is already "old" by the time it leaves your DAQ buffer. But the answer really depends on how you define "real-time processing". Many laypeople would define it as processing "live" data... but what is "live data"?

-

Vision in real time with USB2?

Hey guys,

I'm stuck deciding on an image acquisition method.

My project requires real-time imaging, but it runs on a netbook, so I'm limited to USB. Ethernet is only 100 Mbit, so GigE is out.

I tried a few consumer-level USB cameras I had lying around, and all of them seem to have about a half-second lag in all lighting conditions. Is there a solution for me?

I tend to avoid USB for vision acquisition, and I don't know what its limits are. I do know that most consumer webcams are not good enough for machine vision. There are a few industrial USB cameras you might want to look at.
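To check whether a link can carry a camera's uncompressed stream at all, it helps to work out the raw data rate. The C helpers below are a back-of-the-envelope sketch. The resolutions and bit depths are example values, not the poster's cameras, and the results are payload rates only; real links lose some capacity to protocol overhead.

```c
#include <assert.h>

/* Raw (uncompressed) video data rate in megabits per second. */
static double raw_video_mbps(unsigned width, unsigned height,
                             unsigned bits_per_pixel, double fps)
{
    assert(width > 0 && height > 0 && bits_per_pixel > 0);
    return (double)width * height * bits_per_pixel * fps / 1e6;
}

/* Highest frame rate a link of link_mbps could carry, ignoring overhead. */
static double max_fps_for_link(unsigned width, unsigned height,
                               unsigned bits_per_pixel, double link_mbps)
{
    return link_mbps * 1e6 / ((double)width * height * bits_per_pixel);
}
```

For example, 8-bit 640 x 480 at 30 fps needs about 73.7 Mbit/s, so a 100 Mbit/s link tops out near 40 fps before overhead. 24-bit colour at the same size and rate needs about 221 Mbit/s.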

Can you put a FireWire card in your netbook? That would probably be your best option, because there are many FireWire cameras and they are very easy to use.

Bruce

-

RIO real-time image processing

I'm designing an autonomous robot using an NI RIO. It detects objects only by looking at them; that is, no sensors are used, only image processing.

The problem is that I'm not sure whether a cRIO or sbRIO can handle this type of image processing, since the processing has to run on an embedded system. Also, do these RIOs support the IMAQ image processing blocks?

Kind regards

Adeel Amin.

Hello Adeel, IMAQ Vision is supported on the cRIO and sbRIO; be sure to install IMAQ Vision on your platform. You can check this news item: http://digital.ni.com/worldwide/bwcontent.nsf/web/all/F3DD9A90E45DAEE3862576BE007E2926

That message links to a tutorial. You can use a GigE camera, or an analog camera via a Movimed module.

However, be aware that a cRIO or sbRIO target will not perform as well as a PC for image processing tasks.

Greetz,

Ben Engelen Mechanization of GTD - Vision & measure

Innovative applications Philips NV

Steenweg op Gierle 417

BE-2300 Turnhout, Belgium

Tel: 0032 14/40.70.80

Visit us at www.philips.com/mechanization

-

Torch: will OpenVG increase the speed of real-time image scaling/rotation?

Hello!

I have the following problem. I need to display a satellite map (raster, not vector) with the ability to zoom in real time. The map is a bitmap of about 1500 x 1500.

I'm doing the scaling myself, which takes 480 * 360 operations to render part of the map at a given scale, but that's not enough: it is too slow for smooth real-time zooming.

Does anyone know whether it's possible to increase the speed by using OpenVG? (OpenGL is not supported on the Torch.)
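For comparison, the CPU-side work described here (presumably one lookup per pixel of a 480 x 360 view) amounts to something like the nearest-neighbour loop below. It is a generic C sketch, not the poster's code and not a BlackBerry API. `scale_view` and `clamp_index` are hypothetical names, and 32-bit pixels are assumed. OpenVG's appeal is that it is designed to let graphics hardware do this kind of work instead.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp v into [0, hi - 1]. */
static int clamp_index(int v, int hi)
{
    return v < 0 ? 0 : (v >= hi ? hi - 1 : v);
}

/* Render a dst_w x dst_h view of a map_w x map_h bitmap with
 * nearest-neighbour sampling. (x0, y0) is the map pixel at the view's
 * top-left corner; `scale` is map pixels per view pixel
 * (scale < 1 zooms in, scale > 1 zooms out). */
static void scale_view(const uint32_t *map, int map_w, int map_h,
                       uint32_t *dst, int dst_w, int dst_h,
                       double x0, double y0, double scale)
{
    assert(map_w > 0 && map_h > 0 && scale > 0.0);
    for (int y = 0; y < dst_h; ++y) {
        int sy = clamp_index((int)(y0 + y * scale), map_h);
        const uint32_t *row = map + (size_t)sy * (size_t)map_w;
        for (int x = 0; x < dst_w; ++x) {
            int sx = clamp_index((int)(x0 + x * scale), map_w);
            dst[(size_t)y * (size_t)dst_w + (size_t)x] = row[sx];
        }
    }
}
```

On a 1500 x 1500 map, calling it with scale = 0.5 blows up a 240 x 180 region to fill a 480 x 360 view.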

Thank you for your attention.

I implemented it with OpenVG: drawing a ~1500 x 1500 px image and rotating and scaling it in real time works pretty fast.

So if anyone needs fast image processing, use OpenVG.

-

Lightroom 5 Develop module does not display changes in real time (stuck on original image)

After almost a year of using Lightroom 5 (version 5.7.1) with no problems, it now will not show the changes I make in the main Loupe view. The thumbnail in the filmstrip does change. The Navigator view goes dark as soon as a change is made. If I have the secondary monitor active, it shows the changes in real time (because of the secondary monitor's quality, I don't use it for editing). If I go back to the Library Loupe view, the changes made in the Develop module are shown. I can also make changes and see them in real time in the Library module's Quick Develop panel. If I go back to the Develop module, it shows the updated (edited) image, but then stays stuck on that image in the Loupe view. I strongly suspect I accidentally pressed some key combination that caused this, but I can't pinpoint exactly when; the problem started about 5 days ago.

The problem happens with every image; I tried several images, old and new. The only software change I made in the last 30 days was installing Piccure+ 30 days ago, and I have since removed it as a precaution, with no change. I have also taken these additional steps: 1) reset preferences (twice); 2) reinstalled the software twice, the second time deleting all the support/preset files except the current catalog; and 3) used backup catalogs dating back to well before the problem appeared. I am running Windows 7 Professional with Service Pack 1, 64-bit.

Any suggestions greatly appreciated. I feel like I'm running out of things to try. Thanks - Jeff

This could be caused by a defective monitor profile.

As a diagnostic step, and possibly a temporary fix, try setting the monitor profile to sRGB.

Go to Control Panel > Color Management and set sRGB as the default profile.

If this solves the problem, you should ideally calibrate your monitor with a hardware calibrator.

-

Letting the user zoom in/out of an image in real time on the front panel?

Well, I have searched for this and come up empty. What I'd like is to have a picture of a diagram on the front panel and, while the VI runs, let the user zoom in/out of the image in real time.

I know there is something called Zoom Factor that you set in the block diagram, but that seems to zoom in on the picture before the program runs. The zoom is not in real time. As far as I can see, Zoom Factor gives the user no way to zoom in/out freely. (Sorry, I forgot the name of the display control.) I also saw posts by someone named George Zou, who seems to have a VI that is closest to what I want. But the VI I pulled from the site doesn't seem to be compatible with my computer (I'm currently on XP with LabVIEW 2013). So I was wondering if anyone else has found other answers that fit my requirements?

Use an event structure to change the magnification at run time.

You can even program your own shortcuts in the event structure. For example, if the user clicks on a particular point of the picture, you read that coordinate and zoom in so that this point stays in the center... There are many options to play with...
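The "zoom so the clicked point stays in the center" idea comes down to a little coordinate arithmetic. The event structure would supply the click coordinates. The C sketch below shows one way to do the math. `point2`, `screen_to_image` and `center_on` are hypothetical names, and it assumes the zoom is expressed as screen pixels per image pixel.

```c
#include <assert.h>

typedef struct { double x, y; } point2;

/* Convert a click at screen pixel (sx, sy) into image coordinates, given the
 * image point shown at the view's top-left (origin) and the current zoom. */
static point2 screen_to_image(point2 origin, double zoom, double sx, double sy)
{
    assert(zoom > 0.0);
    point2 p = { origin.x + sx / zoom, origin.y + sy / zoom };
    return p;
}

/* Keep the visible span [o, o + visible) inside [0, extent) where possible. */
static double clamp_origin(double o, double visible, double extent)
{
    if (o > extent - visible) o = extent - visible;  /* past the far edge */
    if (o < 0.0) o = 0.0;                            /* past the near edge */
    return o;
}

/* Top-left origin that centres image point c in a view_w x view_h view at
 * the given zoom, kept inside an img_w x img_h image. */
static point2 center_on(point2 c, double zoom, double view_w, double view_h,
                        double img_w, double img_h)
{
    assert(zoom > 0.0);
    double vis_w = view_w / zoom, vis_h = view_h / zoom;  /* visible image area */
    point2 o = { clamp_origin(c.x - vis_w / 2.0, vis_w, img_w),
                 clamp_origin(c.y - vis_h / 2.0, vis_h, img_h) };
    return o;
}
```

On each click, convert the click with `screen_to_image` using the current origin and zoom, change the zoom, then compute the new origin with `center_on`. Near the edges the clamping keeps the view inside the image, so the point can't be exactly centred there.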

-

Digital signal processing: Real-Time or FPGA?

I am new to Real-Time and FPGA (I got the 08-2012 starter kit, but only got all the software installed a few days ago). I saw that both the LabVIEW FPGA module and the LabVIEW Real-Time module offer digital signal processing functions. When a function is supported on both, what criteria should we use to decide which one to go with?

Much of the decision will probably depend on the type of signal you are handling. If you have not worked with either one, Real-Time is probably easier to start with. On the other hand, FPGA may be better if you need more demanding processing or processing on a point-by-point basis.

There is a part of the NI community dedicated to signal processing and analysis. I would look at some of the examples and discussions there to get an idea of what your application might need.
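"Point-by-point" here means each new sample goes in and a filtered sample comes straight out, with a fixed amount of work per sample. The C sketch below illustrates the idea with a running moving average. It is not LabVIEW FPGA code or an NI point-by-point VI, and the window length `MA_LEN` and the names `moving_avg_t` and `ma_step` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define MA_LEN 4  /* hypothetical window length */

typedef struct {
    double window[MA_LEN];  /* last MA_LEN samples, used as a ring buffer */
    double sum;             /* running sum of the samples in the window */
    size_t pos, filled;
} moving_avg_t;

/* Feed one new sample and get the filtered value back immediately. */
static double ma_step(moving_avg_t *m, double x)
{
    if (m->filled == MA_LEN)
        m->sum -= m->window[m->pos];   /* drop the oldest sample */
    else
        m->filled++;
    m->window[m->pos] = x;
    m->sum += x;
    m->pos = (m->pos + 1) % MA_LEN;
    assert(m->filled > 0);
    return m->sum / (double)m->filled;
}
```

Start from a zeroed state (`moving_avg_t m = {0};`). The running sum keeps the cost constant per sample. Over very long runs in floating point it can drift slightly, which is one reason FPGA designs often use fixed-point arithmetic for this.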

https://decibel.NI.com/content/groups/signal-processing-and-analysis

-

Subtract a template image from real-time video and display the subtracted image/video

Hi all

I have a case where I need to acquire video of a circular loop from a camera, which I can do with the Vision Development Module (in AVI format). I then need to subtract the standard template image of the loop from it and display the result of the subtraction for each frame. The subtraction will tell me how much movement there was in the loop in real time. Is this possible?

I don't want to save any image or video; I just want to show the real-time subtraction to the user.

I've attached the image; I'm not yet able to add the video.

Hello AnkitG,

The IMAQ Subtract VI subtracts one image from another, or a constant from an image. You can then display the result to the user or save the images if necessary. Here is a link to the IMAQ Subtract VI reference.
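To show what the per-frame subtraction and a "how much moved" number could look like, here is a C sketch. It is not IMAQ Subtract's implementation. It assumes 8-bit grayscale frames and uses the absolute difference, so movement that makes pixels brighter or darker both count. `abs_diff` is a hypothetical name, and summing the differences is just one crude movement score.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* dst[i] = |frame[i] - model[i]| for n-pixel 8-bit grayscale images.
 * Returns the sum of all differences as a crude movement score. */
static uint64_t abs_diff(const uint8_t *frame, const uint8_t *model,
                         uint8_t *dst, size_t n)
{
    uint64_t total = 0;
    assert(frame != NULL && model != NULL && dst != NULL);
    for (size_t i = 0; i < n; ++i) {
        int d = (int)frame[i] - (int)model[i];   /* may be negative */
        if (d < 0)
            d = -d;
        dst[i] = (uint8_t)d;                     /* 0..255, displayable */
        total += (uint64_t)d;
    }
    return total;
}
```

Calling it once per acquired frame against the stored template gives both an image to display and a single number that rises with movement, without saving anything to disk.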

-

I had my computer checked by professionals and they added Malwarebytes. My MSE keeps turning real-time protection off almost every day, and it takes 2 or more tries to turn it back on.

Original title: MICROSOFT SECURITY ESSENTIALS AND MALWAREBYTES ARE OK, YES or NO

There are 2 types of Malwarebytes:

A free edition

A Malwarebytes Pro edition, which you have to buy. Ask those "pros" which one they added to your computer.

Have them also disable Malwarebytes' real-time protection. I use MSE as my real-time protection and Malwarebytes (free version) as my on-demand scanner.

On-demand means it doesn't run until I start it, and it stops when it finishes scanning.

-

I have 2 questions related to LabVIEW Real-Time. I'm using LabVIEW 2010 Service Pack 1. We have a PXI-8186 controller running LabVIEW Real-Time.

(1) My software communicates using TCP/IP. The real-time software runs 'TCP Create Listener' and then 'TCP Wait On Listener' to look for connections from the computer. This works well from the LabVIEW project. I then build the executable and deploy it to the real-time system, set to run at startup. I set the target name to my program name, "Test program.rtexe", rather than "startup.rtexe". I don't know if that makes a difference; I saw other files with other names in the startup folder of the real-time system. My questions are: can the file have any name we want? Does the real-time system run every program in the startup folder, or is there another way to specify which programs run?

(2) Is there a way to monitor or see which programs are running on the real-time system, like Task Manager on Windows? I ask because after I restart the PXI system, without using the project, I can't connect to my test program on the real-time system. I don't know whether the software is running, or whether it set up the network interface it was supposed to, etc., so I can't work out why I cannot connect to it. Any help on methods to determine whether the software is actually running would be useful.

Thanks for any help you can give.

Bill

Finally found the problem.

It turns out that, at least on the 2 controllers we have here, the BIOS has a setting that disables running the startup VI on the real-time system. Once I set this flag to 'No', everything works. The setting is:

'Disable Startup VI' on the 'LabVIEW RT' tab of the BIOS.

It is a useful option if the startup VI is corrupted or was not debugged before deployment.

-

I want the USB camera to stream into one image buffer (image0), with simple real-time image processing into another (image1).

After processing, I want to copy the image to another buffer, image2.

However, I have observed some interference between the buffers.

For example, I copied image1 into the image2 buffer. Whenever the image2 buffer is modified, image1 also changes.

I suspect a mistake in how I handle the image refnums, but I can't find it.

Before posting a screenshot of my code, I want to check your comments again, because LV is not installed on this computer.

Anyway, what are the rules for defining and processing image buffers?

Should I create a new image buffer every time I change the image?

I found a few examples that create image buffers inside the loop without disposing of the refnums.

Is that safe for the system's memory?

Should the image reference be passed through a shift register?

labmaster, using LV2011 (no SP1)

Which image buffers are you referring to? IMAQ images?

If so (or even if not), these aren't ordinary LabVIEW wires. They act more like pointers than like a wire that holds the data. So if you wire image buffer 1 into image buffer 2, both actually point to the same image area. To copy data between buffers, there is an IMAQ Copy function, which puts the data of the image referenced by buffer 1 into the image space referenced by buffer 2. (I say "image space" rather than "array" because IMAQ images are more complex than just a chunk of memory.)
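The difference between passing a reference and copying the data is easy to show with plain C pointers. The sketch below is an analogy only. `image_t`, `image_create`, `image_copy` and `image_dispose` are hypothetical stand-ins, not IMAQ's internals or API. Copying the handle gives two names for one image; a deep copy gives an independent image.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for an image: a handle to pixel memory. */
typedef struct {
    size_t w, h;
    unsigned char *pixels;
} image_t;

static image_t *image_create(size_t w, size_t h)
{
    image_t *img = malloc(sizeof *img);
    if (img == NULL)
        return NULL;
    img->w = w;
    img->h = h;
    img->pixels = calloc(w * h, 1);        /* zero-filled pixel buffer */
    if (img->pixels == NULL) {
        free(img);
        return NULL;
    }
    return img;
}

static void image_dispose(image_t *img)
{
    if (img != NULL) {
        free(img->pixels);
        free(img);
    }
}

/* Deep copy: dst gets its own buffer holding src's pixels. Returns 0 on success. */
static int image_copy(const image_t *src, image_t *dst)
{
    size_t n = src->w * src->h;
    assert(n > 0);
    unsigned char *p = realloc(dst->pixels, n);
    if (p == NULL)
        return -1;
    memcpy(p, src->pixels, n);
    dst->pixels = p;
    dst->w = src->w;
    dst->h = src->h;
    return 0;
}
```

Writing `image_t *image2 = image1;` is the equivalent of wiring one buffer into the other, so changes made through either name show up in both. `image_copy(image1, image2)` between two separately created images behaves like the copy described above.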

You might also be looking for

-

How do I check the renewal date of my paid iCloud subscription?

I'm completely lost. I'm sure I checked everywhere (iCloud settings, Apple ID account info, the 'Manage Subscriptions' menu, etc.), but I cannot find the renewal date of my 50 GB extra storage subscription. Of course, I could search my mail inbox, but I well

-

Restore the Satellite C50A to factory settings

I tried to reset the computer, but the screen shuts off and nothing happens. Any ideas, please?

-

Strange values on the output of this simple buffer.

-

Mobsync.exe starts and stops repeatedly when running DirectX games

Whenever I start a DirectX game, the mobsync.exe process starts and stops. It causes lag in the game and plays the "dee-dum" sound, as if I were connecting and disconnecting a USB device. I changed everything in the system for hardware. New

-

Having problems with administrative privileges on the computer

I have only one user account on my PC, and the system won't let me delete programs because I'm not the admin. Help! What's wrong?