ODI - produce .txt or .csv file output

Hi gurus, I'm new to ODI and have hit a problem that I hope someone can help me with!

I have a table in an MS SQL (2005) data store, and I would like to use ODI to produce a .txt or .csv file as output. I have looked at the documentation, but I couldn't find an obvious way to do it. Can someone please advise how this can be done?

Thank you very much

Joe

Hey Joe,

Here is a link to an Oracle by Example (OBE) tutorial explaining how to load a flat file:

http://www.Oracle.com/WebFolder/technetwork/tutorials/OBE/FMW/ODI/odi_11g/odi_project_ff-to-FF/odi_project_flatfile-to-flatfile.htm

You just need to change the LKM to choose one for MS SQL.

Kind regards

Jerome

Tags: Business Intelligence

Similar Questions

-

Snapshot a list of VMs via a txt or csv file

Hello

I want to create a workflow that takes a snapshot of each VM in a list; this list of virtual machines must come from a txt or csv file.

The file will either be requested when I run the workflow (browse for file), or a UNC path will already be configured in the workflow.

Does anyone have an idea?

Here is example code from a workflow I wrote for vCloud Director 1 that imports users from a csv file that has been uploaded to the vCO server. The variable "csvFile" is my MimeAttachment input; the server has been configured to allow read/write in the folder c:\orchestrator...

var csvContent = csvFile.content;
var csvFileName = "c:\\Orchestrator\\csvFile.csv";
csvFile.write("c:\\Orchestrator\\", "csvFile.csv");
System.log("csvFileName: " + csvFileName);
var host = organization.parent;
// Now use file to create FileReader object:
var fileReader = new FileReader(csvFileName);
if (fileReader.exists) {
    fileReader.open();
    var line = fileReader.readLine();
    System.log("Headers: \n" + line);
    line = fileReader.readLine();
    while (line != null) {
        var lineValues = line.split(",");
        var username = lineValues[0];
        var password = lineValues[1];
        var enabled = lineValues[2];
        var role = lineValues[3];
        var fullname = lineValues[4];
        var email = lineValues[5];
        var phone = lineValues[6];
        var im = lineValues[7];
        var storedvmquota = lineValues[8];
        var runningvmquota = lineValues[9];
        var vcdRole = System.getModule("com.vmware.pso.vcd.roles").getRoleByName(host, role);
        System.log("Role name found: " + vcdRole.name);
        System.log("Attempting to create User Params");
        var userParams = System.getModule("com.vmware.library.vCloud.Admin.User").createUserParams(
            username, fullname, enabled, false, email, phone, null, password,
            vcdRole, false, runningvmquota, false, storedvmquota);
        var userOut = System.getModule("com.vmware.library.vCloud.Admin.User").createUser(organization, userParams);
        userOut.im = im;
        userOut.update();
        System.log(userOut.name + " imported...");
        line = fileReader.readLine();
    }
    fileReader.close();
    // now cleanup by deleting the uploaded file from the server
    var file = new File(csvFileName);
    System.log("Filename: " + file.name);
    deleteFile(csvFileName);
} else {
    System.log("Error! File not found! " + csvFileName);
}

function deleteFile(fileName) {
    var file = new File(fileName);
    if (file.exists) {
        file.deleteFile();
    }
}

And here is a code example that gets a VC:VirtualMachine object by VM name, where "vmName" is the string input containing the name to search for and "vCenterVM" is the resulting VC:VirtualMachine object:

var vms = VcPlugin.getAllVirtualMachines(null, "xpath:name='" + vmName + "'");
var vCenterVM = null;
if (vms != null) {
    if (vms.length == 1) {
        System.log("Match found for vm named: " + vmName);
        vCenterVM = vms[0];
    } else {
        System.log("More than one VM found with that name! " + vmName);
        for each (vm in vms) {
            System.log("VM ID: " + vm.id);
        }
    }
}

-

.txt or CSV file to the database

Hi-

I have a text file generated by a pulse oximeter. What I want is that, as soon as the system receives the file (CSV or .txt), it is automatically loaded into a MySQL or SQL Server database and an SMS message is generated. Thank you

Regards

Tayyab Hussain

Web services involve synchronous communication. However, the use case you describe means that asynchronous, so-called fire-and-forget, communication is better suited to your needs.

For example, with synchronous communication, the system does not know the exact moment at which a TXT or CSV file will arrive. Also, while the system processes a file, all other processing waits until it finishes. This can cause synchronization problems if multiple files enter the system in quick succession.

ColdFusion has an asynchronous solution that fits the requirements of your use case. The following approach will allow you to receive the file, load the data automatically, and generate an SMS message. Use the DirectoryWatcher gateway in ColdFusion to monitor the directory in which the TXT or CSV files are dropped, and the ColdFusion SMS event gateway to send SMS messages.

Whenever a third party drops a file in the directory, an event is triggered, setting the DirectoryWatcher in action. Put a query in the onAdd method of the DirectoryWatcher's listener CFC to store the file contents in the database. After the query, use the sendGatewayMessage function to send the SMS message.
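
The watch-store-notify flow described above can be sketched outside ColdFusion as well. The following is only a rough Python illustration of the same idea, not the ColdFusion gateway API: the function names, the `readings` table, and the `send_sms` stub are all assumptions made for the example, and an in-process SQLite database stands in for MySQL or SQL Server.

```python
import sqlite3
from pathlib import Path


def send_sms(message: str) -> None:
    """Hypothetical stand-in for a real SMS gateway call; it only prints."""
    print(f"SMS: {message}")


def process_new_files(inbox: Path, db: sqlite3.Connection, seen: set) -> int:
    """Store every not-yet-seen .csv/.txt file from `inbox` in the database.

    Returns the number of files handled in this pass.
    """
    db.execute("CREATE TABLE IF NOT EXISTS readings (source TEXT, line TEXT)")
    processed = 0
    for path in sorted(inbox.iterdir()):
        if path.suffix.lower() not in {".csv", ".txt"} or path.name in seen:
            continue
        # Keep each non-empty line as-is; the real column layout is unknown here.
        rows = [(path.name, line)
                for line in path.read_text().splitlines() if line.strip()]
        db.executemany("INSERT INTO readings VALUES (?, ?)", rows)
        db.commit()
        seen.add(path.name)
        send_sms(f"{path.name}: {len(rows)} rows stored")
        processed += 1
    return processed
```

Calling `process_new_files` from a loop with a short sleep between passes gives a simple polling watcher; an event-driven watcher would avoid the polling delay.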

-

Hello, I have a working SELECT statement and I am trying to adapt an old CSV output script I've used before, but I get the following error:

SQL> @$ORBEXE/sup.sql

TYPE wk_tab IS TABLE OF read_input%ROWTYPE

***

ERROR at line 98:

ORA-06550: line 98, column 4:

PL/SQL: ORA-00933: SQL command not properly ended

ORA-06550: line 8, column 1:

PL/SQL: SQL statement ignored

There are probably a few more errors, but it starts with this one.

Thank you, CP

DECLARE
  v_count NUMBER(10) := 0;
  EXEC_file UTL_FILE.FILE_TYPE;
  CURSOR read_input IS
    SELECT COALESCE(ilv1.btr_pla_refno,ilv2.pla_refno,ilv3.PLP_PLA_REFNO) refno,
           ilv1.par_per_surname,
           ilv1.adr_line_all,
           ilv1.description,
           ilv1.SCHEME_NO,
           ilv1.btr_cpa_cla_refno,
           ilv1.susp
          ,ilv2.pla_par_refno,
           ilv3.PLP_BAK_ACCOUNT_NUMBER
    FROM (
      select distinct benefit_transactions.btr_cpa_cla_refno
            ,parties.par_per_surname
            ,addresses.adr_line_all
            ,rbx151_schemes_data.description
            ,rbx151_schemes_data.SCHEME_NO
            ,btr_pla_refno
            ,nvl2 (claim_parts.cpa_suspended_date, 'Y', 'N') AS SUSP
      from fsc.address_usages
          ,fsc.address_elements
          ,fsc.addresses
          ,fsc.parties
          ,fsc.properties
          ,claim_periods
          ,benefit_transactions
          ,rbx151_schemes_cl
          ,rbx151_schemes_data
          ,claim_roles
          ,claim_property_occupancies
          ,claim_hb_payment_schemes
          ,claims
          ,claim_parts
      where address_elements.ael_street_index_code = addresses.adr_ael_street_index_code
      and addresses.adr_refno = address_usages.aus_adr_refno
      and properties.pro_refno = address_usages.aus_pro_refno
      and properties.pro_refno = claim_property_occupancies.cpo_pro_refno
      and rbx151_schemes_cl.scheme_no = rbx151_schemes_data.scheme_no
      and claim_roles.cro_crt_code = 'CL'
      and claim_roles.cro_end_date is null
      and claim_periods.cpe_cpa_cla_refno = claim_roles.cro_cla_refno
      and parties.par_refno = claim_roles.cro_par_refno
      and claim_property_occupancies.cpo_cla_refno = claim_periods.cpe_cpa_cla_refno
      and claim_property_occupancies.cpo_cla_refno = benefit_transactions.btr_cpa_cla_refno
      and claim_periods.cpe_cpa_cla_refno = benefit_transactions.btr_cpa_cla_refno
      and benefit_transactions.btr_cpa_cla_refno = rbx151_schemes_cl.claim_no
      and claim_roles.cro_cla_refno = claim_property_occupancies.cpo_cla_refno
      and claim_periods.cpe_cpo_pro_refno = rbx151_schemes_cl.pro_refno
      and claim_periods.cpe_cpa_cpy_code = 'HB'
      and claim_periods.cpe_cps_code = 'A'
      and claim_periods.cpe_cpa_cpy_code = benefit_transactions.btr_cpa_cpy_code
      and rbx151_schemes_cl.claim_no like '406%'
      -- and benefit_transactions.btr_cpa_cla_refno = '307801231'
      -- and parties.par_per_surname is not null
      and claim_property_occupancies.cpo_pro_refno = rbx151_schemes_cl.pro_refno
      and claim_periods.cpe_cpa_cla_refno = claim_parts.cpa_cla_refno --MORE ADDED CODE!!
      and claims.cla_refno = claim_hb_payment_schemes.chp_cla_refno --ADDED CODE!!!
      AND claims.cla_refno = claim_roles.cro_cla_refno --ADDED CODE!!!
      and (claim_hb_payment_schemes.chp_pty_code ='CL' or claim_hb_payment_schemes.chp_pty_code ='LL') --ADDED CODE
      and claim_periods.cpe_created_date =
          (select max(c2.cpe_created_date)
           from claim_periods c2
           where c2.cpe_cpa_cla_refno = claim_periods.cpe_cpa_cla_refno
           and claim_periods.cpe_cpa_cpy_code = c2.cpe_cpa_cpy_code )
      and claim_property_occupancies.cpo_created_date =
          (select max(cp2.cpo_created_date)
           from claim_property_occupancies cp2
           where cp2.cpo_cla_refno = claim_property_occupancies.cpo_cla_refno)
      and benefit_transactions.btr_created_date =
          (select max(b2.btr_created_date)
           from benefit_transactions b2
           where b2.btr_cpa_cla_refno = benefit_transactions.btr_cpa_cla_refno)
      and claim_parts.CPA_CREATED_DATE =
          (select max(c1.CPA_CREATED_DATE)
           from claim_parts c1
           where c1.CPA_CREATED_DATE = claim_parts.CPA_CREATED_DATE)) ilv1
    full outer join
      (select private_ll_accounts.pla_refno, private_ll_accounts.pla_par_refno
       from private_ll_accounts
       where private_ll_accounts.pla_created_date =
           (select max(p2.pla_created_date)
            from private_ll_accounts p2
            where p2.pla_refno = private_ll_accounts.pla_refno
            and private_ll_accounts.pla_refno = p2.pla_refno (+))) ilv2
      ON (ilv1.btr_pla_refno = ilv2.pla_refno)
    full outer JOIN
      (select distinct private_ll_pay_schemes.PLP_PLA_REFNO, private_ll_pay_schemes.PLP_BAK_ACCOUNT_NUMBER
       from private_ll_pay_schemes
       where private_ll_pay_schemes.PLP_START_DATE =
           (select max(p1.PLP_START_DATE)
            from private_ll_pay_schemes p1
            where p1.PLP_PLA_REFNO = private_ll_pay_schemes.PLP_PLA_REFNO
            and private_ll_pay_schemes.PLP_PLA_REFNO = p1.PLP_PLA_REFNO (+))) ilv3
      ON (ilv2.pla_refno =ilv3.PLP_PLA_REFNO)
    WHERE (ilv1.par_per_surname IS not NULL)
    --or ilv1.btr_pla_refno IS NULL and ilv3.PLP_PLA_REFNO IS NOT NULL)
    --and ( -- OR ilv2.pla_refno IS NOT NULL OR ilv3.PLP_PLA_REFNO IS NOT NULL);
  TYPE wk_tab IS TABLE OF read_input%ROWTYPE INDEX BY PLS_INTEGER;
  wk wk_tab;
BEGIN
  exec_file := utl_file.fopen('/spp/spool/RBlive/rr_output', 'sup.txt', 'W');
  OPEN read_input;
  LOOP
    EXIT WHEN read_input%NOTFOUND;
    FETCH read_input BULK COLLECT INTO wk LIMIT 100;
    FOR i IN 1 .. wk.count LOOP
      v_count :=0;
      utl_file.put_line(exec_file, wk(i).refno||','||wk(i).par_per_surname||','||wk(i).adr_line_all||','||wk(i).description||','||wk(i).SCHEME_NO||','||wk(i).btr_cpa_cla_refno||','||wk(i).susp||','||wk(i).PLA_PAR_REFNO||','||wk(i).PLP_BAK_ACCOUNT_NUMBER);
    END LOOP;
  END LOOP;
  CLOSE read_input;
  utl_file.fclose(exec_file);
END;
/

Hello

Given that you have commented out this line:

--and ( -- OR ilv2.pla_refno IS NOT NULL OR ilv3.PLP_PLA_REFNO IS NOT NULL);

your cursor definition is no longer terminated with a semicolon.

Regards

Peter

-

creating CSV files - output contains comma - work around?

Greetings,

The question is: is there a way to work around database fields containing commas when creating CSV files?

I have the process down to create a .csv file, store it on the server and/or send it out by e-mail. The problem I have is that one of the DB fields being pulled from the database has a comma in it, which causes problems for me when creating the CSV file.

Fields of DB:

-emp_number

-emp_name (last name, first name)

-activity_code

-report_date

-time_total

When the file is created, it recognizes the comma in the emp_name field, separates the last name and first name, and creates separate columns for them in the CSV file, so the columns no longer match the DB field names in row 1 of the CSV.

I already searched the forum to see if this has been asked before. Either my eyes are too crossed from all the reading, or I'm not using the appropriate search criteria.

Any help will be greatly appreciated.

Leonard

Wrap the data part in double quotes.
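
As a small illustration of the quoting advice, here is a Python sketch using the standard `csv` module. The sample employee row is invented for the example; only the column names come from the question.

```python
import csv
import io

# Header from the question; the data row is made-up sample data.
rows = [
    ["emp_number", "emp_name", "activity_code", "report_date", "time_total"],
    ["1001", "Smith, John", "A12", "2013-05-02", "7.5"],
]

buffer = io.StringIO()
# QUOTE_ALL wraps every field in double quotes, so the comma inside
# emp_name is treated as data rather than as a field separator.
writer = csv.writer(buffer, quoting=csv.QUOTE_ALL)
writer.writerows(rows)
csv_text = buffer.getvalue()
print(csv_text)

# Reading the text back yields five columns per row again.
parsed = list(csv.reader(io.StringIO(csv_text)))
```

Quoting only the fields that need it (the module's default, `csv.QUOTE_MINIMAL`) would work too; quoting everything is simply the most literal reading of the advice.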

-

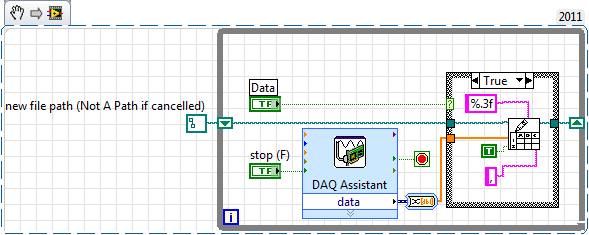

Write data acquisition (DAQ) data to a .txt or .csv file

Hello

I want to write the DAQ data into a .csv or .txt file, so I am trying to use 'Write To Spreadsheet File', because with it I can record data in either format. My idea is that the acquired data is saved to a file continuously once I press the start button, and when I press the STOP button the user is asked to save the file.

I have attached the VI file.

But my problem is that it prompts me for a new file every time, even though I set append to file to true.

Any suggestions are welcome.

Thank you

Chotan

Try this

-

Help replacing a string in a txt file with a string from a csv file

Hi all

I have been working on the following script for a few days now, determined to exhaust my own knowledge before asking for help... Unfortunately, it didn't take very long to exhaust my knowledge :-(

Basically, I need to replace a value in one file (raw.txt) with a value from another file (userids.csv) when a variable matches. Then I write the results to a third file.

To do this, I split each line of the "raw" file into variables using ',' as the separator. The problem is that some variables are intentionally empty, hence the if ($variable -eq "") statements.

It currently does what I want, but only when the userids.csv file contains a single line. That is obviously because of the foreach ($user in $import)... What I need to figure out is how to loop through raw.txt and replace the text when a value in the user ID file matches the text in raw.txt... I hope that makes sense?

The user ID file is in the following format: user, function, dept. It can contain dozens of lines.

I would appreciate any pointers :-)

Cheers

# Processing
$importraw = Get-Content i:\raw.txt
$import = Import-Csv i:\userids.csv -Header UserAccount, Functional, Dept
foreach ($user in $import) {
    $useraccount = $user.UserAccount
    $userfunction = $user.Functional
    $userdept = $user.Dept
    foreach ($line in $importraw) {
        $first, $second, $third, $fourth, $fifth, $sixth, $seventh, $eighth, $ninth = $line -split(",")
        $linesproc = $linesproc + 1
        if ($sixth -eq "") {
            $temp6 = "6TEMP"
            Write-Host "Null field detected - assigning temporary value:" $temp6
            $sixth = $temp6 # assign a temporary value so that the -replace statement later works
        }
        if ($seventh -eq "") {
            $temp7 = "7TEMP"
            Write-Host "Null field detected - assigning temporary value:" $temp7
            $seventh = $temp7 # assign a temporary value so that the -replace statement later works
        }
        if ($fifth -eq $user.UserAccount) {
            $line -replace $seventh, $user.Dept | Add-Content i:\Output.txt
        }
        else {
            $line -replace $seventh, "//customer" | Add-Content i:\Output.txt
        }
    }
}

Try the attached version.

The problem, in my opinion, was the nested ForEach loops.

Instead, I've implemented it with a lookup table.
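
The attached version is not reproduced here, so the following is only a Python sketch of the lookup-table idea, not the responder's script. It assumes the layout described in the question (the fifth field of each raw line holds the user account and the seventh field is the one to replace); the function names and the `UNKNOWN` default are hypothetical.

```python
def build_lookup(user_rows):
    """Map each user account to its department in one pass over the user list."""
    return {account: dept for account, _function, dept in user_rows}


def replace_departments(raw_lines, lookup, default="UNKNOWN"):
    """Return the raw lines with the seventh field replaced via the lookup."""
    result = []
    for line in raw_lines:
        fields = line.split(",")
        if len(fields) < 7:
            # Too short to carry an account and a department; leave untouched.
            result.append(line)
            continue
        # Index 4 is the fifth field (account), index 6 the seventh (department).
        fields[6] = lookup.get(fields[4], default)
        result.append(",".join(fields))
    return result
```

Because each raw line is visited once and the account is looked up in a dictionary, every input line produces exactly one output line, however many rows the user file has. Replacing the field by position also removes the need for temporary values in empty fields.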

-

Converting Out-File output to a .CSV file

Hi all

I was wondering if it would be possible to convert the output of the out-file cmdlet to a .csv file. When I use out-file it creates a text file that puts each item on its own line.

For example, the text file would be:

Server2

Server3

Server4

I would like to convert this .txt file to a .csv file, which would be:

Server2, Server3, Server4, etc.

I tried to use the Export-Csv cmdlet instead of the Out-File cmdlet, but I can't seem to make it work, so instead I was wondering if it would be possible to convert the text using a pre-made PowerCLI command or some kind of one-line script that removes and replaces characters and delimiters.

Thank you very much for any help or assistance that anyone can give.

Best

Oops, my mistake.

See if it works for you

(Get-Content 'C:\text.txt' | %{"'$_'"}) -join ',' | Out-File 'C:\csv.csv'
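
For comparison, the same one-item-per-line to single-row conversion can be sketched in Python. The function name is made up for the example, and unlike the one-liner above it lets the `csv` module decide which values need quoting.

```python
import csv
import io


def lines_to_csv_row(text: str) -> str:
    """Turn text with one item per line into a single comma-separated row."""
    names = [line.strip() for line in text.splitlines() if line.strip()]
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerow(names)
    return buffer.getvalue()


print(lines_to_csv_row("Server2\nServer3\nServer4\n"))
```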

-

How to output text to a .txt file?

This is my code:

#include "iostream.h"
#include "fstream.h"
ofstream myfile;
myfile.open("C:\test.txt");
myfile << "text";
myfile.close();

But I get error C1083: Cannot open include file: 'iostream.h': No such file or directory.

How do I output text to a .txt file?

I would suggest that you do it the InDesign way, to be consistent...

InterfacePtr<IPMStream> stream(StreamUtil::CreateFileStreamWrite(...));
if (stream->GetStreamState() == kStreamStateGood) {
    stream->XferByte(buf, size);
    stream->Flush();
}
stream->Close();

There are lazy equivalents of these methods, such as CreateFileStreamWriteLazy.

The lazy methods open the file on demand, but you will always get a valid pointer.

See the docs on their use.

-

Downloaded .csv file output is blank

Hi all

We are facing a problem when we try to download the output of a page in .csv format or any other format. After downloading, we do not get any data in the .csv file.

Actions -> Download -> .csv: the file does not display any data

Please help me how to solve this problem.

Kind regards

Sushant

What is the relevant SQL? Do you have any bind variables? Are they included in session state?

-

ODI - read CSV file and write to the Oracle table

Hello world

After 4 years, I started to work again with ODI, and I'm completely lost.

I need help, I don't know what to use for each step, interfaces, variables, procedures...

What I have to do is the following:

(1) Read a CSV file -> I have the topology and the model defined

(2) Check whether a field from this CSV file exists in TABLE A -> rows that do not exist in the table are ignored (I tried an interface joining the CSV model with the TABLE A model and storing the result in a temporary data store)

(3) Check whether another field from the CSV exists in TABLE B -> if it does, I need to UPDATE TABLE C, and if not, I need to INSERT

Could someone help me with what to use?

Thanks in advance

Hi how are you?

You must:

Create an interface with the CSV model as the source and an RDBMS table as the target (I'll assume you are using Oracle). Any filter or transformation must be defined to run on the staging area. (You must use an LKM File to SQL and add an IKM SQL Control Append; it is best to truncate and insert the data when it comes from a file. If you want, after this process you can use an incremental update to maintain history or something like that.)

For validation, use a reference constraint in the model of the Oracle table (for this you need a CKM Oracle to check the constraints).

Then select the table that you reference and, in the columns, choose which column to match.

For item 3, repeat the above process.

And that's all. Pretty easy. If the two tables you need for your validation are not loaded yet, you must load them before loading the CSV file you need to validate.

Hope this can help you

-

Hi gurus and LucD

I'm looking for a script that lists all virtual machines with NIC type E1000 and outputs the result to a CSV file.

The script should search multiple vCenter servers and multiple clusters and list each VM's name and power state (both powered on and off), for network adapters of type E1000 only, no others.

Regards

Nauman

Try like this

$report = @()
foreach ($cluster in Get-Cluster) {
    foreach ($rp in Get-ResourcePool -Location $cluster) {
        foreach ($vm in (Get-VM -Location $rp | Where {Get-NetworkAdapter -VM $_ | where {$_.Type -eq "e1000"}})) {
            $report += $vm | Select @{N = "VM"; E = {$_.Name}},
                @{N = "vCenter"; E = {$_.Uid.Split('@')[1].Split(':')[0]}},
                @{N = "Cluster"; E = {$cluster.Name}},
                @{N = "ResourcePool"; E = {$rp.Name}}
        }
    }
}
$report | Export-Csv C:\temp\report.csv -NoTypeInformation -UseCulture

-

Spool output to a .csv file - problems with the display of data

Hello

I need to deliver the result of a select query that returns approximately 80000 records in a .csv file. A procedure is written for the select query and the procedure is called in the spool script. But a few of the columns have a comma in their values. For example, there is a personal_name column in the select query that holds the name as "James, Ed". That value then ends up in different columns, so the data is shifted to the right for the remaining columns.

Could someone help solve this problem? I mainly used a procedure because the select query is about three pages long, and I want the script to look clean.

The script is:

set AUTOPRINT ON;
set heading ON;
set TRIMSPOOL ON;
set colsep ',';
set linesize 1000;
set PAGESIZE 80000;
variable main_cursor refcursor;
set escape /
spool C:\documents\querys\personal_info.csv
EXEC proc_personal_info(:main_cursor);
spool off;

Hello

set PAGESIZE 80000; is not valid, so the header will be printed every 14 rows, which is the default.

You can avoid printing the header in this way:

set AUTOPRINT ON ;
set heading ON;
set TRIMSPOOL ON ;
set colsep ',' ;
set linesize 1000 ;
set PAGESIZE 0 ;
set escape /
set feedback off
spool c:\temp\empspool.csv
SELECT '"'||ename||'"', '"'||job||'"' FROM emp;
spool off

The output will look like this in this case:

"SMITH" ,"CLERK"
"ALLEN" ,"SALESMAN"
"WARD" ,"SALESMAN"
"JONES" ,"MANAGER"
"MARTIN" ,"SALESMAN"
"BLAKE" ,"MANAGER"
"CLARK" ,"MANAGER"
"SCOTT" ,"ANALYST"
"KING" ,"PRESIDENT"
"TURNER" ,"SALESMAN"
"ADAMS" ,"CLERK"
"JAMES" ,"CLERK"
"FORD" ,"ANALYST"
"MILLER" ,"CLERK"

Alternatively, you can consider creating a single column by concatenating the columns in this way:

spool c:\temp\empspool.csv
SELECT '"'||ename||'","'||job||'"'

In this case the output will have no spaces between the columns:

"SMITH","CLERK"
"ALLEN","SALESMAN"
"WARD","SALESMAN"
"JONES","MANAGER"
"MARTIN","SALESMAN"
"BLAKE","MANAGER"
"CLARK","MANAGER"
"SCOTT","ANALYST"
"KING","PRESIDENT"
"TURNER","SALESMAN"
"ADAMS","CLERK"
"JAMES","CLERK"
"FORD","ANALYST"
"MILLER","CLERK"

Kind regards.

Al

Edited by: Alberto Faenza on May 2, 2013 17:48

-

How to export the two outputs of a script to one csv file

Hi all

I have a simple script which lists the snapshots of two DCs in my environment. Currently each line generates a separate CSV file with data.

Is it possible to create a single file with the output of these two lines?

Get-Datacenter -Name DC1 | Get-VM | Get-Snapshot | Select-Object Name, Description, PowerState, VM, Created, @{Name = 'Host'; Expression = {(Get-VM $_.VM).Host.Name}} | Export-Csv 'E:\artur\reports\DC1_snapshot.csv'

Get-Datacenter -Name DC2 | Get-VM | Get-Snapshot | Select-Object Name, Description, PowerState, VM, Created, @{Name = 'Host'; Expression = {(Get-VM $_.VM).Host.Name}} | Export-Csv 'E:\artur\reports\DC2_snapshot.csv'

Both files will be sent by mail.

Copy & paste problem.

The Export-Csv should of course be outside the loop:

Get-Datacenter -Name "blackburn","coventry" | %{
    $dcName = $_.Name
    $_ | Get-VM | Get-Snapshot |
        Select-Object @{Name="DC"; Expression={$dcName}}, VM, Name, Description, PowerState, Created, @{Name="Host"; Expression={(Get-VM $_.VM).Host.Name}}
} | Export-Csv 'C:\DC_snapshot.csv'

____________

Blog: LucD notes

Twitter: lucd22
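
The underlying pattern, tagging each row with its datacenter and writing everything to one file after the loop, can be sketched generically in Python. Everything in this example is an assumption: the function name, the reduced column set, and the sample rows are invented, and no PowerCLI is involved.

```python
import csv
import io


def combine_reports(reports: dict) -> str:
    """Merge per-datacenter row lists into one CSV with a leading DC column.

    `reports` maps a datacenter name to a list of row dictionaries.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["DC", "VM", "Name"],
                            lineterminator="\n")
    writer.writeheader()
    for dc_name, rows in reports.items():
        for row in rows:
            # Tag each row with its datacenter so the origin stays visible.
            writer.writerow({"DC": dc_name, **row})
    return buffer.getvalue()


# Made-up sample data for two datacenters.
print(combine_reports({
    "DC1": [{"VM": "vm1", "Name": "snapA"}],
    "DC2": [{"VM": "vm2", "Name": "snapB"}],
}))
```

The writer is created once, outside the loops, which is the same point the answer makes about Export-Csv.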

-

Can I export my SQL tables to a CSV file in ODI?

If the answer is yes, how can I do it? With packages?

I really appreciate your help

Best regards

Use OdiSqlUnload in the package to extract data from the table into a file (csv).
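
To make the idea of unloading a query result into a CSV file concrete, here is a rough Python sketch. It is not ODI and does not show the OdiSqlUnload syntax: the function name is hypothetical, and an in-memory SQLite database with an invented `emp` table stands in for the real SQL source.

```python
import csv
import io
import sqlite3


def unload_query_to_csv(conn: sqlite3.Connection, query: str) -> str:
    """Run `query` and return the result as CSV text with a header row."""
    cursor = conn.execute(query)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # The cursor description holds the column names of the result set.
    writer.writerow([column[0] for column in cursor.description])
    writer.writerows(cursor)
    return buffer.getvalue()


# SQLite stands in for the real database; the table and rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE emp (ename TEXT, job TEXT)")
conn.executemany("INSERT INTO emp VALUES (?, ?)",
                 [("SMITH", "CLERK"), ("JAMES, ED", "CLERK")])
print(unload_query_to_csv(conn, "SELECT ename, job FROM emp ORDER BY rowid"))
```

Values that contain the separator are quoted automatically by the `csv` module, which avoids the shifted-columns problem discussed earlier in the thread.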
