Question about querying a date range?

Hello, I am looking for a solution to this problem.

The DB table has a column of type TIMESTAMP. When I query a date range using the BETWEEN keyword, it also returns the data inserted on the next day at 12.00.00 AM.

example:

Table A

Column_A    Column_Time
Data1       03/08/2010 11.55.00 AM
Data2       04/08/2010 12.00.00 AM
Data3       04/08/2010 12.00.01 AM

Here, when I query Column_Time using the BETWEEN keyword (08/03/2010 and 04/08/2010), I get:

Output

Data1

Data2

but I want only Data1 in the output. Any ideas?

BETWEEN includes both limits:

column between condition1 and condition2

translates to

column >= condition1

and

column <= condition2

So write in code exactly what you want. In your case:

column >= condition1

and

column < condition2

I do not know whether you want >= or > for the lower bound; adjust according to your needs.
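The difference between the inclusive BETWEEN and a half-open range can be checked with a small sketch. This uses Python's built-in sqlite3 module rather than Oracle, and the table name, column names, and ISO-formatted timestamps are assumptions made for illustration (the dates are read as day/month/year, which the original post does not confirm).

```python
import sqlite3

# Hypothetical table modeled on the question's example; not the poster's schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE table_a (column_a TEXT, column_time TEXT)")
conn.executemany(
    "INSERT INTO table_a VALUES (?, ?)",
    [
        ("Data1", "2010-08-03 11:55:00"),
        ("Data2", "2010-08-04 00:00:00"),
        ("Data3", "2010-08-04 00:00:01"),
    ],
)

lower, upper = "2010-08-03 00:00:00", "2010-08-04 00:00:00"


def names(sql):
    """Run a two-parameter range query and return the matching column_a values."""
    return [row[0] for row in conn.execute(sql, (lower, upper))]


# BETWEEN keeps the row that sits exactly on the upper limit.
inclusive = names(
    "SELECT column_a FROM table_a "
    "WHERE column_time BETWEEN ? AND ? ORDER BY column_time"
)
# A half-open range (>= lower, < upper) drops it.
half_open = names(
    "SELECT column_a FROM table_a "
    "WHERE column_time >= ? AND column_time < ? ORDER BY column_time"
)

print(inclusive)  # ['Data1', 'Data2']
print(half_open)  # ['Data1']
```

The same pair of comparisons carries over to an Oracle TIMESTAMP column; only the literal syntax for the bounds differs.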

Tags: Database

Similar Questions

-

Facing an issue when writing data to a flat file with UTL_FILE.

Hi gurus,

We have a procedure that writes the data from a table to a flat file. RAC is implemented on this database.

While writing the data, the procedure creates two partial copies of it.

Can anybody help me solve this problem?

Thanks in advance...

I also asked this question, but it seems there is no final solution...

In any case, here are two possibilities:

(1) share the directory for the file among all nodes

(2) run your procedure on a specific node

-

Issue with popup data refresh

Hi all

I have a detail table in a popup. I use this popup to fill in some fields and upload a photo.

The photo is uploaded using a Browse button and then the image is displayed.

Whenever I try to add a second row to the detail table and upload its photo, instead of the Browse button it displays the photo name from the previous row with an Update button. So I am not able to add the photo for the new row.

Basically, it is not refreshing.

Please help me solve this problem.

Thanks in advance,

Vijay

Does this mean that your Add button is outside the popup?

Have you bound the value property of inputFile to a variable?

If so, set its value property to null, and set the popup's contentDelivery to lazyUncached and resetEditableValues to whenCanceled.

Ashish

-

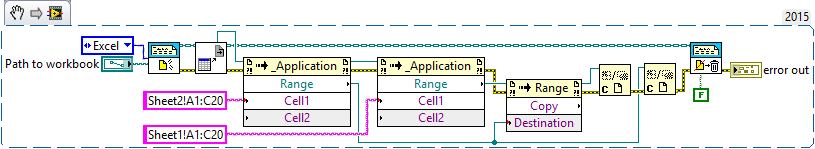

LabVIEW 2015, select data in the Excel worksheet range.

Hi, guys.

I have a question about dealing with data in Excel.

I determine the addresses of two cells (for example A1 and C20), and then I want to select the data (for example, A1 to A20 and C1 to C20) and copy it to another sheet (or table).

THX.

There is no need to import the range values into LabVIEW and then write them back to Excel if you use the Range.Copy method. Here's how to use this method (I used the GTA VIs to open Excel and to get the Excel.Application ActiveX reference; it was a faster way to validate the code of interest).

Ben64

-

Code 646, cannot install updates. The license agreement question is not required.

Code 646, cannot install updates. The license agreement question is not required.

Try this FixIt:

Error code "0x80070646", "646", or "1606" when you try to install Office updates: http://support.Microsoft.com/kb/2258121

"a programmer is just a tool that converts caffeine into code" Deputy CLIP - http://www.winvistaside.de/

-

Weird question: can we use Data Guard to apply logs bidirectionally?

Hi, I have a question about Data Guard: can we use Data Guard to apply logs bidirectionally? I mean keeping both the primary and the standby in active status (read-write mode).

Maybe my question seems silly, but I just wanted to check.

Thank you

That would be GoldenGate or Streams, which work in both directions. One or the other. The downside is that it puts additional requirements on the hardware.

Data Guard does not do that.

-

Copy data from one schema to another. Reopening the question

Hello

I asked this question earlier about copying data from the tables of one schema to another in the same database using a database link, as mentioned in my previous post.

Now I am facing a problem with copying from a remote database.

I have created a database link between my local system and the remote database, with the connect string as shown below:

CREATE PUBLIC DATABASE LINK "MERU_DEV_LOCAL_DEV"
CONNECT TO MERUDEV IDENTIFIED BY MERUDEV USING 'MERUPROD';

I am trying to copy the remote database tables to my local database using the script below. The LOCAL schema is DEVELOPMENT.

The REMOTE schema is MERUDEV.

impdp DEVELOPMENT network_link=MERU_DEV_LOCAL_DEV schemas=MERUDEV remap_schema=MERUDEV:DEVELOPMENT TABLE_EXISTS_ACTION=REPLACE

But the tables are not copied. What could be the problem? Please advise, or tell me whether I need to modify the script.

Thank you

Sudhir

You can:

- monitor at the OS level with ps -ef

- monitor Data Pump jobs: the views are dba_datapump_jobs and dba_datapump_sessions

- monitor long operations with v$session_longops

-

Problem loading data with SQL*Loader into staging tables and then into the main tables!

Hello

I'm trying to load data into our main database tables using SQL*Loader. The data will be provided in pipe-separated CSV files.

I have developed a shell script to load the data, and it works fine except for one thing.

Here are the details needed to re-create the problem.

Structure of the staging tables that will be filled using SQL*Loader:

create table stg_cmts_data (cmts_token varchar2 (30), CMTS_IP varchar2 (20));

create table stg_link_data (dhcp_token varchar2 (30), cmts_to_add varchar2 (200));

create table stg_dhcp_data (dhcp_token varchar2 (30), DHCP_IP varchar2 (20));

Data in the CSV files:

for stg_cmts_data (cmts_map_03092015_1.csv):

WNLB-CMTS-01-1|10.15.0.1
WNLB-CMTS-02-2|10.15.16.1
WNLB-CMTS-03-3|10.15.48.1
WNLB-CMTS-04-4|10.15.80.1
WNLB-CMTS-05-5|10.15.96.1

for stg_dhcp_data (dhcp_map_03092015_1.csv):

DHCP-1-1-1|10.25.23.10, 25.26.14.01
DHCP-1-1-2|56.25.111.25, 100.25.2.01
DHCP-1-1-3|25.255.3.01, 89.20.147.258
DHCP-1-1-4|10.25.26.36, 200.32.58.69
DHCP-1-1-5|80.25.47.369, 60.258.14.10

for stg_link_data (cmts_dhcp_link_map_0309151623_1.csv):

DHCP-1-1-1|WNLB-CMTS-01-1,WNLB-CMTS-02-2
DHCP-1-1-2|WNLB-CMTS-03-3,WNLB-CMTS-04-4,WNLB-CMTS-05-5
DHCP-1-1-3|WNLB-CMTS-01-1
DHCP-1-1-4|WNLB-CMTS-05-8,WNLB-CMTS-05-6,WNLB-CMTS-05-0,WNLB-CMTS-03-3
DHCP-1-1-5|WNLB-CMTS-02-2,WNLB-CMTS-04-4,WNLB-CMTS-05-7
WNLB-DHCP-1-13|WNLB-CMTS-02-2

Now, after loading this data into the staging tables, I have to fill the main database tables:

create table subntwk (subntwk_nm varchar2 (20), subntwk_ip varchar2 (30));

create table link (link_nm varchar2 (50));

The SQL scripts that I created to load the data look like this.

spool load_cmts.log
set serveroutput on
DECLARE
  CURSOR c_stg_cmts IS SELECT * FROM stg_cmts_data;
  TYPE t_stg_cmts IS TABLE OF stg_cmts_data%ROWTYPE INDEX BY pls_integer;
  l_stg_cmts t_stg_cmts;
  l_cmts_cnt NUMBER;
  l_cnt NUMBER;
  l_cnt_1 NUMBER;
BEGIN
  OPEN c_stg_cmts;
  FETCH c_stg_cmts BULK COLLECT INTO l_stg_cmts;
  FOR i IN l_stg_cmts.FIRST .. l_stg_cmts.LAST
  LOOP
    -- insert the token only if it is not already in subntwk
    SELECT COUNT(1)
      INTO l_cmts_cnt
      FROM subntwk
     WHERE subntwk_nm = l_stg_cmts(i).cmts_token;
    IF l_cmts_cnt < 1 THEN
      INSERT INTO subntwk (subntwk_nm)
      VALUES (l_stg_cmts(i).cmts_token);
      DBMS_OUTPUT.put_line('token has been added: ' || l_stg_cmts(i).cmts_token);
    ELSE
      DBMS_OUTPUT.put_line('token is already present');
    END IF;
    EXIT WHEN l_stg_cmts.COUNT = 0;
  END LOOP;
  COMMIT;
EXCEPTION
  WHEN OTHERS THEN
    DBMS_OUTPUT.put_line('ERROR ' || SQLERRM);
END;
/
exit

for dhcp:

spool load_dhcp.log
set serveroutput on
DECLARE
  CURSOR c_stg_dhcp IS SELECT * FROM stg_dhcp_data;
  TYPE t_stg_dhcp IS TABLE OF stg_dhcp_data%ROWTYPE INDEX BY pls_integer;
  l_stg_dhcp t_stg_dhcp;
  l_dhcp_cnt NUMBER;
  l_cnt NUMBER;
  l_cnt_1 NUMBER;
BEGIN
  OPEN c_stg_dhcp;
  FETCH c_stg_dhcp BULK COLLECT INTO l_stg_dhcp;
  FOR i IN l_stg_dhcp.FIRST .. l_stg_dhcp.LAST
  LOOP
    -- insert the token only if it is not already in subntwk
    SELECT COUNT(1)
      INTO l_dhcp_cnt
      FROM subntwk
     WHERE subntwk_nm = l_stg_dhcp(i).dhcp_token;
    IF l_dhcp_cnt < 1 THEN
      INSERT INTO subntwk (subntwk_nm)
      VALUES (l_stg_dhcp(i).dhcp_token);
      DBMS_OUTPUT.put_line('token has been added: ' || l_stg_dhcp(i).dhcp_token);
    ELSE
      DBMS_OUTPUT.put_line('token is already present');
    END IF;
    EXIT WHEN l_stg_dhcp.COUNT = 0;
  END LOOP;
  COMMIT;
EXCEPTION
  WHEN OTHERS THEN
    DBMS_OUTPUT.put_line('ERROR ' || SQLERRM);
END;
/
exit

for link:

spool load_link.log
set serveroutput on
DECLARE
  l_cmts_1 VARCHAR2(4000 CHAR);
  l_cmts_add VARCHAR2(200 CHAR);
  l_dhcp_cnt NUMBER;
  l_cmts_cnt NUMBER;
  l_link_cnt NUMBER;
  l_add_link_nm VARCHAR2(200 CHAR);
BEGIN
  FOR r IN (
    -- append a trailing comma so every CMTS name is followed by one
    SELECT dhcp_token, cmts_to_add || ',' cmts_add
      FROM stg_link_data
  )
  LOOP
    l_cmts_1 := r.cmts_add;
    l_cmts_add := TRIM(SUBSTR(l_cmts_1, 1, INSTR(l_cmts_1, ',') - 1));
    SELECT COUNT(1)
      INTO l_dhcp_cnt
      FROM subntwk
     WHERE subntwk_nm = r.dhcp_token;
    IF l_dhcp_cnt = 0 THEN
      DBMS_OUTPUT.put_line('device not found: ' || r.dhcp_token);
    ELSE
      -- walk the comma-separated CMTS list one name at a time
      WHILE l_cmts_add IS NOT NULL
      LOOP
        l_add_link_nm := r.dhcp_token || '_TO_' || l_cmts_add;
        SELECT COUNT(1)
          INTO l_cmts_cnt
          FROM subntwk
         WHERE subntwk_nm = TRIM(l_cmts_add);
        SELECT COUNT(1)
          INTO l_link_cnt
          FROM link
         WHERE link_nm = l_add_link_nm;
        IF l_cmts_cnt > 0 AND l_link_cnt = 0 THEN
          INSERT INTO link (link_nm)
          VALUES (l_add_link_nm);
          DBMS_OUTPUT.put_line(l_add_link_nm || ' has been added.');
        ELSIF l_link_cnt > 0 THEN
          DBMS_OUTPUT.put_line('link is already present: ' || l_add_link_nm);
        ELSIF l_cmts_cnt = 0 THEN
          DBMS_OUTPUT.put_line('NO CMTS FOUND for device to create the link: ' || l_cmts_add);
        END IF;
        l_cmts_1 := TRIM(SUBSTR(l_cmts_1, INSTR(l_cmts_1, ',') + 1));
        l_cmts_add := TRIM(SUBSTR(l_cmts_1, 1, INSTR(l_cmts_1, ',') - 1));
      END LOOP;
    END IF;
  END LOOP;
  COMMIT;
EXCEPTION
  WHEN OTHERS THEN
    DBMS_OUTPUT.put_line('ERROR ' || SQLERRM);
END;
/
exit

Control files:

for cmts:

LOAD DATA
INFILE 'cmts_data.csv'
APPEND
INTO TABLE STG_CMTS_DATA
WHEN (cmts_token != '') AND (cmts_token != 'NULL') AND (cmts_token != 'null')
AND (cmts_ip != '') AND (cmts_ip != 'NULL') AND (cmts_ip != 'null')
FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'
TRAILING NULLCOLS
(cmts_token "RTRIM(LTRIM(:cmts_token))",
 cmts_ip "RTRIM(LTRIM(:cmts_ip))")

for dhcp:

LOAD DATA
INFILE 'dhcp_data.csv'
APPEND
INTO TABLE STG_DHCP_DATA
WHEN (dhcp_token != '') AND (dhcp_token != 'NULL') AND (dhcp_token != 'null')
AND (dhcp_ip != '') AND (dhcp_ip != 'NULL') AND (dhcp_ip != 'null')
FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'
TRAILING NULLCOLS
(dhcp_token "RTRIM(LTRIM(:dhcp_token))",
 dhcp_ip "RTRIM(LTRIM(:dhcp_ip))")

for link:

LOAD DATA
INFILE 'link_data.csv'
APPEND
INTO TABLE STG_LINK_DATA
WHEN (dhcp_token != '') AND (dhcp_token != 'NULL') AND (dhcp_token != 'null')
AND (cmts_to_add != '') AND (cmts_to_add != 'NULL') AND (cmts_to_add != 'null')
FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'
TRAILING NULLCOLS
(dhcp_token "RTRIM(LTRIM(:dhcp_token))",
 cmts_to_add CHAR(4000) "RTRIM(LTRIM(:cmts_to_add))")

Shell script:

# create the working directories if they do not exist yet
if [ ! -d log ]
then
  mkdir log
fi

if [ ! -d done ]
then
  mkdir done
fi

if [ ! -d bad ]
then
  mkdir bad
fi

nohup time sqlldr username/password@SID CONTROL=load_cmts_data.ctl LOG=log/ldr_cmts_data.log BAD=log/ldr_cmts_data.bad DISCARD=log/ldr_cmts_data.reject ERRORS=100000 DIRECT=TRUE PARALLEL=TRUE &

nohup time sqlplus username/password@SID @load_cmts.sql

nohup time sqlldr username/password@SID CONTROL=load_dhcp_data.ctl LOG=log/ldr_dhcp_data.log BAD=log/ldr_dhcp_data.bad DISCARD=log/ldr_dhcp_data.reject ERRORS=100000 DIRECT=TRUE PARALLEL=TRUE &

nohup time sqlplus username/password@SID @load_dhcp.sql

nohup time sqlldr username/password@SID CONTROL=load_link_data.ctl LOG=log/ldr_link_data.log BAD=log/ldr_link_data.bad DISCARD=log/ldr_link_data.reject ERRORS=100000 DIRECT=TRUE PARALLEL=TRUE &

nohup time sqlplus username/password@SID @load_link.sql

mv *.log ./log

The problem I encounter is when loading data into the link table. There I check whether the DHCP is present in the subntwk table and continue if so; otherwise I log an error. If the CMTS is present I create the link; otherwise I log an error.

Note that multiple CMTS can be associated with a single DHCP.

The links are created in the link table, but for the last iteration of the loop, where I split the comma-separated CMTS values from stg_link_data, the log says the CMTS was not found.

For example:

DHCP-1-1-1|WNLB-CMTS-01-1,WNLB-CMTS-02-2

Here, I expect DHCP-1-1-1 to be linked with WNLB-CMTS-01-1 and WNLB-CMTS-02-2.

All of this data is present in the subntwk table, yet the log says WNLB-CMTS-02-2 could not be found, even though we have already loaded it into the subntwk table.

The same thing happens with every CMTS that comes last in its stg_link_data row (I think you can see what I'm trying to explain).

But when I run the SQL scripts separately in SQL Developer, all valid links are inserted into the link table.

It should create 9 rows in the link table, whereas now it creates only 5 rows.

I also use COMMIT in my script, but that does not help.

Please run these scripts on your machine and let me know whether you get the same behavior.

Please give me a solution; I have tried many things since yesterday, but the result is always the same.

This is the log for the link table:

link is already present: dhcp-1-1-1_TO_wnlb-cmts-01-1
NOT FOUND CMTS for device to create the link: wnlb-CMTS-02-2
link is already present: dhcp-1-1-2_TO_wnlb-cmts-03-3
link is already present: dhcp-1-1-2_TO_wnlb-cmts-04-4
NOT FOUND CMTS for device to create the link: wnlb-CMTS-05-5
NOT FOUND CMTS for device to create the link: wnlb-CMTS-01-1
NOT FOUND CMTS for device to create the link: wnlb-CMTS-05-8
NOT FOUND CMTS for device to create the link: wnlb-CMTS-05-6
NOT FOUND CMTS for device to create the link: wnlb-CMTS-05-0
NOT FOUND CMTS for device to create the link: wnlb-CMTS-03-3
link is already present: dhcp-1-1-5_TO_wnlb-cmts-02-2
link is already present: dhcp-1-1-5_TO_wnlb-cmts-04-4
NOT FOUND CMTS for device to create the link: wnlb-CMTS-05-7
Device not found: wnlb-dhcp-1-13

If you need more information, please let me know.

Thank you

Later that night I realized that, when loading into the staging table on the UNIX machine, an extra line-ending character was being loaded at the end of each line. That is why the last CMTS on each line was not found. I converted the files with dos2unix and it started to work perfectly.

It was the dos2unix error!
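A minimal sketch of why only the last CMTS on each line fails to match, assuming the files had DOS (CRLF) line endings, which is what dos2unix converts. The helper names are hypothetical, and the sketch is written in Python rather than PL/SQL. It mirrors the fact that Oracle's TRIM removes only spaces by default, so a carriage return left at the end of the last field survives the comparison.

```python
def split_tokens(field):
    """Split a comma-separated field, trimming spaces only (like TRIM in the script)."""
    return [part.strip(" ") for part in field.split(",") if part.strip(" ")]


def split_tokens_clean(field):
    """Same split, but drop a trailing carriage return or newline first."""
    return [part.strip() for part in field.rstrip("\r\n").split(",") if part.strip()]


# Names already loaded into subntwk in the example.
known = {"WNLB-CMTS-01-1", "WNLB-CMTS-02-2"}

# One record from a file with DOS line endings (assumption).
dos_line = "DHCP-1-1-1|WNLB-CMTS-01-1,WNLB-CMTS-02-2\r\n"

# A UNIX-style reader removes only "\n", so "\r" stays glued to the last field.
record = dos_line.rstrip("\n")
dhcp_token, cmts_list = record.split("|")

raw = split_tokens(cmts_list)
clean = split_tokens_clean(cmts_list)

print([token in known for token in raw])    # [True, False]
print([token in known for token in clean])  # [True, True]
```

Converting the input files before loading, as the poster did, removes the stray character at the source, so the scripts themselves need no change.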

Thank you all for your interest. I am still learning new things, as I have almost 10 months of experience in PL/SQL and SQL.

-

Question about creating a datastore cluster

I'm running vSphere 5.1 and want to enable Storage DRS. Before I create the datastore cluster, I was reading through the VMware vSphere 5.1 documentation, but I don't see any confirmation that, when I add datastores to the cluster, the virtual machines using those datastores are not affected.

Can anyone confirm that adding a datastore to a datastore cluster has no impact on running virtual machines?

Thank you.

Can anyone confirm that adding a datastore to a datastore cluster has no impact on running virtual machines?

You can add datastores to and remove them from a datastore cluster while VMs are running.

-

Question about the impact of a missing data file

Oracle Version: 10gR2

If a data file (not one belonging to the SYSTEM tablespace or any other data file associated with Oracle internals) is lost, can I bring the database up somehow?

Nichols wrote:

Keita,

What happens if I don't have a backup of the data file that is lost?

OK, understood.

If you do not have a backup of your database and you have lost a data file, then you must re-create the control file (which contains the names of the data files) without listing the lost data file.

You must generate the control file creation script, remove the line naming the lost data file, and create the control file. After that, you should be able to open your database successfully.

- - - - - - - - - - - - - - - - - - - - -

Kamran Agayev a. (10g OCP)

http://kamranagayev.WordPress.com

[Step by step installation Oracle Linux and automate the installation by using Shell Script | http://kamranagayev.wordpress.com/2009/05/01/step-by-step-installing-oracle-database-10g-release-2-on-linux-centos-and-automate-the-installation-using-linux-shell-script/]

-

Index range scan

Hello

I read about the differences between index range scan, unique scan, and skip scan.

According to the docs, in "How the CBO Evaluates IN-List Iterators", http://docs.oracle.com/cd/B10500_01/server.920/a96533/opt_ops.htm

, I can see that

"The IN-list iterator is used when a query contains an IN clause with values. The execution plan is identical to what would result for a statement with an equality clause instead of IN, except for one additional step. That extra step occurs when the IN-list iterator feeds the equality clause with unique values from the IN-list."

Of course, that doc is for Oracle9i Database. (I cannot find it in the 11g docs.)

And Example 2-1, IN-List Iterators Initial Statement, shows that an INDEX RANGE SCAN is used.

On my Oracle 11gR2 database, if I issue a statement similar to the doc's example, namely select * from employees where employee_id in (7076, 7009, 7902), I see that it uses a UNIQUE SCAN.

In "Oracle Performance Tuning: Index Access Methods: Oracle Tuning Tip #11: Unique Index Scan", I read that

If Oracle is to use an Index Unique Scan, then the equality operator (=) must be used in the SQL. If any operator other than equality is used, then Oracle cannot use the Index Unique Scan.

(and I think this sentence is somewhere in the docs also).

So, when using IN-list predicates, why did Oracle use the unique scan on the primary key column index in my case? It wasn't an equality predicate.

Thank you.

It is the Internet... we find a lot of information, but we don't know whom to trust.

Exactly! That is the thought you should ALWAYS have in the back of your mind when you visit ANY site (no matter the author), read a book or document, listen to ANY presentation, or read forum responses (mine included).

All sources of information can and will contain errors, omissions, and inaccuracies. An example used to illustrate one point can imply or suggest that it applies to related points as well. It's just not possible to cover everything.

The 9i doc you posted is a good example. The earlier docs (even the 7.3 docs are still available online) often have MUCH better explanations and examples of basic concepts. One reason is that there were not nearly as many advanced concepts that needed explaining; they did not exist.

michaelrozar17 just posted a link to a 12c doc to refute my statement that the article you used was bad. No problem. Maybe that doc was posted because of these lines:

The database performs a unique scan when the following conditions apply:

- A query predicate references all of the columns in a unique index key using an equality operator, such as WHERE prod_id=10.

- A SQL statement contains an equality predicate on a column referenced in an index created with the CREATE UNIQUE INDEX statement.

Do the authors mean that a unique scan is performed ONLY under these conditions? We do not know. There could be several reasons why an INLIST ITERATOR was not included in that list:

1. an INLIST is NOT valid for this use case (which michaelrozar might be suggesting)

2. the authors were not aware that the CBO may also consider a unique scan for an INLIST predicate

3. the authors WERE aware but forgot to include INLIST in the document

4. the authors were simply listing the most common conditions under which a unique scan would be considered

We have no way of knowing what the real reason was. This does not mean that the document is not reliable.

In the other topic, I posted about hard parse steps from BURLESON's site, and Jonathan contradicted me. If even Burleson isn't reliable, I do not know which author has sufficient credibility... Of course, both Burleson and Jonathan can say anything; it's true that I can say anything too.

If site X is wrong, site Y is wrong, site Z is wrong... should people read only the documentation and no other sites?

That is the BEST statement of the reality of finding information that I have seen posted.

No matter who the author is, and whatever credibility they may have earned from past articles, you should ALWAYS keep those statements in mind.

This means you need to 'trust and verify'. You 'trusted', then you 'verified', and now you have a conflict between WORDS and REALITY.

Which one is correct? If your reality is correct, the documentation is wrong. OK. If your reality is wrong, then you need to find out why.

Except that nobody has posted ANY REALITY that shows that your reality is wrong. IMHO, the reason is that the CBO probably does MANY things that are not documented and that are never explored, because there is no reason to spend time exploring them other than curiosity.

You have not presented ANY reason for being concerned that a unique scan is used.

Back to your original question:

So, when using IN-list predicates, why did Oracle use the unique scan on the primary key column index in my case? It wasn't an equality predicate.

1. Why not use a unique scan?

2. What do you want Oracle to use instead? A full table scan? An index range scan? An index skip scan? An index full scan? An index fast full scan?

A full table scan? For three key values? When there is a unique index? I hope not.

An index range scan? Look at what the 12c doc provided says about those other types of index scans.

How Index Range Scans Work

In general, the process is as follows:

- Read the root block.

- Read the branch block.

- Alternate the following steps until all data is retrieved:

  - Read a leaf block to obtain a rowid.

  - Read a table block to retrieve a row.

. . .

For example, to scan the index, the database moves backward or forward through the leaf blocks. For example, a scan for IDs between 20 and 40 locates the first leaf block that has the lowest key value that is 20 or greater. The scan proceeds horizontally through the linked list of leaf nodes until it finds a value greater than 40, and then stops.

If that '20' was the FIRST index value and the '40' was the LAST, that reads ALL of the leaf nodes. That doesn't look good to me.

How Index Full Scans Work

The database reads the root block and then navigates down the left-hand side of the index (or the right-hand side if doing a descending full scan) until it reaches a leaf block. The database then reads across the bottom of the index, one block at a time, in sorted order. The scan uses single-block I/O rather than multiblock I/O.

Which is about the same as the last example, isn't it?

How Index Fast Full Scans Work

The database uses multiblock I/O to read the root block and all of the leaf and branch blocks. The database ignores the branch and root blocks and reads the index entries on the leaf blocks.

That doesn't seem much better than the last one for your use case.

Index Skip Scans

An index skip scan occurs when the initial column of a composite index is "skipped" or not specified in the query.

. . .

How Index Skip Scans Work

An index skip scan logically splits a composite index into smaller subindexes. The number of distinct values in the leading columns of the index determines the number of logical subindexes. The lower the number, the fewer logical subindexes the optimizer must create, and the more efficient the scan becomes. The scan reads each logical index separately and "skips" index blocks that do not meet the filter condition on the non-leading column.

Which does not apply to your use case; you do not have a composite index, and there is nothing to skip. If Oracle were to 'skip' between the values of the IN list, it would still have to read those 'in-between' blocks in order to skip them.

Which brings us back to using a unique scan, one at a time, for each of the values in the IN list. The root index block will be in the cache after the first value is looked up, so it only needs to be read once. After that, Oracle just locates the ONE index entry needed. That sounds better to me than any of the other variants if you are only dealing with a small number of values in the IN clause.
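The trade-off described above can be illustrated with a toy model. This is only a sketch in Python: a sorted list stands in for the index leaf entries and the standard bisect module stands in for descending the index. The key values and function names are assumptions, and it says nothing about how Oracle actually implements either access path.

```python
from bisect import bisect_left, bisect_right


def range_scan(keys, low, high):
    """Return every index entry a range scan walks: all keys in [low, high]."""
    return keys[bisect_left(keys, low):bisect_right(keys, high)]


def unique_probes(keys, wanted):
    """Return the entries found by one exact-match probe per wanted value."""
    found = []
    for value in sorted(set(wanted)):
        pos = bisect_left(keys, value)
        if pos < len(keys) and keys[pos] == value:
            found.append(keys[pos])
    return found


keys = list(range(7000, 8000))   # hypothetical unique employee_id values
in_list = [7076, 7009, 7902]     # the IN-list from the question

walked = range_scan(keys, min(in_list), max(in_list))
probed = unique_probes(keys, in_list)

print(len(walked))  # 894
print(probed)       # [7009, 7076, 7902]
```

In this model a single range scan from the smallest to the largest IN-list value walks 894 entries to return 3 rows, while three separate probes touch only the entries that are needed.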


-

The hard drive that held my OS (Windows XP Pro SP3) failed and lost quite a few sectors that are essential for running the operating system. Other data is still readable. I got another hard drive and installed Windows XP SP2, Firefox, and other programs. I was able to recover the bookmarks, security certificates, and other profile information using the information found in support articles.

None of them addressed how to recover the add-ons or their data. Specifically, there are several large Stylish scripts that took months to develop and customize.

Articles about migration and the like do not work for me because they require the old copy of Firefox to be functional, which it is not because the OS on that hard drive is damaged. Is it possible to recover this data in a way similar (or not) to how I recovered the other profile info?

Have you copied the entire folder C:\Documents and Settings\username\Application Data\Mozilla\Firefox\ from the old drive?

If not, can you?

If so, make a copy and save that folder just in case.

If so, on the new installation you could take that folder's \Profiles\ folder [with your profiles inside] and its profiles.ini file [delete any other files/folders that may also be in the "Firefox" folder] and replace them with the same-named items from the old failed drive. Note that you will lose what you already have with the new installation/profile!

Your profile folder contains all your personal data and customizations, including search plugins, themes, extensions and their data/customizations, but not plugins.

But if the user logon name on the new installation differs from the old one, any extension that uses an absolute file path in its prefs will have problems. That is easily rectified by changing the file path in prefs.js; keep the line-break formatting intact. Extensions created after the Firefox 2.0 or 3.0 era usually are not a problem, due to changes in the 'rules' for creating extensions, but some really old extensions that have needed only "minor" updates since then may still use absolute paths, although I have not seen that myself since Firefox 3.6 or 4.0.

Note that instead of 'add-ons' I mentioned the four types of add-ons separately. Plugins are counted as add-ons, but they are not installed in the profile [except those mislabeled as a "plugin" when they are installed via an XPI file]; they are installed in the operating system, where Firefox 'finds' them through the registry.

Note: Migration articles may tell you not to reuse the prefs.js file, due to an issue that I feel is easily fixed with a little inspection and editing. My impression is that you can manage that: you have a small shovel in your toolbox, so if you encounter a problem you can do a little digging and fix problems with file paths, now that you have been warned.

Overall, if you go from Firefox 35 to 35, or even Firefox 34 to 35, I don't think you will run into any problems that you cannot handle [I'm crossing my fingers and hoping that I'm not missing something obvious].

As for recovering the 'data' of individual extensions, extension developers have used many ways to store their data and prefs. The original way was to save them in prefs.js or in their own RDF file in the profile folder. As Firefox developed further, developers started using their own files in the profile folder. And since Mozilla started using SQLite database files in Firefox 3.0, Mozilla has extended its own use of SQLite, as have extension developers.

Stylish uses the file stylish.sqlite to store 'styles', but something in the back of my mind tells me that 'the index' may not be in that file with the data. Then again, I may be confusing this with an issue I had with GreaseMonkey a while back, where I copied the gm_scripts folder into a new profile that already had GreaseMonkey installed but no scripts. Those GM scripts worked, but I could not see or modify them; they did not appear in GreaseMonkey's user interface window in Firefox.

-

Not the most up-to-date Firefox

Firefox seems to have been downgraded; every time I update, I get a message that it is not up to date. I can tell just by looking that it is an older version, or at least appears to be one.

Hello KoiMer, you are using Firefox 21, which is the latest version. The message probably shows up only because you are using google.com/firefox as one of your home pages; that page has only been maintained up to Firefox 3 and now displays the warning message you mentioned no matter what browser version you are using. Change the home page to about:home to solve the problem and get the current Firefox home page...

-

Having recently updated Firefox to 7.0.1 (x86 en-GB), I was offered the opportunity to submit performance data. Before reading the "Other Info" bit, I clicked the "Yes" button. When I read the "Other Info" bit, I was directed to:

"

Usage statistics (also known as telemetry). Starting with version 7, Firefox includes a feature, disabled by default, to send Mozilla non-personal usage, performance, and responsiveness statistics about user interface features, memory, and hardware configuration. The only potentially personal data sent to Mozilla when this feature is enabled is IP addresses. Usage statistics are transmitted using SSL (a method of protecting data in transit) and help us improve future versions of Firefox. Once sent to Mozilla, usage statistics are stored in aggregate form and made available to a broad range of developers, including both Mozilla employees and public contributors. Once this feature is enabled, users can disable it in Firefox's Options/Preferences. Simply deselect the "Submit performance data" item.

"

However, I have no such option under Tools/Options on my netbook running XP Home Edition, Service Pack 3.

Thanks for all the help and for all your efforts that make Mozilla what it is.

See Tools > Options > Advanced > General: System Defaults: 'Submit performance data'.

-

Downgrading: will Yosemite work after restoring El Capitan data from TM?

I need to downgrade from El Capitan back to Yosemite; too many applications do not work with El Capitan, including iMovie.

Since I have been on El Capitan for about 2 months, restoring data from Time Machine will include El Capitan application data.

So my question:

Will this El Capitan data from Time Machine clash with the freshly installed OS X Yosemite?

If you restore your system to a point in time where everything worked, that snapshot will have the correct versions of the apps for that OS. Your newer saved projects may be fully functional or incompatible, depending on which applications you worked in and whether those applications changed versions between 10.10 and 10.11; usually that would be third-party apps and those whose version changed during that time. If you have anything like that, save your work and back up before restoring.

Use Time Machine to back up or restore your Mac - Apple Support
