Questions about the size and speed of stored Collections

Hello everyone, while developing a text search engine I was looking for a fast, low-level storage tool. I found Berkeley DB and it seemed to suit my needs. I was already using Java maps in my process, so it seemed logical to use the Collections API.

Database structure (maps):

DOCUMENT MAP

- docKey: a simple integer ID generated in sequence

- docEntry: a small number of properties

TERMMAP

- termKey: a unique string value

- termEntry: a small number of properties

INDEXMAP

- indexKey: two foreign key values, docKey and termKey

- indexEntry: the number of occurrences of this term in the document

There is a secondary index on INDEXMAP for querying by termKey.

I use tuple bindings for the keys and serializable entities for the entries, and I removed the redundant key fields from the serializable entities using the transient modifier.

Here's the algorithm:

The program extracts the terms of a document and adds the document to the DOCUMENT MAP.

For each term, if it is not in the TERMMAP, it is inserted.

If the (document, term) pair is not in the INDEXMAP, it is inserted with a number of occurrences of 1; otherwise its number of occurrences is incremented.
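The loop above can be sketched as follows. This is a minimal Python sketch of the logic, not the Berkeley DB JE Collections API. Plain dicts stand in for the three stored maps, INDEXMAP is keyed by a (docKey, termKey) tuple as described, and the entry contents (the `term_count` field and the empty termEntry) are hypothetical placeholders.

```python
def index_document(doc_id, terms, doc_map, term_map, index_map):
    """Apply the per-document indexing steps; dicts stand in for stored maps."""
    # Add the document (docKey -> docEntry); the entry field is a placeholder.
    doc_map[doc_id] = {"term_count": len(terms)}
    for term in terms:
        # Insert the term into TERMMAP the first time it is seen.
        if term not in term_map:
            term_map[term] = {}  # hypothetical termEntry
        key = (doc_id, term)  # indexKey = (docKey, termKey)
        # One lookup and one write against INDEXMAP per term occurrence.
        index_map[key] = index_map.get(key, 0) + 1


docs, vocab, index = {}, {}, {}
index_document(1, ["db", "log", "db"], docs, vocab, index)
# index is now {(1, "db"): 2, (1, "log"): 1}
```

Written this way, every occurrence of a term costs an update in INDEXMAP. JE is a log-structured store that appends a new record version on each update, so this pattern may be part of why the log grows much faster than the raw text.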

The DOCUMENT MAP is quite small, while TERMMAP and especially INDEXMAP have a huge number of entries.

When testing it, it worked very well and was fast. However, I noticed a few problems that could be critical:

- The log size is very large

1.5 GB of total log for 100 MB of indexed text, whereas the same index (without the secondary index) takes 100 MB as ASCII text.

I tried to reduce the total log size by increasing CLEANER_MIN_UTILIZATION, but it did not reduce it enough.

I thought that maybe I was not storing the right things, but when I display the contents of the maps, everything is fine: no redundancy.

- Storage is too slow

With simple file storage it's very fast, so the bottleneck comes from the DB storage.

I noticed that it is faster to store temporary data in in-memory Java maps (a TreeMap, to have sorted input values) and then add them to the stored maps than to write to the stored map directly.
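The workaround described above (collect in a sorted in-memory map, then write to the stored maps) can be sketched like this. This is a Python sketch under our own assumptions, not JE code. A `Counter` aggregates occurrences for a batch of documents, and the flush writes each distinct (docKey, termKey) pair once, in ascending key order. The `index_store` dict is a hypothetical stand-in for the stored INDEXMAP.

```python
from collections import Counter


def count_batch(docs):
    """Aggregate (docKey, termKey) -> occurrences for a batch of (doc_id, terms)."""
    counts = Counter()
    for doc_id, terms in docs:
        for term in terms:
            counts[(doc_id, term)] += 1
    return counts


def flush_sorted(counts, index_store):
    """Merge a batch into the store in ascending key order; return the write count."""
    writes = 0
    for key in sorted(counts):  # same ordering a TreeMap would give
        index_store[key] = index_store.get(key, 0) + counts[key]
        writes += 1
    return writes


store = {}
batch = count_batch([(1, ["db", "log", "db"]), (2, ["log"])])
n = flush_sorted(batch, store)
# n == 3 writes for 4 term occurrences
```

Two effects plausibly explain the speed-up: there is one write per distinct pair instead of one per occurrence, and the inserts arrive in key order. Because docKey is generated in increasing order, sorted (docKey, termKey) keys behave almost like appends to the B-tree. This has not been measured against JE here, so treat it as a hypothesis to check.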

To conclude:

My final goal is to process a huge amount of data (collections of gigabytes or terabytes), and I'll probably have to use parallel processing. However, if even a simple test gives these results, I fear I'll have to find another way to store my data.

This is the first time I have used Berkeley DB, so maybe I did something wrong. To avoid asking for help for nothing, I tried many configuration changes (log file size, cleaner settings, cache size, disabling transactions), and nothing gave significant results. I followed the Java Collections tutorial and read the Javadoc. Finally, I used the JConsole plugin to look at the statistics. This is my last resort...

If more details are needed, just ask.

Thank you in advance,

Nicolas.

Tags: Database

Similar Questions

-

Envy 17t-j000 - maximum hard drive size and speed

I'm going to put a second hard drive into the empty bay in my Envy 17t-j000.

The Maintenance and Service Guide states:

Hard drives: Supports 6.35 cm (2.5 in) hard drives in 9.5 mm (0.37 in) and 7.0 mm (0.28 in) thicknesses (all hard drives use the same bracket)

Customer-accessible

Serial ATA

Supports the following drives:

● 1 TB 5400 RPM, 9.5 mm

● 750 GB 5400 RPM 9.5 mm

● 500 GB 5400 RPM 9.5 mm and 7.0 mm

Dual hard drive configurations:

● 2 TB: (1 TB 5400 RPM x 2)

● 1500 GB: (750 GB 5400 RPM x 2)

This seems to mean that I cannot use a single 2 TB hard drive and cannot use a 7200 RPM drive.

Is this correct? Does the BIOS not allow a drive larger than 1 TB, or a 7200 RPM drive?

Could I just partition a 2 TB second disk into two 1 TB partitions?

Or does the manual simply mean that HP's standard units don't ship with drives larger than these sizes, and I could actually use a 2 TB drive in the second bay?

It's the latter... the list just says what HP sells; it does not describe a limitation of the system. However, I don't think the market yet offers a 2 TB drive that fits the available space: the 2 TB 2.5-inch SATA drives I've seen are too thick. A 7200 RPM or hybrid SSD drive is no problem.

To add a second drive, you'll need a second hard drive bracket and cable.

http://www.newmodeus.com/shop/index.php?Main_Page=product_info&cPath=2_5&products_id=542

If this is "the answer", please click "Accept as Solution" to help others find it.

-

Newbie question: page size and bindings

Hi all

I have problems with two things (probably many more to come).

(1) In the "Discover Berkeley DB" presentation, it was said that I could change the page size of my database. Where is this configurable?

(2) I read in the documentation that, to store values in a key-value pair, I can use an entity (which I currently do), make the object serializable, or create bindings. My objects currently consist of two integers (ints), a short, a double and a byte[8]. It is not a complex object, so I don't think I need the serial binding with a stored class catalog, or custom bindings. Is that right?

The entity option seems easiest. Is it slower to use, or is there some other drawback?

The serializable option makes it sound like I can only store a single primitive. Is that true?

Please let me know; any help would be greatly appreciated.

Thank you

Julian

Julian,

Page size is a parameter of the C version of Berkeley DB. This is the Java Edition forum, so you may want to post in the forum for the C product, Berkeley DB.

Have you had a chance to read through http://www.oracle.com/technology/documentation/berkeley-db/je/GettingStartedGuide/bindAPI.html? It gives a brief overview of bindings, while a fuller explanation of how to choose a binding is at http://www.oracle.com/technology/documentation/berkeley-db/je/collections/tutorial/collectionOverview.html#UsingDataBindings.

But before you dive too deep into bindings, consider using the Direct Persistence Layer (DPL) API, which lets you store and retrieve Java objects directly in Berkeley DB Java Edition and Berkeley DB without managing bindings explicitly.
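For a sense of scale for Julian's record (two ints, a short, a double and a byte[8]), here is what a compact field-by-field encoding looks like. This is roughly what a custom tuple binding does. The sketch uses Python's standard `struct` module, not JE's `TupleBinding`, and the field names and big-endian layout are our assumptions.

```python
import struct

# Hypothetical layout: int a, int b, short s, double d, byte[8] raw.
# ">" = big-endian, standard sizes, no alignment padding.
RECORD = struct.Struct(">iihd8s")


def encode(a: int, b: int, s: int, d: float, raw: bytes) -> bytes:
    """Pack the fields one after another, as a tuple-style binding would."""
    if len(raw) != 8:
        raise ValueError("raw must be exactly 8 bytes")
    return RECORD.pack(a, b, s, d, raw)


def decode(data: bytes) -> tuple:
    """Unpack the fields in the same order they were written."""
    return RECORD.unpack(data)


blob = encode(7, 42, 3, 0.5, b"\x00" * 8)
# len(blob) == 26: 4 + 4 + 2 + 8 + 8 bytes, with no per-record metadata
```

Standard Java serialization also writes class and field descriptors. JE's stored class catalog exists so those are not repeated in every record, but serialized values still carry extra framing. For a tiny fixed-layout object, a tuple-style binding or the DPL is therefore likely the more compact choice.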

Kind regards

Linda

-

Disk configuration: size and speed?

Following the forum's recommendations, I want to use onboard RAID 0 for the page file and media cache, because the read rate for small media cache files is best on RAID 0. Since I need two drives for it, how big and how fast (in Gbps) is usually sufficient?

Also, regarding the recommended separate disk for exports (and trimmed projects, I think): should I go as fast and as large as I can afford? Or, depending on budget, larger rather than faster, for more storage room?

C: [1 x 300 GB 10K RPM disk] OS, programs - CURRENT

D: [RAID 0, 2 x 7200 RPM disks] page file, media cache (onboard) - NEED

E: [RAID 5, 4 x 1 TB 7200 RPM 3 Gbit/s drives] media previews, projects (LSI/3ware 9750-4i) - CURRENT

F: [1 drive] exports, trimmed projects - NEED

Thanks, NAN

Logical, but I would make sure that the export drive is the same make and model as the drives in your RAID 5.

RAID 5 on a built-in controller alone is very slow, so you could expand the RAID 5 with more disks to increase its speed, then use your export drive for that and get an external drive as the export destination. First see whether the onboard speed is good enough for your application, then decide whether to go the extended-RAID route.

-

Hello

I wanted a script to collect a VM inventory: VM name with location, cluster name, and datastore total size and free space left in the datastore. I have a script, but it shows errors during execution. Any help on this will be appreciated.

Thank you

VMG

Errors:

Get-View : Cannot validate argument on parameter 'VIObject'. The argument is null or empty. Supply an argument that is not null or empty and then try the command again.

At E:\script\VM-DS-cluster.ps1:7 char:20

+ $esx = Get-View <<<< $vm.Runtime.Host -Property Name,Parent

+ CategoryInfo : InvalidData: (:) [Get-View], ParameterBindingValidationException

+ FullyQualifiedErrorId : ParameterArgumentValidationError,VMware.VimAutomation.ViCore.Cmdlets.Commands.DotNetInterop.GetVIView

Get-View : Cannot validate argument on parameter 'VIObject'. The argument is null or empty. Supply an argument that is not null or empty and then try the command again.

At E:\script\VM-DS-cluster.ps1:8 char:24

+ $cluster = Get-View <<<< $esx.Parent -Property Name

+ CategoryInfo : InvalidData: (:) [Get-View], ParameterBindingValidationException

+ FullyQualifiedErrorId : ParameterArgumentValidationError,VMware.VimAutomation.ViCore.Cmdlets.Commands.DotNetInterop.GetVIView

Get-View : Cannot validate argument on parameter 'VIObject'. The argument is null or empty. Supply an argument that is not null or empty and then try the command again.

At E:\script\VM-DS-cluster.ps1:9 char:24

+ $report += Get-View <<<< $vm.Datastore -Property Name,Summary |

+ CategoryInfo : InvalidData: (:) [Get-View], ParameterBindingValidationException

+ FullyQualifiedErrorId : ParameterArgumentValidationError,VMware.VimAutomation.ViCore.Cmdlets.Commands.DotNetInterop.GetVIView

It seems that your copy/paste lost some of the script. I have attached the script.

-

Need to know how to enlarge event tracing buffers

What is the size of your page file? Increase the size and place it in the root of your system drive!

André "A programmer is just a tool that converts caffeine into code" Deputy CLIP - http://www.winvistaside.de/

-

Question about sequence size (anamorphic) and image distortion

If I create a sequence at 1920 x 810, is there a way to bring images into the sequence without them getting distorted? I would prefer to just crop the top and bottom of the image. The original video file would stay as it is; only the sequence size remains 1920 x 810. Is this possible?

Right-click the clip on the timeline and check whether "Scale to Frame Size" is enabled. If so, turn it off. This can be set globally in Edit > Preferences, but that only affects clips as you import them; it does not affect clips already in the project.

This should give the desired effect of cropping the top and bottom, but I'm curious to see how it turns out. If you're going to Blu-ray or another "standard video" format, it is preferable to use the full HD frame size and add black bars to get the look you want.
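The arithmetic behind both options is the same. This is a minimal sketch, assuming 1920 x 1080 source clips placed at 100% scale with "Scale to Frame Size" off. The rows that don't fit in the 810-line sequence are split between top and bottom. The same split gives the height of each black bar if you instead deliver a full HD frame around a 1920 x 810 picture. The function name is ours.

```python
def vertical_split(taller: int, shorter: int) -> tuple:
    """Split the height difference between top and bottom (top gets the floor)."""
    if taller < shorter:
        raise ValueError("first height must be the larger one")
    excess = taller - shorter
    top = excess // 2
    return top, excess - top


# Rows cropped from a 1080-line clip in an 810-line sequence,
# or black-bar height around an 810-line picture in a 1080-line frame.
top, bottom = vertical_split(1080, 810)  # (135, 135)
```

When the difference is odd, the extra row goes to the bottom.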

Thank you

Jeff

-

How much free space should be left on the C: drive before it affects performance and speed?

Hello!

My new PC's hard drive (C:) is 1 TB (Samsung, Core i7 processor, Windows 8.1). It has no other disk or partition. How much of this space is safe to use for storing large files (especially videos, stored in the native Videos library folder) without harming performance and speed?

And if I create another partition (D:) and use it just for storage, will it make much difference compared to the above?

Thank you!

Anna

On Sunday, March 1, 2015 10:59 +0000, AnaFilipaLopes wrote:

And if I create another partition (D:) and use it just for storage, will it make much difference compared to the above?

Planning your Partitions

The Question

Partitions: how many should I have on my hard drive, what do I use each of them for, and what size should each one be? It's a common question, but unfortunately it doesn't have a single, simple answer that's right for everyone. A lot of people will respond with the way they do it, but their response isn't necessarily best for the person asking (in many cases it isn't even right for the person responding).

Terminology

First, let's rethink the terminology. Some people ask "Should I partition my drive?" That's the wrong question, because the terminology is a little confusing. Some people think that the word "partition" means dividing the drive into two or more partitions. That's not correct: to partition a drive is to create one or more partitions on it. You must have at least one partition to use a drive at all. Those who think they have an unpartitioned drive actually have a drive with only one partition on it, normally called C:. The choice you have is whether to have more than one partition, not whether to partition at all.

A bit of history

Back before Windows 95 OEM Service Release 2 (also known as Windows 95b) was released in 1996, all MS-DOS and Windows hard drives were formatted with the FAT16 file system (except for very tiny ones, which used FAT12). Because only 16 bits were used for addressing, FAT16 has a maximum partition size of 2 GB.

Hard drives larger than 2 GB were rare at the time, but if you had one, you had to have multiple partitions to use all the available space. But even if your drive was not larger than 2 GB, FAT16 created another serious problem for many people: the cluster size was larger if you had a larger partition. Cluster sizes increased from 512 bytes for a partition no larger than 32 MB all the way up to 32 KB for a partition of 1 GB or more. The larger the cluster size, the more space is wasted on a hard drive.
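The cluster arithmetic in this section can be sketched in a few lines of Python. This assumes the commonly published default FAT16 cluster-size table (with simplified boundaries) and the rule of thumb used here that each file wastes about half of its last cluster. The function names are illustrative, not from any tool.

```python
# Approximate default FAT16 cluster sizes:
# (partitions smaller than this many MB, cluster size in bytes).
FAT16_CLUSTERS = [
    (32, 512), (64, 1024), (128, 2048), (256, 4096),
    (512, 8192), (1024, 16384), (2048, 32768),
]


def fat16_cluster_size(partition_mb: int) -> int:
    """Default cluster size for a FAT16 partition of the given size."""
    for limit_mb, cluster in FAT16_CLUSTERS:
        if partition_mb < limit_mb:
            return cluster
    raise ValueError("FAT16 partitions are limited to 2 GB")


def allocated_bytes(file_size: int, cluster: int) -> int:
    """Disk space a file occupies: its size rounded up to whole clusters."""
    return -(-file_size // cluster) * cluster


def estimated_slack(num_files: int, cluster: int) -> int:
    """Rule of thumb: on average each file wastes half of its last cluster."""
    return num_files * cluster // 2


big = fat16_cluster_size(2000)  # one 2 GB partition: 32 KB clusters
small = fat16_cluster_size(500)  # partitions under 512 MB: 8 KB clusters
waste_big = estimated_slack(10_000, big)  # 163,840,000 bytes, about 160 MB
waste_small = estimated_slack(10_000, small)  # a quarter of that
```

Under these assumptions, 10,000 files on a single 2 GB partition waste about 160 MB. Keeping partitions under 512 MB cuts that to a quarter.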

Waste arises because space for all files is allocated in whole clusters only. If you have 32 KB clusters, a 1-byte file takes 32 KB, a file one byte larger than 32 KB takes 64 KB, and so on. On average, each file wastes about half of its last cluster.

So large partitions create a lot of waste (called "slack"). With a 2 GB FAT16 drive in a single partition, if you have 10,000 files, each wasting half of a 32 KB cluster, you lose about 160 MB to slack. That's a significant part of a drive that probably cost more than $400 in 1996: around $32 worth.

So what did people do? They divided their 2 GB drives into two, three or more logical drives. Each of these logical drives was smaller than the real physical drive, had smaller clusters, and so had less waste. If, for example, someone was able to keep all partitions below 512 MB, the cluster size was only 8 KB, and the waste was reduced to a quarter of what it would otherwise be.

People partitioned for other reasons too, but back in the days of FAT16, this was the main reason to do so.

The present

Three things have changed radically since 1996:

1. The FAT32 and NTFS file systems came along, allowing larger partitions with smaller clusters and therefore much less waste. With NTFS, the default cluster size is 4 KB for all partitions up to 16 TB.

2. Hard drives have become much larger, often more than 1 TB (1,000 GB).

3. Hard drives have become much cheaper. For example, a 500 GB drive can be bought today for about $50. That's 250 times the size of the typical 2 GB drive of 1996, at about one-eighth of the price.

Together, these changes mean that the old reason for having multiple partitions, avoiding considerable wasted disk space, is gone. The amount of waste is much less than it used to be, and the cost of that waste is much less. For all practical purposes, almost nobody needs to be concerned about slack any more, and it should no longer be a consideration when planning your partition structure.

What partitions are used for today

There are a variety of ways people set up multiple partitions these days. Some of these uses are reasonable, some are debatable, and some are downright bad. I'll discuss a number of common partition types below.

1. A partition for Windows only

Most people who create such a partition do so because they believe that if they ever have to reinstall Windows, at least they won't lose their data and won't have to reinstall their applications, because both are safe on other partitions. The first of these thoughts is false comfort and the second is downright wrong. See the discussion of partition types 2 and 4 below to find out why.

Also note that over the years, a lot of people find that a Windows partition that started out the right size turns out to be too small. For example, if you have such a partition for Windows and later upgrade to a newer version of Windows, you may find that your Windows partition is too small.

2. A partition for installed programs

This normally goes hand in hand with partition type 1, a partition for Windows only. The idea that, if you reinstall Windows, your installed application programs are safe because they are on another partition is simply not true. That's because all installed programs (with the occasional trivial exception) have pointers into Windows, in the registry and elsewhere, as well as associated files buried in the Windows folder. So if Windows goes, the pointers and the files go with it. Since programs have to be reinstalled whenever Windows is, the reasoning behind a separate partition for programs doesn't work. In fact, there is almost never a good reason to put Windows and application software on separate partitions.

3. A partition for the page file

Some people mistakenly think that putting the page file on a separate partition will improve performance. That's also false; it doesn't help and often hurts performance, because it increases head movement back and forth between the page file and other frequently used data on the drive. For best performance, the page file should normally be on the most-used partition of the least-used physical drive. For almost everyone with a single physical drive, that's the same partition Windows is on, C:.

4. A partition for backups of other partitions

Some people make a separate partition to store backups of their other partition or partitions. People who rely on such a "backup" are fooling themselves. It is only very slightly better than no backup at all, because it leaves you vulnerable to simultaneous loss of the original and the backup from many of the most common dangers: head crashes and other types of drive failure, serious power glitches, nearby lightning strikes, virus attacks, even theft of the computer. In my opinion, a secure backup must be on removable media and not stored in the computer.

5. A partition for data files

Above, when I discussed putting Windows on a partition of its own, I pointed out that separating data from Windows is false comfort if it is done with the idea that the data will be safe if Windows ever has to be reinstalled. I call it false comfort because I'm afraid many people will rely on this separation, think their data is safe there, and so not take steps to back it up. In truth, the data is not safe there. Having to reinstall Windows is just one of the dangers to someone's hard drive, and probably not even the most likely one. This kind of "backup" falls into the same category as a backup to another partition: it leaves you vulnerable to simultaneous loss of the original and the backup from many of the most common dangers, which affect the entire physical drive, not just a particular partition. Safety comes from a solid backup regimen, not from how you partition.

However, for some people it may be a good idea to separate Windows and programs on the one hand from data on the other, putting each of the two types into separate partitions. I think most people's partitioning scheme should be based on their backup scheme, and backup plans are generally of two types: whole-drive imaging or data-only backup. If you back up only data, the backup is usually made easier by having a separate data-only partition: you can easily back up just that partition, without having to collect pieces from here and there. However, for those who back up by creating an image of the entire drive, there is usually little, if any, benefit in giving data a partition of its own.

Furthermore, in all honesty, I must point out that there are many very respected people who recommend a separate partition for Windows, whatever your backup plan. Their arguments haven't convinced me, but there are clearly two different views here.

6. A partition for image files

Some people like to treat pictures and videos as something separate from other data files and create a separate partition for them. To my mind, an image is simply another type of data, and there is no advantage in doing so.

7. A partition for music files

The comments above about image files also apply to music files. They are just another type of data and should be treated just like any other data.

8. A partition for a second operating system to dual-boot to

For those who run multiple operating systems (Windows Vista, Windows XP, Windows 98, Linux, etc.), a separate partition for each operating system is essential. The issues here are beyond the scope of this discussion, but I'll simply note that I have no objection to any of these partitions.

Performance

Some people have multiple partitions because they believe it somehow improves performance. That's not correct. The effect is probably small on modern computers with modern hard drives, but if there is any effect, it's the opposite: more partitions mean poorer performance. That's because normally no partition is full, so there are gaps between them, and it takes time for the drive's read/write heads to cross those gaps. The closer together all the files are, the faster access to them will be.

Organization

I think a lot of people overpartition because they use partitions as an organizational structure. They have a keen sense of order and want to separate the apples from the oranges on their drives. Yes, separating different types of files onto partitions is an organizational technique, but so is separating different types of files into folders. The difference is that partitions are static and fixed in size, while folders are dynamic, changing size automatically to meet your changing needs. That usually makes folders a much better way to organize, in my opinion.

Certainly, partitions can be resized when necessary, but except in the latest versions of Windows, that requires third-party software (and the built-in ability to do so in Windows is primitive compared to third-party solutions). Such third-party software normally costs money, and no matter how stable it is, resizing affects the entire drive, with the risk of losing everything. Plan your partitions well in the first place and no repartitioning will be necessary. The need to repartition usually arises from overpartitioning in the first place.

What often happens when people organize with partitions instead of folders is that they miscalculate how much room they need on each partition. Then, when they run out of space on the partition where a file logically belongs while having plenty of space on another, they simply save the file to the "wrong" partition. Paradoxically, then, this kind of partition structure results in less organization rather than more.

So how should I partition my drive?

If you've read what came before, my conclusions won't come as a surprise:

1. If your backup scheme is to image the entire drive, have just one partition (usually C:).

2. If you back up only data, have two partitions: one for Windows and installed application programs (usually C:), the other for data (usually D:).

Except for multiple operating systems, there is rarely any advantage to having more than two partitions.

-

Buffer size and synchronization problems with the cDAQ-9188 and Visual Basic

Hi all, I have a cDAQ-9188 with an NI 9235 quarter-bridge strain gauge acquisition module.

I would appreciate help understanding how synchronization and buffering work.

I do not use LabVIEW: I'm developing in Visual Basic, Visual Studio 2010.

I developed my app from the NI AcqStrainSample example. The sequence I found there is:

- CreateStrainGageChannel

- ConfigureSampleClock

- Create an AnalogMultiChannelReader

- Start the task

Once the task has started, a timer in the VB application triggers the read function. This function uses:

- AnalogMultiChannelReader.ReadWaveform(-1)

I have no problem with CreateStrainGageChannel: I set up 8 channels and the other settings.

Regarding ConfigureSampleClock, I have some doubts. I want continuous acquisition, so I set the signal source to the internal clock, the rate to 1000, and continuous sample mode, and I set the buffer size using the "samples per channel" parameter.

What I wonder is:

(1) Can I set any buffer size? Is it limited by the hardware of the module (9235) or the chassis (9188)?

(2) Can I read the buffer, let's say, once per second and read all the samples stored in it?

(3) Do I have to implement my own buffer for reading the acquired data, or is it not necessary?

(4) Since I don't want to lose packets: is there a timestamp or packet index I can use to check for this?

Thank you very much for the help

Hi Roberto-

I will address each of your questions:

(1) can I put any kind of buffer size? That the limited hardware of the module (9235) or DAQ (9188)?

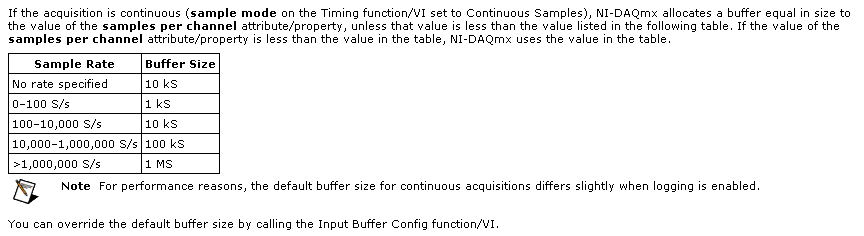

The samplesPerChannel parameter has different features according to the synchronization mode, you choose. If you choose finished samples the parameter samplesPerChannel determines how many sample clocks to generate and also determines the exact size to use. But if you use streaming samples, the samplesPerChannel and speed settings are used together to determine the size of the buffer, according to this excerpt from the reference help C DAQmx:

Note that this buffer is a buffer software host-side. There can be no impact on the material available on the cDAQ-9188 or NI 9235 buffers. These devices each have relatively small equipment pads and their firmware and the Driver NOR-DAQmx driver software transfer data device to automatically host and the most effective way possible. The buffer on the host side then holds the data until you call DAQmx Read or otherwise the input stream of service.
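As we recall the NI-DAQmx help topic on how buffer size is determined, continuous input uses the larger of your samplesPerChannel value and a default chosen from the sample rate. Please verify this against the help for your driver version; the table below is an assumption, not a quote. The Python sketch shows that rule. The function names are ours and this is not DAQmx API.

```python
# Assumed default host-buffer sizes (samples per channel) for continuous input,
# as (highest sample rate in S/s this row applies to, default buffer size).
DEFAULT_BUFFER = [(100, 1_000), (10_000, 10_000), (1_000_000, 100_000)]


def default_buffer_size(rate_hz: float) -> int:
    """Default buffer for a given rate under the assumed table."""
    for max_rate, samples in DEFAULT_BUFFER:
        if rate_hz <= max_rate:
            return samples
    return 1_000_000


def effective_buffer_size(rate_hz: float, samples_per_channel: int) -> int:
    """Continuous mode: a requested size smaller than the default is ignored."""
    return max(samples_per_channel, default_buffer_size(rate_hz))


def seconds_buffered(rate_hz: float, samples_per_channel: int) -> float:
    """How long the reader can fall behind before the host buffer fills."""
    return effective_buffer_size(rate_hz, samples_per_channel) / rate_hz


# At 1000 S/s, asking for 2000 samples still yields a 10,000-sample buffer,
# i.e. about 10 s of headroom per channel.
headroom = seconds_buffered(1000, 2000)
```

The buffer is sized per channel, so with 8 channels the host holds eight times as many samples in total.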

(2) Can I read the buffer, let's say, once per second and read all the samples stored in it?

Yes. You would achieve this by choosing a DAQmx Read size equal to the sample rate (one second of data), or the corresponding multiple of it for other read intervals.

(3) Do I have to implement my own buffer for reading the acquired data, or is it not necessary?

No, you should not need to implement your own buffer. The host-side DAQmx buffer will hold the data until you call the DAQmx Read function. If you want to read from this buffer less frequently, you should consider increasing its size to avoid overflowing it. Which brings me to your next question...

(4) Since I don't want to lose packets: is there a timestamp or packet index I can use to check for this?

DAQmx will tell you if any samples are lost. The default behavior is to stop the data stream and report an error if the host-side DAQmx buffer overflows (for example, if your application does not pull samples out of this buffer, on average, at a rate equal to or faster than they are acquired).

If, for some reason, you want DAQmx to ignore overflow conditions (for example, if you want to sample continuously at a high rate but are only interested in retrieving the most recent subset of samples), you can use the DAQmxSetReadOverWrite property and set it to DAQmx_Val_OverwriteUnreadSamps.
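To make the two overflow behaviours concrete, here is a toy model of a host-side buffer in Python. It is not the DAQmx API: `HostBuffer`, `acquire` and `read` are hypothetical names, and only a single channel is modelled. `overwrite=True` plays the role of DAQmx_Val_OverwriteUnreadSamps. Reading rate × interval samples per timer tick keeps up with the acquisition, as in the once-per-second scheme above.

```python
from collections import deque


class BufferOverflow(Exception):
    """Raised when unread samples would be overwritten (default behaviour)."""


class HostBuffer:
    """Toy single-channel model of a host-side acquisition buffer."""

    def __init__(self, capacity: int, overwrite: bool = False):
        self.capacity = capacity
        self.overwrite = overwrite
        self.samples = deque()
        self.discarded = 0  # unread samples lost in overwrite mode

    def acquire(self, new_samples):
        for sample in new_samples:
            if len(self.samples) == self.capacity:
                if not self.overwrite:
                    raise BufferOverflow("reader fell behind; acquisition stopped")
                self.samples.popleft()  # drop the oldest unread sample
                self.discarded += 1
            self.samples.append(sample)

    def read(self, count: int) -> list:
        count = min(count, len(self.samples))
        return [self.samples.popleft() for _ in range(count)]


rate, interval = 1000, 1.0
per_read = round(rate * interval)  # one second of data per timer tick
buf = HostBuffer(capacity=10_000)
got = []
for tick in range(5):
    buf.acquire(range(tick * rate, (tick + 1) * rate))
    got.extend(buf.read(per_read))
# got == list(range(5000)): nothing was lost

latest = HostBuffer(capacity=3, overwrite=True)
latest.acquire(range(5))  # keeps only the 3 newest samples: 2, 3, 4
```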

I hope this helps.

-

Question about SQL and VMDK locations

Hello world. I'm kind of new here, so please be gentle.

I'm confused about the file locations for SQL.

At the moment I have one SQL Server VM with 3 VMDK files, running a hefty 100 GB SQL database.

My disks are as follows:

1 Operating system

2 DB

3 Logs

They are all thick-provisioned and all stored with the virtual machine, as set in the virtual machine configuration.

It lives in an HA/DRS cluster, and we use both.

The question boils down to performance.

If my VM is stored on DATASTORE_A and the VM folder on this datastore has all the VMDK files in it, it's safe to say they are all on the same disk as far as vSphere is concerned.

Now, to get the best performance, I want to move my VMDK files to separate datastores (with faster disks) to avoid drive contention on my current datastore. What is the right way to go about this?

How do you move your VMDKs to other datastores and take advantage of those datastores' performance? We do not use DTS at all, although we do use HA/DRS in our cluster... but I don't think that matters in this case; it's just an FYI.

What do you do to get your SQL servers running well in VMware?

Seems like a basic question.

The answer seems easy: separate the disks so that they don't contend.

They're separated virtually, as separate VMDKs, but the underlying datastore has a single speed and they all live on it, so... how do I separate them at that level for best performance?

Also... once I separate them, how can I keep my sanity, knowing my VMDKs are no longer with the virtual machine? This kind of makes a mess. Of course, performance takes precedence over my mental health.

Thank you all

1. If I use Storage vMotion with the advanced options to move my other VMDK files to another datastore, will it create a folder structure based on my VM name and place the VMDKs inside? (I guess I could just test it and see.)

Yes, and the VM configuration file (.vmx) will be modified to point to the new location of the virtual disk. You can check this in the virtual machine properties editor once the Storage vMotion completes.

2. Regarding the disk controller, I assume you mean a virtual controller per VMDK, set in the .vmx / virtual machine settings, and not a physical controller on the hardware? (I'm pretty sure I know the answer here, but I just want to be clear.)

Yes, the virtual controller is correct.

If so, which type of controller? LSI Logic, or would they all benefit from a paravirtual adapter?

As long as your operating system supports it, go with PVSCSI every time, especially for the log and data file controllers.

-

Slideshow and speed issues: PDF raster vs. vector

We are currently using v24 to create PDF-based multi-rendition articles at 1024 x 768 for all iPad versions. As mentioned in other posts, the base PDF is rendered at 108 dpi and buttons/slideshows are rendered as 72 dpi PNG files. The image quality of the buttons/slideshows looks mediocre on retina screens because of their low resolution.

Our articles are made up of thumbnails on the base PDF that can be used to display a larger version of the image in a slideshow. The enlarged images are bitmap photographs. There are two major problems with this type of interface design:

(1) The file size is very large, because the slideshow items are saved in PNG format.

(2) The image quality is poor, because they are rendered at 72 dpi.

Our solution is to use vector format instead of raster for the overlays. The slideshow images are then embedded in an additional PDF file that floats over the base PDF when the user triggers it. Embedded images are saved as JPG at 108 dpi. This fixes the two problems I mentioned above, but there is a drawback: speed. Many online resources state that vector content takes longer to load than raster content. So far, we have not noticed performance issues. That said, we have completed only a fraction of the overall content in the folio, and we do not want to paint ourselves into a corner.

Here's my question: where will we see an impact on processing time from vector overlays? Are there delays when a user is swiping through pages and the app attempts to preload adjacent pages into memory? Or when a user taps a button that triggers the slideshow?

Thanks for your help

Sidenote: in the analysis process, we have been copying the content from our folios of an iPad to a local HD using xcode. It is interesting to note that when scanning images are open in Photoshop, they are 109 dpi not 108.

When you place the images in a slideshow of vector overlay change you display experience. You will see a version low resolution of each image show first, then the high version plug-in software component resolution. Many publishers are not like that. Expand the side folio overal too, as we incorporate the image full size and lower quality thumbnails.

Unless you are creating a Single Edition app, you must publish two renditions, one SD and one HD. Create your designs as 1024 x 768 InDesign documents and place images in slideshows that are large enough to look good on a Retina iPad. Use a raster slideshow.

Then use the source InDesign documents to create articles in two folios: one at 1024 x 768 and the other at 2048 x 1536. The tools will intelligently scale the slideshow images according to the folio size. If you have a sidecar.xml file, creating the second rendition in the Folio Builder panel is very fast: you can create an empty 2048 x 1536 PDF folio and import all the finished InDesign articles at once.

Publish both folios and you're done: nice content on SD devices, nice content on HD devices.

Neil

-

A way to change the font, size and bold on every page?

Because of the problems described here https://support.mozilla.org/en-US/questions/1012057?esab=a&s=&r=0&as=s I think I need something (an extension, perhaps) that can detect and change the font, its size and bold text on every page.

Is there something like this?

Maybe also for the bookmarks bar...

You can use the NoSquint extension to set the font size (text/page zoom) and the text color on web pages.

-

Firefox "Tools/Options" always had an option to back up and restore my saved passwords to an XML file, but I can't find this option anymore, and questions about saved passwords on this site refer to a 'db' file instead of 'xml'. What about the 'export-passwords-date.xml' files? How can I restore the saved passwords that I backed up years ago? Any comments or advice are greatly appreciated.

Use Firefox add-ons.

Advanced: back up your profile. Mozilla Firefox stores all your personal settings, such as bookmarks, passwords and extensions, in a profile folder on your computer.

-

Icons change size and move between startups... Why?

I move and resize the icons on my desktop... then, if I shut down and restart the computer, the icons are a different size and in a different location from where I put them. What setting changed to make that happen? I am the only user on the computer. Vista Home Premium.

Hello

I would suggest that you try these methods and check.

Method 1:

Run an SFC scan and check whether the issue persists.

How to use the System File Checker tool to fix missing or corrupted system files on Windows Vista or Windows 7

http://support.Microsoft.com/kb/929833

Method 2:

Check whether you face the same issue in a new user account or a different administrator account. You can create a new user account on your computer and then check.

Create a new user account:

http://Windows.Microsoft.com/en-us/Windows7/create-a-user-account

If you don't face the same issue in the new user account, follow the steps at the link below to fix a corrupted user profile:

http://Windows.Microsoft.com/en-us/Windows7/fix-a-corrupted-user-profile

-

I don't know how to change the size and quality of the text on the screen in Windows 7

I just installed Windows 7, but I don't know how to change the size and quality of the text on the screen. I tried all the usual things (resolution, icon size, DPI, etc.) but nothing gives me what I want. The "wonderful" ClearType thing is just terrible. When I go through its 4 steps, none of the text samples make the text any better. All the options are terrible, so the result on screen is terrible too. When I make anything bigger, I don't get a full screen: the Start button disappears, or I can't see the small close cross at the top. Any suggestions to improve the situation? I should mention that my screen/monitor is from 2006. Do I need to buy a new one? How much is this Windows 7 going to cost me? Or maybe I should go back to XP!

I sincerely thank all you smart "technicians" for all the answers you have always given me and everyone else who asks questions. I'm not very savvy with all this new technology, as I came to it very late: I am now in my 60s and only started using a PC after I turned 50... In any case, I asked a question about screen resolution and text size and received a lot of good advice from all of you. I had recently switched from XP to Windows 7. However, the fix came from 'Cyberking' here in Portugal: they went to the advanced settings, Monitor tab, and changed the screen refresh rate from 75 to 60 Hertz. Very basic, and the first question the technician (a lady) asked was "What size is your screen?" Naturally, with a screen smaller than any 'techie' would consider usable, solving the problem was simple and straightforward, without me having to stretch my knowledge and skills by downloading all sorts of weird (to me) programs or doing funny stuff in the BIOS or elsewhere. I am sending you this comment so you realize that the simple solution is not always a bad one, and maybe we should try the easiest fixes first and then work up to the more difficult ones.

Just so you all know: my screen resolution is now perfect, and the text on the screen is clear and easy to read, even though the text and icons are small. Clarity is all!

Thank you all once again! Best regards, Blondie blue.

Maybe you are looking for

-

Problem: exception occurred in the external code called by a Call Library Function Node

Hello, I am a complete newbie in LabVIEW and need help. I have been running reliability tests and get this error after about 2000 cycles. The code sends a digital output to a cylinder that drives the actuator downward, then reads a signal from the thing I'm te

-

Why do the icons on my desktop move? Windows 7

My icons keep moving when I restart my computer running Windows 7. It has never done this before. Recently, I had a Trojan virus on my computer. I tried a system restore twice, but it failed both times, asking me to turn off my antivirus. It seems that I got r

-

Problem writing/reading an XML file

Hello world, I'm using the ksoap library to access my web service. If everything is OK, my web service returns an XML file, and I then use this file to show some information in my application. In the simulator, everything works perfectly (BB simulator_5.0.0.411_

-

Stereo Mix is available, but no sound in Windows 8

I have a problem with Stereo Mix on a new HP desktop computer running Windows 8. Stereo Mix is set as the default device on the Recording tab of the Sound control panel. When I play something on the computer (for example a .wav file) there is no indication t

-

Premiere Pro CC 2014 error: "Premiere Pro could not find any capable video play modules"

Hello, I'm having a problem with Premiere Pro CC 2014. Whenever I try to open it, it displays an error saying "Premiere Pro could not find any capable video play modules". On the start screen, while it is loading, it gets stuck at loading "ImporterQuickT