Changes to XML data not reflected in the online SWF file

Hi friends, my SWF, embedded in an online page, loads an XML file that stays permanently on the server, at regular time intervals.

If I make any changes to the XML data, they are not reflected on my online page, because it accesses a cached (offline) copy of the XML content.

How can I avoid this? I want my page to reflect changes immediately when I edit my XML file.

Is there any way to clear the cached content in ActionScript or ASP?

* I gave the full server path to load the XML file in my ActionScript.

Thanks in advance...

Hello

Just add a changing variable to the URL of the XML file:

xml.load("Data.xml?foo=" + getTimer());

This way the URL is never the same, so the XML is reloaded each time. You can no longer test the movie locally, because it throws file-not-found errors, but on a server it works fine.
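The same cache-busting idea, independent of ActionScript: append a query parameter whose value changes between requests, so any cache sees a new URL. A minimal Python sketch, assuming the parameter name `foo` from the answer and using wall-clock milliseconds instead of `getTimer()`; `cache_busted` is a hypothetical helper, and it also copes with URLs that already carry a query string.

```python
import time
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def cache_busted(url, param="foo", stamp=None):
    """Return `url` with a query parameter whose value changes on every call,
    so a browser or Flash Player cache sees a new URL each time."""
    if stamp is None:
        # Wall-clock milliseconds; the ActionScript line uses getTimer() instead.
        stamp = int(time.time() * 1000)
    parts = urlsplit(url)
    # Keep any existing query parameters, but replace an old cache-busting value.
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != param]
    query.append((param, str(stamp)))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))


print(cache_busted("Data.xml", stamp=123))                       # Data.xml?foo=123
print(cache_busted("http://example.com/Data.xml?v=2", stamp=5))  # http://example.com/Data.xml?v=2&foo=5
```

A static file server normally ignores the extra parameter, which is why the trick works without any server-side change.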

see you soon,

blemmo

Tags: Adobe Animate

Similar Questions

-

Why does Lightroom change the file creation date? (Mac OS)

I find that Lightroom changes the creation date of existing files when it embeds metadata. I noticed that this behavior differs from Bridge, which manages to write metadata changes to the file without affecting the creation date, changing only the modification date.

I have read in other threads that it doesn't matter anyway, because moving files between disks writes a new file and therefore sets a new creation date. That may be true on some systems (I don't know), but it is certainly not the case on Mac OS. For example, I have files dating back to at least 1998 that have been moved from disk to disk to disk but still retain their original creation date, which is as it should be. For many of my older images there seems to be no recognizable embedded capture date anyway, so the file creation date is the only timestamp.

It seems quite logical that a file's modification date should be updated to reflect that the file has been modified (for example, when adding metadata), but it also makes sense that the file's creation date should be kept as the date the file was created. Setting both to the same date seems a needless loss of information.

Some would say that adding metadata to a file creates a new file, so the creation date must be updated to reflect this. But I would argue that the creation date should be what a normal user would consider the creation date. We do not expect the creation date to change simply because a file is rewritten to disk (for example, during defragmentation), however technically accurate it would be to call it a newly created file. And the difference in behavior between Bridge and Lightroom shows that there is some confusion on this subject.

Photo software generally ignores the operating system's file-created and file-modified dates, and when it does not ignore them, it usually treats them in a non-standard way that varies from program to program. Rather than rely on operating-system file dates, the photo industry has defined metadata fields for all sorts of photo-specific dates: capture date/time (when the shutter button was pressed), digitization date/time (when a film or print image was scanned), the date/time software altered the image or metadata, and GPS date/time. While some photo software will attempt to preserve the file-created and file-modified dates, many programs and web services will not (including, but not limited to, LR). In addition, many file utilities do not always preserve the created and modified dates when you move, copy, back up, and restore files.

Therefore, if you care about photo dates and times (as I do), I highly recommend that, no matter which photo software you use, you store capture dates in your pictures' industry-standard metadata, using the tools provided by LR and other photo software. That way, as you migrate from program to program over the years, you are more likely to preserve that important metadata.

For old photos and scans without that metadata, when you first import them into LR, LR will assume the capture time is the file's modification time. You can have LR write the capture date into the file's metadata by selecting the photos, choosing Metadata > Edit Capture Time, clicking OK, and then choosing Metadata > Save Metadata to File. You can do it in batch: select all your old photos, choose Metadata > Edit Capture Time, click OK, then choose Metadata > Save Metadata to File. LR will set each file's capture time to its modification time. If you want to set the capture time to the file's creation time instead, select that option in the Edit Capture Time window before clicking OK. But beware that many Windows and Mac file utilities do not preserve the creation time even when they retain the modification time, so your files' creation times may already be wrong. And of course, make sure your backups are up to date and valid before applying any file procedure you aren't familiar with.

But if you really want to use non-standard file dates to keep your metadata information, and you want Adobe to change LR accordingly, please give your opinion and vote in the official Adobe feedback forum: Bug report: Lightroom modifies the image files creation date. This forum is a user forum in which Adobe rarely participates, but Adobe has said they read every post in the feedback forum (and sometimes they respond).

-

How can I change a file's creation date in LabVIEW 2012?

I want to change the format of data stored in text files, but I want the file dates to stay the same. Currently, my program creates the files with new dates.

To my knowledge, LabVIEW has no native functions to do this.

On Windows, you can use the .NET functions to get/set CreationTime, LastAccessTime, and LastWriteTime.

See the attached VI (LabVIEW 8.6).
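The .NET approach is Windows-specific. As a language-neutral illustration of the same idea (read the timestamps, rewrite the file, then put the timestamps back), here is a minimal Python sketch; `rewrite_preserving_times` is a hypothetical helper. Note that `os.utime` only restores the access and modification times; it cannot set the creation time, which on Windows still needs something like the .NET `File.SetCreationTime` call the answer refers to.

```python
import os
import tempfile


def rewrite_preserving_times(path, transform, encoding="utf-8"):
    """Rewrite a text file through `transform`, then restore its access and
    modification times. The creation (birth) time is NOT restored:
    os.utime cannot set it."""
    st = os.stat(path)  # remember the original timestamps first
    with open(path, "r", encoding=encoding) as f:
        text = f.read()
    with open(path, "w", encoding=encoding) as f:
        f.write(transform(text))
    # Put back atime/mtime with nanosecond precision.
    os.utime(path, ns=(st.st_atime_ns, st.st_mtime_ns))


if __name__ == "__main__":
    # Usage: switch a file from comma- to tab-separated values, keeping its dates.
    with tempfile.TemporaryDirectory() as d:
        p = os.path.join(d, "data.txt")
        with open(p, "w", encoding="utf-8") as f:
            f.write("1,2,3\n")
        os.utime(p, (1_000_000_000, 1_000_000_000))  # pretend the file is from 2001
        rewrite_preserving_times(p, lambda s: s.replace(",", "\t"))
        print(os.stat(p).st_mtime)  # 1000000000.0
```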

-

Windows Explorer in Windows 7 suddenly changes the Date Modified attribute of files

I've been migrating from XP Pro to Win 7 over the last month, and during this process I came across a disturbing behavior in Windows Explorer in Windows 7.

In Windows 7 (and, I hear, Vista as well), Windows Explorer will sometimes change a file's Date Modified when you drag it to a new location. I spent hours researching this issue. In some discussions, participants said this is reasonable, since copying a folder with files creates a new folder and new files, so a new Date Modified is warranted. I can understand that as a basis for changing the Date Created; this was the behavior under XP, and I think under NT as well. However, changing the Date Modified is a fundamental departure from how file systems have behaved in the past, and it is also a serious departure from common sense. At the very least, it makes it impossible to find or organize files by modification date, since the original Date Modified (the date the actual content of the file was last changed) is lost. It also seriously undermines finding duplicate files by this attribute, when simply copying a file modifies it.

Changing the folder view options can prevent this behavior (Folder Options > View: check "Always show icons, never thumbnails"). In addition, making a file read-only will also stop this change of Date Modified. However, neither option provides a viable solution. First, changing the display option prevents you from seeing thumbnails of image files. Changing it back after the copy causes the copied files' Date Modified to change to their Date Created. The same is true for the read-only attribute (removing it causes the Date Modified to change), not to mention that this strategy is often impractical to apply.

I also note that this behavior is not consistent everywhere. Files copied from a shared disk on a networked PC do not seem to show this behavior. But files and folders transferred using Dropbox (www.dropbox.com) do show the unwanted behavior. Worse still, the behavior was not consistent within a folder. I transferred a folder of .eml files from 2007 via Dropbox. In most tests, once the folder had been dragged and dropped onto the Win 7 machine (whether copied or moved), the Date Modified of the files was changed to the date and time they were dropped. However, in one case, two of the files kept their Date Modified. Clicking on the file or even opening it to read made no difference; the Date Modified remained unchanged. I don't really know why this happened in this instance, given that these same files had their Date Modified changed in previous tests.

Frankly, I'm puzzled and dismayed that this has not become a bigger issue. It doesn't take much imagination to see how this problem might affect users archiving old e-mail files such as .eml, and anyone copying older files who later needs to search or sort them by Date Modified. There are workarounds that I know of, such as compressing the files before copying them or using Robocopy with the appropriate options, but they are much less practical than drag and drop. Furthermore, drag and drop is now so ingrained in users that it is their natural choice for copying or moving files. Once the Date Modified is lost, it cannot be recovered without restoring the files from backup, which again is an unrealistic and time-consuming option.

I think this is a serious problem that Microsoft needs to address quickly. Good design keeps things logical and mitigates the factors that can lead to human error; the behavior above fails on both counts. Has anyone found a solution for this (maybe a registry change), or does anyone know if and when Microsoft plans to address it?

I've just dealt with the same problem when copying the entire contents of my hard drive, and found an impressive (and free :-)) Microsoft utility called RichCopy that solves this problem and makes it much easier to copy a large number of files and folders :-))

Here is a link to the Microsoft TechNet Magazine article describing the tool, which also has a download link: http://technet.microsoft.com/en-us/magazine/2009.04.utilityspotlight.aspx
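Besides RichCopy and the Robocopy options mentioned in the question, a script can copy files while carrying the modification time across. A minimal Python sketch (not something from the thread) using `shutil.copytree`, whose default copy function `shutil.copy2` copies the modification time along with the data; `mismatched_mtimes` is a hypothetical helper for checking the result. Creation-time handling differs by platform, so this sketch makes no claim about it.

```python
import os
import shutil


def copy_tree_keep_mtime(src, dst):
    """Copy a folder tree. copytree's default copy_function is shutil.copy2,
    which copies file data plus metadata such as the modification time."""
    shutil.copytree(src, dst)  # equivalent to copy_function=shutil.copy2


def mismatched_mtimes(src, dst):
    """Return the relative paths of files whose modification time differs
    between the source tree and the copied tree."""
    bad = []
    for root, _dirs, files in os.walk(src):
        for name in files:
            s = os.path.join(root, name)
            rel = os.path.relpath(s, src)
            if os.stat(s).st_mtime_ns != os.stat(os.path.join(dst, rel)).st_mtime_ns:
                bad.append(rel)
    return bad
```

Copying the same tree with `copy_function=shutil.copy` instead (data and permission bits only) gives every copied file a fresh modification time, which is exactly the symptom described above.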

-

How to write XML data to a file

Hi all

We have a requirement to write XML data to a file in a data directory on the server. We generate the XML data using the SQL below.

SELECT XMLELEMENT("LINE",
         XMLELEMENT("CELL", XMLATTRIBUTES('EBIZCZMDL_01' AS "colName"), inventory_item_id),
         XMLELEMENT("CELL", XMLATTRIBUTES('EBIZFFMT_01' AS "colName"), attribute29),
         XMLELEMENT("CELL", XMLATTRIBUTES('COMMON' AS "colName"), inventory_item_id || '' || attribute29 || 'ITM')
       ) AS "XMLDATA"
FROM apps.mtl_system_items_b

When we try to write this query's data to a file using UTL_FILE.PUT_LINE, the script gives the error

PLS-00306: wrong number or types of arguments in call to 'PUT_LINE'

If anyone can help, please...

Thanks in advance

The call to dbms_xslprocessor.clob2file is inside the cursor FOR loop over your SQL.

That is obviously the problem.

The file is overwritten on each iteration. Don't use a cursor at all.

See the example below; it should be close to your needs:

DECLARE
  l_file_name VARCHAR2(30);
  l_file_path VARCHAR2(200);
  l_xmldoc    CLOB;
BEGIN
  -- Name of an Oracle directory object (hypothetical name), not an OS path
  l_file_path := 'XML_DIR';
  l_file_name := 'TEST_XREF4.xml';

  SELECT XMLElement("xref",
           xmlattributes('http://xmlns.oracle.com/xref' AS "xmlns"),
           XMLElement("table",
             XMLElement("columns",
               XMLElement("column", xmlattributes('EBIZFFMT_01' AS "name")),
               XMLElement("column", xmlattributes('COMMON' AS "name")),
               XMLElement("column", xmlattributes('EBIZQOT_01' AS "name")),
               XMLElement("column", xmlattributes('EBIZCZMDL_01' AS "name")),
               XMLElement("column", xmlattributes('EBIZCZGOLD_01' AS "name"))
             ),
             XMLElement("rows",
               XMLAgg(
                 XMLElement("row",
                   XMLElement("cell", xmlattributes('EBIZCZMDL_01' AS "colName"), inventory_item_id),
                   XMLElement("cell", xmlattributes('EBIZFFMT_01' AS "colName"), attribute29),
                   XMLElement("cell", xmlattributes('COMMON' AS "colName"),
                              inventory_item_id || '' || attribute29 || 'ITM')
                 )
               )
             )
           )
         ).getClobVal()
    INTO l_xmldoc
    FROM apps.mtl_system_items_b
   WHERE attribute29 IS NOT NULL;

  -- Write the whole aggregated document once, outside any loop
  dbms_xslprocessor.clob2file(l_xmldoc, l_file_path, l_file_name, nls_charset_id('UTF8'));
END;
/

BTW, the directory parameter of DBMS_XSLPROCESSOR.CLOB2FILE must be an Oracle directory object, not a literal path.
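The key point of the answer is to aggregate every row into one document (XMLAgg) and write the file once, instead of writing inside a cursor loop where each iteration overwrites the previous file. The same structure can be sketched in Python with `xml.etree.ElementTree`, assuming the rows have already been fetched as `(inventory_item_id, attribute29)` tuples; this is not Oracle code, and `build_xref` and `write_once` are hypothetical names.

```python
import xml.etree.ElementTree as ET

NS = "http://xmlns.oracle.com/xref"
ET.register_namespace("", NS)  # serialize NS as the default xmlns, like the SQL


def q(tag):
    """Qualify a tag name with the xref namespace."""
    return f"{{{NS}}}{tag}"


def build_xref(rows):
    """Build ONE document holding every row, like XMLAgg in the PL/SQL example.
    `rows` is an iterable of (inventory_item_id, attribute29) tuples."""
    xref = ET.Element(q("xref"))
    table = ET.SubElement(xref, q("table"))
    columns = ET.SubElement(table, q("columns"))
    for name in ("EBIZFFMT_01", "COMMON", "EBIZQOT_01", "EBIZCZMDL_01", "EBIZCZGOLD_01"):
        ET.SubElement(columns, q("column"), name=name)
    rows_el = ET.SubElement(table, q("rows"))
    for item_id, attr29 in rows:
        if attr29 is None:  # mirrors WHERE attribute29 IS NOT NULL
            continue
        row = ET.SubElement(rows_el, q("row"))
        ET.SubElement(row, q("cell"), colName="EBIZCZMDL_01").text = str(item_id)
        ET.SubElement(row, q("cell"), colName="EBIZFFMT_01").text = attr29
        ET.SubElement(row, q("cell"), colName="COMMON").text = f"{item_id}{attr29}ITM"
    return ET.ElementTree(xref)


def write_once(rows, path):
    """Write the whole document in a single call, outside any per-row loop."""
    build_xref(rows).write(path, encoding="UTF-8", xml_declaration=True)
```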

-

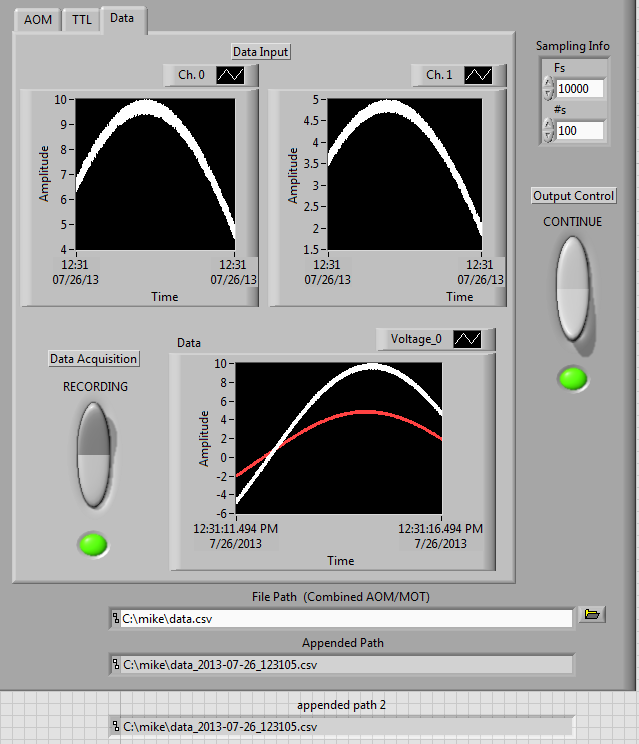

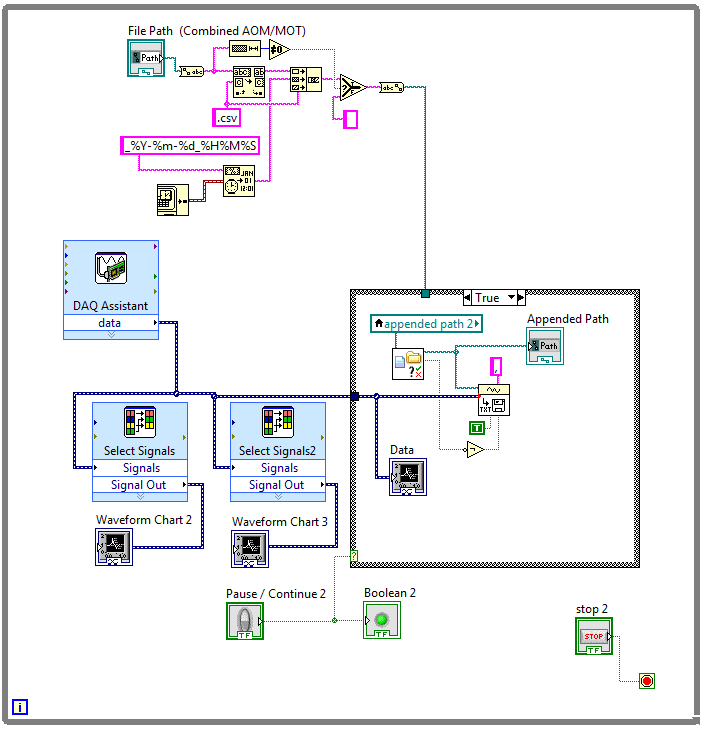

Trying to write data to a file, but getting error 200279

I have problems writing data to a file. About 10 seconds into the recording, I get error 200279. I did some research on the subject, but I am unable to correct my code. I think I need to increase the buffer size, or rather, I suppose, read the data more frequently. The way I save my file is: before starting the VI, I assign a file location and name (e.g. data.csv). When I start recording, the date and time are appended to the file name (e.g. data_07-26-13_122615.csv). If the file does not exist, a new file is created, and the data is then appended to this file on each iteration of the loop. I did it this way so I wouldn't have to worry about running out of memory, but apparently my code is wrong.

I am including a copy of the faulty section of my code. Any help would be greatly appreciated.

Thank you.

Your problem is that writing to disk is slow. It is slow enough that your DAQ buffer overflows, causing the error and loss of data. What you need to do is implement a producer/consumer architecture. This puts data acquisition and disk logging in separate loops, so the acquisition loop can run at the speed it needs to keep up with incoming samples, and the disk writing can run at whatever pace it can. You send data from the acquisition loop to the logging loop using a queue.

You should also consider changing how you write to the file. Your VI constantly opens and closes the file, which is a very slow process if you do it inside a loop.
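The producer/consumer pattern described above can be sketched outside LabVIEW as well. In this minimal Python sketch, two threads and a `queue.Queue` stand in for the two LabVIEW loops and the LabVIEW queue, random numbers stand in for DAQ reads, and all function names are hypothetical. The logging side also opens the file once, per the second suggestion.

```python
import queue
import random
import threading

STOP = object()  # sentinel telling the logging loop to finish


def acquisition_loop(q, n_blocks, block_size):
    """Producer: stands in for the DAQ read loop. It only reads and enqueues."""
    for _ in range(n_blocks):
        block = [random.uniform(-10.0, 10.0) for _ in range(block_size)]  # fake samples
        q.put(block)
    q.put(STOP)


def logging_loop(q, path, result):
    """Consumer: opens the file ONCE, writes blocks as they arrive, closes at the end."""
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        while True:
            block = q.get()
            if block is STOP:
                break
            f.write("\n".join(f"{x:.6f}" for x in block) + "\n")
            written += len(block)
    result["samples"] = written


def run(path, n_blocks=10, block_size=1000):
    """Start the logger thread, run acquisition, and return the samples written."""
    q = queue.Queue()
    result = {}
    logger = threading.Thread(target=logging_loop, args=(q, path, result))
    logger.start()
    acquisition_loop(q, n_blocks, block_size)
    logger.join()
    return result["samples"]
```

Because the acquisition side never waits on the disk, a slow write only makes the queue grow temporarily instead of overflowing the acquisition buffer.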

-

How can I write a snapshot of my measured data to a file every 5 seconds?

Hello

I am trying to take a snapshot of my stream once every 5 seconds and write it to an .LVM file. I have problems with the "Write to Measurement File" VI. The rate at which it writes data to the file seems to be dictated by the 'Samples to Read' parameter in the DAQ Assistant. I tried placing the 'Write to Measurement File' VI inside a case structure and triggering the structure with an "Elapsed Time" VI. This only results in a 5-second delay before the 'Write to Measurement File' VI starts. Once it has started, it writes 20 times per second. Is there a way to change or dictate how often the 'Write to Measurement File' VI executes?

My reasons for slowing down the write rate are: (1) to reduce the space occupied by my data file, and (2) to reduce CPU usage and disk access.

The reason I can't increase the 'Samples to Read' value in the DAQ Assistant (to match my write requirement) is that my VI will then start to miss events and triggers.

Surely I can't be the only person who needs high-frequency data acquisition and low-frequency writing of data to disk? Yet I don't see a straightforward way to do it.

The hardware I use is an NI USB-6008 DAQ, acquiring analog voltage at 100 Hz.

Thanks in advance for your help

See you soon

Kim

Dohhh!

I hadn't set the reset on the Elapsed Time VI!

Thank you very much!

See you soon

Kim
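The fix Kim found (resetting the elapsed-time check each time it fires) can be illustrated outside LabVIEW. A minimal Python sketch, assuming a hypothetical `ElapsedTimer` helper with an injectable clock; every acquired block is consumed, but only one snapshot per interval is written. Without the reset line, every check after the first 5 seconds would return True, which matches the "20 writes per second" symptom.

```python
import time


class ElapsedTimer:
    """Hypothetical helper: reports True once `interval` has passed, then
    restarts itself (the "reset" step that was missing in the thread)."""

    def __init__(self, interval, clock=time.monotonic):
        self.interval = interval
        self.clock = clock
        self.start = clock()

    def expired(self):
        now = self.clock()
        if now - self.start >= self.interval:
            self.start = now  # reset; without this, every later check returns True
            return True
        return False


def log_snapshots(blocks, timer, write):
    """Consume every acquired block (so nothing backs up), but only hand the
    current block to `write` when the timer has expired."""
    written = 0
    for block in blocks:
        if timer.expired():
            write(block)
            written += 1
    return written
```

In real use the timer would be created as `ElapsedTimer(5.0)` with the default monotonic clock; the injectable clock exists only to make the behavior testable.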

-

Permissions for the data in a file

I have an HTML file that is generated by a third-party application.

I want to grant only permission to write data to the file; I don't want to grant permission to remove content.

(I mean the content inside the file, not the file itself.)

How can I set this up? Please advise.

As I said... I don't think what you want is possible.

If someone can write to (edit) a file, then they can also remove all of its contents.

It seems to me that you are looking for revision control software... something that lets other people make changes to a [copy of a] set of files, but gives a controlling authority the final say on when and whether changes go into the live copy. It also allows rolling back to an earlier point in time.

"Comparison of revision control software"

http://en.Wikipedia.org/wiki/Comparison_of_revision_control_software

HTH,

JW

-

How do I get the file date when uploading a file?

Using 12c, APEX 5.0 and IE11

I am creating a file upload feature for my application. I'm able to get the uploaded file into my table, but one of my requirements is to fill in a value in the table with the "file date".

Is there an easy way to get the "file date" of the file being uploaded and fill a field for all records with this date?

Tillie


That depends on your definition of 'easy'...

The HTML5 File interface can be used to access the date the file was last modified, in browsers that support it.

1. Add a hidden page item to the page containing the file browse item (P6_FILE_DATE in this example).

2. Create a dynamic action on change of the file browse item (P6_FILE):

Event: Change

Selection Type: Item(s)

Item(s): P6_FILE

True Actions

Action: Execute JavaScript Code

Fire On Page Load: No

Code:

console.log('Get file modification date:');
var fileItem = $x('P6_FILE');
if (window.File) {
  if (fileItem.files.length > 0) {
    var file = fileItem.files[0];
    if (typeof file.lastModifiedDate !== 'undefined') {
      console.log('...lastModifiedDate for file "' + file.name + '": ' + file.lastModifiedDate);
      apex.item('P6_FILE_DATE').setValue(file.lastModifiedDate);
    } else {
      console.log('...browser does not support the HTML5 File lastModifiedDate property.');
    }
  }
} else {
  console.log('...browser does not support the HTML5 File interface.');
}

If the browser supports the File interface and the lastModifiedDate property, the date the file was last modified will be placed in the P6_FILE_DATE item in the form:

Fri Jul 17 2015 10:43:44 GMT+0100 (GMT Daylight Time)

You can parse and convert this value in your data load process to obtain a TIMESTAMP or DATE value as needed.
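Parsing that string mostly means dropping the parenthesized zone name and reading the numeric UTC offset. A minimal Python sketch of the parsing logic only, assuming the value arrives in exactly the format quoted above (other browsers may produce a different string); `parse_js_date` is a hypothetical helper, and the same two steps would apply in whatever language the data load process uses.

```python
import re
from datetime import datetime


def parse_js_date(text):
    """Parse a JavaScript Date string such as
    'Fri Jul 17 2015 10:43:44 GMT+0100 (GMT Daylight Time)'
    into a timezone-aware datetime."""
    # The parenthesised zone name varies by browser and locale, so drop it.
    text = re.sub(r"\s*\([^)]*\)\s*$", "", text.strip())
    # What remains ends in 'GMT+0100', which %z reads as a +01:00 offset.
    return datetime.strptime(text, "%a %b %d %Y %H:%M:%S GMT%z")


print(parse_js_date("Fri Jul 17 2015 10:43:44 GMT+0100 (GMT Daylight Time)").isoformat())
```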

-

My SELECT statement fails with the error:

ORA-19011: Character string buffer too small

The SELECT statement looks like this:

SELECT TO_CLOB(
         XMLELEMENT("accounts",
           XMLELEMENT("count",
             XMLATTRIBUTES(
               rownum AS "recordId",
               TO_DATE('20130520','YYYYMMDD') AS "datestarted",
               123456 AS "previousBatchId",
               56789 AS "previousRecordId"
             ),
             ....
             ....
             .....
             XMLFOREST(
               SIG_ROLE AS "SignatoryRole",
               TO_CHAR(TRANSFER_DATE,'YYYY-MM-DD') AS "TransferDate",
               NVL(REASON,0) AS "reason"
             ) AS "transfer"
           )
       ))) AS CRDTRPT
FROM ANY_TABLE;

- It looks like I can select only 4000 characters using a SELECT statement (please correct me if I'm wrong).

I'd use the XMLGEN package, but the environment team says there will be no mounted drives in the future, with the arrival of EXADATA.

NO MOUNTED HARD DRIVE, NO ACCESS TO DATABASE DIRECTORIES

No UTL_FILE

I need to use SPOOL to write the XML data returned by the SELECT query to a file.

SQL (with SPOOL) is the standard in my org, but I can also work with a PL/SQL solution that loads the data into a table (SPOOL cannot be used from PL/SQL).

What I do is:

- Load the result of the above SELECT query into a table, xml_report, with a CLOB column

- Then use SELECT * FROM xml_report to SPOOL the data to a file, report.xml

I don't need the data as XMLType afterwards; a plain XML text stream is fine for me.

In addition, I need to validate the XML file against an XSD.

The problem is that the rows returned by the SELECT query are expected to be 15,000 to 20,000 bytes long.

Oracle database version: Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production

Any suggestion or solution to this problem would be appreciated.

(Sorry for the use of "BOLD"; it's just to make it more readable and highlight the important points.)

Cheers!

Rahul

It looks like I can choose only 4000 characters using the SELECT statement (please, correct me if I'm wrong)

You're not using the right method.

There is an implicit conversion from XMLType to VARCHAR2, the data type expected by the TO_CLOB function; that is where the 4000-character limitation, and the error, come from.

To serialize XMLType to CLOB, use the XMLSerialize function:

SELECT XMLSerialize(DOCUMENT
         XMLELEMENT("accounts",
           ...
         )
       )
FROM ANY_TABLE;

For the rest of the requirement, I wish you good luck trying to spool the XML correctly.

You may need to play around with the SET LONG and SET LONGCHUNKSIZE commands to make it work.

-

Source of XML data in a DB column?

Hello all,

If I want to use XML data as the data source for BI Publisher, can this data be stored in a database column,

or do I have to store the source XML files in a file system?

If it can be stored in a DB column, is there anything I need to consider regarding the data source definition and the creation of the data model?

Thank you

Regards

Andy

Can this data be stored in a database column,

or do I have to store the source XML files in a file system?

Yes,

the data can be stored

(1) in an XMLType table, or in a CLOB or BLOB column, or...

and you can then parse this column with XQuery, XMLTable, or...

(2) in a file on the OS file system,

read via BFILENAME, for example:

xmltype(bfilename('GET_XML', 'xmlfilename.xml'), nls_charset_id('AL32UTF8'))

-

Writing 2D table data to a file, but the file is empty

Hello

I tried two ways of writing all the power meter readings to a file. I can see the output in the data array indicator, but when I use "Write To Spreadsheet File.vi" or "Write to Text File", the files are empty.

Please help me.

Try removing the "STOP" VI.

I suspect it stops the main VI before the save happens.

Second point: since you save data inside a while loop, make sure you append the data to the file rather than overwriting it each time.

Wire a TRUE constant to the 'append to file?' terminal.

Kind regards

Marco
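Marco's second point (append rather than overwrite when writing inside a loop) is easy to reproduce in any language. A minimal Python sketch, with a hypothetical `log_rows` helper standing in for a write-to-file call inside a while loop: reopening in `"w"` mode truncates the file every iteration, so only the last row survives, while `"a"` mode keeps them all. Reopening on every iteration mirrors a high-level write VI and is only reasonable at low data rates.

```python
def log_rows(path, rows, append=True):
    """Write one row per loop iteration, the way a write-to-file call inside a
    while loop does. With append=False each iteration reopens the file in "w"
    mode, truncating it, so only the last row survives."""
    mode = "a" if append else "w"
    for row in rows:
        # The with-block closes the file every iteration, handing the row to
        # the operating system even if the program is stopped right afterwards.
        with open(path, mode, encoding="utf-8") as f:
            f.write("\t".join(str(v) for v in row) + "\n")
```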

-

LabVIEW saves only 2 seconds of data to the file

Sorry for this beginner question, but after a few hours of searching for an answer and trying to solve the problem, I still can't figure it out.

I use a DAQ Assistant VI, display the data on a waveform chart, and use a Write to Measurement File VI to try to save the data I collect (all inside a while loop). A .tdm file is created successfully, but only about 2 seconds of data (25k samples) are saved in the file. Also, I have set the write VI to 'Append'.

I need to save the data for the whole duration of my collection (2 to 20 min).

I'm sure the solution is brutally simple, but any help would be greatly appreciated.

Thank you!

surffl wrote:

Yes, I use the TDM importer in OpenOffice as instructed.

Do you trust OpenOffice? What do you get when you use the importer with MS Office? I don't think you have a problem with your VI, but rather with the importing program. LabVIEW certainly saves more than 1 second of data to the file. I thought the headers written after each section might have caused a file-reading problem, but if you say that setting it to write only one header still causes problems when importing the data, I think the problem comes from the importer.

-

Best way to record 50 kS/s data to a file

I'm reading data from a DAQ device at a sample rate of 50 kS/s and saving it to a file. My application must run for at least a few hours. At first, I tried a producer-consumer loop pattern with a Write to Measurement File Express VI, but it wrote the data too slowly and my queue filled up. Now I'm trying to use the Write to Spreadsheet File VI, with and without a producer-consumer pattern (see the attached VIs). Both seem to write data to the file, but neither records the number of data points I expect (both have far fewer data points).

What is the best way to write data to the file? It seems like a basic question, so if it has already been discussed in detail in another forum, or if there are examples someone could point me to, that would also be appreciated.

Thank you!

Have a look at "Stream directly to disk with TDMS in LabVIEW".
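TDMS itself is LabVIEW-specific, but the idea behind streaming to disk (keep one file open and write raw binary blocks instead of formatted text) can be sketched generically. This minimal Python sketch is not TDMS and not NI's API; `stream_blocks` and `read_samples` are hypothetical helpers. For scale: 50,000 samples/s × 8 bytes per float64 = 400,000 bytes/s, about 1.44 GB per hour per channel, with no per-sample text formatting cost.

```python
import array


def stream_blocks(path, blocks):
    """Append blocks of float64 samples to `path` as raw binary.
    The file is opened once for the whole run, not once per block."""
    total = 0
    with open(path, "ab") as f:
        for block in blocks:
            buf = array.array("d", block)  # native byte order, 8 bytes per sample
            buf.tofile(f)
            total += len(buf)
    return total


def read_samples(path):
    """Read the file back into an array of floats (same machine / byte order)."""
    data = array.array("d")
    with open(path, "rb") as f:
        data.frombytes(f.read())
    return data
```

Unlike TDMS, a raw file like this carries no metadata (sample rate, channel names, byte order), so that information would have to be stored separately.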

-

I use Windows XP as the operating system. I bought a new Huawei 3G data card. While I'm surfing the Internet, a pop-up is displayed:

«Windows - Delayed Write Failed: Windows was unable to save all the data for the file C:\Documents and Settings\new\Local Settings\Application Data\Google\Chrome\User Data\Default\Session Storage\004285.log. The data has been lost. This error may be caused by a failure of your computer hardware.»

After this, the computer freezes and I have to restart it. Please help me fix this problem.

The error basically says that Windows tried to write something to your hard drive and, for some reason, the write operation did not complete. This could indicate a bad sector on your hard disk, a damaged disk, or a problem with your hardware.

Whenever a problem involving a disk read or write appears, my first approach is to run a disk check on the hard drive. Even if this is not your problem, it is good routine maintenance. Run the disk check with the "/R" or "Repair" option. Note that the actual disk check will be scheduled for the next reboot, will run before Windows loads completely, cannot be interrupted, and can take more than a few hours depending on the size of your hard drive, the amount and type of corruption found, and other factors. It is best to run it overnight or when you won't need your computer for several hours.

'How to perform disk error checking in Windows XP'

http://support.Microsoft.com/kb/315265/en-us

HTH,

JW

Maybe you are looking for

-

Recently I bought an iPhone 6s Plus. After a month, the music player sometimes shows a blank page, and I don't know what the problem is.

-

Satellite Pro A300 PSAJ5C - power cable is broken

The power cable of my Satellite Pro A300 (PSAJ5C 00J00W) is broken. Which cable should I buy as a replacement? Do I need a specific model or just any Toshiba cable? Thanks for your help!

-

My Satellite M70 no longer works, and the Caps Lock light flashes

My problem is explained in the title. I just want to know whether there is a hardware problem or not. I use Linux and Windows XP, but the problem may be in the BIOS. Thank you, Vivien. If you need more information, please ask me :) PS: it's the Satellite M70

-

PowerEdge T310 - install a second disk as RAID 1 with Dell server administration software?

Hi all, quick question: we have a PowerEdge T310 with one disk in it. We want to install a second drive and put them in RAID 1. Questions: Can we use the Dell Server Administrator software to do this? Will this delete the volume on the current d

-

My first Java program throws an exception (HelloWorld)

Hello, I am very new to Java programming for BlackBerry, and I'm reading an e-book, BlackBerry for Beginners. OK, so far... I currently have 3 files in /src/... HelloWorldApp.java package com.beginningblackberry; import net.rim.device.api.ui.UiApp