RS-232 ASCII data analysis

I need to compare incoming RS-232 ASCII data against a table (or tables) to check its accuracy. I can generate data using the RS-232 module, but I don't know what to do with the incoming data. The commands and the incoming data vary. The data arrive in a string variable, and because the content varies with the command, I don't know how to compare it to the appropriate table.

JLW

There is an error in my formula: it relies on a variable that is not actually defined.

Use this instead:

strval(substr(${STR_3}, strind(${STR_3}, "current =") + 10, strind(${STR_3}, "(") - (strind(${STR_3}, "current =") + 10)))

The syntax is:

substr(String, start, length)

strind(String, search string) - returns the position where the search string begins; you must add an offset to move past the end of the search string

strval(string) - converts a string to a number

To break the formula down:

Find the location of the number. In this case it comes immediately after "current =", so search for that string and add 10 to move past it (the 9 characters of "current =" plus the following space).

Find the location of the next bracket by searching for "(".

Calculate the length of the number by subtracting the position where the number begins from the position of the bracket.

current = 42.0 (ampsx10)
^         ^    ^
A         B    C

A is strind(${STR_3}, "current =")
B = A + 10, i.e. strind(${STR_3}, "current =") + 10
C is strind(${STR_3}, "(")
length = C - B, i.e. C - (A + 10)

So, using this notation, the formula above is STRVAL(SUBSTR(STRING, A + 10, C - (A + 10))).
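Here is a minimal Python sketch of the same A/B/C arithmetic, for checking it outside the original tool. It uses 0-based indexing via `str.find`. Because of that, positions are counted from 0 instead of 1, but the offsets (+10 and C - B) are the same. The function name `extract_current` is hypothetical. Unlike the formula above, the sketch searches for "(" starting from B, so an earlier bracket in the line cannot be matched.

```python
def extract_current(line: str) -> float:
    """Return the number between "current =" and the next "(" as a float."""
    key = "current ="
    a = line.find(key)                 # A: where the search string starts
    if a < 0:
        raise ValueError("'current =' not found")
    b = a + 10                         # B: skip the 9 characters of "current =" plus one space
    c = line.find("(", b)              # C: the next opening bracket after the number
    if c < 0:
        raise ValueError("no '(' after the number")
    return float(line[b:c])            # slice of length C - B; float() ignores the trailing space


print(extract_current("current = 42.0 (ampsx10)"))  # 42.0
```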


Similar Questions

-

ASCII data logging / or something readable!

Hi, I was using the attached code to acquire data from a GC system. The data used to be saved as a text file, which I opened and transferred to Excel or OriginLab. For some reason, the format written to the file has changed. If I try to import it directly into Origin as ASCII, it now says the ASCII data cannot be read as columns. If I open it in something like Notepad, it just displays lines of symbols, perhaps ANSI?

I have absolutely no idea what I might have changed to cause this, or how to get back to a "readable" format. Any help would be greatly appreciated!

Thanks, Kelly

OK, convert your timestamp to DBL and use the following format string (be sure to set the format string constant to '\' Codes Display first!):

%.5e\t%.5e\n
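This Python sketch shows what that format string produces. Python's `%` operator uses the same `%e` convention, and Python string literals treat `\t` and `\n` as tab and newline, which is what '\' Codes Display achieves for the LabVIEW constant. The helper name `format_rows` is hypothetical. Each timestamp/value pair becomes one tab-separated line in scientific notation, which text importers can typically split into columns.

```python
def format_rows(rows):
    """Format (timestamp, value) pairs as %.5e<TAB>%.5e<NEWLINE>, one line per pair."""
    return "".join("%.5e\t%.5e\n" % (t, v) for t, v in rows)


print(format_rows([(1.5, 42.0)]), end="")  # 1.50000e+00<TAB>4.20000e+01
```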

-

Data analysis for peak max after sampling offsets

In the attached VI, each channel measures an acceleration peak, which appears as a global maximum on the chart. Ideally, I want both arrays to be searched for their peak (global maximum), and the time difference between the two peaks to be shown on screen after the program has finished. What I have right now, however, is a kind of real-time measurement. Any suggestions on how to go about this?

Thank you

John

Hey Johnny,

Every time the loop runs, you recalculate the difference. When the loop runs again, it overwrites the value you had before. There are many ways to solve this problem, and a lot depends on whether you need to analyze the data while you're still in the loop. If you want to check it after the loop has finished, you can wire just the difference to the edge of the while loop and enable indexing by right-clicking the tunnel. This stores the difference from every iteration of the while loop. You can also save conditionally (perhaps you only care about the difference if it falls between certain values), either by writing to a text file with Write to Text File or by building an array inside a case structure. These are just some thoughts.
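As an illustration of the "analyze after the run" option, here is a Python sketch (not LabVIEW). It finds each channel's global maximum once acquisition is over and converts the index difference to time, roughly what Array Max & Min does on each array after the loop. It assumes both channels were sampled at the same uniform interval `dt`, starting at the same instant. The function name is hypothetical.

```python
def peak_time_difference(ch1, ch2, dt):
    """Time from the global maximum of ch1 to the global maximum of ch2."""
    i1 = max(range(len(ch1)), key=ch1.__getitem__)  # index of ch1's global max (first one on ties)
    i2 = max(range(len(ch2)), key=ch2.__getitem__)  # index of ch2's global max
    return (i2 - i1) * dt


# Samples collected during the run (e.g. via an indexing tunnel), analyzed afterwards.
print(peak_time_difference([0.0, 1.0, 5.0, 2.0], [0.0, 0.0, 1.0, 2.0, 7.0, 1.0], 0.5))  # 1.0
```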

-

Importing ASCII dates with different formats

Hello

I need to import data from an ASCII CSV file.

The problem is the date format in this particular data file (.csv):

The date format for days < 10 and months from Jan to Sep is:

YYYY/_M/_D (the character '_' is a single space, not a literal underscore)

The date format for days >= 10 and months from Jan to Sep is:

_M/DD/YYYY

The date format for days >= 10 and months from Oct to Dec is:

YYYY/MM/DD

The date format for days < 10 and months from Oct to Dec is:

YYYY/MM/_D

Possible solution:

I have already created a routine that recognizes the date in the title and uses one of the 4 (.stp) filters for data processing. This works.

Next problem:

Each day is split into 2 files: one from 09:00 to 21:00 and the other from 21:00 to 09:00 the following day. Because of this, I lose data when the day changes from the 9th to the 10th of each month, and when the month changes from Sep to Oct and from Dec to Jan.

I just need to delete the troublesome blank space in front of single-digit days and months (it disappears once they become two digits). However, I do not know how to handle this in the CSV before it is imported into DIAdem (10.2).

My other idea is to recognize this file and import it twice (once with each filter). But then I would need to position the import exactly, so that there is no problem with NoValue data. (So far I have made things complicated over a simple blank-space issue.)

I hope that you have ideas...

Thanks in advance...

I'm not sure I understand, because the steps are not clear. Here's what I think you want to do:

(1) You want to load data from different files into DIAdem.

To do this, use the DataFileLoad("E:\Customer_Requests\caracasnet\log(111231).csv","caracasnet_log","Load") call.

You call DataFileLoad for each of the files.

(2) You want to concatenate the groups.

This should be no different from what you've done up to now.

(3) You want to store the data in a file (TDM).

To do this, call DataFileSave(...).

Let me know if you have any other questions...
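For the blank-space issue in the question, one option is a small text pass before the import, assuming the files can be preprocessed outside DIAdem. It rewrites each padded date as YYYY/MM/DD so that a single import filter fits every file. This Python sketch only handles the year-first layouts listed in the question (not _M/DD/YYYY). The pattern and function names are illustrative, not part of DIAdem.

```python
import re

# Year-first dates where a one-digit month or day may be padded with a space,
# e.g. "2011/ 9/ 5", "2011/10/ 9", "2011/ 9/10".
PADDED_DATE = re.compile(r"\b(\d{4})/ ?(\d{1,2})/ ?(\d{1,2})\b")


def normalize_dates(line: str) -> str:
    """Rewrite space-padded YYYY/M/D dates as zero-padded YYYY/MM/DD."""
    return PADDED_DATE.sub(
        lambda m: f"{m.group(1)}/{int(m.group(2)):02d}/{int(m.group(3)):02d}", line
    )


print(normalize_dates("2011/ 9/ 5 21:00:00;1.25"))  # 2011/09/05 21:00:00;1.25
```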

-

How to use LabVIEW to send data-analysis commands to Excel

Hello, here's my situation:

I have a LabVIEW VI that reads data from various sensors and uses the Report Generation Toolkit to send all the data values to Excel. Each run is ~8-10 hours long and produces a lot of data, so it isn't worth storing it in an array in my VI.

However, in my Excel report I would like to include some statistics, such as the initial value and the max and min values of all the data from these points. What kind of command should my VI send to get Excel to do this? Or would it be easier to do this within LabVIEW instead?

Thanks to anyone who can give some advice!

Hello, Kalyie!

Puneet.Kapoor is correct: you can create an Excel macro and call it from LabVIEW. There is an example of this in LabVIEW under Help > Find Examples; if you search for "Excel", you will find "Excel Macro Example". This approach is most useful for automating your functions, or if you want to perform more complex operations on your data in Excel (min and max are relatively simple, however).

As was also mentioned, it may be easier to work with the data once it is in Excel, using the =MAX() and =MIN() functions. The choice is yours!
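If the statistics are computed in LabVIEW instead, there is no need to keep 8-10 hours of samples in an array. A running minimum and maximum can be updated one sample at a time. Here is a minimal Python sketch of that idea. The class name is hypothetical, and in LabVIEW the same state would typically live in shift registers.

```python
class RunningMinMax:
    """Track count, minimum and maximum of a stream in constant memory."""

    def __init__(self):
        self.count = 0
        self.minimum = float("inf")
        self.maximum = float("-inf")

    def update(self, x: float) -> None:
        # Only the current extremes are stored, never the samples themselves.
        self.count += 1
        self.minimum = min(self.minimum, x)
        self.maximum = max(self.maximum, x)


stats = RunningMinMax()
for sample in [3.0, -1.0, 7.5, 2.0]:
    stats.update(sample)
print(stats.count, stats.minimum, stats.maximum)  # 4 -1.0 7.5
```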

-

Parsing Foursquare JSON data

Hello world

I'm trying to parse JSON data that I got from the Foursquare venue search web service.

All I really need is the city and the country from each result of the venue search. I'm not trying to bind the data to a list.

Any thoughts? Thank you!!

The code below does not work:

JsonDataAccess jda;
QVariant jsonQVariant = jda.load(networkReply).value<QVariant>();
QVariantList venueList = jsonQVariant.toMap()["venues"].toList();
foreach (QVariant v, venueList) {
    QVariantMap venueMap = v.toMap();
    showToast(venueNameMap.value("city").toString());
}

Below is the sample Foursquare JSON result that I need to parse:

{"meta":{"code":200}, "response": {"venues": [{"id":"4cd8a50c15d8b60c4e31230e", "name":"HSBC Bank", "contact":{}, "location": {"lat":4.5850378697477656, "lng":92.70275974273682, "distance":77, "city":"Kuala Lumpur", "state":"Selangor", "country":"Malaysia", "cc":"MY"}, "canonicalUrl":"https:\/\/foursquare.com\/v\/kompleks-asia-mega-mas\/4cd8a50c15d8b60c4e326a0e", "categories": [{"id":"4bf58dd8d48988d130941735", "name":"Building", "pluralName":"Buildings", "shortName":"Building", "icon":{"prefix":"https:\/\/foursquare.com\/img\/categories_v2\/building\/default_","suffix":".png"}, "primary":true}], "verified":false, "restricted":true, "stats":{"checkinsCount":2872, "usersCount":858, "tipCount":6}, "specials":{"count":0,"items":[]}, "hereNow":{"count":1,"groups":[{"type":"others","name":"Other people here","count":1,"items":[]}]}, "referralId":"v-1372731815"}, .......

Try something like this:

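QString jsonString = QString("{\"response\": {\"venues\": [{},{}]}}");
bb::data::JsonDataAccess jda;
QVariant list = jda.loadFromBuffer(jsonString);
QVariantMap result = list.value<QVariantMap>();
QVariantMap response = result.value("response").toMap();
QVariantList venueList = response.value("venues").toList();
foreach (QVariant venue, venueList) {
    QVariantMap venueMap = venue.toMap();
    // "city" and "country" are nested under "location" in each venue
    QVariantMap location = venueMap.value("location").toMap();
    QString city = location.value("city").toString();
    QString country = location.value("country").toString();
}

-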
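The key point in the sample is where the fields sit. "venues" is under "response", and "city" and "country" are under each venue's "location" object. The same navigation with Python's standard `json` module, as a language-neutral sketch, is shown below. The sample string is trimmed from the response above, and the function name is hypothetical.

```python
import json


def cities_and_countries(payload: str):
    """Return (city, country) for each venue, following response -> venues -> location."""
    data = json.loads(payload)
    venues = data.get("response", {}).get("venues", [])
    result = []
    for venue in venues:
        location = venue.get("location", {})  # city/country are nested here, not on the venue
        result.append((location.get("city"), location.get("country")))
    return result


sample = ('{"meta":{"code":200},"response":{"venues":[{"name":"HSBC Bank",'
          '"location":{"city":"Kuala Lumpur","country":"Malaysia"}}]}}')
print(cities_and_countries(sample))  # [('Kuala Lumpur', 'Malaysia')]
```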

Parsing CLOB data: help with a regular expression

Hello

I need to parse a CLOB and extract a value from it.

with temp as
(select '-dn=cn=9245fe4a-d402-451c-b9ed-9c1a04247482,ou=people,dc=idauth-userstore,dc=standardlife,dc=com' as val from dual)
select * from temp;

The parsing should keep only the letters that come after "dn=cn=" and before the next comma, so the output I need is feadcbedca.

How can I achieve this using regular expressions?

Also, is there a useful site for beginners like me to learn Oracle regular expressions?

If so, please share the link.

Thanks in advance

with temp as (
  select '- dn=cn=9245fe4a-d402-451c-b9ed-9c1a04247482,ou=people,dc=idauth-userstore,dc=standardlife,dc=com' as val from dual
)
select regexp_replace(ltrim(regexp_substr(val, 'dn=cn=[^,]+'), 'dn=cn='), '[^[:alpha:]]') val
from temp;

VAL
----------
feadcbedca
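The same extraction as a Python sketch, for comparison. It uses a capture group rather than LTRIM. LTRIM's second argument is a set of characters, not a prefix, so `ltrim(..., 'dn=cn=')` would also strip a leading c, d, n or = from the ID itself. That is harmless here because the ID starts with a digit. On Oracle 11g and later, REGEXP_SUBSTR's subexpression argument can return a capture group directly. The function name is hypothetical, and `[A-Za-z]` assumes ASCII input.

```python
import re


def letters_after_dn_cn(val: str) -> str:
    """Text between "dn=cn=" and the next comma, with every non-letter removed."""
    m = re.search(r"dn=cn=([^,]+)", val)  # group 1: everything up to the comma
    if m is None:
        return ""
    return re.sub(r"[^A-Za-z]", "", m.group(1))  # ASCII letters only, like [^[:alpha:]] on ASCII input


print(letters_after_dn_cn("- dn=cn=9245fe4a-d402-451c-b9ed-9c1a04247482,ou=people"))  # feadcbedca
```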

-

Pipelined functions for CSV data parsing?

Hi all

I currently have a PL/SQL procedure that loads and parses a CSV file into a database table within APEX.

The uploaded CSV files are quite large (nearly 1 million rows or more), and there is a significant wait for the procedure to finish. I tried both the APEX 4.2 Data Load Wizard, which was very slow, and the APEX plugin excel2collection, which timed out/never finished.

I have heard about pipelined functions and how they can offer big time savings for insert statements where the rows have no interconnections/dependencies on each other.

My question is: would pipelining the data give me a gain in my insert times, and if so, could someone help me implement it? The current procedure is listed below, minus validation code etc. for readability. The CSV is first uploaded to a table as a BLOB before being parsed by the procedure.

-- Chunk up the CSV file and split into a line at a time
rawChunk := dbms_lob.substr(bloContent, numChunkLength, numPosition + numExtra);
strConversion := strConversion || utl_raw.cast_to_varchar2(rawChunk);
numLineEnd := instr(strConversion, chr(10), 1); --This will return 0 if there is no chr(10) in the String
strColumns := replace(substr(strConversion, 1, numLineEnd - numTrailChar), CHR(numSpacer), ',');
strLine := substr(strConversion, 1, numLineEnd);
strLine := substr(strLine, 1, length(strLine) - numTrailChar);
-- Break each line into columns using the delimiter
arrData := wwv_flow_utilities.string_to_table(strLine, '|');
FOR i in 1..arrData.count LOOP
  --Now we concatenate the Column Values with a Comma
  strValues := strValues || arrData(i) || ',';
END LOOP;
--Remove the trailing comma
strValues := rtrim(strValues, ',');
-- Insert the values into target table, one row at a time
BEGIN
  EXECUTE IMMEDIATE 'INSERT INTO ' || strTableName || ' (' || strColumns || ') VALUES (' || strValues || ')';
END;
numRow := numRow + 1; --Keeps track of what row is being converted
-- We set/reset the values for the next LOOP cycle
strLine := NULL;
strConversion := null;
strValues := NULL;
numPosition := numPosition + numLineEnd;
numExtra := 0;
numLineEnd := 0;
END IF;
END LOOP;

Apex-user wrote:

Hi Chris,

I'm trying to extend your code to handle more than the two current columns, but I'm having trouble with the format here...

while dbms_lob.getlength(l_clob) > l_off and l_off > 0 loop
  l_off_new := instr(l_clob, c_sep, l_off, c_numsep);
  pipe row (csv_split_type(
      substr(l_clob, l_off, instr(l_clob, c_sep, l_off) - l_off)
    , substr(l_clob, instr(l_clob, c_sep, l_off) + 1, l_off_new - instr(l_clob, c_sep, l_off) - 1)
  ));
  l_off := l_off_new + 2; -- to skip c_sep and the line separator chr(10)

How can I add more columns to this code? I'm getting mixed up with all the substr and instr segments.

I've rewritten it (12 s for 50,000 lines with 4 columns, ~7 MB; 2.2 s for 10,000 lines):

create or replace function get_csv_split_cr (p_blob blob)
return csv_table_split_type
pipelined
as
  c_sep constant varchar2(2) := ';';
  c_line_end constant varchar2(1) := chr(10);
  l_row varchar2(32767);
  l_len_clob number;
  l_off number := 1;
  l_clob clob;
  -- below is used only for the call of dbms_lob.converttoclob
  l_src_off integer := 1;
  l_dst_off integer := 1;
  l_ctx integer := dbms_lob.default_lang_ctx;
  l_warn integer := dbms_lob.warn_inconvertible_char;
begin
  dbms_lob.createtemporary(l_clob, true);
  -- argument order: dest_offset comes before src_offset
  dbms_lob.converttoclob(l_clob, p_blob, dbms_lob.lobmaxsize, l_dst_off, l_src_off, dbms_lob.default_csid, l_ctx, l_warn);
  -- Attention: assumes there is at least one 'correct' csv line
  -- should perhaps find a better guard condition
  -- Assumption: the last column ends with the separator
  l_len_clob := length(l_clob);
  while l_len_clob > l_off and l_off > 0 loop
    l_row := substr(l_clob, l_off, instr(l_clob, c_line_end, l_off) - l_off);
    pipe row (csv_split_type(
      -- from the start up to the first ';'
        substr(l_row, 1, instr(l_row, c_sep) - 1)
      -- from the first ';' + 1 up to the second ';'
      , substr(l_row, instr(l_row, c_sep, 1, 1) + 1, instr(l_row, c_sep, 1, 2) - instr(l_row, c_sep, 1, 1) - 1)
      -- from the second ';' + 1 up to the third ';'
      , substr(l_row, instr(l_row, c_sep, 1, 2) + 1, instr(l_row, c_sep, 1, 3) - instr(l_row, c_sep, 1, 2) - 1)
      -- and so on
      , substr(l_row, instr(l_row, c_sep, 1, 3) + 1, instr(l_row, c_sep, 1, 4) - instr(l_row, c_sep, 1, 3) - 1)
    ));
    l_off := l_off + length(l_row) + 1; -- + 1 to skip the line separator chr(10)
  end loop;
  return;
end;

You must also change csv_split_type to match the number of columns.

Update: I had to correct it; this is a combined version of the two above.
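To add more columns, the pattern generalizes. Column k runs from just after the (k-1)-th separator to just before the k-th one, with position 0 standing in for "before the first". Below is a Python sketch of that INSTR/SUBSTR arithmetic. `instr` here is a hypothetical stand-in that mimics Oracle's INSTR(s, sep, 1, n) for a one-character separator. Under this scheme, each extra column in csv_split_type is just another value of k.

```python
def instr(s: str, sep: str, n: int) -> int:
    """1-based position of the n-th occurrence of sep in s, or 0 if there is none."""
    pos = 0
    for _ in range(n):
        idx = s.find(sep, pos)
        if idx < 0:
            return 0
        pos = idx + 1  # 1-based position of this match, also the 0-based index to resume from
    return pos


def split_fields(row: str, sep: str, ncols: int) -> list:
    """Column k = substr(row, instr(k-1) + 1, instr(k) - instr(k-1) - 1), with instr(0) = 0."""
    fields = []
    for k in range(1, ncols + 1):
        prev = instr(row, sep, k - 1)  # n = 0 returns 0, i.e. "before the first separator"
        cur = instr(row, sep, k)
        if cur == 0:
            fields.append(None)  # separator missing: Oracle's SUBSTR would give NULL
        else:
            fields.append(row[prev:cur - 1])  # empty column -> "" here, NULL in Oracle
    return fields


print(split_fields("a;bb;;ccc;", ";", 4))  # ['a', 'bb', '', 'ccc']
```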

-

How to set up a chart and store data points in a table so that I can analyze them

Hello

I am relatively inexperienced with LabVIEW. I am trying to do the following with a Tektronix TDS 2001C oscilloscope, but I have no idea how to start:

(1) Load previously saved volts/div and s/div settings on the oscilloscope

(2) Transfer everything that appears on the oscilloscope to my computer screen

(3) Save the data table so that I can analyze it

I looked at some of the example block diagrams provided with the driver I downloaded for this particular unit, but I am still very confused. Any help would be greatly appreciated.

Hello Chron0,

I'm guessing a little here, but have you downloaded the device-specific driver from ni.com/idnet? This is a good place to find instrument drivers for third-party devices, and there is one for this particular model. That said, I looked at the examples in the downloaded zip file and saw a couple of ready-made options that should cover item #2 of your project. Which one fits depends on how you want to acquire the waveform (continuously, multiple waveforms, etc.). Similarly, the Public folder contains a Configure Channel VI which I think should do what you want for item #1.

Depending on what data analysis you want to do (software, functions, etc.), there are several tools in LabVIEW that let you save the table and manipulate it later. A good place to start is to search for "File I/O" in the LabVIEW Help search bar and select the option under the "Palette" heading. There are several options there that I think might be useful, including Write To Spreadsheet File.vi.
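Once the waveform is on the PC, saving it as a tab-delimited text table makes later analysis easy. This Python sketch shows the shape of such a file. It assumes the driver returns a start time `t0`, a sample interval `dt` and an array of voltages (the usual waveform components). The function name and column headers are hypothetical; in LabVIEW, Write To Spreadsheet File.vi fills a similar role.

```python
import io


def write_waveform(t0: float, dt: float, samples, out) -> None:
    """Write a header plus one "time<TAB>value" row per sample to a text stream."""
    out.write("time_s\tvolts\n")
    for i, v in enumerate(samples):
        # Each sample's time is reconstructed from the start time and sample interval.
        out.write(f"{t0 + i * dt:.6g}\t{v:.6g}\n")


buf = io.StringIO()
write_waveform(0.0, 0.5, [1.0, 2.5], buf)
print(buf.getvalue(), end="")
```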

It would also be good to get familiar with the NI Example Finder in LabVIEW. There are examples of spreadsheets and saving data, as well as VISA, which is worth reading up on, because VISA is the interface you use to communicate with this device (fortunately, the driver you downloaded already handles it!).

Good luck going forward, and keep us posted on your project here!

-Dave C

Technical sales engineer

National Instruments

-

Problem parsing JPEG data: I can't open photos in Photoshop...

Hello.

I have pictures from the Philippines that were sent to me and that I downloaded the normal, direct way. When I try to place them in Photoshop, I get this message: "Could not complete your request because of a problem parsing the JPEG data." I do not understand this. Thank you. Danny

Do you have the pictures saved in a folder on your hard drive?

Can you open them in Paint?

-

Problem parsing JPEG data?

Why do I get the error "Could not complete your request because of a problem parsing the JPEG data" when I try to open photo files in the Photoshop Elements 7.0 Editor? I was able to open these files in Photoshop a few weeks ago, and now I get an error. This happens with a lot of my files. How can I fix it? Please help me!

I found a solution, but only for Mac users:

Step 1: Download the image to your "Downloads" folder.

Step 2: Open the "Photos" app on your Mac. (I am currently running OS X El Capitan.)

Step 3: Go to the "File" menu and scroll down to "Import".

Step 4: When the window opens, go to the "Downloads" folder where you saved your photo and click the name of your photo.

Step 5: After your photo opens, return to the "File" menu, but this time click "Export". It has an arrow pointing to the right; click "Export 1 Photo...".

Step 6: When the window opens, change the "File Type" from JPEG to TIFF.

Step 7: After you change the file type, go to the bottom right of the window and click "Export".

Step 8: Choose the location where you want to save the file, and then click "Export" again at the bottom right.

The file appears in the folder you selected. I successfully opened the files in different versions of Photoshop (2014 and later).

-

Hello

I have a few questions about how to manage and store data.

I am writing calibration software in which I need to display a chart of the probes,

then a table of probe vs. reference current, measured 10 times, then calculations such as average and sigma, then conversion and data storage.

From the data files of the previous program, I have identified the format being used.

I reproduced it as a cluster of clusters, laid out like that table. I am using it as a temporary variable during acquisition and while waiting for the user's approval.

After that, I'll have to pass the cluster to a VI that converts it to text so it can be used by another application.

My question is:

Is using a cluster a good approach, compared with a string array?

A cluster is typed, so it is much easier to avoid mistakes and put each measurement and calculated value in the right place,

but it is less convenient if the cluster size has to change.

With an array of strings, changes can be easier, but it is easy to make an index mistake and put a value in the wrong cell.

What would you use?

Best regards

Tinnitus

Attached cluster VI

I agree with Mark that the OpenG tools make writing a configuration file much easier (in fact, you can use the OpenG INI cluster VIs and write the whole table at once). But it seems you are actually asking how to store data, no? In that case, a configuration-file format seems inappropriate if you need another script to extract the plotted data from it. If you are trying to write data that the customer can extract, then the approach you suggest (without my having seen it) is not unreasonable. That said, have you considered PDM? There are plugins for reading this format in several data-analysis packages (Igor, MATLAB, Excel, etc.), and writing a file in this format is child's play. Others on this site have even developed converters to ASCII (I have not really tested them). Anyway, just a thought.
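To illustrate the trade-off in the question (typed cluster vs. string array) outside LabVIEW, here is a Python sketch of the typed approach. Named fields make it hard to put a value in the wrong cell, and the record is flattened to text only at the end, for the other application. The field names, the tab separator and the column order are assumptions, and sigma is taken as the sample standard deviation.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class ProbePoint:
    """One calibration point: named fields instead of positional string-array cells."""
    reference: float
    readings: list = field(default_factory=list)  # e.g. the 10 repeated probe readings

    @property
    def average(self) -> float:
        return mean(self.readings)

    @property
    def sigma(self) -> float:
        # Sample standard deviation (n - 1); statistics.pstdev gives the population form.
        return stdev(self.readings)

    def to_text_row(self, sep: str = "\t") -> str:
        """Flatten to one text line for the downstream application."""
        return sep.join(f"{x:g}" for x in (self.reference, self.average, self.sigma))


print(ProbePoint(10.0, [9.0, 11.0]).to_text_row())
```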

Cheers, Matt

-

How can I view monthly/annual statistics for time spent and calories burned, broken down by individual activity (outdoor run, elliptical, etc.)? Is there a third-party app that can help me collect and display this data?

Hello

It is not currently possible to review data from the built-in Activity or Workout apps on this basis. If you would like Apple to consider adding this feature, you can suggest it here:

https://www.Apple.com/feedback/watch.html

However, health and fitness data from your iPhone, Apple Watch, and other sources are recorded and aggregated in the Health app on iPhone. This data can be exported, which you may find useful for tracking cumulative progress and/or analyzing your activity in more detail.

The Activity app on iPhone also has a Share button (top right of the screen) that lets you share data, including via social media, Messages, Mail, Notes, and printing.

Third-party apps that may be useful include, for example:

QS Access

- "Access your HealthKit data in a table so you can explore it using Numbers, Excel, R, or any other CSV-compatible tool."

- https://itunes.apple.com/gb/app/qs-access/id920297614?mt=8

SpectaRun Workouts

- "View your Apple Watch workouts on your iPhone and export them so you can upload them to your favorite online running community."

- https://itunes.apple.com/gb/app/spectarun-workouts/id991723862?mt=8

Data can also be exported directly from the Health app (Health Data > All, then the Share button at the top right).

Check the descriptions and support resources of third-party apps for details of their supported import and data-analysis features.

More information:

Use the Activity app on your Apple Watch - Apple Support

-

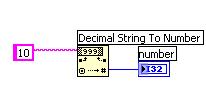

How can I convert string data to all the data types available in LabVIEW?

The XML parser gives me all the data and specifications as strings. I want to convert this data into the various data types (all of the available ones). Is there a possible solution for this?

Use Decimal String To Number.
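A common way to handle "all types" is a lookup from the type named in the specification to a converter. This Python sketch assumes the XML gives each value a type name such as "int", "float", "bool" or "str"; those names are hypothetical placeholders. In LabVIEW, the numeric cases correspond to functions like Decimal String To Number and Fract/Exp String To Number.

```python
def convert(text: str, type_name: str):
    """Convert one string field to a typed value according to a declared type name."""
    converters = {
        "int": lambda s: int(s.strip()),   # decimal integer
        "float": float,                    # also accepts exponent forms such as "1.5e3"
        "bool": lambda s: s.strip().lower() in ("true", "1"),
        "str": str,
    }
    conv = converters.get(type_name)
    if conv is None:
        raise ValueError(f"unsupported type name: {type_name!r}")
    return conv(text)


print(convert("42", "int"), convert("1.5e3", "float"), convert("TRUE", "bool"))  # 42 1500.0 True
```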

-

Digital acquisition of data streams

Hello

I have tried (unsuccessfully) to acquire digital data from a lab sensor. From the manufacturer's guide:

"When excitation voltage is applied, the Decagon DTC sensor makes a measurement. About 140 ms after excitation, three measurement values are sent to the data logger as a serial stream of ASCII characters. The serial format is 1200 baud asynchronous, with 8 data bits, no parity and one stop bit. The voltage levels are 0-3.6 V and the logic levels are TTL (active low). Power must be removed and reapplied to request a new set of values. The ASCII data stream contains three numbers separated by spaces. The first number is the water depth in mm. The second number is the temperature in degrees Celsius, with a resolution of 0.1 °C. The third number is the electrical conductivity in dS/m, with a resolution of 1 dS/m. A carriage return follows the three numbers, then the character "t", which indicates that it is a DTC sensor, then a control character, and finally a carriage return and line feed."

My attached VI is a little dubious: it probably looks like something a person used to analog signals might create. However, I was hoping it would be enough of a starting point for a more experienced LabVIEW user to work with.

Thank you very much

Lacksagoo

Does the guide really treat an NI data acquisition card as the same thing as a data logger? I've never thought of the two as being the same. When I think of a data logger, I think of a stand-alone instrument.

Looking more closely at your original post, the voltage levels are not standard. You may need a level shifter, or a USB-RS232 adapter that works at TTL levels.
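Configuring the port as the guide describes (1200 baud, 8 data bits, no parity, one stop bit) and handling the TTL levels is only part of the job. Each reading still has to be split into its three values. Here is a Python sketch of that parsing step. It assumes the bytes up to the first carriage return hold the three space-separated numbers. The sample frame in the example is made up, and the trailing identifier, control character and CR/LF are simply ignored.

```python
def parse_sensor_frame(frame: bytes):
    """Return (depth_mm, temp_c, ec_ds_m) from one received frame."""
    text = frame.decode("ascii", errors="replace")
    first_line = text.split("\r", 1)[0]  # the three numbers come before the first CR
    fields = first_line.split()
    if len(fields) != 3:
        raise ValueError(f"expected 3 values, got {fields!r}")
    depth_mm, temp_c, ec_ds_m = (float(f) for f in fields)
    return depth_mm, temp_c, ec_ds_m


print(parse_sensor_frame(b"12 21.5 3\rt\x05\r\n"))  # (12.0, 21.5, 3.0)
```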

Maybe you are looking for

-

Hi, I can see there are a large number of threads on here about what could all boil down to the same problem, but none of them seems to offer a clear explanation, or better yet a resolution. I have an iMac 2011 with 3 TB Drive merger and all the bells an

-

I have an HP Compaq Presario CQ60 and I'm locked out; my number is 53428307, please help

-

Problems installing System Center Data Protection Manager 2010 in Server 2008 r2 DataCenter

I am trying to install DPM 2010. I have installed all 4 of the installation packages, and they are all in the same folder, according to the installation instructions on the Microsoft TechNet site for DPM 2010. I clicked on the 1st package to install it and it unpa

-

EOS 6D: zoom in remote Live View mode sharper?

Hello, I have a Canon EF 70-300mm zoom lens on my EOS 6D, connected via EOS Utility Remote to my MacBook Pro running MacOS 10.9.1. Everything works very well, and I'm really impressed by the level of detail that I can achieve by zooming in on the

-

What is the best pen for my new S2340T? I love the monitor! Thank you, Howard