Size discrepancy for an imported table

Version: 11.2

OS: RHEL 5.6

I imported a large table (450 columns), and I'm a little confused about its size.

Import: Release 11.2.0.1.0 - Production on Wed Aug 1 14:08:42 2012

Copyright (c) 1982, 2009, Oracle and/or its affiliates. All rights reserved.

;;;

Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

Master table "SYS"."SYS_IMPORT_FULL_01" successfully loaded/unloaded

Starting "SYS"."SYS_IMPORT_FULL_01": userid="/******** AS SYSDBA" DIRECTORY=dpump2 DUMPFILE=sku_dtl_%U.dmp LOGFILE=impdp_TST.log REMAP_SCHEMA=WMTRX:testusr REMAP_TABLESPACE=WMTRX_TS:TESTUSR_DATA1 PARALLEL=4

Processing object type TABLE_EXPORT/TABLE/TABLE

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

. . imported "TESTUSR"."SKU_DTL" 7.311 GB 9502189 rows <-------------------------------------------

Processing object type TABLE_EXPORT/TABLE/GRANT/OWNER_GRANT/OBJECT_GRANT

Processing object type TABLE_EXPORT/TABLE/INDEX/INDEX

Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT

.

SQL> select sum(bytes/1024/1024/1024) from dba_segments where owner = 'TESTUSR' AND SEGMENT_NAME = 'SKU_DTL' AND SEGMENT_TYPE = 'TABLE';

SUM(BYTES/1024/1024/1024)

-------------------------

2.28027344

-- Verifying the row count shown in the import log

SQL> SELECT COUNT(*) FROM TESTUSR.SKU_DTL;

COUNT(*)

----------

9502189

Info on the indexes created for this table (in case it is of any use):

The combined size of the indexes on this table is about 12 GB (I hope the query below is correct):

SQL> select sum (bytes/1024/1024) from dba_segments

where segment_name in

(

select index_name from dba_indexes

where owner = 'TESTUSR' and table_name = 'SKU_DTL'

) and segment_type = 'INDEX';

SUM(BYTES/1024/1024)

--------------------

12670.75

The size reported in the import log is the amount of space the data took in the dump file. This does not necessarily mean that is how much space the data will take up once it is imported.

One reason for this is whether or not the target tablespace is compressed. If the target tablespace is compressed, then once the import is complete, the table will be much smaller than what was written to the dump file.
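To put the figures from this thread side by side, here is a small sketch (plain arithmetic, nothing Oracle-specific) that converts them to the same unit and computes how many times larger the import-log figure is than the table segment. It uses 1024-based units, as the dba_segments queries above do. The ratio only quantifies the discrepancy; by itself it does not prove that compression is the cause.

```python
# Figures quoted in the thread.
DUMP_LOG_GB = 7.311            # impdp: imported "TESTUSR"."SKU_DTL" 7.311 GB
TABLE_SEGMENT_GB = 2.28027344  # SUM(bytes/1024/1024/1024) for the TABLE segment
INDEX_SEGMENTS_MB = 12670.75   # SUM(bytes/1024/1024) for the table's INDEX segments


def mb_to_gb(mb: float) -> float:
    """Convert megabytes to gigabytes with a factor of 1024, like the queries above."""
    return mb / 1024


def shrink_factor(dump_gb: float, segment_gb: float) -> float:
    """How many times larger the dump-file figure is than the table segment."""
    return dump_gb / segment_gb


if __name__ == "__main__":
    print(f"table segment: {TABLE_SEGMENT_GB:.2f} GB")
    print(f"indexes:       {mb_to_gb(INDEX_SEGMENTS_MB):.2f} GB")
    print(f"dump / segment: {shrink_factor(DUMP_LOG_GB, TABLE_SEGMENT_GB):.1f}x")
```

With these inputs the table segment is roughly a third of the size reported in the dump file, while the indexes alone come to about 12.4 GB.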

I hope this helps.

Dean

Tags: Database

Similar Questions

-

Advanced CD import functionality has disappeared

The advanced CD import functionality is gone. Without it, I can't join several tracks on a CD into one MP3. How can I get it back? I use iTunes 12.3.3.17 on a PC running Windows 7 Home Premium.

I guess you are referring to the Join CD Tracks functionality. It's still there, as long as:

- the tracks are sorted by track number

- you select consecutive tracks from the CD

- Join CD Tracks is available in the Options drop-down menu.

-

I use Photos to make a calendar, and when I import two Mac calendars, they don't play well together. For example, if I add the national holiday calendar to a personal calendar already selected for import, the national holidays replace a personal date whenever the two entries conflict. Several items are allowed to show on a given date (I can add an item manually, and if I have two items in one personal calendar, they both show up fine). It doesn't seem to matter whether I import them one after the other or at the same time; the holidays always seem to take precedence over personal items. I am proud of my country, but I want to avoid adding those personal items back in manually (and avoid having to check everything so closely).

Any suggestions? Thank you

John

(running Yosemite on a mini 2015)

There is no way to add two separate calendars and have them share a date. Tell Apple which missing features you want restored or which new features you want added in Photos via https://www.apple.com/feedback/photos.html.

You could create a new calendar containing both your personal anniversaries and the holidays. I think that would work, except for holidays whose date changes from year to year, Memorial Day for one.

-

Where can I find the chassis file to import into MAX, so I can draw up the circuit diagram before buying?

Chassis: NI SMU-1078

(an .ini file to import into MAX)

See attachment

THX

Hi again Koen,

Unfortunately, you can't simulate a complete PXI system, so there is no way to simulate a PXI controller unit. What you can do is set up simulated versions of the cards you want to use.

Regards,

Lars

-

What is the best size for the gigantic virtual memory file pagefile.sys?

In Windows 7, pagefile.sys is a gigantic 8 GB file. I want to free up space on my SSD for faster backups, but I don't want to hurt performance, which comes first.

I have 8 GB of DDR3 memory. What is the recommended size for the pagefile.sys for optimal performance?

On Tuesday, April 6, 2010 20:44:59 +0000, EcoWhale.com wrote:

> I wonder what would be the best size to choose for performance, as I have 8 GB of physical memory (assuming an SSD with a lot of space)?

I recommend leaving it alone and letting Windows decide for itself, rather than making any custom setting. It works very well.

Ken Blake, Microsoft MVP (Windows desktop experience) since 2003

-

Font size for the page numbers in the index

I'm using FM 10 to create an index for a book. I can set the font size for the text down to 9 points, but the page numbers are stuck at 12 points. I can set them to 9 points individually, but they revert to 12 points when I save the book. What should I do to get the page numbers to stay at 9 points? The same thing happens if I use the Character or Paragraph Designer.

Earle Fox

In the ... IX.fm file, what are the default font attributes of the IndexIX paragraph tag?

Is there any chance they are being overridden by a character format, or by a simple substitution of the <$pagenum> building block on the IX reference page?

-

In Dreamweaver, there are 3 predefined window sizes (see photo). How can I permanently change the smartphone size?

Siegfried Bolz wrote:

Adding new display sizes is not the problem; I want to change the default values behind the shortcut icons shown in my screenshot.

No problem here on my desktop version of CS6. See these photos of my test results:

I don't use CC, so I don't know why it only lets you change the window size. In your picture, you can see the arrows you need to click to set the window to the right size, AFTER you have entered a new size. That's how I did it in my version of CS6.

Good luck.

-

How do I find the size of partitioned tables?

SELECT segment_name, SUM(bytes)/(1024*1024) "Size in MB" FROM dba_segments

WHERE owner = 'OWNER_NAME' AND segment_type LIKE '%PARTITION%'

GROUP BY segment_name;

-

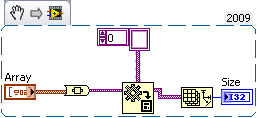

Brick wall: clusters and the size of an array from a reference

Good morning forums,

I hit a brick wall with my application and I hope someone can help!

I have an array of clusters of indeterminate size (the cluster is generic, and the data it contains is irrelevant).

The inputs to the VI are a reference to the array and a Variant that contains the data.

My problem is that I need to determine the size of the array from these two inputs, and I can't find a way to do it!

If the array contained a standard data type, I could use the ClassName property to determine the data type, and the size of the array returned by the "Selection Size" property to determine the number of dimensions. With this information, I can cast the Variant to the correct data type and use it to determine the size of the array. If the data is a cluster, however, ClassName simply returns 'Cluster', so I can't determine the size and can't convert the Variant.

I'm not worried about casting the data (once I've determined the size, that bit is child's play); I just need to determine the size (and preferably the number of dimensions) of the array. Is this possible?

Cheers for any help

J

Use 'Variant To Data' to convert the Variant to an array of Variants, and take the size of that.

Ed.

Oops, I missed the requirement for a variable number of dimensions. In that case, you want OpenG's "Format Picture", as blawson mentioned.

-

Best app for making custom templates for versatile tables?

I'm new to Creative Cloud and wonder what the best app is for making a custom template to view and edit tables, such as budgets and project phases, for my new design clients. Most of the templates I find online are dull spreadsheets.

I want to:

- create my own templates with my own brand image

- Edit and adjust the information in the tables for the Phases and budget proposals.

- import data from spreadsheets or directly enter custom data

- export to PDF or JPG for quick sharing

Is it better to make spreadsheets and import them into my templates? If so, which Adobe software is most compatible with this? I take courses at Lynda.com and would like to know where to focus my learning hours.

I appreciate any help!

Thank you

Gabe

If you want to make a spreadsheet, you need spreadsheet software such as MS Excel. Adobe does not make spreadsheet software.

You could google for other choices if you want something free.

-

How to take a partial dump of only the master tables using EXP/IMP in Oracle

Hi all

select * from v$version;

Oracle Database 10g Enterprise Edition Release 10.2.0.1.0 - Prod

PL/SQL Release 10.2.0.1.0 - Production

CORE 10.2.0.1.0 Production

TNS for 32-bit Windows: Version 10.2.0.1.0 - Production

NLSRTL Version 10.2.0.1.0 - Production

I have about 500 huge master tables in my pre-production database. I also have a test environment with the same structure, which already holds older copies of the production master tables. I take a dump file from the pre-production environment with last week's data. The old data in the master tables is not needed, because it is already available in my test environment. I also don't need all the pre-production tables: only the master tables, and only last week's data.

How can I take partial data from the master tables in the pre-production database, and how do I import only the new records into the test database?

I use the EXP and IMP commands, but I don't see an option to take partial data. Please advise.

Hello

For the first part (extracting just the master tables), use Data Pump with a query that selects only those tables; see the example below (you're on v10, so this is possible):

Oracle DBA Blog 2.0: expdp dynamic list of tables

However, you need to be able to get the list of master tables with a single SELECT statement. Is that possible?

For the second part: are you able to write a query against each master table that shows you the changed rows? If you can't write such a query, you won't be able to use Data Pump to extract only the changed rows.
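As a rough illustration of that approach, the sketch below builds the text of a Data Pump (expdp) parameter file that exports only a chosen list of tables and restricts each one with a QUERY clause. DIRECTORY, DUMPFILE, TABLES and QUERY are real expdp parameters, but the schema, the table names, the LAST_UPDATED column and the DPUMP_DIR directory object are hypothetical placeholders. The filter only works if each master table really has a column that identifies last week's rows, which is exactly the question above. This is Data Pump, not the original EXP utility.

```python
from datetime import date, timedelta


def build_parfile(schema: str, tables: list[str], since: date,
                  change_column: str = "LAST_UPDATED",  # hypothetical column name
                  directory: str = "DPUMP_DIR",          # hypothetical directory object
                  dumpfile: str = "masters_%U.dmp") -> str:
    """Return expdp parameter-file text exporting only rows changed since `since`."""
    if not tables:
        raise ValueError("at least one table is required")
    qualified = [f"{schema}.{t}" for t in tables]
    lines = [
        f"DIRECTORY={directory}",
        f"DUMPFILE={dumpfile}",
        "TABLES=" + ",".join(qualified),
    ]
    # One QUERY line per table; inside a parfile, the quotes need no shell escaping.
    clause = f"WHERE {change_column} >= DATE '{since.strftime('%Y-%m-%d')}'"
    for name in qualified:
        lines.append(f'QUERY={name}:"{clause}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    last_week = date.today() - timedelta(days=7)
    print(build_parfile("PREPROD", ["CUSTOMER_MASTER", "ITEM_MASTER"], last_week))
```

The generated file would then be passed to expdp with PARFILE=; on the import side, the rows would still have to be merged into the existing test tables.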

Normally I would just extract all the master tables completely and refresh everything...

See you soon,

Rich

-

What is the advice for gathering statistics on huge tables?

We have a staging database in which some tables are huge, hundreds of GB in size. The automatic statistics tasks run, but they sometimes miss their deadlines.

We would like to know the best practices or tips.

Thank you.

The efficiency of statistics gathering can be improved by using:

- parallelism

- incremental statistics

Using parallelism

Parallelism can be used in several ways for statistics gathering:

- intra-object parallelism

- inter-object parallelism

- intra- and inter-object parallelism combined

Intra-object parallelism

The DBMS_STATS package has a DEGREE parameter. This parameter controls intra-object parallelism, that is, the number of parallel processes used to gather statistics. By default, this parameter is equal to 1. You can change it with the DBMS_STATS.SET_PARAM procedure. Instead of choosing a number yourself, you can let Oracle determine the optimal number of parallel processes by setting DEGREE to DBMS_STATS.AUTO_DEGREE.

Inter-object parallelism

If you are on Oracle Database 11.2.0.2 or later, you can set the CONCURRENT preference, which controls whether statistics are gathered concurrently. When CONCURRENT is set to TRUE, Oracle uses the Scheduler and Advanced Queuing to run several statistics-gathering jobs at the same time. The number of concurrent jobs is controlled by the JOB_QUEUE_PROCESSES parameter. This parameter should be set to twice the number of your processor cores (if you have two CPUs with 8 cores each, JOB_QUEUE_PROCESSES should be 2 (CPUs) x 8 (cores) x 2 = 32). You must set this parameter at the system level (ALTER SYSTEM SET ...).
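The sizing rule above is simple arithmetic. A minimal sketch that reproduces the worked example and prints the corresponding statement follows; it encodes only the rule of thumb stated in this answer, not an official Oracle formula, and printing the statement does not execute it.

```python
def recommended_job_queue_processes(cpus: int, cores_per_cpu: int) -> int:
    """Rule of thumb from the answer: twice the total number of processor cores."""
    if cpus < 1 or cores_per_cpu < 1:
        raise ValueError("cpus and cores_per_cpu must be positive")
    total_cores = cpus * cores_per_cpu
    return 2 * total_cores


if __name__ == "__main__":
    value = recommended_job_queue_processes(cpus=2, cores_per_cpu=8)
    # Only prints the statement; a DBA would still run it in SQL*Plus.
    print(f"ALTER SYSTEM SET JOB_QUEUE_PROCESSES = {value};")
```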

Incremental statistics

This option is best suited to partitioned tables. If the INCREMENTAL preference for a partitioned table is set to TRUE, the GRANULARITY parameter of DBMS_STATS.GATHER_TABLE_STATS is set to GLOBAL, and its ESTIMATE_PERCENT parameter is set to AUTO_SAMPLE_SIZE, Oracle will scan only the partitions that have changed.
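To show why incremental statistics pay off on huge partitioned tables, here is a toy model in Python. It is not Oracle's implementation: the Partition class and its fields are hypothetical. Each partition's statistic is cached, only partitions flagged as changed (or never gathered) are rescanned, and the global value is derived from the cached per-partition values. A row count can simply be summed like this; statistics such as the number of distinct values cannot, which is why Oracle keeps per-partition synopses instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Partition:
    name: str
    rows: list                             # stand-in for the partition's data
    changed: bool = True                   # stand-in for "DML since the last gather"
    cached_num_rows: Optional[int] = None  # per-partition statistic kept between runs


def gather_incremental(partitions: list[Partition]) -> tuple[int, list[str]]:
    """Rescan only changed or never-gathered partitions; derive the global row count."""
    scanned = []
    for p in partitions:
        if p.changed or p.cached_num_rows is None:
            p.cached_num_rows = len(p.rows)  # the "expensive" full scan
            p.changed = False
            scanned.append(p.name)
    global_num_rows = sum(p.cached_num_rows for p in partitions)
    return global_num_rows, scanned


if __name__ == "__main__":
    parts = [Partition("P_2012_07", [1, 2, 3]), Partition("P_2012_08", [4, 5])]
    print(gather_incremental(parts))  # first run scans every partition
    parts[1].rows.append(6)
    parts[1].changed = True
    print(gather_incremental(parts))  # second run rescans only P_2012_08
```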

For more information, read this document and the DBMS_STATS documentation.

-

Purge job for the internal table wwv_flow_file_objects$

Hi all

Can anyone tell me whether there is an internal APEX job that deletes the data in the wwv_flow_file_objects$ table? I see that the size of this table keeps increasing.

I'm planning to create a job manually if there isn't one.

I use Oracle Apex 4.2.

ZAPEX wrote:

Thanks for sharing the information.

I checked the instance settings. The job is enabled and set to delete files every 14 days, but when I checked wwv_flow_files, it contains files that were created in 2013.

Can you tell me what the reason could be? Is it possible that the job is broken?

It is more likely that it does not purge all types of files. It would only be broken if the retained files are of the types listed in the documentation. You need to determine what these files are, and how and why they were uploaded.

Do you have applications that upload files and don't remove them or move them to an application table? If so, modifying these applications to store and manage files in user tables rather than system tables is a better solution than blindly trying to purge everything.

-

Source Monitor displays a different size than the Program Monitor in CC2014

I have imported a clip, and the Source Monitor panel shows it at a different size than the Program Monitor. Why?

Thanks Chris

Check whether your sequence is set up for the right size. Select a video file, right-click, and choose 'New Sequence From Clip' to see if that helps. I see that your video footage is HD; did you set up the sequence earlier to match the stills? Are they perhaps larger than HD? If so, make a sequence at the video size and use "Scale to Frame Size" for the stills.

-

Finding the size of multiple tables

Hi Oracle donations:

I need to check the size of several tables via a SQL query. This is how I could do it for a single table:

SELECT bytes FROM dba_segments WHERE segment_name = 'TABLE_NAME';

But I need to do this for about 4 dozen tables.

Can someone point me in the right direction?

Thank you.

ANY 5

11.2.0.1

Dan wrote:

Hi Oracle donations:

I need to check the size of several tables via a SQL query. This is how I could do it for a single table:

SELECT bytes FROM dba_segments WHERE segment_name = 'TABLE_NAME';

Maybe you are looking for:

SELECT segment_name, SUM(bytes) FROM dba_segments WHERE owner = 'SCHEMA_NAME' GROUP BY segment_name;
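The query above can be narrowed to just the tables you care about with an IN list (DBA_SEGMENTS has no TABLE_NAME column, so it groups by SEGMENT_NAME). Because DBA_SEGMENTS only exists inside Oracle, the sketch below runs the same GROUP BY pattern against a small in-memory SQLite stand-in table with made-up rows; the owner, table names and sizes are hypothetical. It builds one bind placeholder per table name, so a list of four dozen names works the same way as a list of two, and a table stored as several partition segments comes back as a single summed row.

```python
import sqlite3


def demo_connection() -> sqlite3.Connection:
    """Hypothetical in-memory stand-in for Oracle's DBA_SEGMENTS view (made-up rows)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE dba_segments "
        "(owner TEXT, segment_name TEXT, segment_type TEXT, bytes INTEGER)"
    )
    mb = 1024 * 1024
    conn.executemany(
        "INSERT INTO dba_segments VALUES (?, ?, ?, ?)",
        [
            ("APP", "ORDERS", "TABLE PARTITION", 64 * mb),
            ("APP", "ORDERS", "TABLE PARTITION", 32 * mb),
            ("APP", "CUSTOMERS", "TABLE", 8 * mb),
            ("APP", "AUDIT_LOG", "TABLE", 512 * mb),
        ],
    )
    return conn


def table_sizes_mb(conn: sqlite3.Connection, owner: str,
                   tables: list[str]) -> dict[str, float]:
    """Sum segment bytes per segment_name for the given tables, in MB."""
    if not tables:
        return {}
    placeholders = ",".join("?" for _ in tables)  # one bind variable per table name
    sql = (
        "SELECT segment_name, SUM(bytes) / (1024.0 * 1024.0) "
        "FROM dba_segments "
        f"WHERE owner = ? AND segment_name IN ({placeholders}) "
        "GROUP BY segment_name"
    )
    return {name: size for name, size in conn.execute(sql, [owner, *tables])}


if __name__ == "__main__":
    print(table_sizes_mb(demo_connection(), "APP", ["ORDERS", "CUSTOMERS"]))
```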

-

Best way to migrate a TestStand project

Hi all. I am new to TestStand, and I have to work again on an old test bench driven by a TestStand core. To do this, I first need to copy all the data to another computer, just to see the source code and .seq file, since I can't work directly from t

-

Why is the time display set to 19 when I run the attached VI?

Why is the time display set to 19 when I run the attached VI? Thank you.

-

I use my Toshiba Satellite 2435-S255 as an Internet-connected server so I can access my music from any Wi-Fi hotspot via my mobile device. I have a third-party shutdown/power-on scheduler to put it into hibernation during the night and wake it up in the

-

BlackBerry smartphone security problem (device lock when idle)

I upgraded to a Storm after being completely satisfied with the Curve. My biggest complaint is about security. My Curve (with the password enabled) would lock after sitting idle for a while. This kept prying eyes from browsing through my files when my

-

Photoshop CC and Photoshop CC 2014 both installed

Somewhere along the line, amid the confusing versions and updates, I unintentionally ended up with Photoshop CC and Photoshop CC 2014 both installed. Since Photoshop CC is in my dock and I open it from there, I did not notice for a while. I'm as