Slow SQL execution - extracting data from the FACT table

Hi,

We have a SQL that runs slowly.

SELECT /*+ ALL_ROWS PARALLEL(F 8) */

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_GRP_ID,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_BIL_UNT_ID,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_BNF_PKG_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_UNDWR_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_CAC_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_RTMS_HLTH_COV_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_ACTUR_RSRV_CATEG_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_BNF_TYP_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_GRP_BGIN_DT,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_GRP_END_DT,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_PLN_SEL_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_SBGRP_NM,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_SBGRP_TYP_CD,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_SBGRP_TYP_DESC,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_COBRA_IND,

IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_SBGRP_POL_NBR,

IA_OASIS_GRP_CAPTR_D.GRP_POL_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_SBSCR_ALTN_ID,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_RLNSP_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_GNDR_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_BTH_DT,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_MBR_ID,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_SBSCR_SCTR_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_SBSCR_RGN_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_SBSCR_ZIP_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_BDGT_RPT_CLS_CD,

IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_WORK_DEPT_CD,

IA_OASIS_MBR_CAPTR_D.BSC_DUAL_IN_HOUSE_IND,

IA_OASIS_MBR_CAPTR_D.MEDCR_HICN_NUM,

IA_OASIS_CLM_SPEC_D.CLM_PRI_DIAG_CD,

IA_OASIS_CLM_SPEC_D.CLM_PRI_DIAG_BCKWD_MAP_IND,

IA_OASIS_CLM_SPEC_D.CLM_PRI_ICD10_DIAG_CD,

IA_OASIS_CLM_SPEC_D.ICD_CD_SET_TYP_CD,

IA_OASIS_CLM_SPEC_D.CLM_APG_CD,

IA_OASIS_CLM_SPEC_D.CLM_PTNT_DRG_CD,

IA_OASIS_CLM_SPEC_D.CLM_PROC_CD,

IA_OASIS_CLM_SPEC_D.CLM_PROC_MOD_1_CD,

IA_OASIS_CLM_SPEC_D.CLM_PROC_MOD_2_CD,

IA_OASIS_CLM_SPEC_D.CLM_POS_CD,

IA_OASIS_CLM_SPEC_D.CLM_TOS_CD,

IA_OASIS_CLM_SPEC_D.CLM_PGM_CD,

IA_OASIS_CLM_SPEC_D.CLM_CLS_CD,

IA_OASIS_CLM_SPEC_D.CLM_ADMIT_TYP_CD,

IA_OASIS_CLM_SPEC_D.CLM_BIL_PROV_PREF_STS_CD,

IA_OASIS_CLM_SPEC_D.CLM_BIL_PROV_PRTCP_STS_CD,

IA_OASIS_CLM_SPEC_D.CLM_ATTND_PROV_PRTCP_STS_CD,

IA_OASIS_CLM_SPEC_D.CLM_BIL_PROV_PLN_STS_CD,

IA_OASIS_CLM_SPEC_D.CLM_NDC,

IA_OASIS_CLM_SPEC_D.CLM_BRND_GNRC_CD,

IA_OASIS_CLM_SPEC_D.CLM_ACSS_PLS_OOP_IND,

IA_OASIS_CLM_SPEC_D.CLM_BNF_COV_CD,

IA_OASIS_CLM_SPEC_D.CLM_TYP_OF_BIL_CD,

IA_OASIS_CLM_SPEC_D.CLM_OOA_CD,

IA_OASIS_CLM_SPEC_D.CLM_SANCT_LAT_CALL_IND,

IA_OASIS_CLM_SPEC_D.CLM_SANCT_DED_PNLTY_IND,

IA_OASIS_CLM_SPEC_D.CLM_SANCT_COPAY_IND,

IA_OASIS_CLM_SPEC_D.CLM_SANCT_PCT_COPAY_IND,

IA_OASIS_CLM_SPEC_D.CLM_SANCT_FLAT_DLR_COPAY_IND,

IA_OASIS_CLM_SPEC_D.CLM_SANCT_HMO_ACCUM_COPAY_IND,

IA_OASIS_CLM_SPEC_D.CLM_BIL_ALLOW_APPL_CD,

IA_OASIS_CLM_SPEC_D.CLM_TMLY_FIL_APPL_CD,

IA_OASIS_CLM_SPEC_D.CLM_TPLNT_APPL_CD,

IA_OASIS_CLM_SPEC_D.CLM_ADJ_CD,

IA_OASIS_CLM_SPEC_D.GRP_PRVDR_TIER_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_SS_CD_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_HMO_COV_LVL_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_FUND_POOL_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_HMO_PRDCT_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_BNF_CATEG_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_ADJ_TYP_CD,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_IPA_ACSS_PLS_IND,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_FUND_ID,

IA_OASIS_CAPITN_CLM_D.CAPITN_CLM_FUND_DESC,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_GRP_ID,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_CLS_ID,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_CLS_DESC,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_PLN_ID,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_PLN_DESC,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_PRDCT_CATEG_CD,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_PRDCT_CATEG_DESC,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_PRDCT_ID,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_PRDCT_DESC,

CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_MTL_LVL_CD,

CLM_CLS_PLN_CAPTR_D.HIOS_PLN_ID,

CLM_CLS_PLN_CAPTR_D.HIX_GRP_ID,

OASIS_CLM_MBR_XREF.LGCY_SBSCR_ID,

OASIS_CLM_MBR_XREF.LGCY_MBR_SFX_ID,

OASIS_CLM_MBR_XREF.FACETS_SBSCR_ID,

OASIS_CLM_MBR_XREF.FACETS_MBR_SFX_ID,

OASIS_CLM_MBR_XREF.LGCY_CUST_ID,

OASIS_CLM_MBR_XREF.LGCY_GRP_ID,

OASIS_CLM_MBR_XREF.LGCY_BIL_UNT_ID,

OASIS_CLM_MBR_XREF.FACETS_PRNT_GRP_ID,

OASIS_CLM_MBR_XREF.FACETS_GRP_ID,

OASIS_CLM_MBR_XREF.FACETS_CLS_ID,

OASIS_CLM_MBR_XREF.FACETS_CONVER_MBR_EFF_DT,

IA_OASIS_TOT_PROV_CUR_D.PROV_ID AS BEN_BILLING_PROV,

IA_OASIS_TOT_PROV_CUR_D.PROV_ID AS BEN_ATTENDING_PROV,

IA_OASIS_TOT_PROV_CUR_D.PROV_ID AS BEN_PCP_NUMBER,

IA_OASIS_TOT_PROV_CUR_D.PROV_ID AS BEN_IPA_NUMBER,

EDW.CLM_CAPITN_F.CLM_CAPITN_FRST_SVC_DT_SK, -- CAL_DT dimension is associated and has a CAL_DT_SK column

EDW.CLM_CAPITN_F.CLM_CAPITN_LST_PRCS_DT_SK, -- CAL_DT dimension is associated and has a CAL_DT_SK column

EDW.CLM_CAPITN_F.CLM_CAPITN_FRST_LOC_DT_SK, -- CAL_DT dimension is associated and has a CAL_DT_SK column

EDW.CLM_CAPITN_F.CLM_CAPITN_CHK_DT_SK, -- CAL_DT dimension is associated and has a CAL_DT_SK column

EDW.CLM_CAPITN_F.CLM_CAPITN_ADMIT_DT_SK, -- CAL_DT dimension is associated and has a CAL_DT_SK column

EDW.CLM_CAPITN_F.CLM_CAPITN_ICN,

EDW.CLM_CAPITN_F.CLM_CAPITN_LN_NBR,

EDW.CLM_CAPITN_F.CLM_CAPITN_PD_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_BIL_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_ALLOW_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_ALLOW_DY_NBR,

EDW.CLM_CAPITN_F.CLM_CAPITN_UNT_OF_SVC_QTY,

EDW.CLM_CAPITN_F.CLM_CAPITN_COB_SAV_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_COINS_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_RSN_CHRG_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_WTHLD_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_DED_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_SANCT_LAT_CALL_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_SANCT_DED_PNLTY_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_SANCT_COPAY_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_SANCT_PCT_COPAY_AMT,

EDW.CLM_CAPITN_F.SANCT_FLAT_DLR_COPAY_AMT,

EDW.CLM_CAPITN_F.SANCT_HMO_ACCUM_COPAY_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_TOT_NEGOT_RT_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_LN_NEGOT_RT_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_SEC_ALLOW_AMT,

EDW.CLM_CAPITN_F.CLM_CAPITN_GATWY_ID,

EDW.CLM_CAPITN_F.CLM_CAPITN_PROV_AGR_ID,

EDW.CLM_CAPITN_F.CLM_CAPITN_F_SNPSHOT_MO_SK

FROM EDW.CLM_CAPITN_F PARTITION (JAN2012),

EDW.IA_OASIS_GRP_CAPTR_D IA_OASIS_GRP_CAPTR_D,

EDW.IA_OASIS_MBR_CAPTR_D IA_OASIS_MBR_CAPTR_D,

EDW.IA_OASIS_CLM_SPEC_D IA_OASIS_CLM_SPEC_D,

EDW.IA_OASIS_CAPITN_CLM_D IA_OASIS_CAPITN_CLM_D,

EDW.CLM_CLS_PLN_CAPTR_D CLM_CLS_PLN_CAPTR_D,

EDW.OASIS_CLM_MBR_XREF OASIS_CLM_MBR_XREF,

EDW.IA_OASIS_TOT_PROV_CUR_D IA_OASIS_TOT_PROV_CUR_D

WHERE

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_GRP_CAPTR_EDW_SK = IA_OASIS_GRP_CAPTR_D.GRP_CAPTR_EDW_SK (+) and

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_MBR_CAPTR_EDW_SK = IA_OASIS_MBR_CAPTR_D.MBR_CAPTR_EDW_SK and

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_CLM_SPEC_EDW_SK = IA_OASIS_CLM_SPEC_D.CLM_SPEC_EDW_SK and

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_CLM_EDW_SK = IA_OASIS_CAPITN_CLM_D.CLM_EDW_SK and

EDW.CLM_CAPITN_F.CLM_CLS_PLN_CAPTR_EDW_SK = CLM_CLS_PLN_CAPTR_D.CLM_CLS_PLN_CAPTR_EDW_SK and

EDW.CLM_CAPITN_F.MBR_XREF_SK = OASIS_CLM_MBR_XREF.MBR_XREF_SK (+) and

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_BIL_PROV_EDW_SK = IA_OASIS_TOT_PROV_CUR_D.PROV_EDW_SK and

EDW.CLM_CAPITN_F.CLM_CAPITN_ATTND_PROV_EDW_SK = IA_OASIS_TOT_PROV_CUR_D.PROV_EDW_SK and

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_PCP_EDW_SK = IA_OASIS_TOT_PROV_CUR_D.PROV_EDW_SK and

EDW.CLM_CAPITN_F.CLM_CAPITN_IA_IPA_PROV_EDW_SK = IA_OASIS_TOT_PROV_CUR_D.PROV_EDW_SK (+);

--------------------------------------------------------------------------------

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
PL/SQL Release 11.2.0.4.0 - Production
CORE 11.2.0.4.0 Production
TNS for Solaris: Version 11.2.0.4.0 - Production
NLSRTL Version 11.2.0.4.0 - Production

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
optimizer_capture_sql_plan_baselines boolean     FALSE
optimizer_dynamic_sampling           integer     2
optimizer_features_enable            string      11.2.0.4
optimizer_index_caching              integer     0
optimizer_index_cost_adj             integer     100
optimizer_mode                       string      ALL_ROWS
optimizer_secure_view_merging        boolean     TRUE
optimizer_use_invisible_indexes      boolean     FALSE
optimizer_use_pending_statistics     boolean     FALSE
optimizer_use_sql_plan_baselines     boolean     TRUE

Please suggest how this can be optimized.

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
db_file_multiblock_read_count        integer     32

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
db_block_size                        integer     32768
cursor_sharing                       string      EXACT

Tags: Database

Similar Questions

-

Extract data from the table on hourly basis

Hello

I have a table with two columns: the date with the hour, and the response time. I want to extract the previous day's data on an hourly basis, together with the response time. The data will be loaded into the table every midnight.

For example: today's date is 23/10/2012.

I want to extract data from 22/10/12 00 to 22/10/12 23.

The subquery returns the dates as required, but I am not able to get the response time.

with a as

(select min(trunc(lhour)) as mindate, max(trunc(lhour)) as maxdate from AVG_HR)

SELECT to_char(maxdate + (level/25), 'dd/mm/yyyy hh24') as dates from a CONNECT BY LEVEL <= (1) * 24;

Please help me on this.

Try this

SELECT * FROM table_nm WHERE to_char(hour,'DD') = to_char(SYSDATE-1,'DD')

-

extract data from the generic cursor xy graph

Hi, I'm new in this Forum.

In my VI I need to extract the data from an XY graph between one cursor and another. How can I do this?

I want to extract all the data between the two cursors for further processing.

Thank you

In addition to everything altenbach said, you want the Cursor List: Index property. That will tell you where in the data array the cursors are.

The attached VI shows how this can work, and also how meaningless it can be with common types of XY data.

Lynn

-

Export data from a database table to a CSV file with an OWB mapping

Hello

Is it possible to export data from a database table to a CSV file with an OWB mapping? I think it should be possible, but I have not managed it yet. Can someone give me some tips on how to handle this? Does someone know a good article on the internet, or a book, where such a problem is described?

Thank you

Greetings, Daniel

Hi Daniel,

But how do I set the variable data file names in the mapping?

Look at this article on the OWB blog

http://blogs.Oracle.com/warehousebuilder/2007/07/dynamically_generating_target.html

Kind regards

Oleg

-

Can a date be used as a measure in the fact table?

Dear all,

I need to migrate a database from a relational model to a dimensional model. It is a sort of human resources database. I don't know what I should keep in the fact table. There are only dates, such as the date the employee joined the institution and the date he will leave. Most of the other fields are not numeric. The dates are also not numeric, but we can calculate the duration the employee worked from these dates.

What do you suggest?

Hello.

I suspect that you can add at least one column as a calculated measure:

case when employee.leave_date is not null then (employee.leave_date - employee.start_date) else null end;

which returns the working period.

Alex.

Edited by: Alex B on 07/29/2009 16:12
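The calculated measure suggested in this reply can be sketched as follows. This is only an illustration in Python, assuming that an employee who has not left has no leave date; the function name `working_period_days` is hypothetical and is not part of the thread.

```python
from datetime import date

def working_period_days(start_date, leave_date):
    """Return the number of days worked, or None while the employee has not left.

    Mirrors a CASE expression that subtracts the start date from the leave
    date only when the leave date is present.
    """
    if leave_date is None:
        return None
    return (leave_date - start_date).days

# Hypothetical employees: one who has left, one who is still employed.
left = working_period_days(date(2005, 1, 1), date(2005, 1, 31))
still_employed = working_period_days(date(2005, 1, 1), None)
```

In Oracle, subtracting one DATE from another also yields a number of days, so the measure can be stored as a plain number in the fact table.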

-

Extracting data from a CLOB using materialized views

Hello

We have XML data stored in a CLOB, from which I need to extract ~50 attributes on a daily basis, so we decided to use materialized views with complete refreshes (open to better suggestions).

A small snippet of the code:

CREATE MATERIALIZED VIEW MWMRPT.TASK_INBOUND
BUILD IMMEDIATE
REFRESH COMPLETE ON DEMAND
WITH ROWID
AS
SELECT M.TASK_ID, M.BO_STATUS_CD, b.*
FROM CISADM.M1_TASK m,
XMLTABLE ('/a' PASSING XMLPARSE (
CONTENT '<a>' || M.BO_DATA_AREA || '</a>'
) COLUMNS
serviceDeliverySiteId varchar2 (15) PATH 'cmPCGeneralInfo/serviceDeliverySiteId',
serviceSequenceId varchar2 (3) PATH 'cmPCGeneralInfo/serviceSequenceId',
completedByAssignmentId varchar2 (50) PATH 'completedByAssignmentId',
Cust_id varchar2 (10) PATH 'cmPCCustomerInformation/customerId',
ACCT_SEQ varchar2 (5) PATH 'customerInformation/accountId',
AGRMT_SEQ varchar2 (5) PATH 'cmPCCustomerAgreement/agreementId',
COLL_SEQ varchar2 (5) PATH 'cmPCGeneralInfo/accountCollectionSeq',
REVENUE_CLASS varchar2 (10) PATH 'cmPCCustomerAgreement/revenueClassCode',
REQUESTED_BY varchar2 (50) PATH 'customerInformation/contactName', ... (~50 attributes)

This DDL ran for > 20 hours and no materialized view was created. We have certain constraints:

- cannot create a materialized view log

- cannot change the source table, as it is vendor-defined

- cannot do an ETL

DB is 11g R2

Any ideas/suggestions are very much appreciated

I explored a similar approach, using the following test case.

It creates a table "MASTER_TABLE" containing 20,000 rows, each with a CLOB holding an XML fragment whose elements carry random 50-character values like these:

09HOLVUF3T6VX5QUN8UBV9BRW3FHRB9JFO4TSV79R6J87QWVGN
UUL47WDW6C63YIIBOP1X4FEEJ2Z7NCR9BDFHGSLA5YZ5SAH8Y8
O1BU1EXLBU945HQLLFB3LUO03XPWMHBN8Y7SO8YRCQXRSWKKL4
...
1HT88050QIGOPGUHGS9RKK54YP7W6OOI6NXVM107GM47R5LUNC
9FJ1JZ615EOUIX6EKBIVOWFDYCPQZM2HBQQ8HDP3ABVJ5N1OJA

Then an intermediate table "MASTER_TABLE_XML" with the same columns, except that the CLOB becomes an XMLType, and finally an MVIEW:

SQL> create table master_table as
select level as id
     , cast('ROW' || to_char(level) as varchar2(30)) as name
     , (
         select xmlserialize(content
                  xmlagg(
                    xmlelement(evalname('ThisIsElement' || to_char(level)), dbms_random.string('X', 50))
                  )
                  as clob indent
                )
         from dual
         connect by level <= 50
       ) as xmlcontent
from dual
connect by level <= 20000 ;

Table created.

SQL> call dbms_stats.gather_table_stats(user, 'MASTER_TABLE');

Call completed.

SQL> create table master_table_xml (
  id number
, name varchar2(30)
, xmlcontent xmltype
)
xmltype column xmlcontent store as securefile binary xml
;

Table created.

SQL> create materialized view master_table_mv
build deferred
refresh complete on demand
as
select t.id
     , t.name
     , x.*
from master_table_xml t
   , xmltable('/r' passing t.xmlcontent
       columns
         ThisIsElement1 varchar2(50) path 'ThisIsElement1'
       , ThisIsElement2 varchar2(50) path 'ThisIsElement2'
       , ThisIsElement3 varchar2(50) path 'ThisIsElement3'
       , ThisIsElement4 varchar2(50) path 'ThisIsElement4'
       , ThisIsElement5 varchar2(50) path 'ThisIsElement5'
       , ThisIsElement6 varchar2(50) path 'ThisIsElement6'
       , ThisIsElement7 varchar2(50) path 'ThisIsElement7'
       , ThisIsElement8 varchar2(50) path 'ThisIsElement8'
       , ThisIsElement9 varchar2(50) path 'ThisIsElement9'
       , ThisIsElement10 varchar2(50) path 'ThisIsElement10'
       , ThisIsElement11 varchar2(50) path 'ThisIsElement11'
       , ThisIsElement12 varchar2(50) path 'ThisIsElement12'
       , ThisIsElement13 varchar2(50) path 'ThisIsElement13'
       , ThisIsElement14 varchar2(50) path 'ThisIsElement14'
       , ThisIsElement15 varchar2(50) path 'ThisIsElement15'
       , ThisIsElement16 varchar2(50) path 'ThisIsElement16'
       , ThisIsElement17 varchar2(50) path 'ThisIsElement17'
       , ThisIsElement18 varchar2(50) path 'ThisIsElement18'
       , ThisIsElement19 varchar2(50) path 'ThisIsElement19'
       , ThisIsElement20 varchar2(50) path 'ThisIsElement20'
       , ThisIsElement21 varchar2(50) path 'ThisIsElement21'
       , ThisIsElement22 varchar2(50) path 'ThisIsElement22'
       , ThisIsElement23 varchar2(50) path 'ThisIsElement23'
       , ThisIsElement24 varchar2(50) path 'ThisIsElement24'
       , ThisIsElement25 varchar2(50) path 'ThisIsElement25'
       , ThisIsElement26 varchar2(50) path 'ThisIsElement26'
       , ThisIsElement27 varchar2(50) path 'ThisIsElement27'
       , ThisIsElement28 varchar2(50) path 'ThisIsElement28'
       , ThisIsElement29 varchar2(50) path 'ThisIsElement29'
       , ThisIsElement30 varchar2(50) path 'ThisIsElement30'
       , ThisIsElement31 varchar2(50) path 'ThisIsElement31'
       , ThisIsElement32 varchar2(50) path 'ThisIsElement32'
       , ThisIsElement33 varchar2(50) path 'ThisIsElement33'
       , ThisIsElement34 varchar2(50) path 'ThisIsElement34'
       , ThisIsElement35 varchar2(50) path 'ThisIsElement35'
       , ThisIsElement36 varchar2(50) path 'ThisIsElement36'
       , ThisIsElement37 varchar2(50) path 'ThisIsElement37'
       , ThisIsElement38 varchar2(50) path 'ThisIsElement38'
       , ThisIsElement39 varchar2(50) path 'ThisIsElement39'
       , ThisIsElement40 varchar2(50) path 'ThisIsElement40'
       , ThisIsElement41 varchar2(50) path 'ThisIsElement41'
       , ThisIsElement42 varchar2(50) path 'ThisIsElement42'
       , ThisIsElement43 varchar2(50) path 'ThisIsElement43'
       , ThisIsElement44 varchar2(50) path 'ThisIsElement44'
       , ThisIsElement45 varchar2(50) path 'ThisIsElement45'
       , ThisIsElement46 varchar2(50) path 'ThisIsElement46'
       , ThisIsElement47 varchar2(50) path 'ThisIsElement47'
       , ThisIsElement48 varchar2(50) path 'ThisIsElement48'
       , ThisIsElement49 varchar2(50) path 'ThisIsElement49'
       , ThisIsElement50 varchar2(50) path 'ThisIsElement50'
     ) x ;

Materialized view created.

The refresh is then performed in two steps:

- INSERT INTO master_table_xml

- Refresh the MVIEW

(Note: as we insert into an XMLType column, we need a well-formed XML document, with a single root, this time.)
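The point about needing a single-rooted document can be checked with a small sketch. It uses Python's standard `xml.etree.ElementTree` purely as an illustration, not Oracle's XMLPARSE; the wrapper element `r` follows the test case, while the sample values and the helper name `is_well_formed` are hypothetical.

```python
import xml.etree.ElementTree as ET

# A fragment with two sibling elements and no enclosing root (sample values are made up).
fragment = "<ThisIsElement1>ABC</ThisIsElement1><ThisIsElement2>DEF</ThisIsElement2>"

def is_well_formed(text):
    """Return True if the text parses as a complete XML document."""
    try:
        ET.fromstring(text)
        return True
    except ET.ParseError:
        return False

# Wrapping the fragment in a single root element turns it into a document.
wrapped = "<r>" + fragment + "</r>"
root = ET.fromstring(wrapped)
values = {child.tag: child.text for child in root}
```

The bare fragment is rejected because a document may have only one top-level element, which is why the INSERT step wraps the CLOB content before parsing it as a document.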

SQL > set timing on

SQL >

SQL > truncate table master_table_xml;

Table truncated.

Elapsed time: 00:00:00.27

SQL >

SQL> insert into master_table_xml
select id
     , name
     , xmlparse(document '<r>' || xmlcontent || '</r>')
from master_table ;

20000 rows created.

Elapsed time: 00:04:38.72

SQL >

SQL> call dbms_mview.refresh('MASTER_TABLE_MV');

Call completed.

Elapsed time: 00:00:22.42

SQL>

SQL> select count(*) from master_table_mv;

COUNT (*)

----------

20000

Elapsed time: 00:00:01.38

SQL > truncate table master_table_xml;

Table truncated.

Elapsed time: 00:00:00.41

-

How to extract data from the APEX report with stored procedure?

Hi all

I am building a report in APEX. The user selects two dates and clicks the GO button; I have a stored procedure linked to this result region, so the stored procedure is called.

My stored procedure does the following:

Using the specified dates (IN parameters), I run a query and put the data in a table (this table was created only for this report).

I want to show all the data that I inserted into the table in my APEX report with the same procedure call. Can I use a ref cursor return? How do I do this?

Currently, I use another button in APEX that basically retrieves all the data from the table. In other words, the user clicks one button to generate the report and then another button to display it, which is not desirable at all :(

I am using APEX 3.1.2.00.02 and an Oracle 10 database.

Please let me know if you need more clarification of the problem. Thanks in advance.

Kind regards

Probashi

Edited by: porobashi on May 19, 2009 14:53

APEX lets you base a report on a function that returns the SQL code... Your current code, by contrast, uses a ref cursor that returns the values...

See this thread about taking a ref cursor and wrapping it in a function to pipe it out as a 'table' (using a cast on the table function value)...

(VERY COOL STUFF HERE!)

Re: Trying to dynamically create the SQL statement for a SQL calendar

Thank you

Tony Miller

Webster, TX

-

Problem extracting data from a flat file using UTL_FILE

Dear members

I am using a series of SUBSTR and INSTR calls to extract the fields of a data file and trim the spaces, based on the position numbers given in the Excel worksheet. I was able to extract the first 3 fields, namely customer, manufacturer and product, but I cannot do it for quantity, requested ship date and price.

The flat file structure is as follows:

Field                Position From  Position To
CUSTOMER_NAME        1              30
MANUFACTURER         31             70
PRODUCT_NAME         71             90
QUANTITY             91             95
REQUESTED_SHIP_DATE  96             115
REQUESTED_PRICE      116            120

Sample data in the flat file is as follows:

BESTBUY SONY ERICSSON W580i 25 1-AUG-2008 50
BESTBUY SAMSUNG BLACKJACK 50 15-JUL-2008 150
BESTBUY APPLE IPHONE 4GB 50 15-JUL-2008
BESTBUY ATT TILT 100 15-JUN-2008
BESTBUY NOKIA N73 50 15-JUL-2008 200

My program code is the following:

CREATE OR REPLACE PROCEDURE ANVESH.PROC_CONVERSION_API(FILE_PATH IN VARCHAR2, FILE_NAME IN VARCHAR2)
IS
  v_file_type     utl_file.file_type;
  v_buffer        VARCHAR2(1000);
  V_CUSTOMER_NAME VARCHAR2(100);
  V_MANUFACTURER  VARCHAR2(50);
  V_PRODUCT_NAME  VARCHAR2(50);
  V_QUANTITY      NUMBER(10);
  V_REQ_SHIP_DATE DATE;
  V_REQ_PRICE     NUMBER(10);
  V_LOG_FILE      utl_file.file_type;
  V_COUNT_CUST    NUMBER;
  V_COUNT_PROD    NUMBER;
  v_start_pos     number := 1;
  v_end_pos       number;
BEGIN
  DBMS_OUTPUT.PUT_LINE('Inside begin 1');
  v_file_type := UTL_FILE.fopen(FILE_PATH, FILE_NAME, 'r', null);
  DBMS_OUTPUT.PUT_LINE('Inside begin 1.1');
  LOOP
    BEGIN
      DBMS_OUTPUT.PUT_LINE('Inside begin 2');
      UTL_FILE.GET_LINE(v_file_type, v_buffer);
      DBMS_OUTPUT.PUT_LINE('Inside begin 2.1');
      select instr('v_buffer', ' ', 1, 1) - 1
      --into v_end_pos
      from dual;
      select substr('v_buffer', 1, 7)
      --into V_CUSTOMER_NAME
      from dual;
      select instr('v_buffer', ' ', 31, 2) - 1
      --into v_end_pos
      from dual;
      select trim(substr('v_buffer', 28, 43))
      --into V_MANUFACTURER
      from dual;
      select instr('v_buffer', ' ', 45, 1) - 1
      --into v_end_pos
      from dual;
      select trim(substr('v_buffer', 44, 45))
      --into V_PRODUCT_NAME
      from dual;
      V_LOG_FILE := UTL_FILE.FOPEN(FILE_PATH, 'LOG_FILE.dat', 'A');
      IF (V_QUANTITY > 0) THEN
        SELECT COUNT(*) INTO V_COUNT_CUST FROM CONVERSION_CUSTOMERS WHERE CUSTOMER_NAME = V_CUSTOMER_NAME;
        IF (V_COUNT_CUST > 0) THEN
          SELECT COUNT(*) INTO V_COUNT_PROD FROM conversion_products WHERE PRODUCT_NAME = V_PRODUCT_NAME;
          IF (V_COUNT_PROD > 0) THEN
            INSERT INTO XXCTS_ORDER_DETAILS_STG
            VALUES (V_CUSTOMER_NAME, V_PRODUCT_NAME, V_MANUFACTURER, V_QUANTITY, V_REQ_SHIP_DATE, V_REQ_PRICE, 'ACTIVE', 'ORDER TAKEN');
          ELSE
            DBMS_OUTPUT.PUT_LINE('PRODUCT SHOULD BE VALID');
            UTL_FILE.PUT_LINE(V_LOG_FILE, 'PRODUCT SHOULD BE VALID');
          END IF;
        ELSE
          DBMS_OUTPUT.PUT_LINE('CUSTOMER SHOULD BE VALID');
          UTL_FILE.PUT_LINE(V_LOG_FILE, 'CUSTOMER SHOULD BE VALID');
        END IF;
      ELSE
        DBMS_OUTPUT.PUT_LINE('QUANTITY SHOULD BE VALID');
        UTL_FILE.PUT_LINE(V_LOG_FILE, 'QUANTITY SHOULD BE VALID');
      END IF;
    EXCEPTION
      WHEN NO_DATA_FOUND THEN
        EXIT;
    END;
  END LOOP;
END;
/

I was able to extract the first 3 fields of the data file, but when I use the same SUBSTR and INSTR functions to extract the next three fields I am unable to do so (I get the 5th and 6th fields as well when I extract the 4th field). I have hardcoded the position values in these functions, as given in the flat file structure.

It would be great if someone could tell me how to extract the last three fields from the flat file.

Thank you

Romaric

Romaric,

Why do you use v_end_pos to trim spaces when you know the start and end positions of all the columns?

I do not see the code where you extract the values of the other 3 columns (Qty, Date & Price). You can use SUBSTR as below rather than doing a SELECT each time.

-- The third argument of SUBSTR is a length, not an end position (length = to - from + 1).
V_CUSTOMER_NAME := TRIM(SUBSTR(v_buffer, 1, 30));
V_MANUFACTURER := TRIM(SUBSTR(v_buffer, 31, 40));
V_PRODUCT_NAME := TRIM(SUBSTR(v_buffer, 71, 20));
V_QUANTITY := TO_NUMBER(TRIM(SUBSTR(v_buffer, 91, 5)));
V_REQ_SHIP_DATE := TO_DATE(TRIM(SUBSTR(v_buffer, 96, 20)), 'DD-MON-YYYY');
V_REQ_PRICE := TO_NUMBER(TRIM(SUBSTR(v_buffer, 116, 5)));

-Raj
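The fixed-position extraction discussed in this thread can be sketched outside PL/SQL as well. The following Python illustration takes the from/to positions from the layout in the question; the names `LAYOUT`, `substr_args` and `parse_line` are hypothetical, and the sample line is built on the assumption that each field is left-justified and space-padded to its full width.

```python
# (field name, first position, last position): 1-based and inclusive, as in the layout.
LAYOUT = [
    ("customer_name", 1, 30),
    ("manufacturer", 31, 70),
    ("product_name", 71, 90),
    ("quantity", 91, 95),
    ("requested_ship_date", 96, 115),
    ("requested_price", 116, 120),
]

def substr_args(first, last):
    """Convert a from/to pair into the (start, length) pair that SUBSTR expects."""
    return first, last - first + 1

def parse_line(line):
    """Slice one fixed-width record into trimmed string fields."""
    record = {}
    for name, first, last in LAYOUT:
        # Python slices are 0-based with an exclusive end, hence first - 1.
        record[name] = line[first - 1:last].strip()
    return record

# Assumed padding: every field left-justified to its full width.
sample = (
    "BESTBUY".ljust(30) + "SONY ERICSSON".ljust(40) + "W580i".ljust(20)
    + "25".ljust(5) + "1-AUG-2008".ljust(20) + "50".ljust(5)
)
record = parse_line(sample)
```

Passing the end position as the length argument makes a field overrun into its neighbours, which would match the symptom of getting the 5th and 6th fields along with the 4th.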

-

I am reading data from an STI flow meter using the basic serial write and read example, which uses VISA. The string read begins with 'OK' or an error code, then a carriage return, then the data follows as flow rate, temp, pressure, flow rate, temp... and so on, separated by commas. The string ends with a CR LF. I need to pull the flow, temperature and pressure data out of the string and write it to a file. I am having difficulty finding a function that will retrieve the data from the string. Any suggestions? Thank you.

Try String Subset in the String palette.

Or Match Pattern if you want more direct control.

Scan From String is also a good option, but you must know exactly what you are going to read, or else handle the error and pass the input through.
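The parsing steps suggested in these replies can be sketched in text form. This Python illustration is not LabVIEW code; it assumes the response layout described in the question (a status, a carriage return, comma-separated values, then CR LF) and assumes the values repeat in the order flow, temperature, pressure. The function name `parse_response` and the sample readings are hypothetical.

```python
def parse_response(raw):
    """Split an instrument response into (flow, temperature, pressure) tuples.

    Raises ValueError when the status before the first carriage return is not 'OK'.
    """
    text = raw.rstrip("\r\n")                  # drop the trailing CR LF
    status, _, payload = text.partition("\r")  # status comes before the first CR
    if status != "OK":
        raise ValueError(f"instrument returned error code {status!r}")
    values = [float(v) for v in payload.split(",") if v.strip()]
    usable = len(values) - len(values) % 3     # ignore an incomplete trailing group
    return [tuple(values[i:i + 3]) for i in range(0, usable, 3)]

# Hypothetical response carrying two complete samples.
samples = parse_response("OK\r1.5,22.0,101.3,1.6,22.1,101.2\r\n")
```

Each tuple can then be written to a file as one line, for instance joined with tabs.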

-

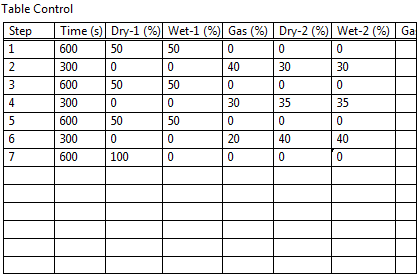

Using data from the control table

Hello

I would like to use the data entered in a table to automate the volume/sequence of airflow for a test chamber. As shown in the screenshot of the control table, the first column gives the number of iterations per table row in a test series, and the second column specifies the duration of each row's sequence. Entries in the other columns are specific to different valves and specify the required set points for the flow meters.

I guess my question is: how can I index each column of the table so that the data for operating the respective valves are obtained in successive iterations, while data from other components are acquired at the same time, in sequence? While I recognize that the solution may be very simple, I have searched through various examples and posts on tables and arrays without hitting on a solution. The attached .vi allows me to run one part of the test series.

Best regards

Callisto

Hi Callisto,

If I understand your question, then the solution is actually quite simple. The important point to keep in mind is that the Table control can be treated as an array of strings. You can then use all the traditional array manipulation tools and techniques to manipulate your data as you wish. For example, use the Index Array function to retrieve specific columns, and then, if you want to pass the individual elements of a column into a loop, wire the array to the loop and ensure that indexing is enabled. If you then want to use these data elements to control your test application, you can convert the resulting strings to a more useful numeric type.

All these concepts are illustrated in the attached VI. I hope this helps, but let me know if you have any other questions.

Best regards

Christian Hartshorne

NIUK

-

How to copy data from a 1D array indicator to the clipboard

Is it possible to copy data from a 1D array indicator to the clipboard as text, for more than one cell?

I use LV8.6.

Leszek

Sorry, I wasn't sure if it was available in 8.6; apparently not.

You could create a string indicator and use 'Array To Spreadsheet String' to fill it. Then you can just cut & paste the text instead.

-

Create a flat data file from an Oracle table

d_adp_num char (10)
d_schd_date char (8)
d_sched_code char (25)
d_pay_code char (50)
d_mil_start char (4)
d_mil_end char (4)
d_duration char (5)
d_site_code char (4)
d_dept_id char (6)

select payroll_id,
schedule_date,
reason_code, -- (sched_code)
reason_code, -- (pay_code)
start_time,
end_time,
total_hours,
site_code,
department_id
from dept_staff
where schedule_date between (sysdate + 1) and (sysdate + 90)

Load the data for the date range above.

sched_code - 'Unavailable' if reason_code = 'OD' and 'THE'.

pay_code - "Berevevement BD" if reason_code = "BD".

"UP PTO without reasonable excuse" if reason_code = 'UP'.

"RG" if reason_code = "SH".

"PTO" if reason_code = "GO".

And a few more...

start_time and end_time - convert to military time

based on start_ampm and end_ampm

Based on this, I need help creating the flat file. A sample flat file and sample data from dept_staff follow.

If site_code is present, there is no need to get department_id (see the sample flat file).
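The military-time rule for start_time and end_time can be sketched as below. This is a Python illustration only, assuming the time is stored as a number such as 730 and the AM/PM marker as 'A' or 'P', as the sample data suggests; the function name `to_military` is hypothetical.

```python
def to_military(time_value, ampm):
    """Convert a 12-hour clock value such as 730 plus an 'A'/'P' marker to 'HHMM'."""
    hours, minutes = divmod(int(time_value), 100)
    marker = ampm.strip().upper()
    if marker == "P" and hours != 12:
        hours += 12      # 4:00 PM becomes 16:00
    elif marker == "A" and hours == 12:
        hours = 0        # 12:30 AM becomes 00:30
    return f"{hours:02d}{minutes:02d}"

# Values in the style of the dept_staff sample rows.
start = to_military(730, "A")
end = to_military(400, "P")
```

The result is always four characters wide, which fits the char (4) layout of d_mil_start and d_mil_end.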

------------------------------------

examples of data to flat file

ZZW002324006072012 PTO

0800160008.00

ZZW002428106072012 RG

1015174507.50HM34

ZZW002391606072012 RG

1100193008.50

ZZW002430406072012 RG

1100193008.50 130000

----------------------------

dept_staff table data

PAYROLL_ID  SCHEDULE_DATE  REASON_CODE  REASON_CODE_1  START_TIME  END_TIME  START_AMPM  END_AMPM  TOTAL_HOURS  SITE_CODE  DEPARTMENT_ID
ZZW0024468  08/06/2012     HS           HS             730         400       A           P         850                     12
ZZW0000199  08/06/2012     HS           HS             730         400       A           P         850                     14
ZZW0023551  08/06/2012     SH           SH             1145        930       A           P         975          GH08       95
ZZW0024460  08/06/2012     SH           SH             515         330       A           P         1025         GH08       95
ZZW0023787  08/06/2012     SH           SH             630         300       A           P         850                     24
ZZW0024595  08/06/2012     TR           TR             730         400       A           P         850                     90
ZZW0023516  08/06/2012     OD           OD             800         400       A           P         800                     95
ZZW0023784  08/06/2012     OD           OD             800         400       A           P         800                     5
ZZW0024445  08/06/2012     SH           SH             1145        930       A           P         975          GH08       5
ZZW0024525  08/06/2012     OD           OD             800         400       A           P         800                     23
ZZW0024592  08/06/2012     TR           TR             730         400       A           P         850                     5
ZZW0024509  08/06/2012     SH           SH             830         330       A           P         700          MK21       95
ZZW0023916  06/14/2012     SH           SH             1100        730       A           P         850                     27

How to ask questions

SQL and PL/SQL FAQ

UTL_FILE allows you to write to an OS file.

-

Java code to extract data from a custom table into a form

I need to transfer data from a table to a form. Please provide the Java method to do this. The table has 4 columns. I need to populate the form with all the data. It would be a great help if somebody could provide the Java code and the XPRESS logic to achieve this.

Please refer to the documentation for the com.waveset.util.JdbcUtil API. The class has functions for the features you need.

-

Move old data out of a MySQL table

I have a web page that retrieves its content from a database. The tables are filled via a restricted page that was created for data entry. I would like to know whether there is any way to push old data to another table after a certain period. For example, if data has been created in table1 for seven days, then on the eighth day I would like the data entered on day 1 to move to table2, so that table1 has room for the data created on day 8. I would like to keep only 7 days' worth of data in table1. Also, how do I remove data from table2 completely after 90 days? And what must I do to have the latest entry appear in row 1 of the table?

Thank you

keramatali wrote:

what do I need to do to display the data from day 2 to day 7 instead of showing data from day 1 to day 7

It's a simple matter of logic. Getting the last seven days means retrieving dates that are greater than or equal to today minus seven. To get days 2 to 7, you need dates that are less than or equal to today minus 1 AND greater than or equal to today minus 7.
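The date logic described here can be sketched as follows. This is a Python illustration of the comparison only, not MySQL syntax; the function name `in_days_2_to_7` and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta

def in_days_2_to_7(date_created, now):
    """True when date_created lies between now minus 7 days and now minus 1 day, inclusive."""
    return now - timedelta(days=7) <= date_created <= now - timedelta(days=1)

now = datetime(2012, 10, 23, 12, 0)
recent = datetime(2012, 10, 23, 9, 0)     # created today, too new
in_window = datetime(2012, 10, 20, 9, 0)  # three days old, inside the window
too_old = datetime(2012, 10, 15, 9, 0)    # eight days old, outside the window
```

Both bounds are inclusive, which mirrors the <= and >= comparisons in the query.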

SELECT column1, column2, column3
FROM myTable
WHERE date_created <= SUBDATE(NOW(), 1) AND date_created >= SUBDATE(NOW(), 7)
ORDER BY date_created DESC

-

Disadvantages of using the default tablespace to store data from a partitioned table?

Can someone tell me whether there are any disadvantages or performance problems in using the default storage in Oracle?

I did not create any tablespace when creating the database, so all the partitioned data is in the default tablespace named 'USERS'. Can I continue using the same tablespace? I am storing data in a table that will grow large; it may contain millions of records. Will this cause any performance degradation? Please advise.

Different tablespaces are used for easier administration and maintenance, and in some cases for performance reasons, when different disks are presented to the database (fast and not so fast, different RAID levels...).

For example, if you have several database schemas for different applications, you may want to separate the schema objects into different tablespaces.

Or, for example, you may want to keep both read-write and read-only tablespaces in the database. The data files of read-write tablespaces will be on very fast disks, the read-only ones on cheaper and perhaps slower disks (not required). Again, separate tablespaces make this very easy to do.

Or you may want to keep indexes separate from tables, in a different tablespace on a different mount point (disk). In this case it is probably better to use ASM, but it is one more reason to separate.

And in your case, it may be easier to manage if you create a new tablespace for these new objects.

For example:

1 tablespace for small tables

1 tablespace for small indexes

1 tablespace for large tables

1 tablespace for large indexes

and so on. It all depends on your particular architecture, the data growth of the database, and what you do with the data after one year, two years, three...
