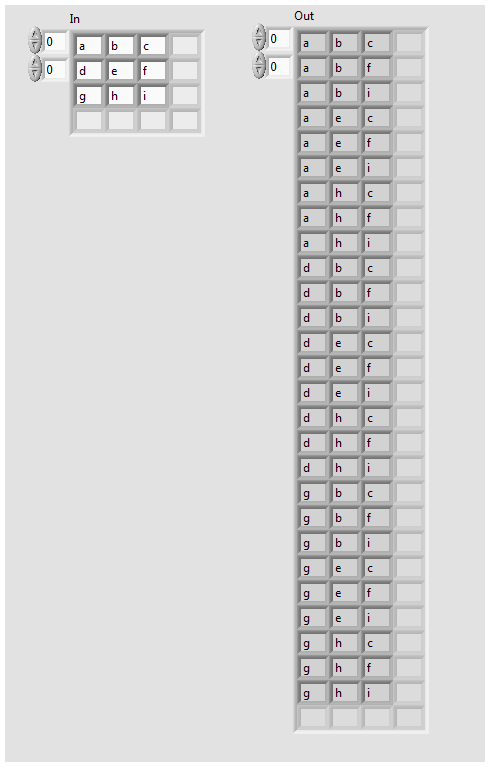

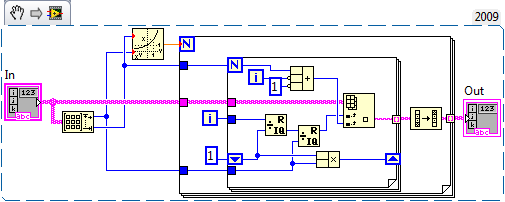

Table 2D product

What is the best way to solve the problem below? I don't know yet how to describe other I want the 'product' of a 2D array. A picture is the best way to illustrate what I want to do.

A Cartesian product under a different name is always the same. Simple mod to my version inplace of the CP.

Tags: NI Software

Similar Questions

-

How to read a table of product download?

If you generate a table of product on the supplier portal site download, what exactly counts as a "Download"? Only new customers, or re - installs also or even updates?

"All those you listed" meant that the chart displays everything you mentioned: new installs, updates, reinstall.

If you want something different, you generate a report instead, you download a CSV file and then make your own graph in a spreadsheet or whatever. Some of us create our own few tools to do this kind of thing.

There are also third-party services such as Distimo.

-

update a single table of production to dev

Oracle 9.2 in production and dev

I need to update a table from production to dev, only data refresh.

What is the best way to do it?

truncate table in dev, and then use the dblink to connect prod dev. then use insert... .dev in select... from prod.table

OR

import/export

Please throw some lights

Thank you.

S.

-

Rename the Oracle Tables in Production

If you need to rename tables Oracle in Production, the process is more efficient:

A.

TABLE xxx in EXCLUSIVE MODE LOCK

RENAME xxxTO NewName;

Renamed table.

B. dbms_redefinition

C. other...

Thank you!!All we can say is that dmbs_redefinition is an option to consider. Only the OP knows his environment, what type of window work will be done and synchronization problems between the changes of names and the move to the new code. As in the case where you need the names of new objects in place to run java compiles to move into the new classes.

At my current job we would take a window and close the database, put in restricted mode, the changes of names of the tables (and indexes associated with fine standards), move the code in place and test it. Complete testing may require bans the system or we could be able to grant only session restricted to some users. Once we are satisfied of the start us our daemon process and then release the batch schedule.

IMHO - Mark D Powell-

-

the data from the tables of production in Oracle in pipe delimited flat f

Hello

Please someone tel me the query how to extract data in oracle in pipe delimited flat files using plsql stored procedure as updates.plz incremental tel me its urgentHow to extract data in oracle in pipe delimited flat files plsql stored procedure

You can try utl_file.

and also do incremental updates.

This part, I do not understand. Updates of what? The above file?

Please tel me its urgent

Who cares.

-

Restore data deleted by mistake from the production oracle table

Forms [32 bit] Version 10.1.2.0.2 (Production)

Oracle Toolkit Version 10.1.2.0.2 (Production)

PL/SQL Version 10.1.0.4.2 (Production)

Oracle V10.1.2.0.2 - Production procedure generator

PL/SQL Editor (c) WinMain Software (www.winmain.com), v1.0 (Production)

Query Oracle 10.1.2.0.2 - Production Designer

Oracle virtual graphics system Version 10.1.2.0.2 (Production)

The GUI tools Oracle Utilities Version 10.1.2.0.2 (Production)

Oracle Multimedia Version 10.1.2.0.2 (Production)

Oracle tools integration Version 10.1.2.0.2 (Production)

Common tools Oracle area Version 10.1.2.0.2

Oracle 10.1.0.4.0 Production CORE

This morning I deleted data tables of production not having is not all correct conditions. This removed some required data.

Y at - it a command to retrieve these data? Help is appreciated. The table name is

dept_staff

"where SCHEDULE_DATE between ' 9-mar-2014' and 15-mar-2014."

I used this query

SELECT * from dept_staff

FROM TIMESTAMP

TO_TIMESTAMP ('2014-03-03 07:00 ',' YYYY-MM-DD HH)

"WHERE the SCHEDULE_DATE between ' 9-mar-2014' and 15-mar-2014."

Is this OK. Is - this show what existed in the table at 07:00 today? before deleting?

-

Forgive my question. I am very new to Oracle.

How can I make sure that changes in the key primary supplier_id (concerning the supplier table) would also appear directly in the FOREIGN KEY (supplier_id) in the products table?

Is that not all the primary key and FOREIGN KEY on?

My paintings:

I created 2 tables and connect to apply in the data base referential integrity, as I learned.

CREATE TABLE - parent provider

(the numeric (10) of supplier_id not null,)

supplier_name varchar2 (50) not null,

Contact_Name varchar2 (50).

CONSTRAINT supplier_pk PRIMARY KEY (supplier_id)

);

CREATE TABLE - child products

(the numeric (10) of product_id not null,)

supplier_id numeric (10) not null,

CONSTRAINT fk_supplier

FOREIGN KEY (supplier_id)

REFERENCES beg (supplier_id)

);

I inserted the following text:

INSERT INTO provider

(supplier_id, supplier_name, contact_name)

VALUES

(5000, 'Apple', 'first name');

I expect that the supplier_id (5000) to the provider of the table also appears in the products table under key supplier_id having the same value which is 5000. But this does not happen.

How to get there?

Thanks in advance!

Hello

What is a foreign key in Oracle?

A foreign key is a way to ensure referential integrity in your Oracle database. A foreign key means that the values of a table must appear also in another table.

Ok!??

What is now the right way to implement referential integrity in your Oracle database that the values of a table must also be included in another table?

A foreign key referential integrity indeed enfore in ensuring that the value in the child table must have a corresponding parent key (otherwise you will encounter an error, as evidenced by "SomeoneElse"). However, it will never automatically insert a row in the other table.

If you are looking for a solution that automatically inserts a record in the other table, maybe you should go for triggers:

See:

-

Large table must be moved schema

Hi guru Oracle

IM using AIX 5.3 with oracle 11.2.0.2

I just finished a job where I took a LOW partition of a partitioned table on our production database and installs all the data table into its own not partitioned our database archive. This table on Archive is 1.5 to.

Just to give you a brief overview of what I've done, successfully:

-Created a partition no table on Production with exactly the same structure as the partitioned table set apart from the partitions. Then, I moved all the subject segments in the same tablespace as the table to make it TRANSPORTABLE.

-J' then took an expdp of the metadata tables by using the transport_tablespaces parameter.

-Take the tablespace to read write I used the cp command to transfer the data files to the new directory.

-Then on the database to ARCHIVE, I used impdp to import metadata and direct them to new files.

parfile =

DIRECTORY = DATA_PUMP_DIR

DUMPFILE = dumpfile.dmp

LOGFILE logfile.log =

REMAP_SCHEMA = schema1:schema2

DB11G = "xx", "xx", "xx"...

My problem now is that there is some confusion and I traced the wrong schema, this isn't a major problem, but I wouldn't like to groom, so instead of saying REMAP_SCHEMA = schema1:schema2 must be REMAP_SCHEMA = schema1:schema3

To the question: what is the best way to occupy the table schema3 (1.5 to in scheam2).

To the question: what is the best way to occupy the table schema3 (1.5 to in scheam2).

The easiest way is to use EXCHANGE PARTITTION to just 'swap' in the segment.

You can only 'swap' between a partitioned table and a non-partitioned table, and you already have a non-partitioned table.

So, create a table that is partitioned with a score in the new schema and table not partitioned in the same pattern again.

Then exchange the old schema with the partition table in the new schema. Then share this new partition with the new table not partitioned in the new scheme. No data should move at all - just a data dictionary operation.

Using patterns HR and SCOTT. Suppose you have a copy of the EMP table in SCOTT that you want to move to HR.

- as SCOTT

grant all on emp_copy HR- as HR

CREATE TABLE EMP_COPY AS SELECT * FROM SCOTT. EMP_COPY WHERE 1 = 0;

-create a partitioned temp table with the same structure as the table

CREATE TABLE EMP_COPY_PART

PARTITION OF RANGE (empno)

(partition ALL_DATA values less than (MAXVALUE)

)

AS SELECT * FROM EMP_COPY;-the Bazaar in the segment of the table - very fast

ALTER TABLE EXCHANGE PARTITION ALL_DATA EMP_COPY_PART TABLE SCOTT. EMP_COPY;-now share again in the target table

ALTER TABLE EXCHANGE PARTITION ALL_DATA WITH TABLE EMP_COPY EMP_COPY_PART; -

Extracting data from table using the date condition

Hello

I have a table structure and data as below.

create table of production

(

IPC VARCHAR2 (200),

PRODUCTIONDATE VARCHAR2 (200),

QUANTITY VARCHAR2 (2000).

PRODUCTIONCODE VARCHAR2 (2000).

MOULDQUANTITY VARCHAR2 (2000));

Insert into production

values ('1111 ', '20121119', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121122', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121126', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121127', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121128', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121201', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121203', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20121203', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20130103', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20130104', ' 1023', 'AAB77',' 0002');

Insert into production

values ('1111 ', '20130105', ' 1023', 'AAB77',' 0002');

Now, here I want to extract the data with condition as

PRODUCTIONDATE > = the current week Monday

so I would skip only two first rows and will need to get all the lines.

I tried to use it under condition, but it would not give the data for the values of 2013.

TO_NUMBER (to_char (to_date (PRODUCTIONDATE, 'yyyymmdd'), 'IW')) > = to_number (to_char (sysdate, 'IW'))

Any help would be appreciated.

Thank you

MaheshHello

HM wrote:

by the way: it is generally a good idea to store date values in date columns.One of the many reasons why store date information in VARCHAR2 columns (especially VARCHAR2 (200)) is a bad idea, it's that the data invalid get there, causing errors. Avoid the conversion of columns like that at times, if possible:

SELECT * FROM production WHERE productiondate >= TO_CHAR ( TRUNC (SYSDATE, 'IW') , 'YYYYMMDD' ) ; -

Creating a table, to replace an old

Salvation of the experts;

I'm looking for advice about what I'll do.

This is the situation;

I had a table of production with more than 1 000 000 000 of records.

queries of this table are too slow, and I decided to create a new table, to replace this one.

But my doubts are:

How can I create a new table, including the same structure as that old and with data.

I received this request:

Create new_table in select * from ancienne_table

where trans_year = '2012 '.

I know with this I got the structure, but what about clues?

Another my doubt is, to replace the old, just I drop and rename a new?

This table belongs to an oracle 9i DB.

Thanks for your comments.

Aluser12048358 wrote:

owner = raditdb exp username/password@raditdb tables = raditdata query = "where status = 'start'" file=/mnt/raditdata.dmp buffer = 50000 log=/mnt/raditdata.log "You must escape the quotation marks:

exp username/password@raditdb owner=raditdb tables=raditdata query=\"where status= 'Start'\" file=/mnt/raditdata.dmp buffer=50000 log=/mnt/raditdata.logGood luck.

-

Load data from an intermediate table into a real table by using the value of the indicator

I need a code quick and dirty pl/sql to read the intermediate table ' STG_TABLE, line by line and load data into a PROD_TABLE.» The load should fail altogether, is a restoration should occur when he there an error occurs during registration to insert into a table of production using a flag value of Y as outcome and N as a failure.

Any suggestions?Hello

It seems that if you want something like:

BEGIN :ok_flag := 'N'; INSERT INTO prod_table (col1, col2, ...) SELECT col1, col2, ... FROM stg_table; :ok_flag := 'Y'; END;RESTORATION is done automatically in case of error.

Instead of a link to ok_flag variable, you can use another type of variable that is defined outside the scope of this PL/SQL code.

Published by: Frank Kulash, June 5, 2012 22:47

-

Guys,

A basic question.when we build our ODI model we build using database development using the topology of dev and we build our interfaces using this model. When we move to production, we just need to configure the topology pointing to production and use that when we execute our interface.is that right? or do we build our model using tables of production using the topology of production and then build our interface using this model.

than the one that we need to do? or do we need the two models of odi and then use dev model to build the interface, and then when we run

Just use the topology of production... IAM get confused a bit here, could you please me clear on this.

See you soonWhen you have a standard installation of ODI: (one master repository and several repositories of work - one for the dev and test for prod).

You will define all the dev, test and prod connections in the architecture of the physical topology.

You will need to create a context for the dev, a test and one for prod.

For each of these contexts, you will link the physical according to connection. What you have to do through the logical architecture.

The models that you use in your scenario s, interfaces, procedures, variables are related to this logical architecture and will use the correct physical architecture when you choose "dev" or "test" or "prod" context when executing it. -

update to column values (false) in a copy of the same table with the correct values

Database is 10gr 2 - had a situation last night where someone changed inadvertently values of column on a couple of hundred thousand records with an incorrect value first thing in the morning and never let me know later in the day. My undo retention was not large enough to create a copy of the table as it was 7 hours comes back with a "insert in table_2 select * from table_1 to timestamp...» "query, so I restored the backup previous nights to another machine and it picked up at 07:00 (just before the hour, he made the change), created a dblink since the production database and created a copy of the table of the restored database.

My first thought was to simply update the table of production with the correct values of the correct copy, using something like this:

Update mnt.workorders

Set approvalstat = (select b.approvalstat

mnt.workorders a, mnt.workorders_copy b

where a.workordersoi = b.workordersoi)

where exists (select *)

mnt.workorders a, mnt.workorders_copy b

where a.workordersoi = b.workordersoi)

It wasn't the exact syntax, but you get the idea, I wanted to put the incorrect values in x columns in the tables of production with the correct values of the copy of the table of the restored backup. Anyway, it was (or seem to) works, but I look at the process through OEM it was estimated 100 + hours with full table scans, so I killed him. I found myself just inserting (copy) the lines added to the production since the table copy by doing a select statement of the production table where < col_with_datestamp > is > = 07:00, truncate the table of production, then re insert the rows from now to correct the copy.

Do a post-mortem today, I replay the scenario on the copy that I restored, trying to figure out a cleaner, a quicker way to do it, if the need arise again. I went and randomly changed some values in a column number (called "comappstat") in a copy of the table of production, and then thought that I would try the following resets the values of the correct table:

Update (select a.comappstat, b.comappstat

mnt.workorders a, mnt.workorders_copy b

where a.workordersoi = b.workordersoi - this is a PK column

and a.comappstat! = b.comappstat)

Set b.comappstat = a.comappstat

Although I thought that the syntax is correct, I get an "ORA-00904: 'A'. '. ' COMAPPSTAT': invalid identifier ' to run this, I was trying to guess where the syntax was wrong here, then thought that perhaps having the subquery returns a single line would be cleaner and faster anyway, so I gave up on that and instead tried this:

Update mnt.workorders_copy

Set comappstat = (select distinct)

a.comappstat

mnt.workorders a, mnt.workorders_copy b

where a.workordersoi = b.workordersoi

and a.comappstat! = b.comappstat)

where a.comappstat! = b.comappstat

and a.workordersoi = b.workordersoi

The subquery executed on its own returns a single value 9, which is the correct value of the column in the table of the prod, and I want to replace the incorrect a '12' (I've updated the copy to change the value of the column comappstat to 12 everywhere where it was 9) However when I run the query again I get this error :

ERROR on line 8:

ORA-00904: "B". "" WORKORDERSOI ": invalid identifier

First of all, I don't see why the update statement does not work (it's probably obvious, but I'm not)

Secondly, it is the best approach for updating a column (or columns) that are incorrect, with the columns in the same table which are correct, or is there a better way?

I would sooner update the table rather than delete or truncate then re insert, as it was a trigger for insert/update I had to disable it on the notice re and truncate the table unusable a demand so I was re insert.

Thank youHello

First of all, after post 79, you need to know how to format your code.

Your last request reads as follows:

UPDATE mnt.workorders_copy SET comappstat = ( SELECT DISTINCT a.comappstat FROM mnt.workorders a , mnt.workorders_copy b WHERE a.workordersoi = b.workordersoi AND a.comappstat != b.comappstat ) WHERE a.comappstat != b.comappstat AND a.workordersoi = b.workordersoiThis will not work for several reasons:

The sub query allows you to define a and b and outside the breakets you can't refer to a or b.

There is no link between the mnt.workorders_copy and the the update and the request of void.If you do this you should have something like this:

UPDATE mnt.workorders A -- THIS IS THE TABLE YOU WANT TO UPDATE SET A.comappstat = ( SELECT B.comappstat FROM mnt.workorders_copy B -- THIS IS THE TABLE WITH THE CORRECT (OLD) VALUES WHERE a.workordersoi = b.workordersoi -- THIS MUST BE THE KEY AND a.comappstat != b.comappstat ) WHERE EXISTS ( SELECT B.comappstat FROM mnt.workorders_copy B WHERE a.workordersoi = b.workordersoi -- THIS MUST BE THE KEY AND a.comappstat != b.comappstat )Speed is not so good that you run the query to sub for each row in mnt.workorders

Note it is condition in where. You need other wise, you will update the unchanged to null values.I wouold do it like this:

UPDATE ( SELECT A.workordersoi ,A.comappstat ,B.comappstat comappstat_OLD FROM mnt.workorders A -- THIS IS THE TABLE YOU WANT TO UPDATE ,mnt.workorders_copy B -- THIS IS THE TABLE WITH THE CORRECT (OLD) VALUES WHERE a.workordersoi = b.workordersoi -- THIS MUST BE THE KEY AND a.comappstat != b.comappstat ) C SET C.comappstat = comappstat_OLD ;This way you can test the subquery first and know exectly what will be updated.

This was not a sub query that is executed for each line preformance should be better.Kind regards

Peter

-

Columns of the nested Table and ADF BC 11.1.2

I think coming to a new conception of the application, including a redesign of the database. In this application, there are users who cannot change tables of production directly, but their amendments must be approved (and possibly modified) before applying them to production tables. The production tables are part of an existing system and are fairly well standardized, with a main table and several paintings of detail.

So for the new design, I want to have a table intermediate, mirrored in the main table, where the user's changes are stored until they are approved and applied to the production tables. The intermediate table contains some additional columns for the user "add, change or delete", who supported the change, the date modified is requested. After you apply the change, the intermediate folder must be copied in a historic change and deleted from the staging table. In this way, the intermediate table is never a lot of data in it.

Here's the question:

I need to treat the tables in detail. I could have staged versions of each table in detail, but I thought it might be easier to manage if detail tables have been included in table nested table columns main staging area. Most of the detail tables contain only a few rows with rank of master. But ADF BC 11.1.2 can treat the nested table columns? Is it easy to use in an application?Hello

and ADF Faces does support nested tables? lol so even if ADF BC would be, where would you go with this approach? Polymorphic views would be an option (think hard)?

Frank

-

Need to download a CSV/Excel file to a table in the page of the OFA

I have a table of products can be changed on a page of the OFA. I provide this table with the button 'Download', clicking on, the user must be able to locate the file excel/csv(It contain some products) local office and be able to download it in the products table.

No idea how this can be done?

Do we need to use messageFileUpload > get DataObject > > convert StringBuffer > > > and divide it into lines > > > > insert each line through VO > > > > > refresh the VO?

Who is the process or do we not have any other process. If Yes can you please report it to a few examples of messageFileUpload?

Thank you

Sicard.' Public Sub processFormRequest (pageContext OAPageContext, OAWebBean webBean)

{

super.processFormRequest (pageContext, webBean);

OAApplicationModule m = pageContext.getApplicationModule (webBean) (OAApplicationModule);

OAViewObjectImpl vo = am.findViewObject("XxCholaCsvDemoVO1") (OAViewObjectImpl);

If ("Go".equals (pageContext.getParameter (EVENT_PARAM)))

{

DataObject fileUploadData (DataObject) = pageContext.getNamedDataObject ("FileUploadItem");

String fileName = null;

String contentType = null;

Long fileSize = null;

Integer fileType = new Integer (6);

BlobDomain uploadedByteStream = null;

BufferedReader in = null;Try

{

fileName = (String) fileUploadData.selectValue (null, "UPLOAD_FILE_NAME");

contentType = (String) fileUploadData.selectValue (null, "UPLOAD_FILE_MIME_TYPE");

uploadedByteStream = (BlobDomain) fileUploadData.selectValue (null, fileName);

in = new BufferedReader (new InputStreamReader (uploadedByteStream.getBinaryStream ()));file = new Long size (uploadedByteStream.getLength ());

System.out.println ("FileSize" + FileSize);

}

catch (NullPointerException ex)

{

throw new OAException ("Please select a file to download", OAException.ERROR);

}try {}

Open the CSV file for reading

String lineReader ="";

t length = 0;

String linetext [];

While (((lineReader = in.readLine ())! = null)) {}

Split delimited data and

If (lineReader.trim () .length () > 0)

{

System.out.println ("lineReader" + lineReader.length ());

LineText = lineReader.split(",");t ++ ;

Print the current line current

System.out.println (t + "-" +)

LineText [0]. Trim() + "-" + linetext [1] .trim () + ' - ' +.

LineText [2]. Trim() + "-" + linetext [3] .trim () + ' - ' +.

LineText [4]. Trim() + "-" + linetext [5] .trim ());

If (! vo.isPreparedForExecution ()) {}

vo.setMaxFetchSize (0);

vo.executeQuery ();

}

Line = vo.createRow ();

row.setAttribute ("Column1", linetext [0] .trim ());

row.setAttribute ("Column2", linetext [1] .trim ());

row.setAttribute ("Column3", linetext [2] .trim ());

row.setAttribute ("Column4", linetext [3] .trim ());

VO. Last();

VO. Next();

vo.insertRow (row);}

}

}

catch (IOException e)

{

throw new OAException (e.getMessage, OAException.ERROR);

}

}

ElseIf (Upload".equals (pageContext.getParameter (EVENT_PARAM))) {" "}

am.getTransaction () .commit ();

throw new OAException ("Uploaded SuccessFully", OAException.CONFIRMATION);}

}

}

Maybe you are looking for

-

Hello! I have a problem with the #2 Microsoft tun miniport adapter. It cannot start. FileVersion pilot because it is 6.0.6001.18000 (longhorn_rtm 080118-1840). I have a problem because my internet is not working because of the adapter, so I'm not abl

-

What do you do when all printers stop working? I reinstalled the printer drivers.

-

BlackBerry Smartphones home screen photo

I changed my image of the home screen and now I don't like the look of it, how do I get the old AT & T photo back screen I checked my pictures and I do not see here. Any help? Thank you.

-

Acer computer - wx7, version premium - AMD Athlon 64 processor family. I click on my icon espeak - the program happens - but microsoft speech engine does not appear on the desktop - error message indicates that the engine is not properly installed -

-

Hi allThanks in advance.I use the following version of the database:Database Oracle 12 c Enterprise Edition Release 12.1.0.2.0 - 64 bit ProductionI want to return the values (primary key), generated by the identity column in a merge of statement. I f