Why is my binary file saved as ASCII?

Hello

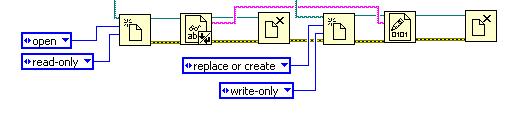

I open a text file (file.txt) and try to save it as a binary file (file.bin). It just creates another ASCII file. The two look alike in Notepad. What am I doing wrong?

Thank you!

Shok

A "text" file is a binary file. A byte is a character, a character corresponds to a byte. What shows on the screen in Notepad is determined by the ASCII table mapping.

What did you expect to look different in your "binary" file when you read the data from the text file and passed it, unchanged, to the binary write function?
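To make the point concrete, here is a minimal sketch in Python (used purely for illustration; the thread itself is about LabVIEW). The helper names are hypothetical. It shows that text characters are already bytes, so copying them to a "binary" file changes nothing, whereas storing the numeric value in a binary integer format produces different bytes.

```python
import struct


def as_text_bytes(value):
    """Bytes a text file holds for the number: one ASCII character per byte."""
    return str(value).encode("ascii")


def as_binary_bytes(value):
    """Bytes of the same number stored as a 4-byte big-endian signed integer."""
    return struct.pack(">i", value)


text_form = as_text_bytes(1234)      # the characters '1', '2', '3', '4'
binary_form = as_binary_bytes(1234)  # the numeric value 1234 in 4 bytes
```

Writing `text_form` to a file opened in binary mode would still show "1234" in Notepad; only `binary_form` would look like garbage there.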

Tags: NI Software

Similar Questions

-

I would rather not rewrite the old IDE I have used for years, but instead replace it with style sheets for XML/HTML/web. Konqueror runs the binary locally with a launcher script. Firefox, Chrome, re-Konq, WebPositive and Internet Explorer will not. If Firefox or SeaMonkey would run my binary, I could easily replace the old IDE and save myself and my customers a lot of time and money.

Firefox does not execute binary files for security reasons. In other words, it prevents novice users from installing malicious software.

-

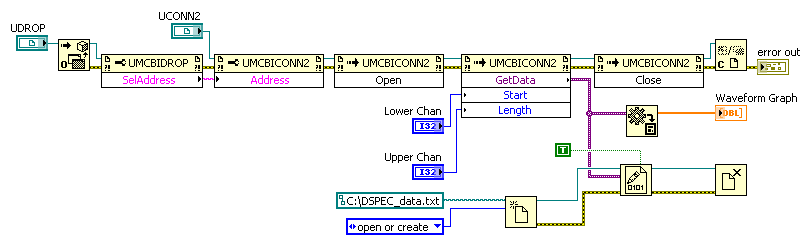

I want to save data in a binary file. This VI reads several channels and displays them in a chart. The waveform is displayed correctly, but the generated text file does not contain the data. It's only a few characters long, and I don't seem to be able to interpret it at all. I tried saving both the Variant data and the data after the conversion (before it is graphed). The files and the VI are attached below. Any advice on where I'm going wrong would be greatly appreciated.

Shultz,

Do you intend to write a binary file (more efficient, not human-readable) or a text file (less efficient, human-readable)? They are two different file types.

The code in your screenshot opens a text file but then writes the binary data of the Variant. These data are most likely not in ASCII (text) format, so when you try to read the file you see what appears to be garbage (what really happens is that your text editor interprets the 1s and 0s of the Variant's binary data as ASCII codes).

If the chart appears correctly, then I think that UMCBICONN2 GetData returns an array of numbers. In this case, you want to convert this array of numbers to strings, and then use the Write to Text File VI to save it to disk as follows:

Sorry for the screenshot - I would normally post a snippet or at least fix the VI, but I am working on a development machine and you would not be able to open any VI I saved from it.

I hope this helps. Best regards, Simon
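The conversion Simon describes (numbers to strings, then a text write) can be sketched in Python as follows. This is only an illustration of the idea, not the LabVIEW code from the missing screenshot; the function name, the tab delimiter and the number format are assumptions.

```python
def numbers_to_text(rows, fmt="%.6f", delimiter="\t"):
    """Format each row of numbers as one delimited line of human-readable text."""
    lines = [delimiter.join(fmt % value for value in row) for row in rows]
    return "\n".join(lines) + "\n"


# Hypothetical two-channel data: one row per sample, one column per channel.
text = numbers_to_text([[1.0, 2.5], [3.0, 4.0]], fmt="%.1f")
```

The resulting string can be written with an ordinary text-file write and opened in any editor or spreadsheet.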

-

Read a complex binary file written in MATLAB in LabVIEW, and vice versa

Dear all. We use the attached function "write_complex_binary.m" in MATLAB to write complex numbers to a binary file. The format used is IEEE floating point with big-endian byte order. We use the attached "read_complex_binary.m" function to read the complex numbers back from the saved binary file. However, I just don't seem to be able to read the generated binary file in LabVIEW. I tried to use the "Read from Binary File" block with big-endian ordering, without success. I'm sure the reason is my lack of knowledge of how the LabVIEW block works. I also can't seem to find useful resources on this issue. I was hoping that someone could kindly help with this or give me some ideas to work with.

Thank you in advance. Please find attached the two MATLAB functions, zipped. Kind regards.

Be a scientist - experiment.

I guess you know MATLAB and can generate a little complex data and use the MATLAB function to write it to a file. You can also look at the MATLAB function that you posted: you will see that MATLAB takes the complex array apart into a 2D array (real, imaginary), which is written as 32-bit floats, which LabVIEW calls "Sgl".

So now you know that you must read an array of Sgls and find a way to put it back together into a complex array.

When I did this experiment, I made the real part of the complex data (in MATLAB) [1, 2, 3, 4] and the imaginary part [5, 6, 7, 8]. If you're curious, you can write these out in MATLAB with your complex data write function, then read them back as a simple array of Sgl to see how they are ordered. There are two possibilities: [1, 2, 3, 4, 5, 6, 7, 8] if the file is written as "all reals, then all imaginaries", or [1, 5, 2, 6, 3, 7, 4, 8] if it is written as "(real, imaginary) pairs".

Now you know (from the MATLAB function) that the data is a set of Sgl (in LabVIEW). I assume you know how to write the routine three functions that will open the file, read the entire file into an array of Sgl, and close the file. Do this experiment and see whether the numbers look right. The possible "problem" is the byte order of the data: does MATLAB use the same byte order as LabVIEW? [Hint: if you see the numbers 1 to 8 in one of the orders above, you have the byte order correct; if not, try a different byte order for the LabVIEW binary read function.]

OK, now you have your array of 8 Sgl numbers and want to convert it to an array of 4 complex numbers [1 + 5i, 2 + 6i, 3 + 7i, 4 + 8i]. Once you understand how to do this, your problem is solved.

To help you when you come to use this code, write it as a subVI whose input is the path to the file you want to read and whose output is the array of CSG in the file. My LabVIEW routine had 8 LabVIEW functions: three for file I/O and five to convert the 1D array of Sgl to a 1D array of CSG. No loops were needed. Test it: you can test against the MATLAB data file you used for your experiment (see above), and if you get the right answer, you wrote the right code.

Bob Schor
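The experiment Bob describes can be sketched outside LabVIEW as well. The following Python function is a hypothetical illustration, not the poster's MATLAB or LabVIEW code: it decodes raw bytes as 32-bit floats and reassembles them into complex numbers. Both the byte order (big-endian by default, as stated in the question) and the layout (interleaved pairs versus all reals followed by all imaginaries) are parameters, because the thread leaves them to be found by experiment.

```python
import struct


def decode_complex_float32(raw, interleaved=True, byte_order=">"):
    """Decode raw bytes as 32-bit floats and pair them up into complex numbers.

    byte_order is a struct prefix: ">" for big-endian, "<" for little-endian.
    interleaved=True expects re, im, re, im, ...; False expects all re, then all im.
    """
    count = len(raw) // 4
    values = struct.unpack(f"{byte_order}{count}f", raw[:count * 4])
    half = count // 2
    if interleaved:
        reals, imags = values[0::2], values[1::2]
    else:
        reals, imags = values[:half], values[half:2 * half]
    return [complex(re, im) for re, im in zip(reals, imags)]


# Test data from the thread: real part [1, 2, 3, 4], imaginary part [5, 6, 7, 8].
pairs = struct.pack(">8f", 1, 5, 2, 6, 3, 7, 4, 8)
blocks = struct.pack(">8f", 1, 2, 3, 4, 5, 6, 7, 8)
```

To use it on a real file, read the bytes with `open(path, "rb").read()` and try both layouts; only one will reproduce the values that were written.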

-

Hello

Today, I need to import data from a mixed binary/text file. The structure is

Ch1-Byte1 Ch1-Byte2 Ch2-Byte1 Ch2-Byte2 CrLf

Ch1-Byte1 Ch1-Byte2 Ch2-Byte1 Ch2-Byte2 CrLf

Ch1-Byte1 Ch1-Byte2 Ch2-Byte1 Ch2-Byte2 CrLf

My first attempt was an updated DataPluginExample3.VBS:

Sub ReadStore(File)

Dim Block : Set Block = File.GetBinaryBlock()

Dim Channel1 : Set Channel1 = Block.Channels.Add("Low-Timer", eU16)

Dim Channel2 : Set Channel2 = Block.Channels.Add("High-Timer", eU16)

Dim Channel3 : Set Channel3 = Block.Channels.Add("CrLf", eU16)

Dim ChannelGroup : Set ChannelGroup = Root.ChannelGroups.Add("ESR_Timing")

ChannelGroup.Channels.AddDirectAccessChannel(Channel1)

ChannelGroup.Channels.AddDirectAccessChannel(Channel2)

ChannelGroup.Channels.AddDirectAccessChannel(Channel3)

' (Channel 3 is only $0D $0A, i.e. CrLf)

End Sub

Unfortunately, on every odd sample the data are corrupted and then become misaligned. Also, the data do not necessarily begin exactly at the start of a line.

So I would like to use the CrLf to re-sync.

How can I combine the ASCII read-line approach with binary data?

The following does not work: I try to tell the formatter to use vbNewLine (= CrLf = $0D $0A) to separate lines and to use direct access channels with data type U16. But when line feeds are used, the import filter apparently also expects delimiters instead of raw binary values.

Sub ReadStore(File)

File.Formatter.LineFeeds = vbNewLine

File.SkipLine() ' Make sure the incomplete first line is ignored

Dim Block : Set Block = File.GetStringBlock()

Dim Channel1 : Set Channel1 = Block.Channels.Add("Low-Timer", eU16)

Dim Channel2 : Set Channel2 = Block.Channels.Add("High-Timer", eU16)

' Dim Channel3 : Set Channel3 = Block.Channels.Add("CrLf", eU16)

Dim ChannelGroup : Set ChannelGroup = Root.ChannelGroups.Add("ESR_Timing")

ChannelGroup.Channels.AddDirectAccessChannel(Channel1)

ChannelGroup.Channels.AddDirectAccessChannel(Channel2)

End Sub

Thank you for your comments.

Michael

Hi Michael,

I think this does the trick. I had to skip the partial first row of values in order to keep the high and low timer values aligned row by row. Also note that by default the U16s are read with little-endian byte order; you can change that with File.Formatter.ByteOrder = eBigEndian.

Brad Turpin

DIAdem Product Support Engineer

National Instruments
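Outside DIAdem, the re-sync idea from this thread (skip the partial first row, then read fixed-size rows that each end in CrLf) could be sketched in Python as below. This is an illustration under assumptions, not the DataPlugin Brad attached: the function names are hypothetical, and little-endian U16 values are assumed to match the DataPlugin default mentioned above. Because a data byte pair can itself equal $0D $0A, the sketch does not trust the first CrLf it finds; it looks for the offset at which every row ends in CrLf.

```python
import struct

# One row: Low-Timer (U16), High-Timer (U16), then the two CrLf bytes.
RECORD = struct.Struct("<HH2s")  # little-endian is an assumption


def find_sync_offset(data):
    """Return the smallest offset at which every complete row ends in CR LF."""
    for offset in range(RECORD.size):
        ends = range(offset + RECORD.size, len(data) + 1, RECORD.size)
        if ends and all(data[end - 2:end] == b"\r\n" for end in ends):
            return offset
    raise ValueError("no consistent CrLf framing found")


def parse_records(data):
    """Skip the partial first row, then return (low, high) for each full row."""
    offset = find_sync_offset(data)
    usable = (len(data) - offset) // RECORD.size * RECORD.size
    rows = RECORD.iter_unpack(data[offset:offset + usable])
    return [(low, high) for low, high, _ in rows]
```

Checking the terminator of every row makes a false match on data bytes unlikely, at the cost of one pass over the file before parsing.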

-

I am using LV2010. I have a program that stores data in a binary file. The file is a set of strings and floating-point values. I need to write another program in VB.NET that can save/read these files, so I need information on the actual file format of the data. Is there any documentation that describes how the file is saved? Thank you.

Interesting. Usually, this question gets asked in the opposite direction, with people trying to decode a file into a cluster.

If you use the Write to Binary File function, your data are written to the binary file as native data types, in cluster order. Because you use a cluster, each string is preceded by its length, which I believe is an I32.

This is described in the help for Write to Binary File. I think the string/array length prefix trips people up a lot, however.
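A reader for such a file in another language then has to consume the length prefix before each string. The sketch below is in Python rather than VB.NET and rests on assumptions: the I32 length prefix is what the answer above believes is used, big-endian byte order is assumed (check the byte order wired to the write function), and the cluster layout of one string followed by one double is hypothetical.

```python
import struct


def read_prefixed_string(buf, pos):
    """Read an I32 length followed by that many bytes; return (text, new_pos)."""
    (length,) = struct.unpack_from(">i", buf, pos)
    start = pos + 4
    return buf[start:start + length].decode("latin-1"), start + length


def read_double(buf, pos):
    """Read one 8-byte IEEE double; return (value, new_pos)."""
    (value,) = struct.unpack_from(">d", buf, pos)
    return value, pos + 8


# Hypothetical cluster of (string, double), written back to back.
sample = struct.pack(">i", 5) + b"hello" + struct.pack(">d", 1.5)
name, pos = read_prefixed_string(sample, 0)
value, pos = read_double(sample, pos)
```

Each reader returns the new position, so the calls can simply be chained in cluster order.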

-

Binary file DataPlugin: navigate through different types of data

Hello

I am currently trying to load a binary file in DIAdem with the following structure:

block 1

1 x 8-bit ASCII

12 x double

block 2

1280 x int16

block 3

1280 x int16

block 4

12 x double

block 5

1280 x int16

block 6

1280 x int16

block 7

12 x double

block 8

1280 x int16

block 9

1280 x int16

...

I managed to read the first string value, but I did not get any further.

Could you please give me a hint on how to proceed?

/ Phex

Hello Phex,

Try this. Not sure it's exactly what you are looking for, but it is probably close.

Andreas
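Andreas's attachment is not reproduced here. As a rough, independent illustration of how the layout in the question could be walked, here is a Python sketch. It reads the listing as one ASCII byte followed by a repeating group of 12 doubles, 1280 int16 values and another 1280 int16 values; that reading, the little-endian byte order and all names are assumptions.

```python
import struct

# One repeating group: 12 doubles, then two runs of 1280 int16 values.
GROUP = struct.Struct("<12d1280h1280h")  # little-endian and unpadded (assumed)


def parse_blocks(data):
    """Return the 1-byte ASCII header and a list of decoded groups."""
    header = data[:1].decode("ascii")
    body = data[1:]
    usable = len(body) // GROUP.size * GROUP.size  # ignore a trailing partial group
    groups = []
    for fields in GROUP.iter_unpack(body[:usable]):
        groups.append({
            "doubles": fields[:12],
            "int16_a": fields[12:1292],
            "int16_b": fields[1292:],
        })
    return header, groups
```

If the decoded doubles look implausible, switching the format prefix from "<" to ">" tests the other byte order.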

-

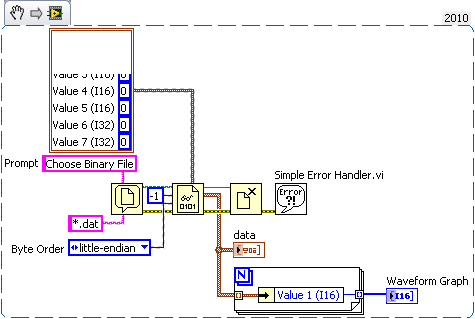

Read and parse a binary file

I can't properly parse a binary file. I use the attached LabVIEW "Read Binary File" example to open the file. I suspect that I am using incorrect settings on the Read from Binary File function.

Here is some background on my request. The binary file that I'm reading has data stored as 16-bit and 32-bit unsigned integers. The data comes in chunks of 18 bytes; each 18-byte chunk contains five 16-bit values and two 32-bit values. Ultimately, I am only concerned with pulling out one of the 16-bit values from each chunk, so it is fine if the parsing method interprets the 32-bit values as two consecutive 16-bit values.

Any suggestions on how to properly analyze the binary file? Thanks for your suggestions!

P.S. I have attached an example of the binary file I am trying to parse. It doesn't have an extension, so I changed it to .txt for upload. It has 40k+ events, and one 18-byte chunk of data is saved for each "event", so the binary file is fairly long.

You can either read the whole file as bytes and do some Unflatten gymnastics, or specify the data type for Read from Binary File. No need to wire the size; just let it read the whole file at once. Note how the element names are taken from the cluster constant.
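Since the poster only needs one 16-bit value per chunk and is happy to treat the 32-bit values as pairs of 16-bit values, each 18-byte chunk can be viewed as nine U16 values. The Python sketch below illustrates that; the function name is hypothetical, and the big-endian default is an assumption that should be checked against known values in the file.

```python
import struct


def column_of_u16(data, index, byte_order=">"):
    """Treat data as 18-byte chunks of nine U16 values; return value `index` of each."""
    record = struct.Struct(f"{byte_order}9H")
    usable = len(data) // record.size * record.size  # drop a trailing partial chunk
    return [fields[index] for fields in record.iter_unpack(data[:usable])]
```

For example, `column_of_u16(open(path, "rb").read(), 2)` would return the third 16-bit slot of every event.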

-

Hello

I wrote a simple VI to play back audio files and to record signals. It seems to do what I want (although I would appreciate general comments on good programming habits). The biggest problem I have is that the binary file that is saved is too large. A few seconds of recording gives a few megabytes of data. Although I can open the binary file in MATLAB and analyze the data just fine, the large file size is a concern.

Does anyone know why this is the case? I tried to save the data as something other than doubles, but it always gives large file sizes. I played around with the byte order too, without much success. Any help is appreciated (LV 2009 file attached).

Thank you.

FA

If your data acquisition device is 16 bits or less, just store the data as I16. You are storing the data as DBL, which is 8 bytes per number. Storing it as I16, as WAV files do, is perfectly feasible. However (in LabVIEW at least), WAV files are not compressed, so the file size will still be much larger than that of MP3 files, which use a compressed format.
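The size difference is simple arithmetic: samples per second times duration times channels times bytes per sample. A small Python sketch, with a hypothetical 44.1 kHz stereo recording as the example (the thread does not state the actual sample rate or channel count):

```python
def recording_size_bytes(sample_rate_hz, seconds, channels, bytes_per_sample):
    """Size of uncompressed sample data, ignoring any file header."""
    return int(sample_rate_hz * seconds * channels * bytes_per_sample)


# Hypothetical 5-second stereo recording at 44.1 kHz.
size_as_dbl = recording_size_bytes(44100, 5, 2, 8)  # 8 bytes per DBL sample
size_as_i16 = recording_size_bytes(44100, 5, 2, 2)  # 2 bytes per I16 sample
```

Under these assumptions the DBL file is about 3.5 MB and the I16 file about 0.9 MB, a factor of four.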

-

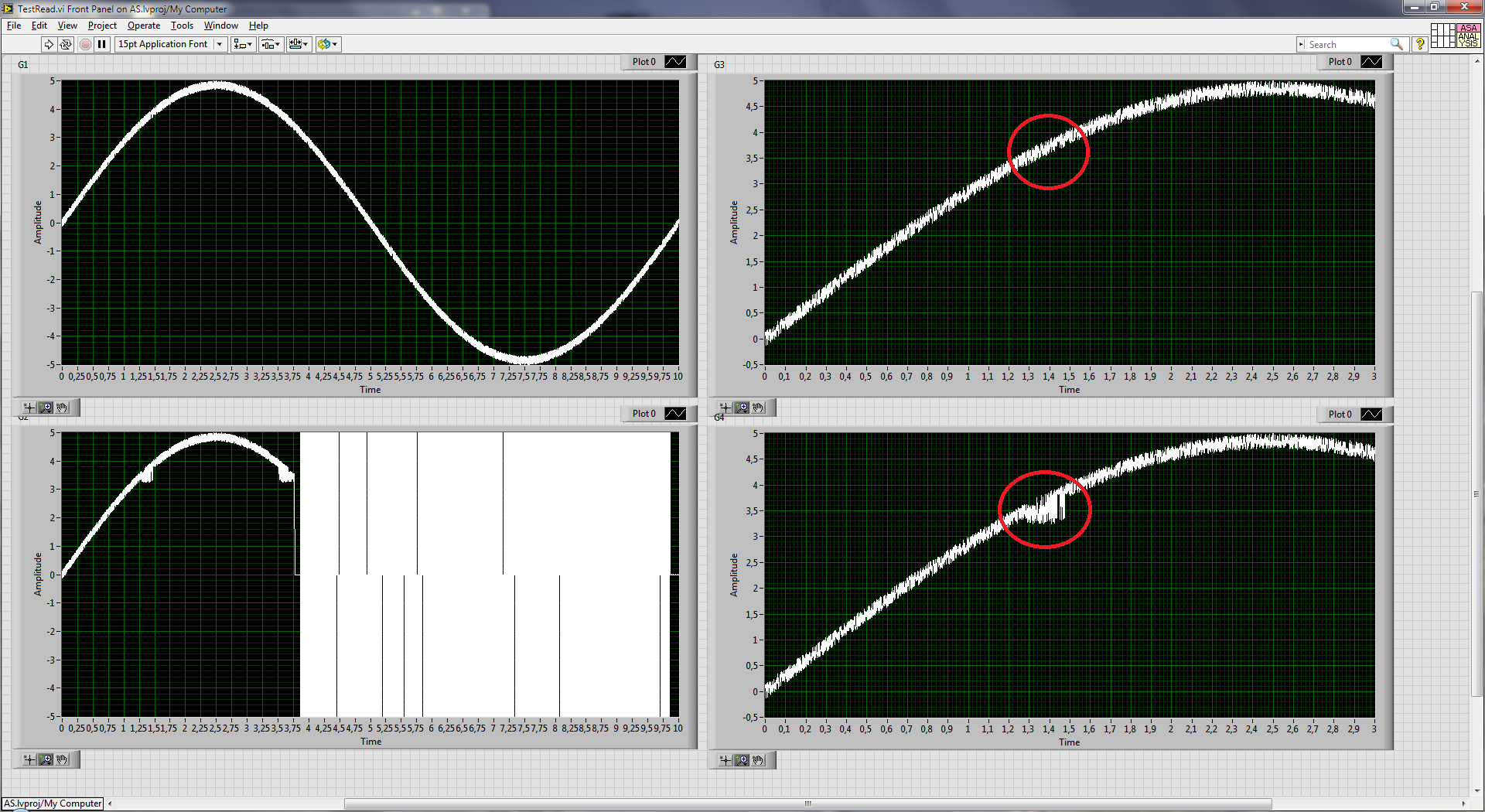

Strange behavior when reading binary file

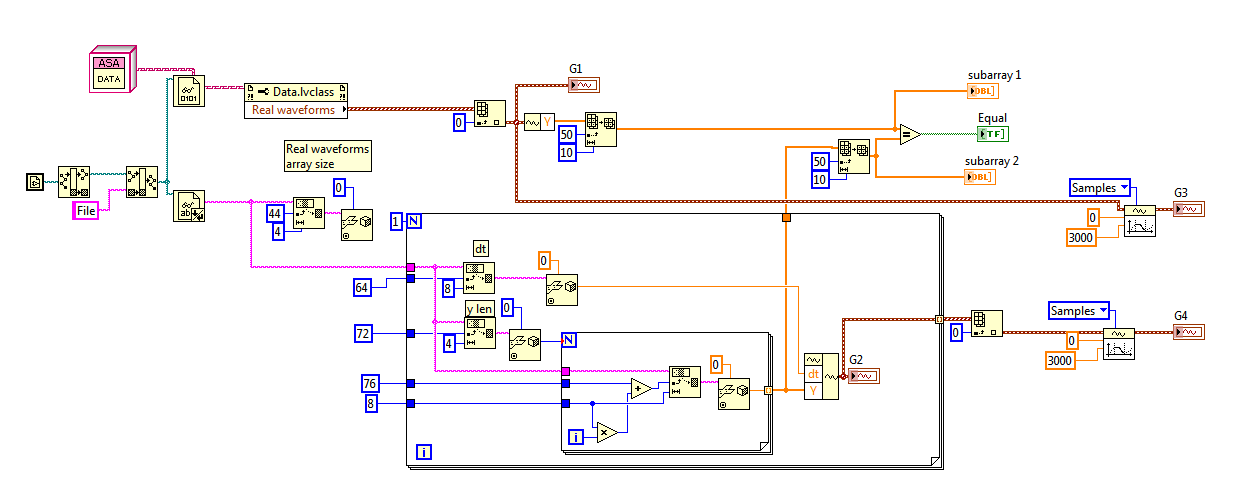

I'm reading a binary file as flattened data. The file was saved from a LabVIEW class which contained an array of waveforms and other data. When I read the file with the Read from Binary File VI with the class wired to the data type input, the data are correct. But when I read it as flattened data, something strange happens (see attached image). The top two charts show correct data and the two charts below show partially correct data. When writing the code to read the flattened data, I followed the instructions on http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/how_labview_stores_data_in_memory/ and http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/flattened_data/.

The strange thing is that there is a gap around 1.4 s (marked in red), but the samples before and after it match.

I don't know what I'm doing wrong.

I have LV2011.

The program is also attached.

Assuming that your decoding of the binary data is correct (that is, you have the data structure of the serialized class figured out), the problem is probably that you don't read the file as binary data; you read it as text using the text file read function. Change the read function to the binary one.
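Why a text read can damage binary data is easy to show by analogy in Python (this is not LabVIEW's implementation, only the same kind of effect): a text-mode read translates end-of-line sequences, so a CR LF byte pair that happens to occur inside the binary data comes back as a single LF and everything after it shifts by one byte. The helper name below is hypothetical.

```python
import os
import tempfile


def read_both_ways(raw):
    """Write raw bytes to a temp file; return them as read in binary and text mode."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(raw)
        with open(path, "rb") as f:
            as_binary = f.read()
        # Text mode with default newline handling translates "\r\n" to "\n".
        with open(path, "r", encoding="latin-1") as f:
            as_text = f.read().encode("latin-1")
    finally:
        os.remove(path)
    return as_binary, as_text


binary_result, text_result = read_both_ways(b"\x01\x0d\x0a\x02")
```

Here `binary_result` keeps all four bytes, while `text_result` has lost the 0x0D, which is the kind of partial corruption that shows up as a gap in otherwise correct samples.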

-

How to determine the size of the binary file data set

Hi all

I write specific sets of array data to a binary file, appending each time, so the file grows by one data set per write operation. I use the Set File Position function to make sure that I'm at the end of the file each time.

When I read the file, I want to read only the last 25 data sets (or some other number). To do this, I thought of using Set File Position to set the file position to 25 data sets from the end. Easy math, right? Apparently not.

As I was collecting file size data during the initial test run, I found (using the Get File Size function, which returns the number of bytes) that the size does not increase by the same amount every time. The size and format of the data being written is the same every time: an array of four double-precision numbers.

The increments I get are as follows: after the 1st write, 44 bytes; 2nd, 52 bytes; 3rd, 52 bytes; 4th, 44 bytes; 5th, 52 bytes; 6th, 52 bytes; 7th, 44 bytes; and it seems to continue this pattern.

Why would each write operation not be identical in size? This means that my basic math for determining the correct file position to read only the last 25 data sets won't be easy, and what happens if the pattern changes somewhere along the line, after I've accumulated hundreds or thousands of data sets?

Any help on why this occurs, or on a way to work around the problem, would be much appreciated.

Thank you

Doug
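For reference, the "easy math" Doug has in mind only works when every data set occupies the same number of bytes. The Python sketch below shows that arithmetic under the assumption that each set is exactly four raw 8-byte doubles (32 bytes) with no size prefix or other overhead, which the 44- and 52-byte increments reported above suggest is not how the poster's file is actually laid out. Names and the big-endian byte order are assumptions.

```python
import io
import struct

RECORD = struct.Struct(">4d")  # four doubles, 32 bytes, no header (assumed)


def read_last_records(f, count):
    """Seek to `count` records before the end of a binary file and read them."""
    f.seek(0, io.SEEK_END)
    total = f.tell() // RECORD.size
    n = min(count, total)
    f.seek((total - n) * RECORD.size)
    return [RECORD.unpack(f.read(RECORD.size)) for _ in range(n)]
```

With fixed-size records the offset is just `(total - n) * RECORD.size`; any per-write header makes that calculation invalid unless its size is also constant and accounted for.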

-

Hello

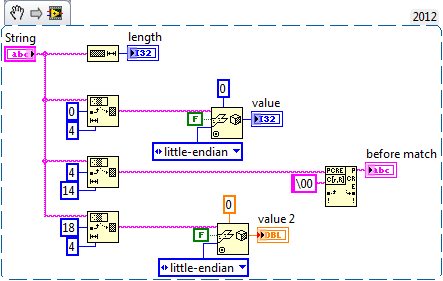

I have a scripting program which can produce the desired data in csv, ASCII and binary file formats. Sometimes not all of the significant digits are printed in the csv file, so I need to read the data from the binary file.

I have attached what I have so far, and also a small csv file and bin file containing the same data.

I can read the first value, an integer (2), but the second data type is a string, and I can't read that one. I get an end-of-file error, I guess because the type does not match, or should I read the data in a different way?

According to what I see in the csv file, the structure of the binary file should be the following:

integer, string, float, repeated for every line in the csv file...?

Thanks for the tips!

PS. : the site does not allow me to upload files, so here they are:

https://DL.dropboxusercontent.com/u/8148153/read%20Binary%20File.VI

https://DL.dropboxusercontent.com/u/8148153/init_in_water.csv

https://DL.dropboxusercontent.com/u/8148153/init_in_water.bin

Here is a basic example of what I mean. It parses the integer and the string. The floating-point number at the end does not decode properly. I tested only on the first entry in your binary file. If you have access to the code that generates this data, you can see exactly what format the data is in. Each entry in your data seems to be 22 bytes long.

-

Write a structure to a binary file and append

I have a very simple problem that I can't seem to understand, which I guess makes it not so simple, for me at least. I made a small example .vi that breaks down what I basically want to do. I have a structure, in this case 34 bytes of various data types. I would like to write this structure to a binary file iteratively, since the data in the structure will change over time in my .vi. I'm unable to get the data to append to the binary file rather than overwrite it: the file size is still 34 bytes no matter how many times I run the .vi or run the for loop.

I'm not an expert, but it seems that if I write a 34-byte structure to a file 10 times, the final product should be a binary file of 340 bytes (assuming nothing is padded or prepended with a size).

A really strange thing is that I get error 1544 when I wire the refnum to the file input of the write function, but it works fine when I wire the file path directly to the write function.

Can someone please chime in and rescue me?

Thanks for all the help. The NI forums rule!

Have you considered reading the text of the error message? Do not set the "disable buffering" input to true; just leave that input unwired. Why do you want to disable buffering?

In general, the file refnum should be stored in a shift register on the loop instead of using tunnels; that way, if you have zero iterations, the file refnum still passes through properly. In addition, there is no need to set the file position inside the loop, since the file position is always at the end of the last write unless it is explicitly moved elsewhere. You might want to set it once, outside the loop after you open the file, if you are appending to an existing file.
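The same pattern (open once, write every record through the same file handle, never reposition inside the loop) can be sketched in Python. The 34-byte record layout below is hypothetical; it merely adds up to the size mentioned in the question, and the ">" prefix guarantees no padding.

```python
import os
import struct
import tempfile

# Hypothetical 34-byte record: U32, two doubles, U16, 12-byte string.
RECORD = struct.Struct(">IddH12s")


def append_records(path, records):
    """Open the file once in append mode, write each record, return the file size."""
    with open(path, "ab") as f:  # "ab" positions every write at the end of the file
        for rec in records:
            f.write(RECORD.pack(*rec))
    return os.path.getsize(path)


demo_path = os.path.join(tempfile.mkdtemp(), "structs.bin")
demo_records = [(i, 1.0, 2.0, 3, b"abc") for i in range(10)]
demo_size = append_records(demo_path, demo_records)
```

Ten writes of a 34-byte record give 340 bytes, as the poster expected, and a later call on the same path keeps growing the file instead of overwriting it.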

-

Unable to replicate the fractional seconds when reading a timestamp from a binary file

I use LabVIEW to collect packets of data structured in the following way:

cluster containing:

dt - single-precision floating-point number

timestamp - start time

2D array of single - data

Once all the data are collected, the array of clusters (above) is flattened to a string and written to a text file.

I am trying to read the binary data in another program under development in C#. I have no problem reading everything except the timestamp. It is my understanding that LabVIEW stores timestamps as two unsigned 8-byte integers. The first integer is the whole number of seconds since January 1, 1904, 12:00 a.m., and the second represents the fractional part of the seconds elapsed. However, when I read this information in and convert the binary into decimal, the whole number of seconds is correct, but the fractional part is not the same as that displayed by LabVIEW.

Example:

Hex stored in the binary file that represents the timestamp: 00000000CC48115A23CDE80000000000

Time displayed in LabVIEW: 8:51:38.139 08/08/2012

Timestamp converted to an Extended floating-point number in LabVIEW: 3427275098.139861

Binary timestamp converted to a floating-point decimal number in C#: 3427275098.2579973248250806272

Binary timestamp converted to a DateTime in C#: 8:51:38.257 08/08/2012

Anyone know why there is a difference? What causes the difference?

http://www.NI.com/white-paper/7900/en

The least significant 64 bits should be interpreted as a 64-bit unsigned integer. It represents the number of 2^-64 seconds after the whole seconds specified in the most significant 64 bits. Each tick of this integer represents 0.05421010862427522170... attoseconds.

If you multiply the fractional part of the value (2579973248250806272) by 2^-64, I think you will have the correct timestamp in C#.
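The calculation can be checked with the hex value from the question. The sketch below is in Python rather than C#, and it assumes the 16 bytes are big-endian with the seconds stored as a signed 64-bit integer and the fraction as an unsigned 64-bit integer; the function name is hypothetical.

```python
import struct
from datetime import datetime, timedelta, timezone

LV_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)  # LabVIEW time zero, UTC


def decode_lv_timestamp(raw):
    """Split a 16-byte timestamp into whole seconds, fractional seconds and a UTC datetime."""
    seconds, fraction = struct.unpack(">qQ", raw)
    frac_seconds = fraction / 2**64  # each tick is 2^-64 s
    moment = LV_EPOCH + timedelta(seconds=seconds,
                                  microseconds=round(frac_seconds * 1e6))
    return seconds, frac_seconds, moment


raw = bytes.fromhex("00000000CC48115A23CDE80000000000")
seconds, frac_seconds, moment = decode_lv_timestamp(raw)
```

The whole seconds come out as 3427275098 and the fraction as roughly 0.139861, matching the LabVIEW display rather than the .257 obtained by appending the raw integer's digits. The datetime is in UTC, so it differs from the displayed local time by the time zone offset.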

-

Hello

Just hoping someone might be able to help me with the following. I'm new to LabVIEW and don't have an IT background, so understanding binary files and how to read them is beyond my capabilities.

Quite simply, I am trying to open a series of binary files written in the following format (see attached data.txt) and then process the data in LabVIEW. I tried the binary file reading examples but seem to be going round in circles. I also attach an example file in this format, 8sept4.3ld, and the same file in ASCII format, which is what I'd like the output in LabVIEW to look like.

I'd appreciate any help with this, especially if it's an idiot's guide!

Cheers,

Kath
