Write a structure to a binary file and append

I have a very simple problem that I can't seem to understand, which I guess makes it not so simple, for me at least. I made a small .vi example that shows basically what I want to do. I have a structure, in this case 34 bytes of various data types. I want to write this structure to a binary file iteratively, because the data in the structure will change over time in my .vi. I can't get the data to append to the binary file instead of overwriting it: the file size stays at 34 bytes no matter how many times I run the .vi or the for loop.

I'm not an expert, but it seems to me that if I write a 34-byte structure to a file 10 times, the result should be a 340-byte binary file (assuming there is no padding and no size prefix).
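The arithmetic holds as long as every write lands at the end of the file. Here is a minimal sketch in Python (used only for illustration, since LabVIEW code is graphical). It assumes a hypothetical 34-byte layout (three float64, a uint32, a uint16 and a uint32, big-endian, no padding) and opens the file in append mode, so ten writes produce 340 bytes:

```python
import os
import struct
import tempfile

# Hypothetical 34-byte record: three float64, a uint32, a uint16, a uint32.
# ">" selects big-endian byte order with no alignment padding.
RECORD = struct.Struct(">dddIHI")
assert RECORD.size == 34

def append_record(path, values):
    # "ab" opens for appending: every write goes to the current end of file,
    # so earlier records are never overwritten.
    with open(path, "ab") as f:
        f.write(RECORD.pack(*values))

path = os.path.join(tempfile.mkdtemp(), "records.bin")
for i in range(10):
    append_record(path, (i * 1.0, i * 2.0, i * 3.0, i, i, 2 * i))

print(os.path.getsize(path))  # 340
```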

A really strange thing is that I get error 1544 when I wire the refnum from the file dialog into the write function, but it works fine when I wire the file path directly to the write function.

Can someone please chime in and rescue me from this?

Thanks for all the help. NI forums rule!

Have you considered reading the text of the error message? Don't set the "disable buffering" input to true; just leave that input unwired. Why do you want to disable buffering?

In general, the file refnum should be carried through the loop in a shift register instead of through plain tunnels. That way, even if the loop runs zero iterations, the file refnum still passes through correctly. Also, there is no need to set the file position inside the loop: the file position is always at the end of the last write unless you explicitly move it somewhere else. If you are appending to an existing file, you may want to set it once, outside the loop, right after you open the file.
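The "open once, position once, then write in the loop" pattern can be sketched in Python as follows. It reuses the same hypothetical 34-byte layout. The file handle plays the role of the refnum kept in a shift register. The seek to the end happens once before the loop, and each write advances the position on its own:

```python
import os
import struct
import tempfile

RECORD = struct.Struct(">dddIHI")  # same hypothetical 34-byte layout

def write_records(path, rows):
    # Create the file if needed, then open it once for read/write.
    if not os.path.exists(path):
        open(path, "wb").close()
    with open(path, "r+b") as f:
        f.seek(0, os.SEEK_END)          # set the position once, before the loop
        for row in rows:
            f.write(RECORD.pack(*row))  # position advances 34 bytes per write
        return f.tell()                 # final position = new file size

path = os.path.join(tempfile.mkdtemp(), "records.bin")
rows = [(1.0, 2.0, 3.0, 4, 5, 6)] * 10
print(write_records(path, rows))  # 340
print(write_records(path, rows))  # 680 (appended, not overwritten)
```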

Similar Questions

-

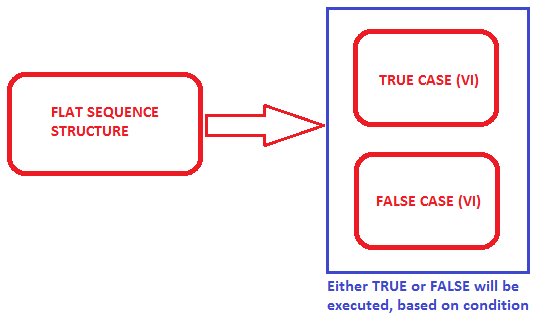

How do I create a flat sequence structure and add a subVI with a True or False case in another frame?

How can I create the next flat sequence frame and add a column with a VI, a True subVI and a False subVI?

What should the flowchart look like:

-

Read/write a binary file of structs

Hi all,

I created a struct named profile, defined as follows:

```c
typedef struct profile {
    char name[30];
    float electric;
    double frequency;
    struct profile *next;
} profile;
```

I can write structs to a file, but when inserting a struct I need to check the name field, and insert the struct into the file only if no other struct with that name already exists.

Please help me! (Update: I solved it!)
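One way to do the "insert only if the name is new" check is a sequential scan of the fixed-size records before appending. The sketch below uses Python. Its on-disk layout (30-byte name, float32, float64, little-endian, no padding) is an assumption. It is not necessarily what a C compiler would produce for the struct above, which may add padding. The `next` pointer is deliberately not stored, because a pointer value means nothing once written to disk:

```python
import os
import struct
import tempfile

# Hypothetical on-disk layout: 30-byte name, float32, float64, no padding.
PROFILE = struct.Struct("<30sfd")

def name_exists(path, name):
    """Scan every stored record and compare its name field."""
    if not os.path.exists(path):
        return False
    key = name.encode("ascii")
    with open(path, "rb") as f:
        while (chunk := f.read(PROFILE.size)):
            if len(chunk) < PROFILE.size:
                break  # ignore a truncated trailing record
            stored, _, _ = PROFILE.unpack(chunk)
            if stored.rstrip(b"\0") == key:
                return True
    return False

def insert_profile(path, name, electric, frequency):
    """Append the profile only if no record with the same name exists."""
    if name_exists(path, name):
        return False
    with open(path, "ab") as f:
        # "30s" pads short names with NUL bytes and truncates longer ones.
        f.write(PROFILE.pack(name.encode("ascii"), electric, frequency))
    return True

path = os.path.join(tempfile.mkdtemp(), "profiles.bin")
print(insert_profile(path, "alpha", 1.5, 50.0))  # True
print(insert_profile(path, "beta", 2.5, 60.0))   # True
print(insert_profile(path, "alpha", 9.9, 99.0))  # False (duplicate name)
```

A linear scan is fine for small files. For large ones, an in-memory set of names loaded once would avoid rereading the file on every insert.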

-

CEP - read a binary file (PNG) and upload it with $.ajax

Hi all

I'm trying to create a POST request from a CEP extension with a binary PNG image.

I read the PNG file as follows:

```javascript
var fileContents = window.cep.fs.readFile(fileData.files[f], cep.encoding.Base64);
```

Then I need to create an object with that content converted to binary data (a Blob?), but I always

Please advise.

Found the solution.

Use the base64DecToArr function from the Mozilla Foundation.
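`base64DecToArr` performs ordinary Base64 decoding from text to raw bytes. As a language-neutral illustration (Python's standard `base64` module, not the CEP or jQuery APIs), decoding the Base64 form of the 8-byte PNG signature gives the original bytes back:

```python
import base64

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def base64_to_bytes(text):
    """Decode Base64 text back into the raw bytes it encodes."""
    return base64.b64decode(text, validate=True)

encoded = base64.b64encode(PNG_SIGNATURE).decode("ascii")
raw = base64_to_bytes(encoded)
print(encoded)               # iVBORw0KGgo=
print(raw == PNG_SIGNATURE)  # True
```

Those decoded bytes, not the Base64 string, are what belong in a binary upload body.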

-

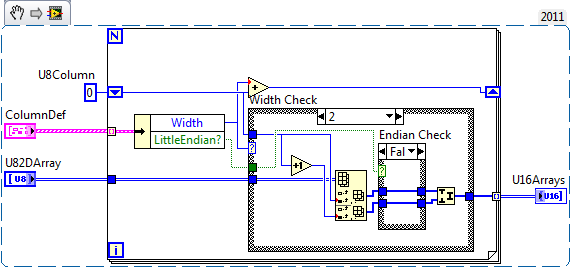

Fast processing of binary files with mixed data representations

Hello world

I see several ways to tackle this, but I'm looking for the fastest approach, since my data set is very large...

The question:

I have a binary data file with 2D data.

It encodes 200+ different "columns" that repeat over time (sampled).

The data contains mixed representations: a mixture of I8, U8, I16, U16, etc.

They all repeat regularly in a known file structure (660 bytes per 'line').

I want to generate 200+ different 1D arrays from the file, each with the correct data representation (or just a subset of the columns).

I can load the file with Read from Binary File, specifying U8 as the data type. Then I can reshape it into the correct 2D array.

Now I'm stuck on the fastest way to convert columns of bytes (e.g. bytes 1-2) into 1D arrays with the correct numeric type (2 x U8 to I16, etc.).

Scanning byte by byte would be very slow.

Any suggestions?

Looks like you have a pretty good handle on that.

Here is how I would do it, assuming the data has already been read in as U8.
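The general idea is to avoid byte-by-byte scanning: describe one whole row as a record type, decode every row in one bulk pass, then transpose rows into columns. A small Python sketch of that idea follows. The 6-byte row layout (I8, U8, I16, U16, big-endian) is a hypothetical stand-in for the real 660-byte record:

```python
import struct

# Hypothetical row layout standing in for the real 660-byte record:
# I8, U8, I16, U16, big-endian, no padding (6 bytes per row).
ROW = struct.Struct(">bBhH")

def split_columns(raw):
    """Decode every row in one pass, then transpose rows into columns."""
    if len(raw) % ROW.size:
        raise ValueError("buffer is not a whole number of rows")
    rows = ROW.iter_unpack(raw)               # one tuple per row
    return [list(col) for col in zip(*rows)]  # one list per column

data = ROW.pack(-1, 200, -300, 60000) + ROW.pack(5, 7, 1234, 42)
i8, u8, i16, u16 = split_columns(data)
print(i8, u8, i16, u16)  # [-1, 5] [200, 7] [-300, 1234] [60000, 42]
```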

-

RT file I/O and network I/O priority

I have a subVI that receives UDP packets and applies a timestamp to each one. The subVI is assigned to the data acquisition execution system (LabVIEW RT) at high priority. This UDP receiver puts the data into an RT FIFO.

I have another subVI that sets up a TDMS file (creates it, adds channel properties, etc.), which is called at the start of acquisition. This subVI runs at normal priority in the standard execution system. A third subVI streams the RT FIFO into the TDMS file, in the same normal-priority execution system.

When the TDMS setup VI runs for the first time, the UDP VI is significantly delayed, so a number of packets back up in the UDP receive buffer (which produces a burst of incorrect timestamps).

What can I do so that the UDP receive subVI doesn't get interrupted? It seems that the UDP Read function itself may not run at the priority of the subVI that calls it, but I don't know why.

The other oddity is that after its first execution, the TDMS setup subVI can run again with no apparent effect on the UDP receiver subVI (even though it does the same thing).

Thank you

XL600

Answered my own question. The Real-Time Trace Viewer is your friend! I had an FGV set to subroutine priority that was blocking the high-priority UDP receive subVI, which happened to run in the same thread, but only on the first pass through the VI. Go figure...

Note for later... Check those priority settings!

-

Binary file read returns 0x20 (space) instead of 0x00

I'm trying to read a binary file containing single-precision floating-point values (hexadecimal) using Read from Binary File, and store the values in an array. The problem is that LabVIEW reads the null character (0x00) as a space (0x20). For example, I read 3F800000, which is 1.0 in floating point. The output in LabVIEW reads 1.00098 (rounded by LabVIEW), or a hexadecimal value of 3F80201C. The unrounded hexadecimal value for this number should be 3F802020. Is this a known problem, and are there solutions? I am attaching a JPEG of my diagram as well as the binary data. I could not upload a .bin file, so I saved it as .txt. Thanks in advance.

-

Hello:

I'm struggling with writing a 1D numeric array to a binary file and starting a new line, or inserting a separator, on each loop iteration. On each iteration, my LabVIEW code collects a 1D array of 253 points from a spectrometer. When I write these arrays to the binary file, each one is stacked right after the previous one (I read the binary file in MATLAB). However, whenever a data point is missing, the entire array is shifted. So I would like to save the 1D arrays as an N-D array, where N is the number of times the loop executes.

I'm not very familiar with how binary file I/O works. Can anyone help me figure this out? I tried using the file position, but that feature seems to be only for writing strings. I really want to write the 1D numeric arrays as an N-D array. How can I do that?

Thanks in advance

lawsberry_pi wrote:

So how can I avoid having a length prepended to the beginning of each write? Is that possible?

At the top of Write to Binary File is a Boolean input called "prepend array or string size? (T)". It defaults to TRUE. Set it to FALSE.

Also, be aware that MATLAB on a PC normally reads little-endian data, while LabVIEW writes big-endian by default. You should probably set the byte order (endianness) input of Write to Binary File as well.
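Put together, the advice amounts to fixed-length rows with no size header and an agreed byte order. The file then is just N × 253 doubles and can be reshaped by the reader. Here is a Python sketch under those assumptions (little-endian float64, rows of exactly 253 points). On the MATLAB side, something like `fread(fid, [253, Inf], 'double', 0, 'ieee-le')` should read it back with one scan per column, but that call is an assumption that has not been checked against this data:

```python
import os
import struct
import tempfile

POINTS = 253                          # points per spectrometer scan (from the post)
ROW = struct.Struct("<%dd" % POINTS)  # little-endian float64, no size header

def append_scan(f, scan):
    # Reject short or long scans: a fixed row length is what keeps every
    # later scan aligned when the file is reshaped into N x 253.
    if len(scan) != POINTS:
        raise ValueError("expected %d points, got %d" % (POINTS, len(scan)))
    f.write(ROW.pack(*scan))

def read_scans(path):
    with open(path, "rb") as f:
        raw = f.read()
    # N = file size / row size; each unpacked tuple is one scan.
    return [list(row) for row in ROW.iter_unpack(raw)]

path = os.path.join(tempfile.mkdtemp(), "spectra.bin")
with open(path, "wb") as f:
    for n in range(5):
        append_scan(f, [n + i / 1000 for i in range(POINTS)])

scans = read_scans(path)
print(os.path.getsize(path), len(scans), len(scans[0]))  # 10120 5 253
```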

-

Structuring data read from a binary file

Hi people,

I am very new to MATLAB and am trying to build a comprehensive program that will read my library of electric fish recordings. It is a simple binary format that contains all the information needed for playback. After a few days with the software, I was able to write a single pass that dissects the first record of my file. However, I want to structure the data so that I can easily navigate through each record appended to a particular file. I have attached the .vi I have come up with so far... I don't know whether I should write a loop and add the data to an array or a cluster, and how to do that escapes me. Is there a more efficient way to code this? Thank you very much for any help you can provide!

Best,

JG

Well, I made the cardinal forum mistake of calling LabVIEW MATLAB in my previous post. Apologies. I've made some progress on this, with a loop that adds data to a structure...

Now I would like to find a way to do it more efficiently... Any ideas? Thanks in advance!
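The post does not describe the recording format, so the following Python sketch uses an invented one: each record is a uint32 sample count and a float64 sample rate, followed by that many int16 samples. It shows the general shape of "walk the file record by record and collect an array of structures". The loop stops cleanly at end of file:

```python
import io
import struct

# Hypothetical record header: uint32 sample count, float64 sample rate,
# followed by `count` int16 samples. Big-endian, no padding.
HEADER = struct.Struct(">Id")

def read_records(stream):
    """Walk the file record by record and return a list of dicts."""
    records = []
    while True:
        head = stream.read(HEADER.size)
        if len(head) < HEADER.size:
            break                      # end of file (or truncated tail)
        count, rate = HEADER.unpack(head)
        body = stream.read(2 * count)
        if len(body) < 2 * count:
            raise ValueError("truncated record")
        samples = struct.unpack(">%dh" % count, body)
        records.append({"rate": rate, "samples": list(samples)})
    return records

blob = (HEADER.pack(3, 44100.0) + struct.pack(">3h", 1, -2, 3)
        + HEADER.pack(2, 22050.0) + struct.pack(">2h", 7, 8))
recs = read_records(io.BytesIO(blob))
print(len(recs), recs[1]["samples"])  # 2 [7, 8]
```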

-

"Read from Binary File" and efficiency

I recently tried binary files for my data files for the first time, and I'm seeing real performance problems when reading them. To avoid any data loss, I write the data as I receive it from acquisition, 10 times per second. What I write is a 2D array of doubles: 1000 points x 2-4 channels. When reading the file, I would like to read it as a 3D array, all at once. That doesn't seem to be supported, so I do many reads of 2D arrays and use Build Array with a shift register to assemble the 3D array I actually need. But it is incredibly slow! It seems I can read only a few hundred of these 2D arrays per second.

It also occurred to me that using Build Array in a shift register to keep appending to the array could be a quadratic-time operation, depending on how it is implemented: repeatedly allocating larger and larger blocks of memory and copying the growing array at every step.

I'm looking for suggestions on how to store my data efficiently and read it back efficiently into the 3D array I need. Maybe it would make my life easier if I had "write raw data" and "read raw data" operations, just the numbers and no metadata. Then I could write the file and read it back in whatever chunk sizes I like, and read it with other programs too. But I don't see them in the menus.

Suggestions?

Ken
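The "raw write, bulk read" idea can be sketched in Python with the standard `array` module. The assumptions are native byte order, no headers, and a fixed block size of 1000 × 4 doubles. Every block is appended as bare numbers, and the whole file comes back in one read, so nothing is re-copied as the result grows. The block count is simply the file size divided by the block size:

```python
import array
import os
import tempfile

SAMPLES, CHANNELS = 1000, 4      # one 2D acquisition block (from the post)
BLOCK = SAMPLES * CHANNELS       # doubles per block

def append_block(f, block):
    # Raw write: only the numbers, native byte order, no size header.
    array.array("d", block).tofile(f)

def read_all(path):
    # One bulk read of the whole file instead of one read per 2D block.
    data = array.array("d")
    with open(path, "rb") as f:
        data.frombytes(f.read())
    if len(data) % BLOCK:
        raise ValueError("file does not hold a whole number of blocks")
    # Element (block k, sample s, channel c) is data[k*BLOCK + s*CHANNELS + c].
    return len(data) // BLOCK, data

path = os.path.join(tempfile.mkdtemp(), "acq.bin")
with open(path, "wb") as f:
    for k in range(10):
        append_block(f, [float(k)] * BLOCK)

nblocks, data = read_all(path)
print(nblocks, data[5 * BLOCK])  # 10 5.0
```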

-

Binary file read/write error 116

Hi all

I am writing a double-precision numeric array to a binary file and then trying to read it back, but I keep getting error 116 (cannot read binary file).

I've attached screenshots of how I write my data to the binary file and how I read it. Basically, my data are chunks of 2D double arrays that arrive at 1 Hz, which is why I use Get/Set File Size before saving (i.e. so that each time I append the new data to the end of my file).

I tried all combinations on the Write to Binary File and Read from Binary File functions, i.e. I tried the big-endian and native byte-order options, but I keep getting the same error. I also varied how I append my data, i.e. I used the "end of file" and "offset in bytes" options in case it made a difference, but again no luck.

Any help would be much appreciated.

Kind regards

Harry

Try setting "prepend array or string size?" to true.

That seems to work here...
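A likely reason the size prefix helps is that a reader expecting 2D arrays needs each chunk's dimensions before it can know how many bytes to consume. Below is a Python sketch of a matching writer and reader. The header layout (two big-endian int32 dimension sizes, then the doubles row by row) is an assumption that mirrors the idea of a size header. It is not a verified byte-for-byte copy of LabVIEW's format:

```python
import io
import struct

def write_chunk(f, rows):
    # Assumed layout: int32 row count, int32 column count, then the doubles
    # row by row, all big-endian.
    nrows, ncols = len(rows), len(rows[0])
    f.write(struct.pack(">ii", nrows, ncols))
    for row in rows:
        f.write(struct.pack(">%dd" % ncols, *row))

def read_chunks(f):
    """Read size-prefixed 2D chunks until end of file."""
    chunks = []
    while (head := f.read(8)):
        if len(head) < 8:
            raise ValueError("truncated header")
        nrows, ncols = struct.unpack(">ii", head)
        body = f.read(8 * nrows * ncols)
        if len(body) < 8 * nrows * ncols:
            raise ValueError("truncated data")
        flat = struct.unpack(">%dd" % (nrows * ncols), body)
        chunks.append([list(flat[r * ncols:(r + 1) * ncols])
                       for r in range(nrows)])
    return chunks

buf = io.BytesIO()
write_chunk(buf, [[1.0, 2.0], [3.0, 4.0]])
write_chunk(buf, [[5.0, 6.0, 7.0]])
buf.seek(0)
print(read_chunks(buf))  # [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0, 7.0]]]
```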

-

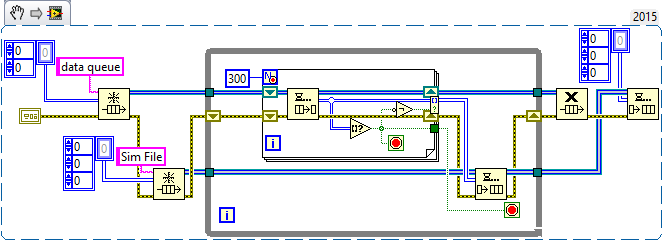

Append data to a binary file as a concatenated array

Hello

I have a problem that has probably been discussed several times, but I haven't found a clear answer.

I have devices that produce 2D image arrays. I send them to a collection loop through a queue, index them into a 3D array, and at the end save it to a binary file.

This works very well. But I'm starting to run into memory problems when the number of images exceeds about 2000.

So I'm trying to take advantage of a fast SSD and save the images in batches (e.g. 300) to a binary file.

In the attached diagram, I simulate the camera by reading some files beforehand. The program works well, but when I open the new file in the second diagram, I see only the first 300 images (in this case).

I read on the forum that I have to set the count in Read from Binary File to -1, and then I can read the data as an array of clusters of arrays. That doesn't suit me well, because I need to work with the data in MATLAB and would like the same format as before (for example a 320 x 240 x 4000 3D array). Is it possible to append a 3D array to an existing file so that the result is one concatenated array?

I hope it makes sense :-)

Thank you

Honza

- Good idea to simulate image creation with a 2D array of random numbers! It's always good to model the real problem (file I/O) without "complicating details" (camera handling).

- Good use of producer/consumer in LT_Save. Do you know about sentinels? You need only a single queue, the data queue, which sends arrays of data to the consumer. When the producer quits (because the stop button was pushed), it enqueues an empty array (you can just right-click the element input and choose "Create Constant"). In the consumer, when you dequeue, test whether you got an empty array. If you did, stop the consumer loop and release the queue (you know the producer has already stopped, and now you have stopped too).

- I'm not sure what you're trying to do in the File_Read_3D routine, but I'll tell you it's wrong. So let's analyze the situation. In a sense, your two routines form a producer/consumer "pair": LT_Save "produces" a file of 3D arrays (of at most 300 pages each, unless it's the final batch of data), and File_Read_3D "consumes" them and "does something" still somewhat ill-defined. You could (and perhaps should) merge these two routines into a single "Simulator". Here's what I mean:

This is taken directly from your code. I replaced the stop queue with sentinel code (which I won't describe again), and added a queue, Sim File, to simulate writing this data to a file (it also uses a sentinel).

Your existing producer code puts single 2D arrays on the data queue. This routine dequeues them and "builds" up to 300 of them at a time before "doing something with them": in your code, writing to a file; here, simulating that by enqueuing the 3D array on the Sim File queue. Let's look first at the "easy" case, where we get all 300 items. The For loop ends and produces a 3D array of 300 2D arrays, which we simply enqueue on Sim File, our simulated file. You may notice an Empty Array? function (which in this case is never True, always False) whose output is negated (so it is always True) and wired to the conditional terminal of an indexing tunnel. The reason for this strange logic will become clear in the next paragraph.

Now consider what happens when you press the stop button on your producer (not shown). Since we use sentinels, it enqueues an empty 2D array. We dequeue it and detect it with the Empty Array? function, which lets us do three things: stop the For loop early; keep the empty array out of the output indexing tunnel via the conditional terminal (empty array = True, Not changes it to False, so the empty array is not added to the 3D array); and make the whole loop exit. What happens when we exit the whole loop? Well, we're done with the data queue, so we release it. We also know that we have enqueued the last "good" data on the Sim File queue, so we create a sentinel (an empty 3D array) and enqueue it for the yet-to-be-written Sim File consumer loop.
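The batching-with-sentinels logic described above can be sketched with Python's `queue` module, where an empty list stands in for the empty 2D/3D array. One deliberate difference from the LabVIEW description: this sketch sends a final partial batch only if it is non-empty. That way a stop that arrives exactly after a full batch does not push an early empty block downstream:

```python
import queue

BATCH = 300  # images per 3D block, as in the thread

def batch_frames(data_q, sim_file_q, batch=BATCH):
    """Group single 2D frames into 3D blocks of up to `batch` frames."""
    done = False
    while not done:
        block = []
        for _ in range(batch):
            frame = data_q.get()
            if not frame:          # empty 2D array = producer's sentinel
                done = True
                break              # stop early; the sentinel is not kept
            block.append(frame)
        if block:                  # a final partial block is still sent
            sim_file_q.put(block)
    sim_file_q.put([])             # forward a sentinel (empty 3D array)

data_q, sim_q = queue.Queue(), queue.Queue()
for i in range(650):
    data_q.put([[i]])              # 650 tiny 1x1 "images"
data_q.put([])                     # the producer's sentinel

batch_frames(data_q, sim_q)
sizes = []
while (block := sim_q.get()):      # Sim File consumer: stop at the sentinel
    sizes.append(len(block))
print(sizes)  # [300, 300, 50]
```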

Now here is where you come in. Write this final consumer loop. It should be pretty simple: you dequeue, and if you don't have an empty 3D array, you do the following:

- Your array consists of N 2D images (up to 300). In a For loop, extract each image and do whatever you want with it (display it, save it to file, etc.). Note that if you write a subVI called "Process an Image" that takes a 2D array and does something with it, you will "declutter" your code by hiding the details.

- If you did get an empty array, simply exit the While loop and release the Sim File queue.

OK, now translate this to a file. You're off to a good start by writing your file with array-size headers. That means if you read the file as a 3D array, you will get a 3D array (as you did in the Sim File consumer) and can do the same processing as above. All you have to worry about is the sentinel: how do you know when you have reached the end of the file? I'm sure you can figure this out, if you don't already know...
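For the file version, end of file can serve as the sentinel. Here is a Python sketch that assumes each block is written with a header of three big-endian int32 sizes (pages, rows, columns) followed by the values as float64. It reads blocks until the next read returns nothing and concatenates all pages into one list. That list is the single "concatenated" 3D array the original question asks for:

```python
import io
import struct

def write_block(f, block):
    # Assumed header: three big-endian int32 sizes (pages, rows, cols).
    pages, rows, cols = len(block), len(block[0]), len(block[0][0])
    f.write(struct.pack(">3i", pages, rows, cols))
    flat = [v for page in block for row in page for v in row]
    f.write(struct.pack(">%dd" % len(flat), *flat))

def read_all_pages(f):
    """Read headered blocks until end of file; EOF acts as the sentinel."""
    pages_out = []
    while True:
        head = f.read(12)
        if not head:               # clean end of file: no more blocks
            return pages_out
        if len(head) < 12:
            raise ValueError("truncated header")
        pages, rows, cols = struct.unpack(">3i", head)
        n = pages * rows * cols
        body = f.read(8 * n)
        if len(body) < 8 * n:
            raise ValueError("truncated block")
        flat = struct.unpack(">%dd" % n, body)
        for p in range(pages):
            base = p * rows * cols
            pages_out.append([list(flat[base + r * cols:base + (r + 1) * cols])
                              for r in range(rows)])

buf = io.BytesIO()
write_block(buf, [[[1.0, 2.0]], [[3.0, 4.0]]])  # 2 pages of 1x2
write_block(buf, [[[5.0, 6.0]]])                # 1 page of 1x2
buf.seek(0)
print(len(read_all_pages(buf)))  # 3
```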

Bob Schor

PS - You should know that the code snippet I posted did not come out "properly" all at once. I actually pasted in about 6 versions here, because I kept finding errors while writing the description for you (like forgetting the Not function on the conditional terminal). This illustrates the virtue of writing documentation: it "slows you down", makes you examine your code, and makes you say "Oops, I forgot to..."

-

Hi all

I want to save my data in both CSV and binary (.dat) formats. The VI appends 100 new double values to these files every 100 ms.

The VI works fine, but I noticed that the binary file is bigger than the CSV file.

For example, after a few minutes, the CSV file is 3.3 KB while the binary file is 4 KB.

But shouldn't binary files be smaller than text files?

Thank you all

You have several options.

The first (and easiest) is not to worry about it. If you use DBL and decide next month that you want six-digit resolution, it's there. If you use strings and decide that next month, well, you're out of luck. Storage is cheap; maybe that works for you, maybe it doesn't.

The comment about the prepended size header is correct, but it's a small overhead: 4 bytes added to each 100 * 8 = 800-byte segment, or 0.5 percent.

If you don't like that, then don't prepend it. Simply treat the file as a file of DBLs, with no header, and go.

You are storing nothing but DBLs in it.

This means YOU need to know how many values are in the file (SizeOf(file) / SizeOf(DBL)), but that's doable.

You must either keep the file open while writing, or do open + seek to end + write + close for each chunk.
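The overhead arithmetic and the "count = file size / 8" rule can be checked with a short Python sketch. It writes ten chunks of 100 doubles, once with no header and once with a hypothetical 4-byte int32 size prefix per chunk. Here "ab" mode performs the open + seek-to-end + write + close sequence for each chunk:

```python
import os
import struct
import tempfile

CHUNK = 100  # values appended per write, from the post

def append_chunk(path, values, prepend_size=False):
    # Open + seek to end + write + close for each chunk ("ab" does the seek).
    with open(path, "ab") as f:
        if prepend_size:
            f.write(struct.pack(">i", len(values)))  # 4-byte size header
        f.write(struct.pack(">%dd" % len(values), *values))

def count_doubles(path):
    # Headerless file: element count is simply file size / sizeof(DBL).
    return os.path.getsize(path) // 8

d = tempfile.mkdtemp()
plain = os.path.join(d, "plain.dat")
prefixed = os.path.join(d, "prefixed.dat")
for k in range(10):
    chunk = [float(k)] * CHUNK
    append_chunk(plain, chunk)
    append_chunk(prefixed, chunk, prepend_size=True)

print(count_doubles(plain))                                # 1000
print(os.path.getsize(prefixed) - os.path.getsize(plain))  # 40
print(100 * 4 / (CHUNK * 8))                               # 0.5 (percent)
```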

If you want to save space, consider using SGL instead of DBL. If this is measured data, it isn't accurate beyond 6 decimal digits anyway.

Or consider saving it as I16s, to which you apply a scale factor during playback.

Those options are only worth it if you seriously need to save space.
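The storage cost of the three options can be compared directly. In this Python sketch, SCALE is a hypothetical choice (0.001 per count). It sets both the resolution and the usable range of the I16 encoding, about ±32.767 here, and the restored values are within half a count of the originals:

```python
import struct

values = [0.123456789, -3.5, 9.87654]

dbl = struct.pack("<%dd" % len(values), *values)  # 8 bytes per value
sgl = struct.pack("<%df" % len(values), *values)  # 4 bytes per value

# I16 with a scale factor: store round(v / SCALE), multiply back on playback.
# SCALE is a hypothetical choice: 0.001 per count, range about +/-32.767.
SCALE = 0.001
i16 = struct.pack("<%dh" % len(values), *(round(v / SCALE) for v in values))
restored = [n * SCALE for n in struct.unpack("<%dh" % len(values), i16)]

print(len(dbl), len(sgl), len(i16))  # 24 12 6
```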

-

How can I recover a lost bookmarks folder and its contents and add it to my current bookmarks?

I'm trying to get back a folder, with several subfolders, of important favorite websites. It's not in my bookmarks, and I searched the entire Library. I need to recover this folder and add it to my existing bookmark folders without changing or disrupting the rest of my bookmark structure. I guess I could restore all my bookmarks from an earlier date, but I only need this folder. Unfortunately I didn't make a recent backup myself, so I can't restore from one. In that case, could I do the same thing with the automatic backup copy of my bookmarks?

If you have reorganized your bookmarks a lot since your last backup, you could try the following steps to restore only the missing folder you need (provided it existed at your last backup):

- Save what you have now to a new JSON backup file;

- Restore the previous backup;

- Delete everything except the missing folder;

- Export to an HTML file;

- Restore the backup you created in step #1; then finally

- Import the HTML file created in step #4.

It's not the most elegant solution, but I hope it will accomplish what you need, if I understand you correctly.

-

Writing a cluster to a binary file adds size information

I have a cluster of data that I write to a file (all different numeric types, plus some U8 arrays). I write the cluster to the binary file with "prepend array or string size?" set to false. However, some additional data seems to be included (probably so LabVIEW can unflatten it back into a cluster). I verified this by unbundling each element, type casting each one, getting the string length of each, and summing them all. The result is the correct number of bytes. But if I flatten the cluster to a string and get its length, it is 48 bytes larger, and that matches the file size. Am I correct in assuming that LabVIEW adds extra metadata so the binary file can be unflattened into a cluster, and is it possible to get LabVIEW not to do that?

I would really rather not write all 30 elements of the cluster individually. Another application reads this file, and it expects the data without any extra bytes.

This is noted in the context help:

Arrays and strings in hierarchical data types such as clusters always include size information.

Well, it's a pain.
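To see where the extra bytes come from, here is a Python sketch of a hypothetical cluster with two numerics and three U8 arrays, packed with and without a size prefix per array. It assumes each 1D array carries a 4-byte int32 length, so the overhead is 4 bytes per embedded array or string. Under that assumption, a 48-byte difference would be consistent with 12 such elements, but the post does not confirm the count:

```python
import struct

# Hypothetical cluster: a float64 and a uint32, plus three U8 arrays.
numerics = struct.pack(">dI", 1.5, 7)
arrays = [bytes([1, 2, 3]), bytes([4, 5]), bytes([6])]

raw = numerics + b"".join(arrays)  # data bytes only
with_sizes = numerics + b"".join(struct.pack(">i", len(a)) + a for a in arrays)

print(len(raw), len(with_sizes))  # 18 30
```

A reader that expects no extra bytes needs the `raw` form. This is why writing the elements individually, or building the byte string yourself, is the usual workaround.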

Maybe you are looking for

-

How do I use the JournE Touch as an e-reader?

Greetings from Greece! I just bought the new JournE Touch tablet. https://www.toshibatouch.EU/index2.php Although I am happy with the hardware, I have not yet found a way to read my e-books (PDF files) or any other "office" documents... that

-

Hello, I recently bought an HP Dv6 laptop with Windows 7 Home Premium. I learned that once the HP laptop is registered, it's good to create a recovery disc (DVD copy) of Windows 7 (Recovery Manager), which helps in an emergency to break BO

-

HP Pavilion g6 2241sa: power failure on HP Pavilion g6 2241sa

Hello forum members. My problem is that I've had several light coffee spills on my HP Pavilion g6 2241sa. I've cleaned it all, replaced the keyboard, restarted, etc. Then for 3 months it just sat on the table with the battery, because the last attempt at cl

-

Can't send or receive messages. Error 0x80072EFE

I am unable to check my email through Windows Live Mail. I tried all the solutions found on Microsoft support, three different methods, but nothing has worked so far.

-

PNG does not display correctly in comps

I just installed After Effects CC on a new machine and opened a template I had made on another machine. Everything looked OK, except that one of the graphics, a PNG, displayed as large blocks of color instead of the image. Any ideas how to solve this pro