Adapting code to the cRIO-9068

Hi all

I have code that is supposed to work on NI 901x and 900x platforms. I want to adapt it to run on the NI 9068.

I can connect to my cRIO-9068, but the local chassis is still named (NI 9101). I can change it to cRIO-9068, but then compilation of the code (which is a simple use of the NI 9870) fails.

What can I do to adapt my code for the cRIO-9068?

Kind regards

SASA.

I recommend adding the new target to your project and then dragging everything from the old cRIO target to the new one. Then delete the old target. You will need to recreate your build specification.

Tags: NI Software

Similar Questions

-

All,

I have a cRIO-9068 that I am trying to use in Scan Mode. I have installed all the latest drivers and software as instructed. However, when I set my chassis to Scan Mode and then select Deploy All, I get this error on my chassis and all my modules:

"The current module settings require NI Scan Engine support on the controller. You can use Measurement & Automation Explorer (MAX) to install a recommended NI-RIO software set with NI Scan Engine support on the controller. If you installed LabVIEW FPGA, you can use this module with LabVIEW FPGA by adding an FPGA target item under the chassis and dragging and dropping the module onto the FPGA target item."

Does anyone know why LabVIEW does not recognize that the software is installed on my cRIO, or is it not installed correctly?

AGJ,

Thanks for the image. I saw a green arrow beside all my chip icons, which apparently meant that the software wasn't really installed. I formatted my cRIO and did a custom install. My problem was that I had both LabVIEW 2013 and 2014 installed, and conflicting software versions were being put on the cRIO. After doing a custom installation and choosing only the 2014 versions, my picture now looks like yours!

-

Does the Model Interface Toolkit support the Linux-based cRIO-9068 yet?

Hello, I have a cRIO-9068 and need to integrate a Simulink model into my controller. Does the Model Interface Toolkit support the Linux-based cRIO-9068 yet? And what about VeriStand? This page suggests that it is not supported:

http://digital.NI.com/public.nsf/allkb/2AE33E926BF2CDF2862579880079D751

Thank you

Hi Southern_Cross,

Based on the readme:

http://digital.NI.com/public.nsf/allkb/D3F40C101B66128186257D020049D679

It seems that it is now supported! These resources should provide a few more details:

http://zone.NI.com/reference/en-XX/help/374160B-01/vsmithelp/mit_model_support/

http://digital.NI.com/public.nsf/allkb/E552B0CD4E48215586257DF7005BE055

Please note that NI VeriStand 2014 does not support NI Linux Real-Time targets.

Kind regards

-

How to compile/link XNET with Eclipse for the cRIO 9068

Hi all

I use a cRIO 9068 with an NI 9862 (CAN module).

I'm programming in C/C++ with Eclipse on Windows.

Without trying to access CAN via XNET, the C app compiles and works very well on the 9068.

Now, I want to use XNET functions to access the CAN bus.

I found a nixnet.h in

\Shared\ExternalCompilerSupport\C\include, so the compiler is satisfied. But the linker can't find a library (I tried "nixnet" and "xnet"), and I can't find an XNET library for the cRIO 9068 on my hard drive.

Where can I find this library? Do I have to install another driver in addition to the normal XNET driver (I installed version 14.0)?

Thanks for your response in advance

Hello!

The driver readme says the following:

The NI-XNET software supports Microsoft Visual C/C++ version 6.

But for Linux RT-based targets (cRIO 906x and 903x) in combination with XNET modules (986x), I found a useful resource indicating that you should be able to include the header "nixnet.h" (as you apparently already do) and link against libnixnet.so at run time to obtain the appropriate symbols.

You should find the file in the following folder:

C:\Program Files (x86)\National Instruments\RT Images\NI-XNET\Linux-armv7

Best regards

Christoph

-

Can I connect an NI 9154 MXI-Express RIO chassis to the cRIO-9068?

I would like to use the cRIO-9068 in a new system but will need a second chassis off the first one. Can I use the NI 9154 MXI-Express RIO? If so, how do I connect the MXI cable to the 9068?

Not MXI-Express, but you can use EtherCAT: the NI 9144, an 8-slot EtherCAT slave chassis for C Series I/O modules.

-

Translation problem in MAX when configuring the SSH server on a cRIO-9068

Hello

I think there is a problem with the German translation of the remote settings in MAX for the new cRIO-9068. In the English version, you see "Enable Secure Shell Server". In the German version, you see "Secure Shell Server deaktivieren", which means to disable the SSH server. The checkbox has the same function in both versions, so after you "disable" SSH you can actually access it.

So it is probably just a translation problem. I have attached two screenshots.

Andreas

Thank you Andreas.

This was already reported in Nov 2013 and should be fixed soon.

Marco Brauner NIG.

-

Optimizing DMA in real-time code (cRIO FPGA -> RT)

Howdy,

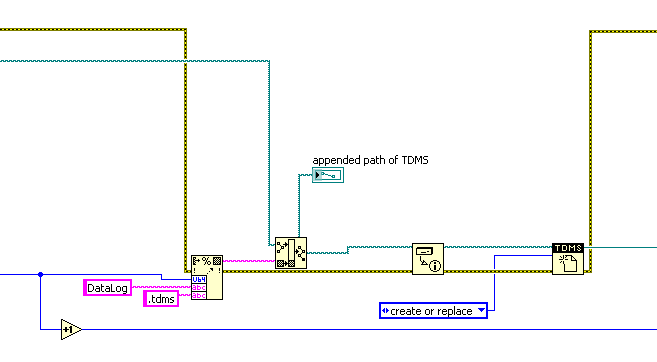

I am acquiring data from a microphone using the NI 9234 module. I wrote my RT and FPGA code. I am passing data between the FPGA and RT layers using a DMA FIFO. I noticed that the RT layer has difficulty emptying the FIFO, since the FPGA layer runs at a much faster pace. I would like some pointers or suggestions on silly things I am doing in the RT layer that cause it to run more slowly than necessary.

Currently, my RT code simply opens the FPGA reference, then in a loop extracts data from the FIFO and writes it to a file. I know that if I want to plot the data or perform an FFT on it, I should do that in a lower-priority loop so as not to slow down the data streamed from the FIFO. Are there simple performance gains that I'm missing in my code? Thank you.

I enclose a copy of my FPGA and RT code (screenshots and VIs), so that you can take a look.

I would seriously consider completely redesigning the code using this reference application template.

http://zone.NI.com/DevZone/CDA/tut/p/ID/9196

There are benchmarks that show how this template performs. With waveform acquisition, there is a lot to optimize. This reference application addresses all of it to give you the best performance.
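Independently of the reference application, the general idea the question mentions (keep the loop that drains the FIFO free of slow work such as file writes, plotting, or FFTs) can be sketched in Python. This is only an illustration of the producer/consumer split, not LabVIEW code and not the design of the linked template; `fake_fifo_read`, `acquisition_loop`, and `logging_loop` are hypothetical names, and the FIFO is simulated with a counter.

```python
import queue
import threading


def acquisition_loop(read_fifo, out_q, n_reads):
    """Time-critical loop: only drains the FIFO and hands blocks on."""
    for _ in range(n_reads):
        block = read_fifo()      # one large read per iteration
        out_q.put(block)         # cheap hand-off, no file I/O here
    out_q.put(None)              # sentinel: no more data


def logging_loop(in_q, sink):
    """Lower-priority loop: does the slow work (here, appending to a list)."""
    while True:
        block = in_q.get()
        if block is None:
            break
        sink.extend(block)


def run_demo(block_size=1024, n_reads=8):
    # Simulated DMA FIFO: returns consecutive integers in blocks.
    counter = iter(range(block_size * n_reads))

    def fake_fifo_read():
        return [next(counter) for _ in range(block_size)]

    q = queue.Queue()
    sink = []
    consumer = threading.Thread(target=logging_loop, args=(q, sink))
    consumer.start()
    acquisition_loop(fake_fifo_read, q, n_reads)
    consumer.join()
    return sink
```

In LabVIEW terms, the two functions would correspond to two parallel loops connected by a queue or RT FIFO, with the FPGA reference opened once before the acquisition loop starts.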

-

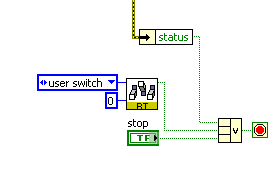

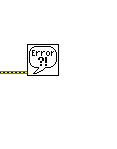

Hi, I have the following code, which stops when an error occurs.

But in the case of "Error 7", instead of stopping, the code shows a dialog with a 'Continue' button, as illustrated in the following figure.

I was wondering how I can change the code so that "Error 7" stops the run instead of opening a dialog with a button. In addition, I have the Simple Error Handler at the end of the code.


The Simple Error Handler is what triggers the dialog box. Read the help file for this function.

If you want to stop based on an error, unbundle the status from the error cluster and wire it to the stop terminal, ORing it with whatever else would normally cause your loop to stop.
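As a rough text-based analogue of that wiring (LabVIEW itself is graphical), the stop condition is simply "error status OR the normal stop condition". The sketch below uses a hypothetical `ErrorCluster` dataclass standing in for the LabVIEW error cluster; none of these names come from the original post.

```python
from dataclasses import dataclass


@dataclass
class ErrorCluster:
    """Stand-in for a LabVIEW error cluster (status, code, source)."""
    status: bool = False
    code: int = 0
    source: str = ""


def run_loop(steps, stop_requested=lambda: False):
    """Run steps in order; stop on error status OR on the normal stop request."""
    err = ErrorCluster()
    iterations = 0
    for step in steps:
        err = step(err)
        iterations += 1
        if err.status or stop_requested():   # the OR wired to the stop terminal
            break
    return iterations, err


def ok_step(err):
    return err


def failing_step(err):
    # Simulates a node returning error 7 (file not found).
    return ErrorCluster(status=True, code=7, source="failing_step")
```

Calling `run_loop([ok_step, failing_step, ok_step])` ends after the second step with the error still available to the caller, and no dialog is involved.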

-

High FPGA device utilization on the cRIO 9068

Hello. I have a problem with my cRIO 9068 (dual-core 667 MHz, Artix-7), which uses a lot of FPGA resources. I made a small FPGA VI (LabVIEW 2013 SP1) that turns the two-color (green and orange) USER FPGA LED on for 500 ms and then off for another 500 ms. The device utilization report shows 9193 registers used (8.6%) and 9744 LUTs (18.3%). I adapted the same VI for a cRIO 9072 (Spartan-3, 1M) that was available to me, and device utilization is much lower: registers used: 443 (2.9%) and LUTs: 644 (4.2%). Please see attachments!

I used the XNET library in the past with the 9068 and the 9862 CAN module. Since I knew that these libraries use FPGA space, I removed the XNET 1.8 software from the cRIO before this test. Before removing it, FPGA utilization was even higher!

Can someone tell me how I can reduce the resources used to 'normal' levels? Thank you!

Hi Lucien,

The increase in baseline resource utilization that you see is normal for the Zynq family of targets. Try compiling a blank VI for each target (no user-written LabVIEW code). You will notice that the Zynq platform always uses a significant part of the fabric (whichever Zynq target is used).

This behavior is due to the very different architecture of the Zynq family of chips. As the FPGA and ARM processors are on a shared die, a large part of the logic and NI processing that is implemented in ASICs on Spartan/Virtex boards has to be moved into the FPGA itself.

You should also notice that, although baseline fabric utilization is higher, the raw number of LUTs/flip-flops available to you as a user is much greater on Zynq targets than on a comparable Spartan unit.
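The figures quoted in the question are enough to check that last point with a little arithmetic. The sketch below back-calculates the approximate total resources from each "used (percent)" pair and subtracts what is already used. Because the reported percentages are rounded, the results are only estimates; the function names are illustrative.

```python
def implied_total(used: int, percent_used: float) -> float:
    """Total resource count implied by a 'used (percent)' report line."""
    return used * 100.0 / percent_used


# (used, percent) pairs exactly as quoted in the question above.
REPORTS = {
    "cRIO-9068": {"registers": (9193, 8.6), "LUTs": (9744, 18.3)},
    "cRIO-9072": {"registers": (443, 2.9), "LUTs": (644, 4.2)},
}


def remaining(target: str, resource: str) -> float:
    """Approximate number of resources still free on the given target."""
    used, pct = REPORTS[target][resource]
    return implied_total(used, pct) - used


if __name__ == "__main__":
    for target, resources in REPORTS.items():
        for name in resources:
            print(f"{target} {name}: about {remaining(target, name):.0f} still free")
```

Even after the larger baseline overhead, the 9068 estimate leaves several times more free registers and LUTs than the 9072 estimate.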

-

How to get the console on a Linux-based cRIO?

Hi all

I develop new things on a cRIO 9068. This cRIO runs Linux RT.

I built a cRIO application framework and have used it for several years. I use the console to debug my code and to display the status of my cRIO.

I can't find a way to see the console on the 9068 when I send strings to it (see the simple example attached to this post).

Can someone help me see what I send to the console?

BTW: I do not want to write debug output to the serial port, only to view the strings.

In fact, the output goes to the serial port (either through the "monitor" output option, which requires enabling the serial console, or to the serial port as usual without configuring console out), and there is currently no great, simple way to make it visible over the network via Ethernet.

One option you have is to change how lvrt is launched so that messages written to the screen are saved to a file, and then to access this file (from lvrt, WebDAV, or SSH). It is a more down-and-dirty approach, but it could get you the results you want.

Change the /etc/init.d/lvrt-wrapper or /usr/local/natinst/labview/lvrt-wrapper file to redirect the console output to a file

(note that brackets [] denote a key press; lines that begin with // are notes and do not have to be entered)

vi /location/of/lvrt-wrapper

Go to the line that looks like "exec ./lvrt".

[i]

Add the following code at the end of the line

[space]>[space]/home/lvuser/lvrt.log

[:] [w]

The line should now look like "exec ./lvrt > /home/lvuser/lvrt.log".

Restart your target, then check the contents of the file with "cat /home/lvuser/lvrt.log".
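The same edit can be described as a small text transformation. The Python sketch below works on the wrapper script's contents as a string and appends the redirect to any line that starts with `exec ./lvrt`. It is an illustration only: the exact contents of lvrt-wrapper on a given image are an assumption, the function name is hypothetical, and the real file should be backed up before it is changed.

```python
def add_console_redirect(script_text: str,
                         log_path: str = "/home/lvuser/lvrt.log") -> str:
    """Append '> log_path' to the 'exec ./lvrt' line of a wrapper script.

    Lines that already contain a redirect are left untouched, so applying
    the function twice gives the same result as applying it once.
    """
    out = []
    for line in script_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("exec ./lvrt") and ">" not in stripped:
            line = f"{line.rstrip()} > {log_path}"
        out.append(line)
    trailing_newline = "\n" if script_text.endswith("\n") else ""
    return "\n".join(out) + trailing_newline
```

For example, `add_console_redirect("exec ./lvrt\n")` yields a line that matches the one shown in the steps above.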

-

Calling a shared library from a VI on an NI Linux RT target (cRIO 9068)

Hi, I wrote a very small shared library (.so) with Eclipse (2014 toolchain), which essentially serves as a wrapper for more complex code, but I am having problems calling it from LabVIEW RT on a Linux RT target, specifically the cRIO 9068.

First: the library was copied to /usr/lib and ldconfig was run correctly. More importantly, I have also written a C program (also using Eclipse) that calls the single function currently implemented in the shared library. This works perfectly, both from Eclipse and when connecting directly to the cRIO with a PuTTY terminal. So I assume the shared library itself is OK and can be called from external code/programs.

Now, back to LabVIEW (2014 here, by the way). Here's where things get difficult. Initially, I had the symptoms listed here: http://forums.ni.com/t5/LabVIEW/How-to-create-a-c-shared-library-so-for-linux-real-time-for/td-p/302... which prevented me from actually running any code on the RT target. Then I changed the Call Library Function Node: various tutorials suggest putting name_of_library.* in the library name or path text box, but unfortunately that doesn't seem to work, so I had to enter the name with the extension too. But it still produced the error ("the function name is not found", etc.). So I checked the 'Specify path on diagram' box and passed /usr/lib as a parameter to the Call Library Function Node. Now the VI can run and is actually deployed to the RT target... but the error out cluster returns error 7, which is a kind of "File not found" error.

However, I believe this error message is misleading: if I try to remotely debug this shared library from Eclipse, I am actually able to pause it. When I press the Pause button in the debugger, the VI on the RT target pauses, then continues as soon as I press the Resume button. I'm stuck... I tried searching the forums and Google as well, but I have not yet found a solution. Any ideas on what's going on?

I can add more details if needed.

Problem solved: it was a misconfiguration of the Call Library Function Node that prevented the actual library (.so) from being called.

-

Link speed settings on cRIO do not survive restart

I'm running a cRIO-9068 with firmware revision 1.0.0f1. It is part of a static network that includes a switch set to auto-negotiate link speeds. However, if I set the cRIO link speed to auto-negotiation, the cRIO fails to connect to the switch. Pings fail, and the activity lights on the cRIO and the switch show no activity.

Curiously, the cRIO connects successfully when I set the link speed to 100 Mbps/full duplex or slower, and that is an acceptable workaround for me (as long as the project requirements don't creep beyond 100 Mbps). My problem is that this link speed setting does not survive a cRIO reboot. After the reboot, the link speed is reset to auto-negotiation and the cRIO is disconnected once more.

I'm changing the link speed configuration via the cRIO's web interface. I am logged in as an administrator and save my changes, and I get confirmation that the link speed has been set to 100/FDX. Despite this, a restart always resets the cRIO to auto-negotiation.

Another curiosity is that the switch reports the cRIO as connected at 100/HDX.

I tried creating a script file in /etc/init.d with a command along the lines of "ethtool -s <interface> speed 100 duplex full". I registered it using update-rc.d, but no joy. Whatever NI script is overriding mine is either not using ethtool, or it is not in /etc/init.d. I don't know what else to look for or where else to look.

Changing the switch setting to 100/FDX solved the problem, but this setting is applied to individual ports. This would force me to always use the same port for the cRIO, a restriction I would rather not commit to.

The problem is obviously the switch, because the cRIO connects to my development computer fine with auto-negotiation. Unfortunately, the switch is a non-negotiable hardware component of the project. The fix has to be made on the cRIO side.

Any thoughts on why the cRIO doesn't remember its link speed setting?

Red evening,

I found a known bug matching this problem, reported for LabVIEW 2013. A quick test with LV 2014 shows that it works as expected.

Can you try upgrading to the latest NI-RIO device driver? I could not find details of the fix, so I don't know whether it was on the LabVIEW side or the driver side, but it's worth a shot.

CAR # is 464089 for your records.

One last thing: you should switch to RIO 14.0.1, because a bug was discovered in some FPGA components, and you need the upgrade to get the fix.

http://digital.NI.com/public.nsf/allkb/90AEA2EB87466CE786257D20005A3A44

-

Cannot write negative values to a Modbus server on cRIO 9068

Hello everyone,

I'm moving a project from a cRIO 9114 platform to a cRIO 9068 because of the large difference in CPU power, memory, FPGA performance, etc.

On the real-time target I deploy a Modbus TCP server, and I publish only I16 data.

The problem comes when the program tries to write a negative value to a variable bound to Modbus. The variable has the same format (I16), so the program should be able to write negative values between 0 and -32768, but every time the Modbus server forces the value to zero.

I also tested Modbus with NI Distributed System Manager 2014, but it is still impossible to write negative values to an I16, although I can if I treat the data as I32!

(see files)

Furthermore, I deployed a modbus server on my PC and in this case, everything is fine.
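For context, a Modbus register is a plain 16-bit word, so a signed I16 value is normally carried as its two's-complement bit pattern. The sketch below shows that mapping in Python. It illustrates the expected encoding only and does not reproduce the cRIO's behavior or the NI Modbus I/O Server API; both function names are hypothetical.

```python
def i16_to_register(value: int) -> int:
    """Encode a signed 16-bit integer as the unsigned word held in a register."""
    if not -32768 <= value <= 32767:
        raise ValueError("value out of I16 range")
    return value & 0xFFFF


def register_to_i16(word: int) -> int:
    """Decode an unsigned 16-bit register word back to a signed integer."""
    if not 0 <= word <= 0xFFFF:
        raise ValueError("word out of 16-bit range")
    return word - 0x10000 if word & 0x8000 else word
```

Under this mapping a write of -1 should appear on the wire as 0xFFFF, so a server that returns 0 for every negative I16 is discarding the value rather than reinterpreting it.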

More information:

I work with LabVIEW 14.0f1.

The cRIO has 'LabVIEW Real-Time 14.0.0' and 'Modbus I/O Server 14.0.0' installed.

I tested the feature on three different cRIO 9068s with the same result.

I think something is wrong with the cRIO 9068. Can anyone help me?

Thank you

MZ

Hi Marcello,

I was able to reproduce the problem on the cRIO 9068, and it looks like a bug. I've opened a Corrective Action Request (CAR ID 511039) to report the issue to NI R&D.

Have you tried implementing the Modbus slave on a PC and the Modbus master on the cRIO? I tried it, and it works even with I16 data types.

I hope this will help you.

Kind regards.

Claudio Cupini

NI ITALY

Technical support

-

Hello:

We are planning a project in which we intend to use embedded vision and image processing with GigE cameras. Our client is interested in the cRIO-9068 embedded platform.

The question is: is there support for GigE Vision on the cRIO-9068?

I can't find a document that says so, so I assume no official support is provided. If that is the case, I would like to know why. I understand that the VxWorks targets do not support GigE, but this cRIO runs Linux. From the outside, I would think it possible to provide GigE support for Linux. The only devices that seem to support GigE run Phar Lap ETS.

Thanks in advance for your kind reply.

Just to answer the question: the 9068 does not support GigE Vision. The Ethernet MAC layer built into the chip architecture it uses does not meet the requirements of GigE Vision (similar to the old VxWorks/PPC cRIOs).

The 9068 does support USB3 Vision cameras via its USB 2.0 port, though, and many GigE Vision cameras have identical models in USB variants.

Eric

-

FPGA code stops when the RT code stops

I developed FPGA code to manage a piece of hardware. It is set up to read some default configuration values from front panel controls and then sit there continuously responding to input signals and driving output signals. The FPGA bitfile is written to flash so that it loads into the FPGA and starts almost immediately. So far, so good.

Then I layered RT code on top (cRIO-9075) that opens an FPGA reference to the FPGA front panel and lets me monitor a few indicators, change configuration settings, and make the FPGA use the changed settings. So far, this also seems to work when I run the RT code interactively (eventually there will be an interface to a host system).

The problem occurs when the RT code is told to stop. When the RT code stops, the FPGA code also stops, and I do not want the FPGA code to stop. The RT code makes no calls telling the FPGA code to stop executing. When the RT code is stopped, the only thing it does with regard to the FPGA is close the FPGA VI reference that was opened when the RT code started.

What should I do to keep the FPGA code running while the RT code is started and stopped?

I have to wait until I'm back in the office tomorrow to test this, but I think this link has the answer to my problem.

http://lavag.org/topic/16412-confusion-regarding-FPGA-Deployment/#entry100294

It says: "Close FPGA VI Reference. If you right-click it, you have the option of Close or the default, Close and Reset. This means the FPGA VI is reset (read: aborted, in standard LV speak) when we close the reference."
