Binary read incorrectly

Hello,

I made a VI to read a TIFF file as binary.

But I found that the total number of bytes read by my VI is always 15 bytes short.

I checked the total number of bytes with other binary editors (for example, Hex Fiend and 0xED).

Does anyone know why this problem occurs?

I have attached my VI and the TIFF image I tried to read.

Xiang00

You have a TIFF file, so you should not interpret it as a text file to count the number of bytes. You are probably losing bytes in the ASCII conversion. Use Get File Size (on the File I/O > Advanced File Functions palette) to get the total number of bytes, or, in the VI you posted, use Read from Binary File (wire -1 to the count input to read all bytes) with a U8 constant wired to the data type input and count the bytes using the array size.

Ben64
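As a rough illustration of how a text-mode read can come up short, here is a minimal Python sketch (Python stands in for the LabVIEW diagram; the file contents and helper names are made up for the example). It assumes the missing bytes come from end-of-line conversion, which is only one plausible cause of the 15-byte difference.

```python
import os
import tempfile


def count_text_mode(path):
    """Count characters seen by a text-mode read (newline translation is active)."""
    with open(path, "r", encoding="latin-1") as f:
        return len(f.read())


def count_binary_mode(path):
    """Count bytes seen by a raw binary read (no translation)."""
    with open(path, "rb") as f:
        return len(f.read())


# Hypothetical stand-in for the TIFF: every byte value once, plus 15 CR LF pairs.
payload = bytes(range(256)) + b"\r\n" * 15
with tempfile.NamedTemporaryFile(delete=False, suffix=".tif") as tmp:
    tmp.write(payload)
    path = tmp.name

size_on_disk = os.path.getsize(path)   # what the file system reports
binary_count = count_binary_mode(path)
text_count = count_text_mode(path)     # each CR LF pair collapses to one character
os.remove(path)

print(size_on_disk, binary_count, text_count)
```

In this sketch the binary count matches the size on disk, while the text-mode count is short by exactly the number of CR LF pairs in the file.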

Tags: NI Software

Similar Questions

-

Hello everyone,

I have read so many posts and topics about this binary read that I wonder how I still cannot make it work properly.

Briefly: I have an LXI instrument that I use over a TCP/IP interface. The instrument is driven through an IVI-COM driver that uses NI-VISA COM.

SCPI queries return their answers without problems. The problem starts when I need to read an IEEE block from the instrument. My driver is based on the VISA-COM library and implements a few interfaces such as IFormattedIO488 and IMessage, but not ISerial, since I don't need the serial interface. The binary response from the instrument is #41200ABCDABCDXXXXXXXXXX0AXXXXXXXXX, which is made up of 1200 bytes representing 300 float numbers.

To avoid stopping at a 0x0A character that is part of the binary data stream, I turn off the termination character through the IMessage interface by setting TerminationCharacterEnabled = FALSE.

Even so, when I read the byte stream with ReadIEEEBlock, the read always stops at the 0x0A character.

I have read in several places in this forum that reading a binary stream properly from an instrument requires two settings, not only the one I have just mentioned.

The first, which I am able to control, is setting TerminationCharacterEnabled to FALSE.

The second is the VI_ATTR_ASRL_END_IN attribute, which can be set only through the ISerial interface, and I cannot access that interface from my IVI-COM driver.

Since we do not expect to use the serial interface with our TCP/IP instruments, what other options do I have to read the full binary byte stream, even when it contains a 0x0A?

Our IVI-COM drivers are written in C++ and use NI-VISA version 4.4.1.

As a last resort, I tried changing the termination character through the IMessage interface to TerminationCharacter = 0x00 (NULL), but ReadIEEEBlock still stops at the 0x0A character.

The binary read is performed inside the IVI-COM driver and, if successful, the full array of float data points is passed to the client application in a SAFEARRAY* pData object passed as a parameter to a public method of the IVI-COM driver. The problem is that I cannot read past the 0x0A character through the VISA-COM interface.

Thank you

Sorin
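To make the block format in the question concrete, here is a minimal Python sketch of parsing an IEEE 488.2 definite-length block such as #41200... by its declared length rather than by a termination character. The function name, the simulated response, and the big-endian 32-bit float layout are assumptions for illustration; the thread does not state the instrument's byte order.

```python
import struct


def parse_definite_block(data: bytes) -> bytes:
    """Return the payload of a definite-length block: '#', digit count, length, payload."""
    if data[:1] != b"#":
        raise ValueError("block must start with '#'")
    n_digits = int(data[1:2].decode("ascii"))
    if n_digits == 0:
        raise ValueError("indefinite-length blocks are not handled in this sketch")
    header_end = 2 + n_digits
    length = int(data[2:header_end].decode("ascii"))
    payload = data[header_end:header_end + length]
    if len(payload) != length:
        raise ValueError(f"expected {length} payload bytes, got {len(payload)}")
    return payload


# Simulated response (assumption): 300 floats, each packing to 41 0A 00 00,
# so the payload is full of 0x0A bytes, followed by a trailing LF.
values = [8.625] * 300
response = b"#41200" + struct.pack(">300f", *values) + b"\n"

payload = parse_definite_block(response)
decoded = list(struct.unpack(">300f", payload))

# A reader that stops at the first 0x0A only sees a fragment of the block.
truncated = response.split(b"\n", 1)[0]
print(len(payload), len(truncated))
```

The header already says how many bytes follow, so a length-driven read does not need to treat 0x0A specially.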

Hi yytseng,

Yes, I can confirm that the bug is in the VXI-11 implementation on the LXI instrument itself. Up to the binary read, all previous responses were terminated by an LF (0x0A) character, which is also the termination character for the device_write function. The VXI-11 specification is very unclear here: it talks about a terminator for device_write and then about the END indicator for device_read, which is not necessarily the same for device_write and device_read. I talked to the people implementing the instrument's VXI-11 module, and both agreed that the specification lacks clarity about how write and read termination are handled. Ultimately, for our instrument the binary read has only the form #41200ABCDABCDXXXXXX, and the only termination setting is TermCharEnabled = TRUE and TermChar = 0x0A, which can be set through the IMessage interface of VISA-COM.

So the problem was not in VISA-COM but inside the LXI instrument's VXI-11 module, which sent the response to VISA as soon as it detected a 0x0A byte value in the byte stream. Now, after the change, the binary read passes beyond the 0x0A character, but I find myself struggling to read a huge number of floats in one ReadIEEEBlock call: up to 100,000 data points, equivalent to 400,000 bytes, while the buffers used between the LXI instrument and VISA are very small, only about 1 KB.

In any case, the answer to my original question was the poor implementation of device_read termination-character handling in the instrument's VXI-11 module.

Thanks for your help

Sorin
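For the large-transfer problem mentioned above, one common approach is to keep reading small chunks until the declared number of bytes has arrived. The Python sketch below only illustrates that loop; `read_exact`, the 1 KB chunk size, and the in-memory stream standing in for the instrument are all assumptions, not VISA-COM calls.

```python
import io


def read_exact(stream, total_bytes: int, chunk_size: int = 1024) -> bytes:
    """Accumulate chunks of at most chunk_size bytes until total_bytes are collected."""
    buffer = bytearray()
    while len(buffer) < total_bytes:
        chunk = stream.read(min(chunk_size, total_bytes - len(buffer)))
        if not chunk:
            raise EOFError(f"stream ended after {len(buffer)} of {total_bytes} bytes")
        buffer.extend(chunk)
    return bytes(buffer)


# Simulated instrument output (assumption): 400,000 bytes, including many 0x0A values.
source = bytes(i % 256 for i in range(400_000))
data = read_exact(io.BytesIO(source), total_bytes=400_000)
print(len(data))
```

The loop ends on the byte count taken from the block header, so neither the chunk size nor any 0x0A in the data decides where the read stops.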

-

Difficulty using the binary read/write

Hello

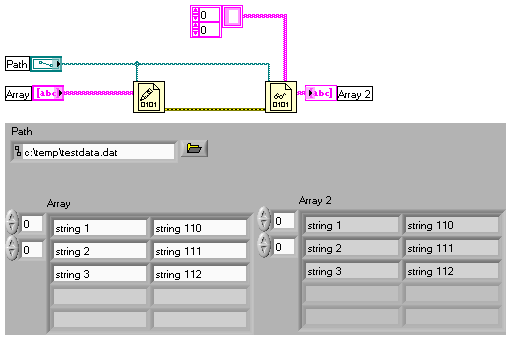

The binary read/write functions are somehow not working properly in my application.

I need to store a 2D string array in a binary file. When the user closes the application, the application stores the table values in the bin file.

When the application starts, it reads the file and displays its contents in the table.

The system works well if the total size of the content is 3 bytes. But if the content is larger than 3 bytes, the program simply returns a NULL value at first when I read the bin file.

How I save:

Convert the 2D array to a string using the "Flatten To String" function and save it to a binary file.

How I read:

Read the binary file. Convert the output string to a 2D array using "Unflatten From String".

I enclose my code here. Please have a look at it and let me know the cause of the problem.

Ritesh

I don't understand why you're flattening a 2D array of strings to a string. It's redundant. You also seem to be overriding the default big-endian byte order with little-endian. Are you trying to save it in a format suitable for other programs on other platforms?

If this is not the case, all you have to do is:

-

Data acquisition reading incorrect when you use a loop

Hello

I wrote a simple VI that outputs the values 00, 01, 10, and 11 to a circuit connected to 4 resistors. Depending on the value the circuit receives, it passes current through a particular resistor. The result is then read back into LabVIEW and plotted.

The problem: when I send one particular value (i.e. 00, 01, 10, or 11) and read it back, it's okay. But when I send and receive the values consecutively, driven by the loop counter, the readings are incorrect (not synchronized with the loop count).

I made sure that the circuit works correctly. It may have something to do with loop synchronization, reset, value offset, etc.

Please guide me.

Change the DAQ Assistant that does the reading to acquire 1 sample on demand.

Right now it is set for continuous samples, 10 samples at 10 Hz. It starts and runs. On the next iteration you send a new digital output, but then wait for 4 seconds. When you read again, you get the next 10 samples that were put into the DAQ buffer, but by now 40 samples have actually entered the DAQ buffer. In time your DAQ buffer will fill up and raise an error. In the meantime, the data you read becomes more and more stale with each iteration.
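The backlog described in this answer can be checked with a few lines of arithmetic. The Python sketch below is a simplified model, not DAQ code: it assumes the hardware keeps sampling at a constant rate and that each loop iteration removes a fixed number of samples; the function name and parameters are hypothetical.

```python
def simulate_backlog(rate_hz, samples_per_read, loop_period_s, iterations):
    """Return the number of unread samples left in the buffer after each iteration."""
    backlog = 0
    history = []
    for _ in range(iterations):
        backlog += int(rate_hz * loop_period_s)    # samples acquired during the wait
        backlog -= min(samples_per_read, backlog)  # samples removed by the read
        history.append(backlog)
    return history


# Values from the answer: 10 Hz continuous, 10 samples per read, 4 s per iteration.
history = simulate_backlog(rate_hz=10, samples_per_read=10, loop_period_s=4, iterations=5)
lag_seconds = [unread / 10 for unread in history]  # age of the oldest unread sample
print(history, lag_seconds)
```

Under these assumptions the backlog grows by 30 samples per iteration, so every read returns older data than the one before, which matches the loss of synchronization with the loop count.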

-

Binary read with one of many possible definitions - or - how to loop over different clusters?

Hi all

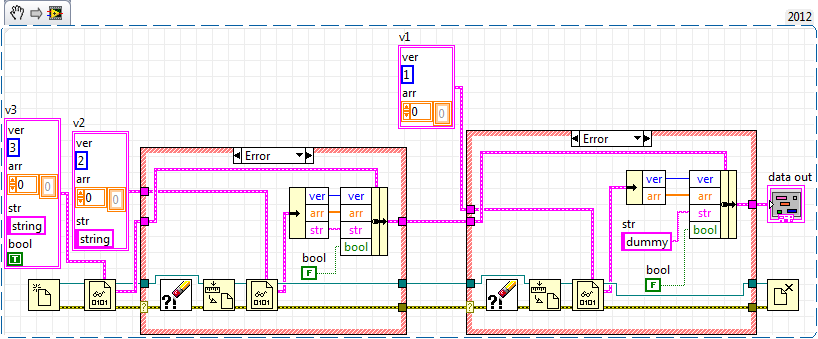

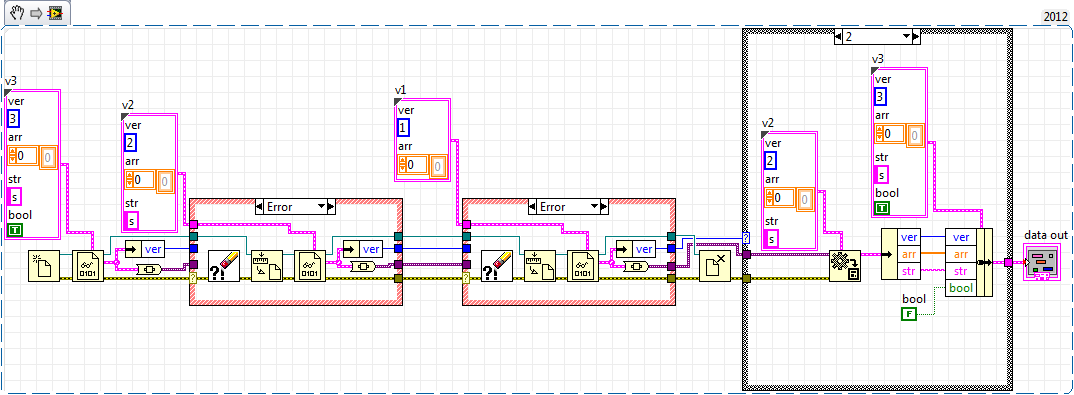

I have a cluster of different data types that I save as a binary file and read again later with another VI. I saved the cluster definition as a typedef, so I am sure that I use the same type for reading and writing.

Now I need to change the cluster in the writing program to include another value. However, I still want to be able to use the reading VI to read both old and new data files. I also want to be able to change the binary file definition in the future (in the writing VI) and quickly adapt the reading VI to the new definition while maintaining compatibility with the old ones.

My plan was to have a cluster typedef for each revision, say v1.ctl, v2.ctl, v3.ctl...

Suppose I have a binary input file that was saved using one of these typedefs, but I don't know which. I open the file and try to read its binary contents with each of my typedefs as the data type, stopping once the binary read VI returns no error. Then I translate this cluster to the current cluster definition by wiring through the existing values and assigning dummy/default values to the variables that are not in the binary file.

My idea of how this could work is shown here, with three filetype versions:

For each new filetype version I would need a new case structure, although the contents of the case structures are almost identical.

Because I save the filetype version in my files, I could even simplify this and use a separate case structure to update the data and fill in the dummy data:

Now the case structures are completely identical; only their inputs differ by type. How can I work around this? How can I loop over the different cluster typedefs efficiently?

I use LabVIEW 2012 SP1.

Since you already store a version in the cluster, just read that numeric by itself first, then use a case structure to set the file position back to before the version and read the entire cluster using the type definition that matches the version you read.
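The "read the version, rewind, then read the matching layout" idea can be sketched in Python with the struct module. Everything specific here is an assumption for illustration: the field names, the two layouts, and the choice of a big-endian U16 version number as the first field.

```python
import io
import struct

# Hypothetical layouts: every revision starts with a big-endian U16 version number.
LAYOUTS = {
    1: (">Hd", ("version", "gain")),
    2: (">Hdi", ("version", "gain", "offset")),
}
DEFAULTS = {"gain": 1.0, "offset": 0}  # values used for fields missing in old files
CURRENT_VERSION = 2


def read_record(stream) -> dict:
    """Peek at the version, rewind, read the matching layout, upgrade to the current one."""
    start = stream.tell()
    (version,) = struct.unpack(">H", stream.read(2))
    if version not in LAYOUTS:
        raise ValueError(f"unknown file version {version}")
    stream.seek(start)  # go back to before the version field
    fmt, names = LAYOUTS[version]
    fields = struct.unpack(fmt, stream.read(struct.calcsize(fmt)))
    record = dict(DEFAULTS)
    record.update(zip(names, fields))
    record["version"] = CURRENT_VERSION
    return record


old_record = read_record(io.BytesIO(struct.pack(">Hd", 1, 2.5)))
new_record = read_record(io.BytesIO(struct.pack(">Hdi", 2, 2.5, -7)))
print(old_record, new_record)
```

Adding a revision then means adding one entry to the layout table and one default value, instead of another trial read that relies on an error to detect a mismatch.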

-

Read binary values, then output them on 1 channel

Hi everyone,

I am new to LabVIEW programming and need help with my project.

Currently I have a txt file containing something like this:

1 0 1 0

0 1 0 1

1 1 0 0

0 0 1 1

How can I read the text file and output the values on the USB-6501 through 1 digital output line with an interval of 1 second?

On the digital output line I should get 1 0 1 0 0 1 0 1 1 1 0 0 0 0 1 1.

Any help is appreciated. Thank you.

Hi andy,

Check the VI below. In it, the data in the string is converted into an array. You can use this array and index the elements as needed for your application.

Let me know whether it worked or not!
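The string-to-array step from the reply, followed by a timed output loop, might look like this in Python. This is only a sketch: `write_line` is a hypothetical callback standing in for the actual digital output call, and no DAQ driver API is used.

```python
import time


def parse_bits(text):
    """Flatten whitespace-separated 0/1 tokens, row by row, into one list."""
    bits = [int(token) for line in text.splitlines() for token in line.split()]
    if any(bit not in (0, 1) for bit in bits):
        raise ValueError("file must contain only 0 and 1")
    return bits


def output_bits(bits, write_line, interval_s=1.0, sleep=time.sleep):
    """Send each bit through write_line, pausing interval_s between values."""
    for index, bit in enumerate(bits):
        write_line(bool(bit))
        if index < len(bits) - 1:
            sleep(interval_s)


text = "1 0 1 0\n\n0 1 0 1\n\n1 1 0 0\n\n0 0 1 1\n"
bits = parse_bits(text)

sent = []  # records what would have been written to the line
output_bits(bits, write_line=sent.append, interval_s=0.0)
print(bits)
```

With a real device, `write_line` would be replaced by the driver's single-line write and `interval_s` left at 1.0.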

-

What could possibly make the "manufactured" date in the "About This Mac" window read incorrectly?

I have an early 2010 17" MacBook Pro with a 2.66 GHz i7, but in the "About This Mac" window it shows up as an early 2012 model.

Has it ever been serviced?

Has the logic board been replaced?

I think that information is taken from the logic board.

-

Reading a binary file doesn't work on MCB2400 in LV2009 Embedded for ARM

Hello

I am trying to read a binary file from the SD card on my MCB2400 board with LV2009 Embedded for ARM.

But the result is always 0 when I run my VI on the MCB2400. If I use the same VI on the PC, it works very well with the binary file.

On the other hand, access to the SD card works on the MCB2400: if I try to read a text file, it works without any problem. Are there constraints on the "read a binary file" node in Embedded compared to the same node on the PC?

I noticed that there is also a problem with reading text files. If the size of the file is approximately 100 bytes, it doesn't work either. I can't understand this, because I always read one byte at a time. And even if the implementation in LabVIEW were so bad that it always read the whole file into RAM, it should work. The MCB2400 has 32 MB of RAM, so 100 bytes or even a few megabytes should work.

But this doesn't seem to be the cause of the binary problem, because even a 50-byte binary file doesn't work.

Bye & thanks

Amin

I know that you have already solved this problem with a workaround, but I did some digging in the source code to find the cause and found the following:

Currently, the binary read/write primitives do not support the "byte order" input. You should therefore always leave this input at its default (or 0), which uses the native endianness of the target (little-endian for the ARM target). If you wire any value other than the default, the primitive returns an error and does not perform the read/write.

So, theoretically, if you go back to your original VI and remove the "byte order" input on the binary file read, it should perform a little-endian binary read.

This also brings up another point:

If a primitive does not behave as you expect, check the error output.
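As a small illustration of native versus explicit byte order, and of checking for errors instead of trusting a silent result, here is a Python sketch using the struct module. It does not model the embedded LabVIEW primitive; the four sample bytes are made up.

```python
import struct
import sys

raw = bytes([0x01, 0x00, 0x00, 0x00])  # hypothetical 4-byte field read from a file

as_little = struct.unpack("<I", raw)[0]  # explicit little-endian
as_big = struct.unpack(">I", raw)[0]     # explicit big-endian
as_native = struct.unpack("=I", raw)[0]  # whatever the current machine uses

# The native result matches one of the explicit results, depending on the platform.
expected_native = as_little if sys.byteorder == "little" else as_big

# A failed read should be noticed: here a truncated buffer raises an error
# instead of quietly producing a zero.
error_message = None
try:
    struct.unpack("<I", raw[:3])
except struct.error as exc:
    error_message = str(exc)

print(as_little, as_big, sys.byteorder, error_message)
```

The same four bytes give 1 or 16777216 depending on the byte order, which is why a mismatch between writer and reader produces values that look like garbage rather than an obvious failure.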

-

Read in LabVIEW a complex binary file written in MATLAB, and vice versa

Dear all, we use the attached function "write_complex_binary.m" in MATLAB to write complex numbers to a binary file. The format used is IEEE floating point with big-endian byte order. We use the attached "read_complex_binary.m" function to read the complex numbers back from the saved binary file. However, I just don't seem to be able to read the generated binary file in LabVIEW. I tried the "Read from Binary File" block with big-endian ordering, without success. I'm sure it is due to my lack of knowledge of how the LabVIEW block works. I also can't find useful resources on this issue. I was hoping that someone could kindly help with this or give me some ideas to work with.

Thank you in advance. Please find the two MATLAB functions attached as a zip. Kind regards.

Be a scientist - experiment.

I guess you know MATLAB and can generate a little complex data and use the MATLAB function to write it to a file. You can also examine the MATLAB function that you posted: you will see that MATLAB takes the complex array apart into 2D (real, imaginary) and writes the values as 32-bit floats, which LabVIEW calls "Sgl".

So now you know that you must read an array of Sgls and find a way to put it back together into a complex array.

When I did this experiment, I made the real part of the complex data (in MATLAB) [1, 2, 3, 4] and the imaginary part [5, 6, 7, 8]. If you're curious, you can write these out in MATLAB with your complex data write function, then read them back as a simple array of Sgl to see how they are ordered. There are two possibilities: [1, 2, 3, 4, 5, 6, 7, 8] if written as "all reals, then all imaginaries", or [1, 5, 2, 6, 3, 7, 4, 8] if written as "(real, imaginary) pairs".

Now you know (from the MATLAB function) that the data is an array of Sgl (in LabVIEW). I assume you know how to write the usual three functions that open the file, read the entire file into an array of Sgl, and close the file. Do this experiment and see whether you get sensible numbers. The "problem" is the byte order of the data: does MATLAB use the same byte order as LabVIEW? [Hint: if you see the numbers from 1 to 8 in one of the orders above, your byte order is correct; if not, try a different byte order on the LabVIEW binary read function.]

OK, now you have your array of 8 Sgl numbers and want to convert it to an array of 4 complex numbers [1 + 5i, 2 + 6i, 3 + 7i, 4 + 8i]. Once you understand how to do this, your problem is solved.

To help you when you come to use this code, write it as a subVI whose input is the path to the file you want to read and whose output is the CSG array from the file. My LabVIEW routine had 8 LabVIEW functions: three for file I/O and five to convert the 1D array of Sgl into a 1D array of CSG. No loops were needed. Test it: you can test against the MATLAB data file you used for your experiment (see above), and if you get the right answer, you wrote the right code.

Bob Schor
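The experiment described above can be mirrored in Python to see both candidate layouts and the effect of a wrong byte order. This is a sketch under assumptions: the helper names are hypothetical, the data is generated in place rather than by the MATLAB functions, and which of the two layouts the posted MATLAB code actually uses is not stated in the thread.

```python
import struct


def pack_floats_be(values):
    """Pack numbers as big-endian 32-bit floats (the format named in the question)."""
    return struct.pack(f">{len(values)}f", *values)


def read_floats_be(raw):
    """Read a whole buffer as big-endian 32-bit floats."""
    count = len(raw) // 4
    return list(struct.unpack(f">{count}f", raw[:count * 4]))


def from_pairs(floats):
    """Layout A: (real, imaginary) pairs, e.g. [1, 5, 2, 6, ...]."""
    return [complex(re, im) for re, im in zip(floats[0::2], floats[1::2])]


def from_halves(floats):
    """Layout B: all reals first, then all imaginaries, e.g. [1, 2, 3, 4, 5, ...]."""
    half = len(floats) // 2
    return [complex(re, im) for re, im in zip(floats[:half], floats[half:])]


raw_pairs = pack_floats_be([1.0, 5.0, 2.0, 6.0, 3.0, 7.0, 4.0, 8.0])
raw_halves = pack_floats_be([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])

complex_a = from_pairs(read_floats_be(raw_pairs))
complex_b = from_halves(read_floats_be(raw_halves))

# Reading the same bytes with the wrong byte order gives nonsense, not 1..8.
wrong_order = struct.unpack("<8f", raw_pairs)
print(complex_a, complex_b, wrong_order[0])
```

Both layouts recombine to the same four complex numbers once the right one is chosen, and a wrong byte order shows up immediately because the values are nowhere near 1 to 8.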

-

Read an AVI using the "Read from Binary File" VI

My question is how to read an AVI file using the "Read from Binary File" VI.

My goal is to create a series of small AVI files, each 2 seconds long, using IMAQ AVI Write Frame with the MPEG-4 codec (up to 40 images per file at 20 frames per second), and then send them one by one to create a video stream. The images are acquired from a USB camera. If I read these frames using IMAQ AVI Read Frame, the compression advantage would be lost, so I want to read the entire file itself.

I read the AVI file using "Read from Binary File" with the unsigned 8-bit data format, sent it to the remote end, saved it there, and then played it, but it did not work. Later I found that if I read an image file using "Read from Binary File" with the unsigned 8-bit data format and save it on the local computer, the format is changed and the file becomes unrecognizable. Am I doing something wrong by reading the file as unsigned 8-bit integers, or should I use another data type?

I'm using LabVIEW 8.5 with the Vision Development Module and Vision Acquisition 8.5.

Your help would be much appreciated.

Thank you.

Hello

Check out the detailed help for "Write to Binary File".

The "prepend array or string size?" input defaults to True, so in your example the data written to the file is preceded by the array size, and your output file will be four bytes longer than your input file. Wire a False constant to "prepend array or string size?" to avoid this problem.

Rod.
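The effect of a size prefix on a copied file can be reproduced outside LabVIEW. The Python sketch below assumes the prefix is a 4-byte big-endian signed integer holding the element count, which is consistent with the four extra bytes mentioned above; the function name and sample data are hypothetical.

```python
import struct


def with_size_prefix(data: bytes) -> bytes:
    """Emulate a write that prepends the array length as a 4-byte big-endian integer."""
    return struct.pack(">i", len(data)) + data


original = bytes(range(256)) * 4        # stand-in for the bytes of the AVI file
prefixed = with_size_prefix(original)   # what ends up on disk with the prefix enabled
recovered = prefixed[4:]                # dropping the prefix restores the file

print(len(original), len(prefixed), prefixed[:4].hex())
```

A player that expects the AVI header at byte 0 finds the length field there instead, so the copied file is unrecognizable even though every original byte is still present.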

-

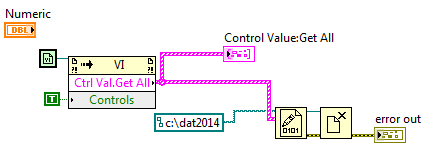

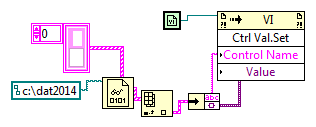

Change in Ctrl.Val.Get or binary write format from LV8.5 to 2014

Hello

I have recently upgraded from LV8.5 to 2014 and ran into a compatibility problem. I used the Ctrl.Val.Get method to get an array of values and save them to a binary file:

to be read later with a binary read:

My problem is that files generated this way with LV2014 (64-bit) cannot be read by LV8.5 (32-bit): the result is a "LabVIEW: Unflatten or byte stream read operation failed due to corrupt, unexpected, or truncated data" error or an application crash.

Did the binary write VI change from LV8.5 to 2014? Is this a 32/64-bit problem? Oddly enough, LV2014 reads the files generated with LV8.5 without problems. Is there a way for me to make the LV2014 program backward compatible with LV8.5 (something I need for a prolonged transition period)?

Kind regards

Casper

Unless you define the binary file format exactly (for example, define how the data is stored in the file down to the byte level), I would be doubtful that the file is readable across newer/older versions of LabVIEW, especially if you are using complex data types such as clusters.

To deal with backward compatibility, either define the binary file format and work to that, or use a text-based file format (e.g., JSON, XML, INI) for which you can write a backward-compatible parser. The OpenG library has VIs for saving/loading the controls on a VI's front panel to an INI file, for example (even if OpenG stops being supported, it is a text-based format, so you can always write your own parser if you need to in the future). I know that doesn't help you in your current situation, but it may be something to keep in mind for the future.
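As one way to picture the text-based option, here is a minimal Python sketch that stores control values as JSON with a format version and fills in defaults for anything an older file lacks. The control names, defaults, and version numbers are hypothetical; this is not the OpenG format.

```python
import copy
import json

FORMAT_VERSION = 1
DEFAULTS = {"gain": 1.0, "channels": [], "label": ""}  # hypothetical controls


def dump_controls(values: dict) -> str:
    """Serialize control values together with an explicit format version."""
    document = {"format_version": FORMAT_VERSION, "controls": values}
    return json.dumps(document, indent=2, sort_keys=True)


def load_controls(text: str) -> dict:
    """Load known controls, ignoring unknown keys and defaulting missing ones."""
    document = json.loads(text)
    controls = copy.deepcopy(DEFAULTS)
    stored = document.get("controls", {})
    controls.update({key: value for key, value in stored.items() if key in DEFAULTS})
    return controls


saved = dump_controls({"gain": 2.5, "channels": [0, 1, 3], "label": "run A"})
loaded = load_controls(saved)

# A file from an older writer that only knew about "gain" still loads.
older = load_controls('{"format_version": 0, "controls": {"gain": 4.0}}')
print(loaded, older)
```

Because the file is keyed by name rather than by byte position, a reader from either program version can skip what it does not know and default what it does not find.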

-

Hi all!

I'm a real newbie with smart cards in general. Sorry in advance for my bad English :s

We are developing a mini-app to read the openly available (unencrypted) data from the official University of Seville card.

The smart card has a Java contact chip with a WG10 emulator for backward compatibility with older cards, and a capacity of 32 KB. There is also a Mifare chip, but the data we need is on the first chip.

The content of the contact chip is organized in the following structure:

The data we want to read is stored in EFdatos 1005. This EF holds more data than we need; the specific data is located at offset 0xF2 with length 8 (decimal, in bytes), and its label is "serial number".

Here is a small piece of code that tries to read the data from the card chip:

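import java.util.List;
import javax.smartcardio.*;
import javax.xml.bind.DatatypeConverter; // part of the JDK up to Java 8 only

// FIRST_TERMINAL and PROTOCOL_T0 are constants assumed to be defined in the enclosing class.
public void cardInfo() throws CardException, CardNotPresentException {
    TerminalFactory factory = TerminalFactory.getDefault();
    List<CardTerminal> terminals = factory.terminals().list();
    CardTerminal terminal = terminals.get(FIRST_TERMINAL);
    Card card = terminal.connect(PROTOCOL_T0);
    CardChannel channel = card.getBasicChannel();

    // SELECT (INS = 0xA4) by DF name (P1 = 0x04) with no AID supplied.
    byte[] resp = {(byte) 0x00, (byte) 0xA4, 0x04, 0x00, 0x00};
    CommandAPDU command = new CommandAPDU(resp);
    ResponseAPDU response = channel.transmit(command);
    // Print the full response, including the trailing status word SW1 SW2.
    System.out.println(DatatypeConverter.printHexBinary(response.getBytes()));

    card.disconnect(false);
}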

This is the only output I get that is not an error/warning status such as 6A 82, 6D 00, 6A 86... (SW1 | SW2):

6F658408A000000018434D00A559734A06072A864886FC6B01600C060A2A864886FC6B02020101630906072A864886FC6B03640

B06092A864886FC6B040255650B06092B8510864864020103660C060A2B060104012A026E01029F6E061291111101009F6501FF9000

Other commands such as GET DATA, READ BINARY, etc. did not work for me. I also tried to decode the data above with an ASN.1 decoder, but I have not found any information on how to go further with it.

Please, can someone help me or give me some idea of how to continue? I'm really disappointed.

I really appreciate your help!

Thanks in advance,

Luis.

Hi again,

I think that "58 59 00 FF 00 04 46 01" is the AID of the application. The result looks fine (it ends with SW 9000), and it seems that you have successfully selected the requested application. Now you are able to select any file in the application. I guess that your goal is to read EFdatos, which has the file identifier "1005". Please try the following sequence:

(1) Select the application: resp1 = {0x00, (byte)0xA4, 0x04, 0x00, 0x08, 0x58, 0x59, 0x00, (byte)0xFF, 0x00, 0x04, 0x46, 0x01, 0x00};

(2) Send the "Select File" command for the DF1 file: resp1 = {0x00, (byte)0xA4, P1, 0x00, 0x02, xx, yy}, where P1 = 0x00 or 0x01 and xx yy is the 2-byte file identifier (ask for it or search for it in a loop)

(3) Send the "Select File" command for the EFdatos file: resp1 = {0x00, (byte)0xA4, P1, 0x00, 0x02, 0x10, 0x05}, where P1 = 0x00 or 0x02

(4) Send the "Read Binary" command for the EFdatos file: resp1 = {0x00, (byte)0xB0, 0x00, 0x00, (byte)0xFF};

For the ISO 7816-4 commands, please read:

ISO 7816-4 smart card standard (ISO 7816, part 4, section 6): Basic Interindustry Commands

Regards
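The command sequence above can be written as small APDU builders. The sketch below is in Python rather than the thread's Java and only constructs and inspects byte strings; it does not talk to a card. The helper names are hypothetical, and the READ BINARY example uses the offset 0xF2 and length 8 from the question, which is an assumption about how the reply's step (4) would be adapted.

```python
def select_by_aid(aid: bytes) -> bytes:
    """SELECT by DF name: CLA=00 INS=A4 P1=04 P2=00 Lc AID Le=00."""
    return bytes([0x00, 0xA4, 0x04, 0x00, len(aid)]) + aid + b"\x00"


def select_file(file_id: int, p1: int = 0x00) -> bytes:
    """SELECT by 2-byte file identifier: CLA=00 INS=A4 P1 P2=00 Lc=02 FID."""
    return bytes([0x00, 0xA4, p1, 0x00, 0x02, file_id >> 8, file_id & 0xFF])


def read_binary(offset: int, length: int) -> bytes:
    """READ BINARY with a 15-bit offset in P1-P2 and the expected length in Le."""
    if not 0 <= offset <= 0x7FFF:
        raise ValueError("offset must fit in 15 bits")
    if not 1 <= length <= 0xFF:
        raise ValueError("this sketch only handles lengths from 1 to 255")
    return bytes([0x00, 0xB0, offset >> 8, offset & 0xFF, length])


def split_response(response: bytes):
    """Separate the data field from the trailing status word SW1 SW2."""
    return response[:-2], int.from_bytes(response[-2:], "big")


AID = bytes.fromhex("585900FF00044601")  # value quoted in the reply
commands = [
    select_by_aid(AID),
    select_file(0x1005),      # EFdatos
    read_binary(0xF2, 8),     # "serial number" field from the question
]
data, status = split_response(bytes.fromhex("AABB9000"))  # made-up response
print([c.hex().upper() for c in commands], hex(status))
```

A status word of 0x9000 indicates success; any other value should be checked before the data field is interpreted.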

-

GPS information are incorrect in lightroom cc.

Hello

I use Lightroom CC, having switched my photo manager from Apple Aperture.

Lightroom is a good program for managing photos, but there is a problem with GPS information.

I use a Canon 6D with its GPS module, so when I take a picture, the GPS information is written into the photo.

When I import the files into Aperture 3 and open the Places tab (map view), the GPS location where I took the picture is correct.

However, when I import the same files into Lightroom, the GPS information is incorrect.

The latitude is correct, but the longitude is incorrect.

For example, I took a picture in Korea, but Lightroom shows my photo in Iran.

How can I solve this problem?

It seems that the software that wrote the GPS coordinates (Capture One 8?) wrote them in a format that conforms to the industry standard but that LR cannot handle. I filed a bug report in the official Adobe feedback forum: "Lightroom: GPS read incorrectly". Please add your vote and details there, including which software added the GPS information. That will make it a bit more likely that Adobe will give priority to a fix. (The Adobe product developers rarely participate in this forum.)

The bug report also includes a work around using the free Exiftool.

-

Is it true that if I download Firefox 6, Skype will be disabled?

I tried to download Firefox 6 but, unless I read it incorrectly, it said that I would no longer be able to use Skype. Is this true? I really love Firefox, but I would not be able to give up Skype.

No, it is not true. All that Firefox 6 would disable is the Skype for Firefox extension, which highlights phone numbers on web pages viewed in Firefox and lets you call them in Skype by clicking the highlighted number.

-

DDF mount data to the database

Dear:

I need to design an application that reads data from a DDF file and loads it into a database.

Could you please let me know how I can design an application to read these DDF files?

I propose the following steps:

1. Read the original DDF file as binary

2. Convert it to ASCII

3. Finally, follow the DASYLab format to extract the data and transfer it to the database file.

Thank you

There are two ways to do this:

First, just read the data using the Read Data module and write it out again with a Write Data module configured for ASCII. Please check the ASCII options to ensure that the data separator, date/time format, and number of decimal places meet your needs.

Make sure that you configure the Read Data module NOT to read in real time. The default is to replay in real time.

Second, read the data using the Read Data module and use the ODBC output module and an Action module to write directly to the database. This only works for relatively slow data and cannot be done at high speed.
