Collect data to write to a file

Hello everyone!

I have been stuck on a rather depressing problem for a few days. I have a VI with a while loop that is part of a larger project. My goal is to collect data and save it to a file after 1000 while loop activities. To be more specific: after the loop has completed 1000 passes, I want to write to the file the pass number ('Number' in the attached picture) and the resulting value ('table'), as a kind of table (2 columns, 1000 rows). I can't put another while loop around the whole VI because it has to let the other VIs work. Can you suggest something?

...by while loop "activities", do you mean loop iterations?...

Yes, that's right, I couldn't find the right expression at the end of the night.

OK, I will try to write to the table line by line. I'll check whether it is possible to append a line of data to an existing spreadsheet file after each iteration of the loop, 1000 times. It might be more reliable, because each iteration of the loop runs once every 30 minutes, and if the PC loses power I lose all the data.

Thank you so much for the ideas!
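For readers who want to see the line-by-line idea outside LabVIEW, here is a minimal Python sketch of it. The function name `append_row`, the column headers, and the file layout are assumptions made for illustration; they are not taken from the original VI. It appends one row per iteration and asks the OS to commit it to disk, so a power loss should cost at most the row being written.

```python
import csv
import os


def append_row(path, iteration, value):
    """Append one (iteration, value) row and push it to disk right away."""
    is_new = not os.path.exists(path)
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            # Write the header only when the file is created.
            writer.writerow(["Number", "Value"])
        writer.writerow([iteration, value])
        f.flush()
        os.fsync(f.fileno())  # ask the OS to commit the row to disk
```

Calling `append_row("results.csv", i, value)` once per loop iteration builds up the 2-column table over time instead of holding 1000 rows in memory.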

Tags: NI Software

Similar Questions

-

When using "Collect Files and Copy to New Location", I get a file not found error...

I have 24 hours to media manage a feature-length documentary and send it off for the online. Everything is ready to go, so I go into the Project Manager to media manage all of this, and I get the following error message:

This makes no sense: why is it looking in the destination folder for the file it is copying? The file in question is not offline, it is linked correctly in the project file, and it plays without problems. I also tried manually copying the file into the destination folder to see if that changes anything, but no luck.

I would welcome any ideas anyone might have!

Thank you!

-Michael

It seems it was because the file name had a '-' in it. I renamed the file and then relinked it, and it has been working fine since.

-

Nothing crazy, just footage and a single sequence (not even any effects applied). I can open my file in CC 2014. If I try to open it in Creative Suite 6, I get a message that the file appears to be corrupted and cannot be opened. I can, however, still open it in the trial version of CC 2014. I will, of course, want to return to the project after the trial.

I tried the following:

Re-saved the project in CC 2014. It still shows as corrupt in CS6.

Tried to open the project through the Media Browser in CS6, at least to import it. No luck.

Tried the saved copies, no luck.

Suggestions?

You will not be able to open a project made in CC 2014 in CS6.

CC 2014 is not backward compatible and never has been.

You can export the CC 2014 project as XML (File > Export > Final Cut Pro XML) and import it into CS6.

You will probably be left with only the footage. The rest, such as effects and transitions, may be lost.

-

Harvest of the files (xsd/wsdl, etc.) does not

Hello all

I'm trying to harvest the files and directories of BPEL projects via PuTTY.

The command I use is:

./Harvest.sh -URL http://172.18.79.90:7101/oer1 -user admin -password v2_1.G+NTr3az8thaGGJBn0vwPg== -file /home/oracle/SOAApplication/SOABpelProject/xsd/Employee.xsd

Here v2_1.G+NTr3az8thaGGJBn0vwPg== is the password that is generated in the HarvesterSettings.xml file after running ./encrypt.sh

but I get the error below:

com.oracle.oer.sync.framework.MetadataIntrospectionException: com.oracle.oer.sync.framework.MetadataIntrospectionException: cannot read file plugin: /home/oracle/soa_new/repository111/core/tools/solutions/11.1.1.6.0-OER-Harvester/harvester/./plugins/task.introspector

at com.oracle.oer.sync.framework.MetadataManager.init(MetadataManager.java:310)

at com.oracle.oer.sync.framework.Introspector.<init>(Introspector.java:201)

at com.oracle.oer.sync.framework.Introspector.main(Introspector.java:428)

Caused by: com.oracle.oer.sync.framework.MetadataIntrospectionException: cannot read file plugin: /home/oracle/soa_new/repository111/core/tools/solutions/11.1.1.6.0-OER-Harvester/harvester/./plugins/task.introspector

at com.oracle.oer.sync.framework.impl.DefaultPluginManager.processIntrospector(DefaultPluginManager.java:127)

at com.oracle.oer.sync.framework.impl.DefaultPluginManager.<init>(DefaultPluginManager.java:73)

at com.oracle.oer.sync.framework.MetadataManager.init(MetadataManager.java:308)

... 2 more

Caused by: com.oracle.oer.sync.framework.MetadataIntrospectionRuntimeException: error: could not find the type of assets in REL: 898dd147-3680-11de-bee0-79d657a0a2b0. Please ensure that the harvester Pack Solution is installed in OER.

at com.oracle.oer.sync.framework.MetadataManager.putAssetType(MetadataManager.java:213)

at com.oracle.oer.sync.framework.impl.DefaultPluginManager.processIntrospector(DefaultPluginManager.java:104)

... 4 more

Can someone please help me solve this issue?

Please ensure that the Harvester Solution Pack is installed in OER.

-

How many channels can I write to a file with the Write Data module (DASYLab 12.00.00)?

I would like to write data from a large number of channels (up to 128) to the same file. With the Write Data module, I only get up to 16 channels! The only solution I found is to save the data in 8 different files... Is there a solution for this problem? I use DASYLab V.12.00.00. Thank you

You can use the Multiplexer module. It lets you combine 16 channels into 1, which allows you to save up to 256 channels in a single file.

In the Write Data module, you can then select Options and, under "Input Type", select the mixed single values setting. You will then need to define how many channels each input will receive.

-

Keeping track of test data to write to a CSV file

Hi, I'm new to LabVIEW. I have a state machine in my front panel VI that runs a series of tests. Each time, I update the indicators on the panel with the state. My question is: what is the best way to keep track of the test data my indicators are loaded with during the test, so that at the end of the test I can bundle the test data into a cluster and send it to another VI to write my CSV file? I already have a VI that writes the CSV file; the problem is keeping track of the data along with my indicators. It would be nice if you could just read the data stored in the indicators, but I realize there is no output node =) Any ideas on the best painless approach to this?

Thank you, Rob

Yes, that's exactly what typedefs are for:

Right-click on your control and select Make Type Def.

A new window will open with only your control inside. You can save this control and then use it everywhere. When you modify the typedef, all controls of this type will change as well.

Basically, you create your own type, like 'U8 numeric', 'boolean', or 'string', except yours can be the 'cluster of all data on my front panel' type, the 'all the actions my state machine can do' type, etc.

-

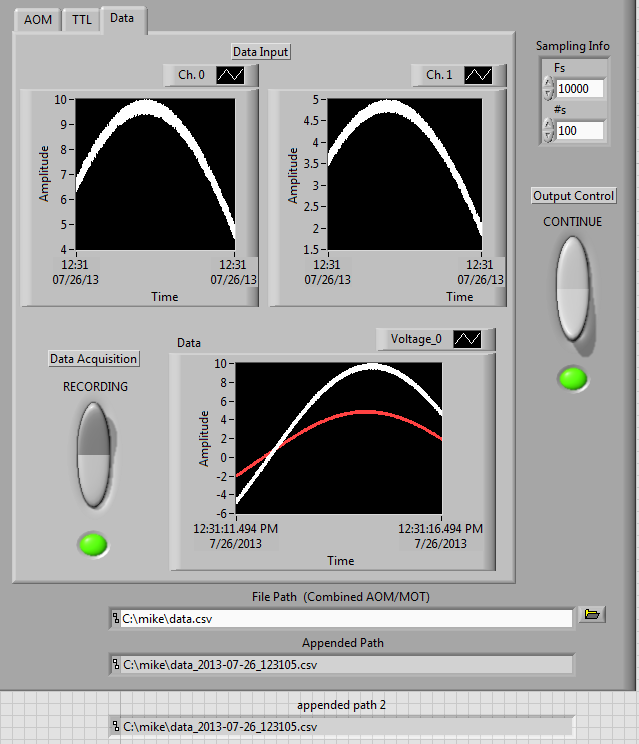

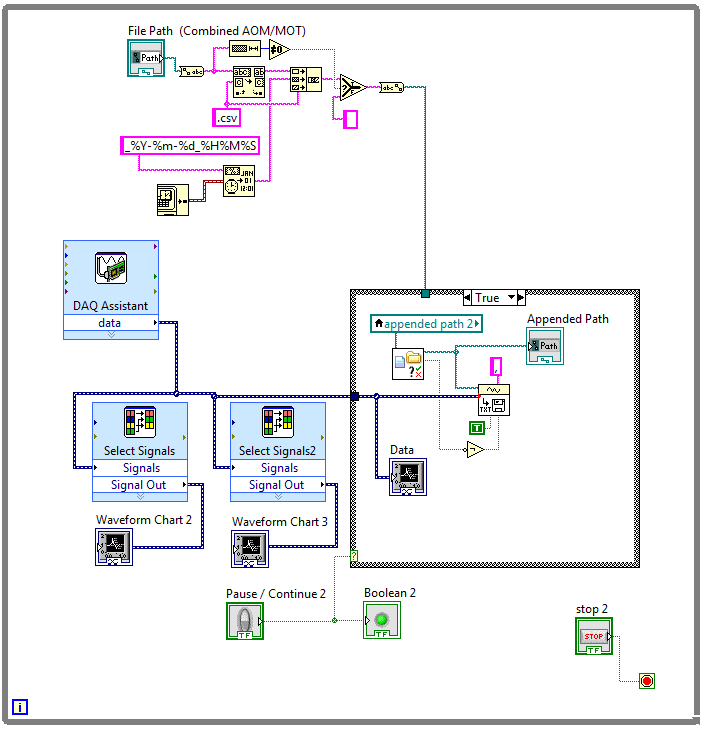

Trying to write data to a file, but getting error 200279

I have problems when writing data to a file. 10 seconds into the recording process, I get error 200279. I did some research on the subject, but I am unable to correct my code. I think I need to either increase the size of the buffer or, preferably I suppose, read the data more frequently. The way I save my file is this: before starting the VI, I assign a location and name for the file (e.g. data.csv). The date and time are appended to the file name when I start to record the data (e.g. data_07-26-13_122615.csv). If the file does not exist, the VI creates a new file, and then appends the data to this file after each iteration of the loop. The reason I did it this way was so I don't have to worry about running out of memory, but apparently my code is wrong.

I will include a copy of the faulty section of my code. Any help would be greatly appreciated.

Thank you.

Your problem is that writing to disk is slow. It is slow enough that it causes your DAQ buffer to overflow, which produces the error and the loss of data. What you need to do is implement a producer/consumer architecture. This puts the data acquisition and the disk logging in separate loops. The data acquisition loop can then run at the speed it needs to keep up with incoming samples, and the disk writing can run at whatever pace it can manage. You send data from the data acquisition loop to the logging loop using a queue.

You may also want to think about changing how you write to the file. Your VI is constantly opening and closing the file, which is a very slow process if you do it inside a loop.
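As a text-language illustration of the producer/consumer idea, here is a rough Python sketch. It is only an analogy to the LabVIEW pattern: the `acquire` function stands in for the DAQ read loop and generates made-up values, and all names are hypothetical. The acquisition side only puts samples on a queue, while the logging side opens the file once and writes at its own pace.

```python
import queue
import threading

SENTINEL = None  # marks the end of acquisition


def acquire(out_queue, n_samples):
    """Producer: stands in for the DAQ read loop (made-up data source)."""
    for i in range(n_samples):
        out_queue.put(i * 0.1)  # replace with a real hardware read
    out_queue.put(SENTINEL)


def log_to_file(in_queue, path):
    """Consumer: opens the file once, then writes as fast as the disk allows."""
    with open(path, "w") as f:
        while True:
            sample = in_queue.get()  # blocks until a sample is available
            if sample is SENTINEL:
                break
            f.write(f"{sample:.3f}\n")


def run(path, n_samples=1000):
    """Run acquisition in this thread and logging in a second thread."""
    q = queue.Queue()
    consumer = threading.Thread(target=log_to_file, args=(q, path))
    consumer.start()
    acquire(q, n_samples)
    consumer.join()
```

Because the queue buffers samples between the two sides, a slow disk write delays only the logger, not the acquisition.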

-

Writing 2D array data to a file, but the file is empty

Hello

I tried to write all the power meter readings to a file in two ways. I can see the output in the array indicator, but when I write with "Write To Spreadsheet File.vi" or "Write to Text File", the files are empty.

Please help me.

Try removing the "STOP" VI.

I suspect it stops the main VI before the save occurs.

As a second point: since you save data inside a while loop, be sure you append the data to the file rather than overwrite it each time.

Wire a TRUE constant to the 'append to file?' terminal.

Kind regards

Marco

-

Filtering the data sent to Write To Measurement File

Hi everyone, I was wondering how to implement a "filter" that prevents data from being written by the "Write To Measurement File" function. Say the incoming data is a 0 (DBL): how do I prevent it from being written?

Use a case structure. Put your Write To Measurement File inside a case structure, probably in the TRUE case.
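The same idea in a text language, as a small Python sketch: the `if` plays the role of the case structure, and the write only happens in the "true" branch. The function name `write_nonzero` and the one-value-per-line file layout are assumptions for illustration.

```python
def write_nonzero(values, path):
    """Append only the non-zero values to the file; zeros are skipped."""
    written = 0
    with open(path, "a") as f:
        for v in values:
            if v != 0.0:  # the "TRUE case": only here do we write
                f.write(f"{v}\n")
                written += 1
    return written
```

The returned count is just a convenience for checking how many values passed the filter.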

-

How can I write a snapshot of my data to a measurement file every 5 seconds?

Hello

I am trying to take a snapshot of my data stream once every 5 seconds and write it to an .lvm file. I have problems with the "Write To Measurement File" VI. The pace at which it writes data to the file seems to be dictated by the 'Samples to Read' parameter in the DAQ Assistant. I tried placing the 'Write To Measurement File' VI inside a case structure and triggering the case structure with an "Elapsed Time" VI. This only results in a 5-second delay before the 'Write To Measurement File' VI starts. Once the 'Write To Measurement File' VI has started, it writes at 20 times per second. Is there a way to change this or dictate how fast the 'Write To Measurement File' VI executes?

My reasons for slowing down the write rate are: 1) to reduce the space occupied by my data file; 2) to reduce CPU usage and disk access.

The reason I can't increase the value of 'Samples to Read' in the DAQ Assistant (to match my data-writing requirement) is that my VI would start to miss events and triggers.

I'm sure I can't be the only person who needs high-frequency data acquisition and low-frequency writing of data to disk. However, I can't see a straightforward way to do it.

The hardware I use is an NI USB-6008 DAQ, acquiring analog voltage at 100 Hz.

Thanks in advance for your help

Cheers

Kim

Dohhh!

The reset feature had not been set in the Elapsed Time VI!

Thank you very much!

Cheers

Kim
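For anyone hitting the same symptom, here is a small Python sketch of why the reset matters. It is an analogy to the elapsed-time check, not LabVIEW code; the class name `SnapshotTimer` and the injectable `clock` argument are assumptions for illustration. Without the line that resets `self.last`, the check would stay true on every pass once the first interval had elapsed, which matches the "5-second delay, then writes at full rate" behaviour described above.

```python
import time


class SnapshotTimer:
    """Reports True at most once per `interval`, then resets itself."""

    def __init__(self, interval, clock=time.monotonic):
        self.interval = interval
        self.clock = clock
        self.last = clock()

    def due(self):
        """Return True if at least `interval` has passed since the last hit."""
        now = self.clock()
        if now - self.last >= self.interval:
            self.last = now  # the reset step; without it every later call is True
            return True
        return False
```

In an acquisition loop you would keep reading at the full rate and call the file-writing code only when `timer.due()` returns True, for example with `SnapshotTimer(5.0)`.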

-

I use Windows XP as my operating system. I bought a new Huawei 3G data card. While surfing the internet, a pop-up is displayed from time to time:

"Windows - Delayed Write Failed: Windows was unable to save all the data for the file C:\Documents and settings\new\Local Settings\Application Data\Google\Chrome\User Data\Default\Session Storage\004285.log. The data has been lost. This error can be caused by a failure of your computer hardware."

After this the computer freezes and I have to restart it. Please help me fix this problem.

The error basically says that Windows tried to write something to your hard drive and for some reason the write operation did not finish. This could indicate a bad sector on your hard disk, a damaged disk, or a problem with your hardware.

Whenever a problem involving a disk read or write appears, my first approach is to run a disk check on the hard drive. Even if this is not your problem, it is a good routine maintenance step. Run the disk check with the "/R" or "Repair" option. Note that the actual disk check will be scheduled for the next reboot, will run before Windows loads completely, cannot be interrupted, and can take more than a few hours to run depending on the size of your hard drive, the amount and type of corruption found, and other factors. It is best to run it overnight or when you won't need your computer for several hours.

'How to perform disk error checking in Windows XP'

<http://support.Microsoft.com/kb/315265/en-us>

HTH,

JW

-

Delayed write failed: unable to save all the data for the file $Mft

Have a frustrating problem. Help is greatly appreciated. Learned the hard way and lost an important dwg file, which was unrecoverable. Bought a new Seagate external hard drive. Could not back up the entire system with the pre-installed software. Downloaded Acronis True Image Home 2011. Tried to back up the system. Received error messages when trying to back up: 'Delayed Write Failed; Reading sector; Windows was unable to save all the data for the file $Mft. The data has been lost.' Have tried many fixes. Unable to disable write caching (grayed out / unclickable). Tried regedit; 'EnableOplocks' is not listed to select. Attempted to run the Microsoft 'Fix it' and got the blue screen of death. Pulling my hair out. Suggestions appreciated.

I don't know where Microsoft 'Technical support engineers' get their information.

Write caching most certainly does apply to external hard drives, but it is usually disabled to prevent the sort of problem you are experiencing.

I've seen several posts reporting the same issue you have. All of these posts involved SATA drives. Is your Seagate an eSATA drive?

Is the disk recognized as an external drive in Device Manager? In Device Manager, go to the drive's properties dialog box and click the Policies tab. An external drive should have two options: "Optimize for quick removal" and "Optimize for performance". An internal hard drive shows the options grayed out (with 'performance' selected), but there should also be an "Enable write caching on the disk" checkbox under the second option.

What shows in your Policies tab?

If I understand correctly, "write-back" or "write-behind" caching is implemented by the disk hardware or its driver. If the option is not available on the drive's Properties > Policies tab, I would suggest contacting Seagate support.

-

Write To Measurement File with a For Loop using Set Dynamic Data Attributes

I looked and looked, but couldn't find a solution for this.

I currently have 15 different data points that I am trying to write to an Excel file. I have them all combined into an array and wired to the "Write To Measurement File" function. However, the column names are always "Untitled", "Untitled 1", etc. I then used the 'Set Dynamic Data Attributes' function, but for this I have to use 15 separate "Set Dynamic Data Attributes" functions. It was suggested that I use a loop with the 'Set Dynamic Data Attributes' function inside it, but I can't figure out how to do it.

I have several arrays consisting of 15 different values for 'Signal Index', 'Signal Name', and 'Unit', as well as a single 'Get Date/Time In Seconds' wired to 'Timestamp'. The problem is the error I get when I try to connect the output to the input of Write To Measurement File:

The source type is 1D array of Dynamic Data. The type of the sink is Dynamic Data.

How can I fix it? I have attached a picture of my setup; sorry if it is crude (I'm new to this!). Thank you!

That will get rid of the error, but it is not quite correct. What you need to do after that is right-click on the output or input tunnel and select 'Replace with Shift Register'. In addition, the array of values that you have wired to Signal Index is wrong. Arrays are 0-based. Just wire the iteration terminal there. And, finally, taking the size of the array and wiring it to the N terminal is silly. Don't wire anything to it.
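A loose Python analogy of the advice above, for readers more used to text languages. The dictionaries standing in for dynamic data and the function name `set_attributes` are made up for illustration and do not reflect the LabVIEW data type. The point is that one value is carried through the loop (the shift register), the loop counter starting at 0 serves as the signal index, and the loop count comes from the arrays being iterated rather than from a separately wired size.

```python
def set_attributes(signals, names, units):
    """Update the name and unit of each signal, one signal per iteration."""
    result = list(signals)  # carried through the loop, like a shift register
    # enumerate() counts from 0, matching 0-based array indexing;
    # zip() sets the number of iterations, like auto-indexed inputs.
    for i, (name, unit) in enumerate(zip(names, units)):
        result[i] = {**result[i], "name": name, "unit": unit}
    return result
```

For example, `set_attributes([{"data": [1.0]}, {"data": [2.0]}], ["Temp", "Pressure"], ["C", "bar"])` returns the two signals with their column names filled in instead of "Untitled".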

-

Writing Account, Custom, and data intersections (POV) to a file in HFM rules

Hi all

I'm trying to use write-to-file in HFM rules. I am able to get the Entity, Scenario, Year, Period, and timestamp to save to a file (see code below). Is it possible to get the Account, Custom, and data intersections written to a file as well, so I can do performance analysis? Basically, how can I get the whole POV into a log file and test the rules?

f.WriteLine txtStringToWrite & "-for:-" & HS.Entity.Member & "-" & HS.Scenario.Member & "-" & HS.Year.Member & "-" & HS.Period.Member & "" & Now()

Thanks in advance. Looking forward to hearing from you.

The Account, ICP, and Custom dimensions are not part of the point of view the way Entity, Period, Year, Scenario, and Value are. The only way you can write these dimensions to a file is to call them within an array, such as HS.OpenDataUnit, or some list. That is the only way to get the context for the intersections.

If you try to write data to a file, you'll end up with a file that is at least as large as the database itself, and it could be a very slow process. If that is your intention, you would be better off simply analyzing the database. Writing to a file should be used only for debugging.

-Chris

-

I have data in a basic generic text file format that must be converted to Excel spreadsheet format. The data is much longer than 65536 rows, and in my code I was not able to find a way to continue the data in the next column. Currently, the conversion is done manually and produces an Excel file with a total of 30-40 full columns of data. Any suggestions would be greatly appreciated.

Thank you

Darrick

Here is a possible solution to the (potential) problem: convert the data to an array of strings and clear the data before writing to the file.
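To make the "continue in the next column" step concrete, here is a short Python sketch. It is only an illustration of the wrapping logic, not the code from the reply; the function name `wrap_columns` and the empty-string padding are assumptions. It splits one long column into several columns of at most 65536 rows and returns the result row by row, ready to be written out as a spreadsheet.

```python
from itertools import zip_longest

MAX_ROWS = 65536  # row limit mentioned in the question


def wrap_columns(values, max_rows=MAX_ROWS):
    """Split one long column into several columns of at most max_rows rows."""
    columns = [values[i:i + max_rows] for i in range(0, len(values), max_rows)]
    # Transpose columns into rows; a short last column is padded with "".
    return [list(row) for row in zip_longest(*columns, fillvalue="")]
```

With `max_rows=3`, the values 0 to 6 become three rows: `[0, 3, 6]`, `[1, 4, ""]`, and `[2, 5, ""]`.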
