Nexus 1000v per cluster?

How many Nexus 1000v should I deploy? I have a cluster with 8 hosts running a pair of Nexus 1000v. If I set up a new cluster, do I need to deploy a new pair of Nexus 1000v for the new cluster?

VSMs handle control traffic only, not data. The VEM is installed on the compute hosts, and that is what handles switching.

Tags: VMware

Similar Questions

-

Nexus 1000v - a pair of VSMs per cluster?

Hello

I'll start migrating some of our clusters to the Nexus 1000v pretty soon. The environment consists of two data centers with at least 2 clusters in each DC. In total, we have 5 clusters in different locations.

I plan on deploying the N1Kv in pairs for HA. Do I need a pair of N1Kv per cluster, or can I use the same pair for different clusters?

Thank you

If this post was useful or solved your problem, please rate it and award points as you see fit. Thank you!

I would definitely go with multiple VSMs in an HA scenario. You mention different locations and several clusters per DC. Here's what I'd do.

First, a Nexus 1000V VSM gets tied to a VMware datacenter. You can have one Nexus 1000V manage multiple clusters as long as they are under the same VMware datacenter. You can add up to 64 ESX hosts to a single Nexus 1000V VSM.

So I would deploy one VSM HA pair per DC and have it manage multiple clusters. If your clusters have a large number of ESX hosts, it might make sense to use two independent Nexus 1000V installations per DC. When I say large, I mean 32 hosts per cluster.

When you say that the clusters are in several locations, I take that to mean different physical data centers. In that case I highly recommend that you install a Nexus 1000V at each location.
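As a rough planning aid, the sizing rule above can be sketched in Python. This is only an illustration: the 64-host limit is the figure quoted in this thread, while the function name and the inventory layout are made up for the example. It gives a lower bound per datacenter and does not try to keep each cluster on a single VSM.

```python
import math

MAX_HOSTS_PER_VSM = 64  # per-VSM host limit quoted in this thread


def vsm_pairs_needed(clusters_by_dc):
    """Return a lower bound on VSM HA pairs per datacenter.

    clusters_by_dc maps a datacenter name to a list of per-cluster host counts.
    A VSM is tied to one datacenter, so every DC needs at least one pair.
    """
    plan = {}
    for dc, host_counts in clusters_by_dc.items():
        total_hosts = sum(host_counts)
        plan[dc] = max(1, math.ceil(total_hosts / MAX_HOSTS_PER_VSM))
    return plan


# Hypothetical inventory: two datacenters, five clusters in total
inventory = {"DC-A": [8, 8, 16], "DC-B": [32, 40]}
print(vsm_pairs_needed(inventory))
```

With this made-up inventory, DC-A fits under one pair, while DC-B exceeds 64 hosts and would need a second, independent installation.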

Louis

-

Nexus 1000v, UCS, and Microsoft Network Load Balancing

Hi all

I have a client that is implementing a new Exchange 2010 environment. They have a requirement to configure load balancing for the Client Access servers. The environment consists of VMware vSphere running on top of Cisco UCS blades with the Nexus 1000v dvSwitch.

Everything I've read so far indicates that I must do the following:

1. Configure MS NLB in multicast mode (selecting the IGMP option).

2. Create a static ARP entry for the virtual cluster address on the router for the server subnet.

3. (maybe) Configure a static MAC table entry on the router for the server subnet.

4. (maybe) Disable IGMP snooping on the appropriate VLAN in the Nexus 1000v.

My questions are:

1. Is anyone successfully running a similar configuration?

2. Are there steps missing from the list above, or steps I shouldn't do?

3. If I disable IGMP snooping on the Nexus 1000v, should I also disable it on the UCS fabric interconnects and the router?

Thanks a lot for your time,

Aaron

Aaron,

Your steps above are correct; you need steps 1-4 for this to operate correctly. Normally people will create a separate VLAN for their NLB interfaces/subnet, to prevent unnecessary mcast frames from flooding the network.

To answer your questions:

(1) I have seen multiple customers run this configuration.

(2) Your steps are correct.

(3) You can't toggle IGMP snooping on UCS. It is enabled by default and is not a configurable option. There is no need to change anything within UCS regarding MS NLB with the above procedure. FYI - the ability to disable/enable IGMP snooping on UCS is slated for an upcoming release, 2.1.

This is the correct method until we have the option of configuring static multicast MAC entries on the Nexus 1000v. If this is a feature you'd like, please open a TAC case and request that bug CSCtb93725 be linked to your SR. This will give more "push" to our development team to prioritize this request.

Hopefully some other customers can share their experience.

Regards,

Robert

-

Design/implementation of Nexus 1000V

Hi team,

I have a premium partner who is an ATP on Data Center Unified Computing. He has posted this question; I hope you can help me provide a resolution.

I have questions about Nexus 1KV design/implementation:

-How do I migrate virtual switches other than vSwitch0 (each ESX server has 3 vSwitches, and the VSM installation wizard only migrates vSwitch0)? For example, the other vSwitches with other VLANs... Please tell me how...

-With VUM (VMware Update Manager), is it possible to install the VEM modules on the ESX servers? Or must I install the VEM manually on each ESX server?

-Assuming VUM installs all the VEM modules, is the VEM version (vib package) automatically compatible with the existing VMware build version?

-Do I need to create PACKET-MANAGEMENT-CONTROL port groups on ALL ESX servers before migrating to the Nexus 1000v, or is the VEM installation alone enough?

-According to the Cisco manuals the VSM can participate in VMotion, but how? What is the recommendation? When the primary VSM is moving, does the secondary VSM take control? What happens to connectivity for all the virtual machines?

-When there are two clusters in one VMware vCenter, how must I install/configure the VSM?

-For high availability, which is the best Nexus design choice, considering VMware features (FT, DRS, VMotion, Cluster)?

-How do I migrate an existing VMkernel iSCSI port group to the Nexus? What are the steps? The Cisco manual "Migration from VMware vSwitch to Cisco Nexus 1000V" shows how to generate the port profile, but how do I create the iSCSI target (IP address, username/password)? Where is it defined?

-Assuming the VEM licenses are not enough for all the ESX servers, what will happen to the connectivity of the virtual machines on hosts without VEM licenses? Can they work with VMware vSwitches?

I have to install the Nexus 1000V on a VMware platform with VDI, with multiple ESX servers, 3 vSwitches on each ESX server, several virtual machines running, two clusters defined with VMotion and DRS active, and central iSCSI storage.

I have several Cisco manuals on the Nexus, but they focus mainly on installation topics and do not cover migration options extensively. Do you have "success stories" or customer experiences of implementations involving migration to the Nexus?

Thank you in advance.

Jojo Santos

Cisco partner Helpline presales

Thanks for the questions, Jojo, but this type of 1000v question is better suited to the Nexus 1000v forum:

https://www.myciscocommunity.com/Community/products/nexus1000v

Answers inline. I suggest you go through the Getting Started guides to acquire a solid understanding of the basic concepts & operations prior to deployment.

jojsanto wrote:

Hi Team,

I have a premium partner who is an ATP on Data Center Unified Computing. He posted this question, hopefully you can help me provide resolution.

I have questions about nexus 1KV design/implementation:

-How do I migrate virtual switches other than vSwitch0 (each ESX server has 3 vSwitches, and the VSM installation wizard only migrates vSwitch0)? For example, the other vSwitches with other VLANs... please tell me how...

[Robert] After your initial installation you can easily migrate all VMs within the same vSwitch Port Group at the same time using the Network Migration Wizard. Simply go to Home - Inventory - Networking, right click on the 1000v DVS and select "Migrate Virtual Machine Networking..." Follow the wizard to select your Source (vSwitch Port Groups) & Destination DVS Port Profiles

-With VUM (VMware Update Manager), is it possible to install the VEM modules on the ESX servers? Or must I install the VEM manually on each ESX server?

[Robert] As per the Getting Started & Installation guides, you can use either VUM or manual installation method for VEM software install.

-Supposing VUM installs all the VEM modules, is the VEM version (vib package) automatically compatible with the existing VMware build version?

[Robert] Yes. Assuming VMware has added all the latest VEM software to their online repository, VUM will be able to pull down & install the correct one automatically.

-Do I need to create PACKET-MANAGEMENT-CONTROL port groups on ALL ESX servers before migrating to the Nexus 1000v, or is the VEM installation alone enough?

[Robert] If you're planning on keeping the 1000v VSM on vSwitches (rather than migrating itself to the 1000v) then you'll need the Control/Mgmt/Packet port groups on each host you ever plan on running/hosting the VSM on. If you create the VSM port group on the 1000v DVS, then they will automatically exist on all hosts that are part of the DVS.

-According to the Cisco manuals the VSM can participate in VMotion, but how? What is the recommendation? When the primary VSM is moving, does the secondary VSM take control? What happens to connectivity for all the virtual machines?

[Robert] Since a VMotion does not really impact connectivity for a significant amount of time, the VSM can be easily VMotioned around even if it's a single standalone deployment. Just like you can VMotion vCenter (which manages the actual task), you can also VMotion a standalone or redundant VSM without problems. No special considerations here other than the usual VMotion pre-reqs.

-When there are two clusters in one VMware vCenter, how must I install/configure the VSM?

[Robert] No different. The only consideration that changes "how" you install a VSM is a vCenter with multiple DataCenters. VEM hosts can only connect to a VSM that resides within the same DC. Different clusters are not a problem.

-For high availability, which is the best Nexus design choice, considering VMware features (FT, DRS, VMotion, Cluster)?

[Robert] There are multiple "Best Practice" designs which discuss this in great detail. I've attached a draft doc on this thread. A public one will be available in the coming month. One point to consider is that you do not need FT. FT is still maturing, and since you can deploy redundant VSMs at no additional cost, there's no need for it. For DRS you'll want to create a DRS Rule to avoid ever hosting the Primary & Secondary VSM on the same host.

-How do I migrate an existing VMkernel iSCSI port group to the Nexus? What are the steps? The Cisco manual "Migration from VMware vSwitch to Cisco Nexus 1000V" shows how to generate the port-profile, but how do I create the iSCSI target (IP address, user/password)? Where is it defined?

[Robert] You can migrate any VMKernel port from vCenter by selecting a host, going to Networking Configuration - DVS and selecting Manage Virtual Adapters - Migrate Existing Virtual Adapter. Then follow the wizard. Before you do so, create the corresponding vEth Port Profile on your 1000v, assign the appropriate VLAN, etc. All VMKernel IPs are set within vCenter; the 1000v is Layer 2 only, and we don't assign Layer 3 addresses to virtual ports (other than Mgmt). All the rest of the iSCSI configuration is done via vCenter - Storage Adapters as usual (for Targets, CHAP Authentication, etc.).

-Supposing the VEM licenses are not enough for all ESX servers, what will happen to the connectivity of the virtual machines on hosts without VEM licenses? Can they operate with VMware vSwitches?

[Robert] When a VEM comes online with the DVS, if there are not enough available licenses to license EVERY socket, the VEM will show as unlicensed. Without a license, the virtual ports will not come up. You should closely watch your licenses using the "show license usage" and "show license usage " for detailed allocation information. At any time a VEM host can still utilize a vSwitch, with or without 1000v licenses, assuming you still have adapters attached to the vSwitches as uplinks.
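The all-or-nothing behaviour described above (a VEM is licensed only if every one of its CPU sockets can get a license) can be modelled with a short Python sketch. The function, the host names and the socket counts are hypothetical; the real allocation is done by the VSM, so treat this purely as a way to reason about license counts.

```python
def license_vems(available_licenses, hosts):
    """Simulate per-socket licensing as hosts come online, in order.

    hosts is a list of (host_name, cpu_sockets) tuples. A host is licensed
    only if enough licenses remain to cover ALL of its sockets.
    """
    status = {}
    for name, sockets in hosts:
        if sockets <= available_licenses:
            available_licenses -= sockets
            status[name] = "licensed"
        else:
            # Not enough licenses for every socket: virtual ports stay down
            status[name] = "unlicensed"
    return status


# Hypothetical example: 4 licenses, three dual-socket hosts
print(license_vems(4, [("esx1", 2), ("esx2", 2), ("esx3", 2)]))
```

In this made-up case the third host would come up unlicensed, and its VMs would need to stay on a standard vSwitch until more licenses are added.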

I must install the Nexus 1000V on a VMware platform with VDI, with several ESX servers, 3 vSwitches on each ESX server, several virtual machines running, two clusters defined with VMotion and DRS active, and central iSCSI storage.

I have several Cisco manuals about the Nexus, but they focus mainly on installation topics and do not cover migration options extensively. Do you have "success stories" or customer experiences of implementations involving migration to the Nexus?

[Robert] Have a good look around the Nexus 1000v community Forum. Lots of stories and information you may find helpful.

Good luck!

-

Hello

Thanks for reading.

I have a virtual machine (VM1) connected to a Nexus 1000V distributed switch. The 1000V provides a connection to our DMZ (physically, an interface on our Cisco ASA 5520), which has 3 other virtual machines that are successfully up in the DMZ. The problem is that a SHOW ARP on the ASA shows the other VMs' MAC addresses but not VM1's.

The properties for all the VMs (including VM1) participating in the DMZ are the same:

- Network label

- VLAN ID

- Port group

- State - link up

- DirectPath I/O - inactive ("DirectPath I/O has been explicitly disabled for this port")

The only significant difference between VM1 and the others is that they are multihomed and have one foot in our private network. I don't think that VM1's lack of a private IP is the source of the problem. All the virtual machines are recognized as directly connected to the ASA (except VM1).

Have you ever seen this kind of thing before?

Thanks again for reading!

Bob

The systems team:

- Rebuilt the virtual machine

- Moved it to another cluster

- Configured it for the DMZ interface

Something in what they did made the VM visible to the FW.

-

Update Virtual Center 5.0 to 5.1 (using Cisco Nexus 1000V)

Need advice on upgrading production please.

current environment

Running Virtual Center 5.0 as a virtual machine, connecting to an Oracle DB VM

3 clusters

Cluster 1: 8 IBM blades running ESXi 5.0, Cluster 2: 5 IBM 3850 X5

2 Cisco Nexus 1000v, which only cluster 1 uses.

I know the procedure for upgrading to 5.1:

1. Create the SSO DB, install SSO

2. Upgrade VC to 5.1

3. Install the Web Client, set up AD authentication

Question:

Will I have problems with the Nexus 1000v? I hope the upgrade will treat it as it would a distributed switch and I should have no problem.

It will treat the Nexus as a dVS.

-

Hey guys,

Hope this is the right place to post.

I'm currently working on a design to put in an ESXi 5 with Nexus 1000v solution and a back end of a Cisco UCS 5180.

I have a few questions about what people do in the real world with this type of setup:

In order to use the Nexus 1000v, do I need vCenter? The reason I ask is that none was included on the initial kit list. I would want vCenter for HA and vMotion, but the client wants to know whether we can make do with what we have for now and license and implement vCenter at a later date.

I've done some reading about the Nexus 1000v, as I've never designed a solution with it before. Cisco recommends that 2 VSMs be implemented for redundancy. Is this correct? Do I need a license for each VSM? I also suppose that to meet this best practice I need HA and vMotion and, therefore, vCenter?

The virtual machines for the VSMs, can they sit inside the cluster?

Thanks in advance for any help.

In order to use the Nexus 1000v, do I need vCenter?

Yes - the Nexus 1000v is a type of distributed virtual switch and it requires vCenter.

I've done some reading about the Nexus 1000v, as I've never designed a solution with it before. Cisco recommends that 2 VSMs be implemented for redundancy. Is this correct? Do I need a license for each VSM? I also suppose that to meet this best practice I need HA and vMotion and, therefore, vCenter?

I'm not sure about the VSMs, but yes, HA and vMotion are required as part of best practices.

The virtual machines for the VSMs, can they sit inside the cluster?

Yes, they can exist within the cluster.

-

Separate 1000v VSM cluster with no VEM?

I was reading some Cisco Nexus 1000v documentation, and it seems best practice recommends against running VSMs on ESX hosts whose VEM they manage. For example, the environment could be a cluster of 4 ESX nodes with two VSMs, where each node has a VEM. If that is bad juju, it seems that I have two main options:

(1) Implement a cluster of 2 ESX nodes that uses only standard vSwitches and hosts the two VSMs. This cluster would have full network connectivity to the ESXi hosts that have the VEM and make full use of the 1000v features. All the other production VMs would go on the 1000v-enabled cluster.

(2) Buy a pair of Cisco Nexus 1010s, which would host the redundant VSMs.

If the VSM/VEM have a tight dependency on vCenter to work, then would it probably make sense to host vCenter/SQL and an AD server on the 2-node VSM cluster so that it is self-sufficient? Basically, the 2-node VSM cluster is the control center for the management of your network and virtual machines, which manages the main production VM clusters?

There is absolutely no problem with your VSMs running on your production cluster. Do you really want to burn two hosts just to host your VSMs? If your VMware infrastructure already dedicates a cluster to management virtual machines and appliances, this might make sense; otherwise let them run with the rest of your virtual machines. VSMs come preconfigured with CPU/memory reservations, so performance should not be a problem.

I've seen hundreds of customers running like this. It has been a fully supported configuration since version SV1.2.

About the Nexus 1010, yes, they are great, but only because they offer features such as NAM and other service blades on the horizon. I'd rather take the efficiency and mobility of a virtual Sup (VMotion) than have to dedicate two more servers that consume power.

Kind regards

Robert

-

Nexus 1000v - this config makes sense?

Hello

I started to deploy the Nexus 1000v on a 6-host cluster, all running vSphere 4.1 (vCenter and ESXi). The basic configuration, licensing, etc. is already completed, and so far no problems.

My doubts are with respect to the actual creation of the system uplinks, port profiles, etc. Basically, I want to make sure I don't make any mistakes in the way I want to set this up.

My current setup for each host is like this with standard vSwitches:

vSwitch0: 2 NICs active/active, with management and vMotion vmkernel ports.

vSwitch1: 2 NICs active/active, dedicated to a storage vmkernel port.

vSwitch2: 2 NICs active/active for virtual machine traffic.

I thought I would translate that to the Nexus 1000v like this:

System-uplink1 with 2 NICs, where I put the management and vMotion vmk ports

System-uplink2 with 2 NICs for the storage vmk

System-uplink3 with 2 NICs for virtual machine traffic.

These three system uplinks are global, right? Or do I set up three unique system uplinks for each host? I thought that making the 3 uplinks global would make things a lot easier, because if I change something in an uplink, it will be pushed to all 6 hosts.

Also, I read somewhere that if I use 2 NICs per system uplink, then I need to set up a port channel on our physical switches?

At the moment the VSM has 3 different VLANs for management, control and packet. I want to migrate those 3 port groups from the standard switch to the N1Kv itself.

Also, when I migrated the management port to the N1Kv, the host complained that there was no management redundancy, even though uplink1, where the mgmt port profile is attached, has 2 NICs added to it.

What do you guys think? In addition, any other best practices are much appreciated.

Thanks in advance,

Yes, uplink port-profiles are global.

What you propose works, with one caveat. You cannot overlap a VLAN between these uplinks. So if your management uplink uses VLAN 100 and your VM data uplink also uses VLAN 100, that will cause problems.
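Before creating the uplink port profiles, the no-overlap rule can be checked with a few lines of Python. This is just a sketch: the profile names and VLAN lists below are invented for the example and are not read from any VSM.

```python
from itertools import combinations


def overlapping_vlans(uplinks):
    """Return {(profile_a, profile_b): [shared VLAN IDs]} for every clash.

    uplinks maps an uplink port-profile name to the VLAN IDs it carries.
    """
    conflicts = {}
    for (name_a, vlans_a), (name_b, vlans_b) in combinations(uplinks.items(), 2):
        shared = set(vlans_a) & set(vlans_b)
        if shared:
            conflicts[(name_a, name_b)] = sorted(shared)
    return conflicts


# Hypothetical plan: VLAN 100 appears on both the mgmt and the VM data uplink
plan = {
    "system-uplink1": [100, 101],   # management + vMotion
    "system-uplink2": [200],        # storage
    "system-uplink3": [100, 300],   # VM traffic
}
print(overlapping_vlans(plan))
```

An empty result means no VLAN is carried by more than one uplink profile; any entry in the result points at the pair of profiles to fix.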

Louis

-

Configuring network DMZ, internal using Nexus 1000v

Hello peoples, this is my first post in the forums.

I am trying to build a design for my customer with the following configuration:

4 x ESXi hosts on DL380 G7, each with 12 GB of RAM, 2 x 6-core X5650 CPUs, 8 x 1 Gb NICs

2 x left iSCSI SAN.

The hardware components and several design features, over which I have no control, have already been decided; I can't change them or add additional equipment. Here are my constraints:

(1) The solution will use shared Cisco network switches for internal, external and iSCSI traffic.

(2) The solution uses a single cluster containing all four hosts.

(3) I must install and configure a Nexus 1000v in the environment (something I'm not keen on, simply because I have never done it before). The customer was sold on the concept of a cheap, shared hardware solution because they were told that using an N1Kv would solve all the security problems.

Before I learned that I would have to use an N1Kv, my solution looked like the attached JPG. The solution used four distributed virtual switches, and examples of how they were going to be configured are attached. Details and IP addresses are examples.

My questions are:

(1) What procedure should I use to set up the environment? Should I build the dvSwitches as described and then export them to the N1Kv?

(2) How should I document this solution? Generally in my documentation I would have a section explaining each switch, how it is configured, vital details, port groups, etc. But all of that is removed and replaced with uplink ports or something, is it not?

(3) Should I be aiming to use a different switch per dvSwitch, or can I trunk the lot and create different port groups? Is that safe? Is there a standard? Yes, I have read the white papers on the DMZ and the Nexus 1000v.

(4) Is my configuration safe and effective? Are there ways to improve it?

All other comments and suggestions are welcome.

Hello and welcome to the forums,

(1) What procedure should I use to set up the environment? Should I build the dvSwitches as described and then export them to the N1Kv?

The N1KV replaces the dvSwitch, but there is only ONE N1KV where there may be many dvSwitches, so the N1KV would use the same uplinks globally.

(2) How should I document this solution? Generally in my documentation I would have a section explaining each switch, how it is configured, vital details, port groups, etc. But all of that is removed and replaced with uplink ports or something, is it not?

If you use the N1KV, you uplink the pSwitches to the N1KV.

If you use a dvSwitch/vSwitch, you uplink the pSwitches to the individual dvSwitch/vSwitch in use.

(3) Should I be aiming to use a different switch per dvSwitch, or can I trunk the lot and create different port groups? Is that safe? Is there a standard? Yes, I have read the white papers on the DMZ and the Nexus 1000v.

There is no standard, and yes, in many cases it can be considered secure. If your existing physical network relies on VLANs and trusts the Layer 2 pSwitches, then you can do the exact same thing in the virtual environment and be as safe as your physical environment.

However, if you need separation at the pSwitch layer, then you must maintain separate vSwitches to get that same separation. Take a look at this post http://www.virtualizationpractice.com/blog/?p=4284 on the subject.

(4) Is my configuration safe and effective? Are there ways to improve it?

There are always ways to improve. I would start by looking into defense in depth at the vNIC and edge layers within your vNetwork.

Best regards

Edward L. Haletky, VMware Communities User Moderator, VMware vExpert 2009, 2010

Now available: "VMware vSphere (TM) and Virtual Infrastructure Security" http://www.astroarch.com/wiki/index.php/VMware_Virtual_Infrastructure_Security

Also available: "VMWare ESX Server in the Enterprise" http://www.astroarch.com/wiki/index.php/VMWare_ESX_Server_in_the_Enterprise

Blogs: Virtualization Practice http://www.virtualizationpractice.com | Blue Gears http://www.astroarch.com/blog | TechTarget http://itknowledgeexchange.techtarget.com/virtualization-pro/ | Network World http://www.networkworld.com/community/haletky

Podcast: Virtualization Security Round Table Podcast http://www.astroarch.com/wiki/index.php/Virtualization_Security_Round_Table_Podcast | Twitter: Texiwill http://www.twitter.com/Texiwill

-

I'm working on deploying the Cisco Nexus 1000v to our ESX cluster. I have read the Cisco "Getting Started Guide" and the "Installation Guide", but the guides are generalized and obviously do not answer my questions, which are specific to our architecture.

This comment in the Cisco "Getting Started Guide" makes it sound like you can't uplink to several switches from an individual ESX host:

"The server administrator must not assign more than one uplink on the same VLAN without port channels. Assigning more than one uplink on the same host is not supported for the following:

A profile without port channels.

Port profiles that share one or more VLANs."

Given this comment, is it possible to port-channel 2 NICs on one side of the link (the ESX host side) and have each go to a separate upstream switch? I am trying to create redundancy for the ESX host using 2 switches, but this comment sounds like I need a port channel on the ESX side to associate the same VLANs with both interfaces. How do you then handle each link on the upstream switch side? I don't think you can add them to a port channel on that side of the uplink, as the port channel protocol will not negotiate properly and will show one side down on the ESX/VEM side.

Am I overcomplicating this? Thank you.

Not a regular port channel, but it is possible to port-channel to different switches using vPC MAC pinning mode. On the upstream switches, make sure that the ports are configured the same: same speed, switchport config, VLANs, etc. (but no port channel).

On the VSM, create a single port profile of type ethernet with the following channel-group command:

port-profile type ethernet Uplink-VPC

vmware port-group

switchport mode trunk

channel-group auto mode on mac-pinning

no shutdown

system vlan 2,10

state enabled

What that will do is create a port channel on the N1KV only. Your ESX host will get redundancy, but your load-balancing algorithm will be a simple round robin of the VMs. If you want to pin specific traffic to a particular uplink, you can add the "pinning id" command to your vEthernet port profiles.

To see the pinning, you can run

module vem x execute vemcmd show port

n1000v-MV# module vem 5 execute vemcmd show port

  LTL   VSM Port  Admin  Link  State  PC-LTL  SGID  Vem Port
   18     Eth5/2     UP    UP    FWD     305     1  vmnic1
   19     Eth5/3     UP    UP    FWD     305     2  vmnic2
   49      Veth1     UP    UP    FWD       0     1  vm1-3.eth0
   50      Veth3     UP    UP    FWD       0     2  linux-4.eth0
  305        Po5     UP    UP    FWD       0

The key is the SGID column. vmnic1 is SGID 1 and vmnic2 is SGID 2. The VM vm1-3 is pinned to SGID 1 and linux-4 is pinned to SGID 2.
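To make the SGID matching explicit, here is a small Python sketch that pairs each vEthernet port with the physical NIC sharing its SGID. The row tuples are typed in by hand from the output above (VSM port, SGID, VEM port); the function name is made up, and nothing here talks to a real VEM.

```python
def pinning_map(rows):
    """Map each VM port to the uplink NIC that has the same SGID.

    rows is a list of (vsm_port, sgid, vem_port) tuples. Ports whose VSM
    name starts with "Eth" are uplinks; "Veth" ports belong to VMs.
    """
    uplink_by_sgid = {
        sgid: vem_port for vsm_port, sgid, vem_port in rows
        if vsm_port.startswith("Eth")
    }
    return {
        vem_port: uplink_by_sgid.get(sgid)
        for vsm_port, sgid, vem_port in rows
        if vsm_port.startswith("Veth")
    }


# Rows copied from the example output in this thread
rows = [
    ("Eth5/2", 1, "vmnic1"),
    ("Eth5/3", 2, "vmnic2"),
    ("Veth1", 1, "vm1-3.eth0"),
    ("Veth3", 2, "linux-4.eth0"),
]
print(pinning_map(rows))
```

For the rows above this pairs vm1-3.eth0 with vmnic1 and linux-4.eth0 with vmnic2, matching the explanation in the post.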

You can kill one link and the traffic should fail over to the other.

Louis

-

Question about Cisco Nexus 1000V licensing

Can someone shed some light on how Cisco Nexus 1000V licensing works?

I have a cluster of 8 hosts, each with 4 hexacore processors, on ESX 3.5.

Good afternoon Romeu.

The Cisco Nexus feature is licensed separately, and you can only use it with the VMware vSphere Enterprise Plus edition. The price for Cisco Nexus licensing is $695.00 per processor.
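As a quick worked example, the per-processor price quoted above can be applied to the cluster described in the question (8 hosts with 4 processors each). This Python sketch only does that arithmetic; it assumes one license per physical processor and ignores discounts, support contracts and the vSphere licenses themselves.

```python
PRICE_PER_PROCESSOR_USD = 695.00  # price quoted in this thread


def nexus_license_cost(hosts, processors_per_host):
    """Return (license_count, total_cost) assuming one license per processor."""
    licenses = hosts * processors_per_host
    return licenses, licenses * PRICE_PER_PROCESSOR_USD


licenses, cost = nexus_license_cost(hosts=8, processors_per_host=4)
print(f"{licenses} licenses, ${cost:,.2f}")
```

Under these assumptions the cluster needs 32 licenses; the number of cores per processor does not enter the calculation.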

For more information, you can visit the product site:

http://www.VMware.com/products/Cisco-Nexus-1000V/

See the comparison between the Nexus features and other solutions such as the ESX 3.5 vSwitch:

http://www.VMware.com/products/vNetwork-distributed-switch/features.html

I hope this helped.

Regards,

Brahell

-

What does the Nexus 1000v version number mean?

Can anybody explain the long Nexus 1000v version number, for example 5.2(1)SV3(1.15)?

And what does SV mean in the version number?

Thank you

SV is the abbreviation of "Switched VMware".

See below for a detailed explanation:

http://www.Cisco.com/c/en/us/about/Security-Center/iOS-NX-OS-reference-g...

Cisco NX-OS Software numbering

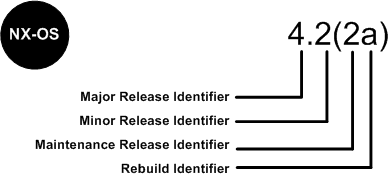

Cisco NX-OS Software is a data-center-class operating system that provides high availability through a modular design. Cisco NX-OS Software is based on Cisco MDS 9000 SAN-OS Software, and it supports the Cisco Nexus series switches and the Cisco MDS 9000 series multilayer switches. Cisco NX-OS Software contains a kickstart image and a system image; both images contain a major release identifier, a minor release identifier and a maintenance release identifier, and they may also contain a rebuild identifier, which may also be referred to as a support patch. (See Figure 6.)

Cisco NX-OS Software for the Cisco Nexus 7000 Series and MDS 9000 Series switches uses the numbering scheme that is illustrated in Figure 6.

Figure 6. Cisco NX-OS Software numbering for Cisco Nexus 7000 and MDS 9000 Series switches

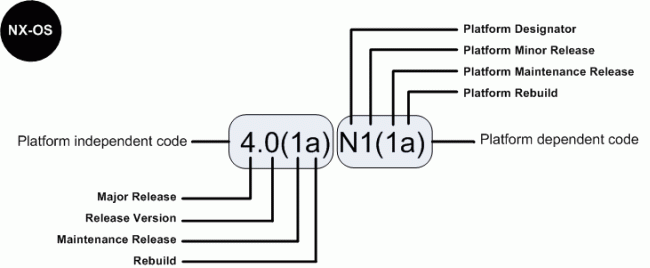

For the other members of the family, Cisco NX-OS Software uses a combination of platform-independent and platform-dependent schemes, as shown in Figure 6a.

Figure 6a. Cisco NX-OS Software numbering for Cisco Nexus 4000 and 5000 Series switches and Nexus 1000 virtual switches

The platform designator is N for the Nexus 5000 Series switches, E for the Nexus 4000 Series switches and S for the Nexus 1000 Series switches. In addition, the Nexus 1000 virtual switch uses a two-letter platform designator where the second letter indicates the hypervisor vendor that the virtual switch is compatible with, for example V for VMware. Features and fixes that are in the platform-independent code are also present in the platform-dependent release. In Figure 6a above, the bug fixes in Cisco NX-OS Software Release 4.0(1a) are present in Release 4.0(1a)N1(1a).
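To see how a string such as 5.2(1)SV3(1.15) breaks down under the scheme described above, a regular expression is enough. The Python sketch below is an illustration only: the field names (major, minor, maintenance, platform and so on) are labels chosen for this example, not official Cisco terminology, and the pattern covers only the formats quoted in this thread.

```python
import re

# platform-independent part: major.minor(maintenance)
# optional platform-dependent part: designator + number + (release)
VERSION_RE = re.compile(
    r"^(?P<major>\d+)\.(?P<minor>\d+)\((?P<maintenance>\d+[a-z]?)\)"
    r"(?:(?P<platform>[A-Z]+)(?P<platform_major>\d+)"
    r"\((?P<platform_minor>[\w.]+)\))?$"
)


def parse_nxos_version(text):
    """Split an NX-OS style version string into named parts."""
    match = VERSION_RE.match(text.replace(" ", ""))
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return match.groupdict()


print(parse_nxos_version("5.2 (1) SV3 (1.15)"))
print(parse_nxos_version("4.0(1a)N1(1a)"))
```

For the first string the platform part comes out as "SV", that is S for the Nexus 1000 series plus V for VMware, which matches the explanation quoted above.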

-

Nexus 1000V loop prevention

Hello

I wonder if there is a mechanism that I can use to protect a network against an L2 loop on the virtual server side in a VMware environment with the Nexus 1000V.

I know the Nexus 1000V can prevent loops on the external links, but I can find no information on whether there are features that can prevent a loop caused by a bridge configured at the OS level on a VMware virtual server.

Thank you in advance for an answer.

Regards

Lukas

Hi Lukas.

To avoid loops, the N1KV does not pass traffic between physical network cards, and it also silently drops traffic between vNICs that is bridged by the operating system.

http://www.Cisco.com/en/us/prod/collateral/switches/ps9441/ps9902/guide_c07-556626.html#wp9000156

No explicit configuration is needed on the N1KV.

Padma

-

Cisco Nexus 1000V Series Virtual Supervisor Module placement in the Cisco Unified Computing System

Hi all

I read a Cisco article entitled "Best Practices in Deploying Cisco Nexus 1000V Series Switches on Cisco UCS B and C Series Cisco UCS Manager Servers": http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps9902/white_paper_c11-558242.html

A lot of excellent information, but the section that intrigues me has to do with the placement of the VSM in the UCS. The article lists 4 options in order of preference, but does not provide details or the reasons underlying the recommendations. The options are the following:

============================================================================================================================================================

Option 1: VSM external to the Cisco Unified Computing System on the Cisco Nexus 1010

In this scenario, management of the virtual environment is accomplished in a manner identical to existing non-virtualized environments. With multiple VSM instances on the Nexus 1010, multiple vCenter data centers can be supported.

============================================================================================================================================================

Option 2: VSM outside the Cisco Unified Computing System on the Cisco Nexus 1000V series VEM

This model centralizes the management of the virtual infrastructure and has proved to be very stable...

============================================================================================================================================================

Option 3: VSM outside the Cisco Unified Computing System on the VMware vSwitch

This model isolates the managed devices, and it migrates easily to the Cisco Nexus 1010 Virtual Services Appliance model. A possible concern here is the management and operational model of the network links between the VSM and VEM devices.

============================================================================================================================================================

Option 4: VSM inside the Cisco Unified Computing System on the VMware vSwitch

This model was also stable in test deployments. A possible concern here is the management and operational model of the network links between the VSM and VEM devices, and having duplicate switching infrastructures within your Cisco Unified Computing System.

============================================================================================================================================================

As a beginner with both the Nexus 1000V and UCS, I hope someone can help me understand the configuration of these options and, equally important, provide a more detailed explanation of each option and the reasoning behind the preferences (advantages and disadvantages).

Thank you

Pradeep

No, they are different products. vASA will be a virtual version of our ASA device.

The ASA is a full-featured firewall.
