Split a large data file

Hello:

I have a large .dat file that contains multiple groups of data. I tried the Import Wizard, but it can only read all of the data channels (columns) at once. How can I create a DataPlugin that is capable of breaking a large file into several groups of data?

Example Structure:

Comments and header information

Group info

Group info

Group info

Channels

Data...

Group info

Group info

Group info

Channels

Data...

and it repeats.

My goal in separating the groups is to import each group of data as a separate sheet in an Excel file.

Hello stfung,

Please find attached a draft of the DataPlugin. Download the "URI" file to your computer and then double-click it. This will install a DataPlugin called "ModTest-text file".

Most of the plugin handles the metadata; reading the signal data into groups is the smallest part.

If you are interested, that part of the script is in a function called "ProcessSignals(oFile,oGroup)".

Please let me know if the plugin works for you.

Andreas
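Andreas's plugin is a DIAdem DataPlugin script and is not reproduced in the thread. Purely to illustrate the grouping idea (this is not the plugin's method), here is a minimal Python sketch. It assumes a hypothetical layout: each group begins with one or more lines starting with "Group info", then a single "Channels" line listing whitespace-separated channel names, then whitespace-separated numeric rows. The names `split_groups`, `group_to_csv`, and the prefixes are illustrative; adjust them to the real file.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Group:
    info: list = field(default_factory=list)      # "Group info" lines for this group
    channels: list = field(default_factory=list)  # channel (column) names
    rows: list = field(default_factory=list)      # numeric data rows


def split_groups(lines, info_prefix="Group info", channel_prefix="Channels"):
    """Split a text file's lines into groups (hypothetical layout, see above).

    Lines before the first group (comments, file header) are skipped.
    """
    groups = []
    current = None
    seen_data = False
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith(info_prefix):
            # A group-info line after data rows means a new group has started.
            if current is None or seen_data:
                current = Group()
                groups.append(current)
                seen_data = False
            current.info.append(line)
        elif current is None:
            continue  # file-level comments/header before the first group
        elif line.startswith(channel_prefix):
            current.channels = line.split()[1:]
        else:
            current.rows.append([float(v) for v in line.split()])
            seen_data = True
    return groups


def group_to_csv(group):
    """Render one group as CSV text: a header row of channel names, then the data."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(group.channels)
    writer.writerows(group.rows)
    return buf.getvalue()
```

Each group's CSV text can be saved to its own file; putting the groups on separate sheets of a single .xlsx workbook would need a third-party library such as openpyxl, which this sketch does not use.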

Tags: NI Software

Similar Questions

-

How to split a large data file

Hi people,

I have a problem here with Oracle 10g on Windows 2003 (32-bit). We have a 21 GB datafile (.dbf) that we are not able to copy during a cold backup; the OS throws the error below. We are working with the OS vendor on this. Please let me know the best way to divide data files in Oracle.

Operating system error: insufficient system resources exist to complete the requested service.

Thank you

Rambeau

Hello

If the data file is 21 GB and you want to get rid of it, I suggest creating a new tablespace with fixed-size data files, moving all the objects from the old tablespace into the new one, dropping the old tablespace, and then renaming the new tablespace to the old name.
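The steps in the reply can be scripted. The Python sketch below only generates SQL text for review; it assumes you have already collected the tables and indexes in the old tablespace (for example from DBA_SEGMENTS) and chosen fixed-size data file paths. The function name, input shape, and paths are hypothetical. It handles only plain tables and indexes, not LOB segments, partitions, or index-organized tables, and the tables must not be in use during the move.

```python
def relocation_script(old_ts, new_ts, segments, datafiles):
    """Return SQL statements (as strings) for moving objects to a new tablespace.

    segments:  list of (owner, segment_name, segment_type) tuples, where
               segment_type is "TABLE" or "INDEX" (hypothetical input shape).
    datafiles: list of (path, size_in_mb) for the new tablespace's fixed-size files.
    """
    first_path, first_size = datafiles[0]
    stmts = ["CREATE TABLESPACE %s DATAFILE '%s' SIZE %dM AUTOEXTEND OFF;"
             % (new_ts, first_path, first_size)]
    for path, size in datafiles[1:]:
        stmts.append("ALTER TABLESPACE %s ADD DATAFILE '%s' SIZE %dM AUTOEXTEND OFF;"
                     % (new_ts, path, size))
    # Move tables first: a table move leaves its indexes UNUSABLE,
    # so the index rebuilds come afterwards.
    for owner, name, kind in segments:
        if kind == "TABLE":
            stmts.append("ALTER TABLE %s.%s MOVE TABLESPACE %s;" % (owner, name, new_ts))
    for owner, name, kind in segments:
        if kind == "INDEX":
            stmts.append("ALTER INDEX %s.%s REBUILD TABLESPACE %s;" % (owner, name, new_ts))
    # A plain DROP fails if anything is still left in the old tablespace, which acts
    # as a safety check. Unless Oracle-managed files are in use, the old .dbf file
    # then has to be deleted at the OS level.
    stmts.append("DROP TABLESPACE %s;" % old_ts)
    stmts.append("ALTER TABLESPACE %s RENAME TO %s;" % (new_ts, old_ts))
    return stmts
```

Generating the statements instead of executing them keeps a human review step before anything is moved or dropped.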

-

How to install a large data file?

Does anyone know how I can install a large binary data file on a BlackBerry during the installation of an application?

My application needs an 8 MB data file.

I tried to add the file to my BlackBerry project in the Eclipse environment.

But the compiler could not generate an executable file; it failed with the following message:

"Unrecoverable internal error: java.lang.NullPointerException. CAP run for the project xxxx"

So I tested with a small binary file. This time, the compiler generated a .cod file, but javaloader failed to load the application, with this message:

"Error: file is not a valid Java code file."

When I tried with an even smaller file, it loaded, but the program failed to run, with this error:

"Error starting xxx: Module 'xxx' has verification error at offset 42b5 (codfile version 78) 3135."

Is it possible to include large binary data files in the .cod file?

And what is the best practice for dealing with such large data files?

I hope to get a useful answer to my question.

Thanks in advance for your answer.

Kim.

I finally managed to include the large data file using library projects.

I divided the data file into 2 separate files and then added each file to its own library project.

Each library project holds about 4 MB of the data file.

So I have to install 3 .cod files.

But in any case, it works fine. And I don't think there will be any problem, because I use the library projects only the first time.

Peter, thank you very much for your support...

Kim
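Kim's workaround amounts to cutting one binary file into fixed-size pieces and packaging each piece separately. Below is a minimal desktop-side Python sketch of the split, plus a helper that concatenates the pieces in order to confirm the original bytes come back; on the device, the same in-order concatenation would have to be done in Java when the resources are read. The function names and the `.partN` naming scheme are assumptions.

```python
import os


def split_file(path, chunk_size, out_dir):
    """Write consecutive chunk_size-byte pieces of `path` into out_dir; return their paths in order."""
    parts = []
    base = os.path.basename(path)
    with open(path, "rb") as src:
        while True:
            data = src.read(chunk_size)
            if not data:  # end of file
                break
            part = os.path.join(out_dir, "%s.part%d" % (base, len(parts)))
            with open(part, "wb") as dst:
                dst.write(data)
            parts.append(part)
    return parts


def join_parts(parts):
    """Concatenate the pieces in order; the result should equal the original file."""
    chunks = []
    for p in parts:
        with open(p, "rb") as f:
            chunks.append(f.read())
    return b"".join(chunks)
```

For an 8 MB file, a `chunk_size` of `4 * 1024 * 1024` gives two pieces of roughly 4 MB, matching the two library projects.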

-

Hi can anyone help please?

I just noticed that one of our databases contains two large data files (bigfile = NO), sized as follows:

Tablespace 1: 3200 MB, autoextensible to a max size of 4000 MB

Tablespace 2: 3400 MB, can grow to a max size of 5000 MB

They are meant to be small files (bigfile = NO). Do you think these large sizes could cause performance problems in the database?

Thank you

Do you think these larger sizes could cause performance problems in the database?

No.

-

How to split a large video file into several episodes/files?

Hi all

I have a 52-minute video that I need to divide into 3 separate files as I edit it (so I can upload it to YouTube as 3 separate episodes). I know how to split the layers and do all the basic editing, etc., but I'm stumped on how to turn those split layers into separate files.

Any help on this is much appreciated.

Thanks in advance

If you are just splitting a video, use Premiere Pro or Adobe Media Encoder directly; you will save a lot of time. In AME, you can set a duration for each of the three segments. In Premiere Pro, just create three sequences and add them to the render queue. This is really basic stuff; you should read up on it.
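If the three episodes should be roughly equal, the in and out points to enter in AME or Premiere Pro are simple arithmetic: 52 minutes is 3,120 seconds, or 1,040 seconds (17:20) per episode. A small Python helper for other lengths; the function names are illustrative, and the cutting itself still happens in Adobe's tools.

```python
def segment_bounds(total_seconds, parts):
    """Split [0, total_seconds) into `parts` nearly equal (start, end) ranges in whole seconds."""
    base, extra = divmod(total_seconds, parts)
    bounds = []
    start = 0
    for i in range(parts):
        # Spread any leftover seconds over the first segments, one each.
        length = base + (1 if i < extra else 0)
        bounds.append((start, start + length))
        start += length
    return bounds


def timecode(seconds):
    """Format a whole number of seconds as HH:MM:SS."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return "%02d:%02d:%02d" % (h, m, s)
```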

-

IO error: output file: application.cod too large data section

Hello, when I compile my application using BlackBerry JDE, I get the following error:

I/O Error: output file: application.cod too large data section

I get the error when I use JDE v4.2.1 or JDE v4.3. It works fine with v4.6 or higher.

I have another forum post about it here.

I also read the following article here.

Based on that, I tried splitting up my whole application, but that doesn't seem to work. At best, it compiles for a while, and the problem comes back as I add more lines of code.

I was wondering whether anyone has solved the problem another way, or knows the real reason behind this error?

Any help will be appreciated.

Thank you!

I finally managed to solve this problem; here is the solution:

This problem occurs when the CAP compiler cannot properly account for one of the data resources. By default, the CAP compiler packs data sections up to a maximum size of 61440 bytes. You can use the CAP option '-datafull=N', where N is the maximum size of the data section, and set it to something less than the default value. After a few tries with different sizes, you'll be able to work around the problem.

If anyone else hits this problem, you can use the same trick to solve it!

-

Using .split in an import script with a comma-delimited data file

Hi everyone-

I'm trying to create an import script for the amount field that removes the apostrophes (') from an account description field in a .csv data import file (any record with an apostrophe is rejected during the import phase). If it were a file delimited by semicolons (or any separator other than commas), I could remove all the apostrophes in the record with a string.replace command, then return the amount with a string.split command. Unfortunately, this solution breaks with comma delimiters. My data file is a comma-delimited .csv file with several amount fields that contain commas. Even though the fields are enclosed in quotes, .split ignores the quotes and treats the commas in the amount fields as delimiters. Therefore, the script does not return the correct amount field.

Here is an example record from the source data:

"","0300-100000","Account description with an apostrophe ' ","$1,000.00","$1,000.00","$1,000.00","$1,000.00","$1,000.00"

My goal is to remove the apostrophes from field 3 and return the amount in field 8.

Some things to note:

- If possible, I would like to keep this as an import script for the amount field to simplify administration, but I am willing to use a BefImport event script if that is the only option or is more straightforward than the import-script-based solution.

- I tried using regular expressions, as that seems conceptually the simplest way to respect the quotes as an escape character, but I think I am not implementing it properly, and I can't find any examples of regex for FDMEE.

- I know that we cannot use the Jython csv module in an import script, per Francisco's blog post "Fishing with FDMEE": import scripts do not use the same version of Jython as event/custom scripts (PSU510). This may be a reason to go with an event script instead.

- It's probably a bit over-engineered, but I have considered writing a script to determine where each quoted field starts and ends. Assuming there are no quotation marks inside my account description (or I could remove them beforehand), I could then use the positions of the quotes to remove the commas inside them, leaving the delimiter commas as they are. I could then use .split, since the description and amount fields would have no commas. From the point of view of keeping administration as simple as possible, I think it may be better to create an event script than to go down this route.

- Yes, we could do a search and replace in the Excel file to remove the apostrophes before import, but that's no fun.

Thanks for any advice or input!

Dan

Hi Dan,

If your line is comma-delimited and quote-qualified, you can treat the delimiter as QuoteCommaQuote (",") because it comes between every pair of fields. Think of it that way, then simply split on that value:

split("\",\"")

Here's something I put together in Eclipse:

'''
Created on Aug 26, 2014
@author: robb salzmann
'''
strRecord = "\"\",\"0300-100000\",\"Account description with an apostrophe ' \",\"$1,000.00\",\"$1,000.00\",\"$1,000.00\",\"$1,001.00\",\"$1,002.00\""
strFields = strRecord.split("\",\"")  # note: strFields[0] is a lone quote left over from the leading ""
strDescriptionWithoutApos = strFields[2].replace("'", "")  # remove the apostrophe
strAmountInLastCol = strFields[-1].replace("\"", "")  # strip off the trailing quote of the last field
print(strDescriptionWithoutApos)
print(strAmountInLastCol)

Output:

Account description with an apostrophe
$1,002.00
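Splitting on "," works when every field is quoted, but the first and last fields keep a stray quote, and a record with an unquoted field would break it. The quote-tracking approach Dan describes in his fourth bullet can be written as a small character scanner that needs no csv module. A minimal sketch, assuming fields are wrapped in double quotes and a literal quote inside a field is doubled (""); `split_quoted` is a hypothetical helper name. It avoids Python-3-only syntax, so it may also run under FDMEE's Jython, though that has not been verified.

```python
def split_quoted(line, delim=",", quote='"'):
    """Split one delimited record, treating delimiters inside quotes as data.

    A doubled quote ("") inside a quoted field is kept as one literal quote.
    """
    fields = []
    buf = []
    in_quotes = False
    i = 0
    while i < len(line):
        ch = line[i]
        if ch == quote:
            if in_quotes and i + 1 < len(line) and line[i + 1] == quote:
                buf.append(quote)  # escaped quote inside a quoted field
                i += 1
            else:
                in_quotes = not in_quotes  # opening or closing quote
        elif ch == delim and not in_quotes:
            fields.append("".join(buf))  # a real field boundary
            buf = []
        else:
            buf.append(ch)
        i += 1
    fields.append("".join(buf))
    return fields
```

With the sample record, `split_quoted(strRecord)` returns 8 fields with the quotes already removed, so `fields[2].replace("'", "")` gives the cleaned description and `fields[7]` is the last amount, commas intact.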

-

Help loading huge XML files

Hi all

I have a new project, which I will be starting shortly, that will involve loading a 70,000 KB XML document. I'm sure there is no way I want to load all of it before the user can start using the data. Does anyone know what I should do to work with a file this large in Flash? Any suggestions on books or websites that may offer a solution would be great. I don't expect complete documentation and/or code, just a pointer in the right direction on how to achieve this.

Thank you

Thanks Rothrock. It's pretty much exactly what I was looking for. I wasn't sure whether Flash could get at 'parts' of an XML file. I think I'll write a script to split the large file into smaller files, or put it in a database or something. That way Flash can access the smaller data files.

Thanks for the tip on the Newsgroups.
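Splitting the file once, outside Flash, as the poster plans, can be done with a streaming parser so the roughly 70 MB document never has to sit in memory all at once. A minimal Python sketch using `xml.etree.ElementTree.iterparse`; it assumes the repeating records are direct children of the root element and share one tag name (the `record` default and the `part` wrapper element are hypothetical).

```python
import os
import xml.etree.ElementTree as ET


def split_xml(source, out_dir, record_tag="record", per_file=1000):
    """Stream `source` and write every `per_file` <record_tag> elements to a new file.

    Returns the list of written paths. Only about `per_file` records are held
    in memory at a time.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    batch = []
    root = None

    def flush():
        part = ET.Element("part")  # hypothetical wrapper element for each piece
        part.extend(batch)
        path = os.path.join(out_dir, "part_%04d.xml" % len(paths))
        ET.ElementTree(part).write(path, encoding="utf-8", xml_declaration=True)
        paths.append(path)
        batch.clear()

    for event, elem in ET.iterparse(source, events=("start", "end")):
        if event == "start" and root is None:
            root = elem  # keep a handle on the root so processed records can be dropped
        elif event == "end" and elem.tag == record_tag:
            batch.append(elem)
            if len(batch) >= per_file:
                flush()
                root.clear()  # release records that were already written out
    if batch:
        flush()
    return paths
```

The smaller files can then be loaded one at a time on demand, which is the "parts" access the poster was after.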

-

Satellite Pro A110 crashes during large data transfers over LAN

Hello

My Satellite Pro A110 crashes during large data transfers over the LAN. The network adapter is a Realtek RTL8139/810x Family Fast Ethernet NIC. I've been looking through this forum and found some threads with exactly the same problem, saying that the solution is to update the Realtek LAN BIOS, not the computer BIOS, but I can't find any link to download the necessary update file. Can anyone please tell me where to find this update file, or send it to me directly?

Thanks in advance

Is this data transfer at the company where you work? Are you downloading data from a server, or how should I understand this? And what do you mean by large data?

Have you tried downloading data from the Internet? For example, what happens if you download an HD trailer (more than 200 MB)?

-

How to read a selected part of a large ASCII file (~200 MB)?

I have several large ASCII files I need to read in. These files are part of a standard test for an application that I wrote: how well my application parses these files determines how well the program accomplishes its main task. In a real test, the application captures live data as a 2D array of doubles and analyzes the data in that form. This array is 3 million elements long (1 MS/s for 3 s). I normally never handle ASCII files, because all data is stored as TDMS using this method (though I need to update this link with a few critical changes). (Thank you again, Ben.)

The ASCII files I have to read all have two header rows, followed by ~8 million lines of data representing the data I normally capture. Each line contains a data point and an accumulated time value. I need to load each file separately, analyze the data within, and report the results. The part I need help with is loading the file. So far, I have been able to load a file without memory problems. To be similar to a real test, I actually need only 3 million lines for analysis, but I have to be able to select those 3 million lines by the time values in the file. Technically, I only need the single data column and the sampling rate, represented by the time values.

How can I select a specific section of an ASCII file and read it into LabVIEW as a 2D array of doubles? Is that possible for 3 million data points without crippling the system by using all the memory just to accomplish this task? A 'last resort' alternative would be to run a separate program to create a TDMS file that I could then read and go from there, but I prefer to read the file directly into my application.

I'm running on an HP EliteBook 8540w with Win 7 Enterprise (64-bit), a dual-core i7 CPU (2.67 GHz), 8 GB of RAM, and 32-bit LV2011.

Thank you

Scott
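The thread ends without an answer. As a language-neutral illustration of the streaming approach (not LabVIEW code), the Python sketch below reads line by line, skips the two header rows, keeps only the value column for rows whose time falls in [t_start, t_end), and stores the values in a compact `array('d')`, so 3 million values take about 24 MB. It assumes whitespace-separated columns with the value first and the accumulated time second, and a time column that only increases, so reading can stop at t_end; all names are illustrative.

```python
from array import array


def read_window(lines, t_start, t_end, header_rows=2, value_col=0, time_col=1):
    """Return (values, dt) for rows whose time is in [t_start, t_end).

    values is a compact array of doubles (8 bytes each); dt is the spacing of
    the first two selected time stamps, or None if fewer than two rows matched.
    """
    values = array("d")
    first_times = []
    for lineno, line in enumerate(lines):
        if lineno < header_rows:
            continue  # skip the header rows
        parts = line.split()
        if not parts:
            continue  # ignore blank lines
        t = float(parts[time_col])
        if t < t_start:
            continue
        if t >= t_end:
            break  # assumes time increases monotonically, so nothing later matches
        values.append(float(parts[value_col]))
        if len(first_times) < 2:
            first_times.append(t)
    dt = first_times[1] - first_times[0] if len(first_times) == 2 else None
    return values, dt
```

Passing an open file object as `lines` streams the file, so only the selected window is ever held in memory; the sampling rate is then `1 / dt`.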

-

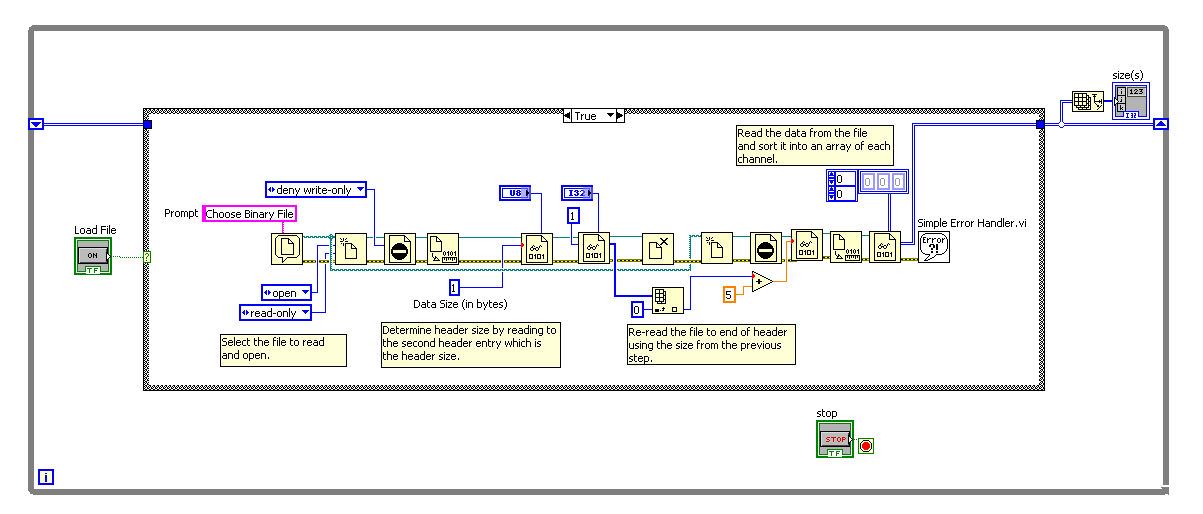

Reading large binary files with more than 1,000 records

I have a few large binary data files created in LabVIEW that cannot be read in their entirety in LabVIEW. Normally we acquire data in LabVIEW and analyze it in MATLAB, but now we need to read them in LabVIEW. The files have a header, which we read in fine. Then there is data for 64 channels: one data point per channel for each of 300,000 time stamps. The data array read in is therefore 64 rows by 300,000 columns.

MATLAB reads these files in their entirety, but LabVIEW reads only the first 1,000 columns: after reading the data file, my array is only 64 rows by 1,000 columns. It seems there is a default maximum array size, or the number of records that Read from Binary File.vi reads is somehow limited. I searched and didn't find an answer.

My VI is attached. The data file is too large to post on the forum. If someone can tell me how to post large files, I'll put it up.

Any help would be greatly appreciated!

I can't download your data file. My antivirus blocks it because it considers your file-hosting website suspicious.

Do you have the program that creates the data file? Seeing how it writes the data can tell you more about how the data file is laid out.

Try setting the data type for the last read to an I16 constant rather than a 2D I16 array. When you read a 2D array, it expects the row and column sizes to be part of the data it is reading. If that information is not there, you end up with the problem you are seeing.

If the data were written simply as a series of I16 values, the row and column sizes won't be there. Read it in with an I16 data type instead, and you will get a 1D array as long as necessary. Then you would need to reshape it into a 2D array of the appropriate dimensions.
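The read-flat-then-reshape suggestion can be sketched outside LabVIEW in Python. This assumes big-endian data (the default byte order of LabVIEW's binary file functions), a header of known byte length, and a time-major layout: the 64 channel values for time stamp 0, then the 64 for time stamp 1, and so on. If the writer instead stored the data channel by channel, row `ch` would be `samples[ch * n_times:(ch + 1) * n_times]`. The function name is hypothetical.

```python
import sys
from array import array


def read_i16_matrix(raw, n_channels, header_bytes=0, big_endian=True):
    """Turn raw I16 bytes into a list of n_channels rows (one per channel).

    Assumes time-major order: all channels for time stamp 0, then time stamp 1, ...
    """
    samples = array("h")  # signed 16-bit integers
    samples.frombytes(raw[header_bytes:])  # byte count after the header must be even
    if big_endian != (sys.byteorder == "big"):
        samples.byteswap()  # convert to this machine's byte order
    n_times = len(samples) // n_channels
    # Every n_channels-th sample, starting at offset ch, belongs to channel ch.
    return [samples[ch::n_channels][:n_times] for ch in range(n_channels)]
```

For the files in the question, `read_i16_matrix(data, 64, header_bytes=H)` with the real header length `H` should give 64 rows of 300,000 values each.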

-

CRC error on large compressed files (.zip, .rar, .cab, etc.); installations fail too...

Hello everyone

I have a new Dell computer: a Dell Studio 540 with Windows Vista Home Premium 64-bit, a 1 TB hard drive, and 8 GB of RAM. I have problems decompressing a large number of large compressed files (more than 50 GB). Also, because the files on a DVD are often compressed (mostly in .cab files), the same error occurs with installation packages. So, to sum up, I get CRC errors on compressed files when unzipping them, AND my various software installations fail with the same errors.

Before you answer, please read my steps below:

1. I reinstalled Windows Vista 2 times from scratch with a clean format. Each time I get the same error on the same files, even when nothing is installed (not even the drivers).

2. I tried running Windows XP Mini, a very light version of XP that boots from a CD at startup and runs in RAM. Same problem.

3. I ran all kinds of hardware tests myself AND with Dell technical support. Nothing was found, and I'm 99.9% sure of it. Everything has been tested: RAM, hard drive, processor, motherboard, etc. Dell technical support told me that my computer is 100% functional and that this error is due to some software (Windows) not working well with a 64-bit processor... Everything works well all day EXCEPT decompressing data.

4. My files are not damaged: they unzip perfectly on other computers. In addition, the programs that fail to install because of the CRC error in compressed files were all used before on another computer OR are new. I even exchanged the DVD at the store for a new one, and the problem is still there. I also tried downloading the .iso file by torrent, and the same problem happened.

5. The files are not being corrupted by the hard drive: I tried them from USB keys, directly from the DVD, and from the hard drive. All possible tests have been done on the files, and they are OK.

6. I ran all kinds of tests such as chkdsk, spyware scans, etc., but given that reinstalling didn't help, I don't think it's related.

My last hope is here... maybe an MVP knows of a problem in Windows with zip files. I have read about a lot of people with CRC errors, so I'm sure something is going on. I am currently working with Dell customer service to get a full refund so I can buy a new computer without a 64-bit processor, because it seems too new for Microsoft.

So if anyone knows something I could try, tweak, or change to help with my problem, please let me know.

Thank you very much!

DarkJaff

Hello!

This morning, I realized that I never came back to close this topic. In fact, I worked with Dell for a number of weeks, talked to something like 10 or 15 technical support members, tried various things, and you know what worked?

Reinstalling Windows... But in my case, I had already reinstalled Windows 2 times before they asked me to do it again. So I did, and everything worked perfectly... Third time lucky :P In fact, perhaps during the first 2 installations the RAM was not seated properly or failed for a second, so every file that was read became corrupted, even though everything else on my computer seemed to work OK... It could also have been the BIOS update I did. So a good test would be to update your BIOS, shut down your computer, check that the RAM is installed properly, restart, and verify that your RAM is detected.

After that, reinstall Windows, but DO NOT use the files you had on your non-working version of Windows. I lost a lot of backups of things on my computer because, even on my fresh install of Windows, those .rar files did not work. I had to pick up an old DVD, and those worked.

So, I hope this will help!

DarkJaff
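One way to test the conclusion that reads were being corrupted (RAM or BIOS rather than the files themselves) is to compute a checksum of the same archive several times, and on another computer, and compare the results. A minimal Python sketch using `zlib.crc32` over fixed-size chunks; differing results for the same file across runs on one machine would point at the hardware path rather than the file. The function name and chunk size are arbitrary choices.

```python
import zlib


def file_crc32(path, chunk_size=1 << 20):
    """CRC-32 of a file, read in chunk_size pieces so large archives need little memory."""
    crc = 0
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            crc = zlib.crc32(chunk, crc)  # continue the running checksum
    return crc & 0xFFFFFFFF  # unsigned 32-bit value on every Python version
```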

-

"Backup and restore" in Windows 7 saves nothing except data files?

I want to save my Internet 'Favorites' list, the messages stored in Live Mail, and my Live Mail address book. It seems that the "Backup and restore" function in Windows 7 saves only data files unless you choose the system image option, and then it is still not clear what is saved, and it seems to create much larger files than I could put on a DVD.

Can someone direct me to information on this topic or suggest a better, simpler backup method?

By default, Windows 7 Backup saves the files in libraries and in the user folders of all users. That includes everything in your user folder: application data, contacts, desktop, downloads, Favorites, music, pictures, documents, etc. Windows Live Mail stores messages in the application data folder, so they are automatically backed up.

The system image contains everything on your computer.

Both types of backup can create more data than a DVD can hold. I recommend that you get an external drive for backups.

Owner, Boulder Computer Maven

Microsoft Most Valuable Professional

-

PL/SQL code to add to the data file

Can someone help me with some PL/SQL code? I want to do the following:

get the latest data from the tablespace file

If (value > 30 GB)

then

Loop

alter database add datafile '+DATA01' size 30 GB

# Note: a data file cannot be larger than 30 GB

# i.e. if 40 GB is entered, 2 entries are created: one for 30 GB

# the second for 10 GB

end loop

else

same logic: add size to the datafile up to 30 GB

Loop

if you go over 30 GB, then create a new data file

end loop

end if

Please excuse the syntax; I know it's not correct. In summary, what I want to do is create data files no larger than 30 GB and, for the extra space, simply create new data files until we reach the "value in" limit. '+DATA01' can be hard-coded.

Note: I don't want to use datafile autoextend; I want to control the size of my storage space...

Any code would be greatly appreciated.

Thanks to all those who responded

-- Prints the ALTER TABLESPACE statements needed to grow a tablespace to
-- p_size_in_gibabytes using data files of at most 30G; it does not run them.
create or replace procedure add_datafile( p_tablespace varchar2, p_size_in_gibabytes number ) is
  space_required number := 0;
  space_created number := 0;
  file_max_size number := 30;   -- maximum size of one data file, in GB
  last_file_size number := 0;
begin
  for ts in ( select tablespace_name, round(sum(bytes / 1024 / 1024 / 1024)) current_gigabytes
              from dba_data_files
              where tablespace_name = upper(p_tablespace)
              group by tablespace_name ) loop
    dbms_output.put_line('-- current size of ' || ts.tablespace_name || ' is ' || ts.current_gigabytes || 'G');
    space_required := p_size_in_gibabytes - ts.current_gigabytes;
    dbms_output.put_line('-- adding files ' || space_required || 'G up to ' || p_size_in_gibabytes || 'G with files max ' || file_max_size || 'G');
    -- size of the final, partial file (e.g. 64G required -> 4G remainder)
    last_file_size := mod(space_required, file_max_size);
    -- one full-size file per whole multiple of file_max_size
    while space_created < (space_required - last_file_size) loop
      dbms_output.put_line('alter tablespace ' || ts.tablespace_name || q'" add datafile '+DATA01' size "' || file_max_size || 'G;');
      space_created := space_created + file_max_size;
    end loop;
    if space_created < space_required then
      dbms_output.put_line('alter tablespace ' || ts.tablespace_name || q'" add datafile '+DATA01' size "' || last_file_size || 'G;');
    end if;
  end loop;
end;
/

set serveroutput on size unlimited
exec add_datafile('sysaux', 65);

Output:

PROCEDURE ADD_DATAFILE compiled
anonymous block completed
-- current size of SYSAUX is 1G
-- adding files 64G up to 65G with files max 30G
alter tablespace SYSAUX add datafile '+DATA01' size 30G;
alter tablespace SYSAUX add datafile '+DATA01' size 30G;
alter tablespace SYSAUX add datafile '+DATA01' size 4G;

-

What is the difference between the LR catalog and LR data files in Lightroom, please?

I have LR cat and LR data files in my backup. These are big files, 500 MB and 900 MB respectively. My understanding is that these files do not contain the actual images, only thumbnails and the Lightroom edits. What is the difference between the LR cat and LR data files, and should I keep a second copy of both or only the LR cat file?

The .lrcat file is your catalog. It is the important one to back up.

The .lrdata files hold previews. These can always be regenerated from the library, but that can take some time for a large catalog.

It is advisable to back up both. But if you are short on disk space, back up the .lrcat file.

Maybe you are looking for

-

This is the link that opens a new tab every few minutes. https://0.client-channel.Google.com/client-channel/client?cfg= {222% 22% 3A % 22oz %22} & ctype = oz & xpc = {% 22cn % 22% 3A % 22GCComN6Q0d % 22 %2C % 22tp 22% 3Anull %2C % 22osh 22% 3Anull %2C % 2

-

How am I supposed to get help from Microsoft when someone hacked my account? I've done everything asked, and nobody gets in touch. I'm losing business day by day. I've now set up a separate Hotmail address so that I can write on this forum. It's

-

Key not valid for use in specified state

OfficeJet 4630: receiving the message "Key not valid for use in specified state" while installing. Basic printing works, but no other software is loaded. The operating system is Win 7. Tried HP support chat for several hours, but has been disconnect

-

Hello, I set up a VPN tunnel between an ASA and a PIX. I want to implement security on the ASA or PIX so that only specific remote endpoint IPs can access resources over the tunnel. Is it possible to block other IP addresses? Thank you, Amardeep

-

Upload a DVD to YouTube (Dell, Windows 7)

I have Windows Media Player. Is it possible to upload a DVD from Windows Media Player directly to my YouTube channel?