TCP communication

Hello world

I would like to use TCP communication to send temperature sensor data and USB camera data to a client on another PC. I tried remote panels, with limited success because of the bandwidth the camera requires. Using the attached VI, could someone help me with how to use TCP communication in this application? A figure (or reference) would be greatly appreciated.

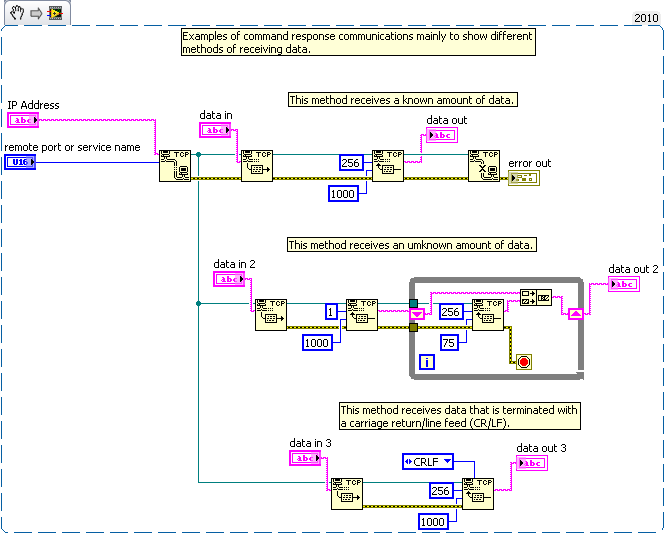

Here is a very basic example.

Do not run it as is: the code is not functional with the communication loops running in parallel. It is for illustration only. To actually use a given method, replace the read in the top section of the code with the desired read method.

Tags: NI Software

Similar Questions

-

TCP communication with barcode reader Keyence SR-1000 series

So I have a client who needs help connecting a Keyence SR-1000 barcode reader to a LabVIEW application over TCP/IP. After going through Keyence's connection document for TCP, I realize that it is based on their utility software alone.

As I have no experience with barcode readers, I'd appreciate any advice on what to look for and how to implement a VISA-based 'driver' for this device. Or, in general, how are barcode scanners best connected to LabVIEW?

Thank you

IMED

michifez wrote:

As I have no experience with barcode readers, I'd appreciate any advice on what to look for and how to implement a VISA-based 'driver' for this device. Or, in general, how are barcode scanners best connected to LabVIEW?

You should contact the manufacturer of the barcode reader and ask for documentation on its TCP communication. Otherwise, the best you can do is use a tool like Wireshark to 'sniff' the TCP traffic, work out what data the manufacturer's own software reads from and writes to the scanner, and try to reproduce it.

There are many manufacturers and types of barcode scanners: some have their own drivers (a DLL, for example) that you can call, some use Ethernet or RS-232, and some simply emulate a keyboard and "type" the characters of the barcode.

-

cRIO crashes when using TCP communication and the web server

Hello

My cRIO controller crashes after a short time (usually less than a minute) when I use the web server (to interact with a remote panel) and do some TCP communication (using the STM 2.0 library) for data logging at the same time. Is it a problem of overall controller performance, a problem of network bandwidth (I only send a few values every 100 ms), or a programming problem? In the latter case, what should I do to make the system more stable?

Kind regards

PS: I use a cRIO-9022 with LabVIEW 2009 f2 + RT and NI-RIO 3.3.0

Hello

You can try a simple While Loop with a delay instead of a Timed Loop for the TCP communication loop.

Regards

-

How to manage the threads for TCP communication?

Hello

I have a project to control two separate applications (one in C++ and one in LabVIEW), both implemented as servers. A separate client program (C++) is used to control them. Communication between them is implemented using TCP sockets. The client program sends messages to start or stop tasks on the servers. It also sends a time (HH) with the start and stop messages to specify when to start or stop.

C++ application (server): It listens for a connection request and, when it gets one, creates a communication thread to handle that connection, then goes back to listening for another connection. The communication thread handles the messages: when it receives a start or stop message, it creates a timer thread with the received time to trigger the task at the specified time. After that it waits for the next client message. So here a thread (communication or timer) is created whenever it is needed.

LabVIEW (server): I tried to build the same thing as the C++ server. But from the LabVIEW manual and other forum discussions, I understood that LabVIEW is multithreaded and that this can be done with independent loops. So I created four loops in one diagram:

1. wait for a new connection

2. handle communication for the connection already received

3. start the timer

4. stop the timer

They all start when the VI starts running, and communication between them is handled with local variables. But the 2nd, 3rd and 4th loops can handle only one connection, and can handle another only once the current one is closed. The C++ application can handle multiple connections by creating a thread when it is needed, rather than at the start of the application.

Is there a better way to implement this in LabVIEW?

Is it possible to handle multiple connections and create a diagram node/block (like a thread) dynamically, as in C++?

Thank you.

There are several ways to do this in modern LabVIEW, and you should probably search the Example Finder for TCP examples. The classic one is to pass the connection refnum from the listen loop to a communication loop, which adds it to an array of connection refnums and then constantly iterates over this array to do the communication. This has worked perfectly for me since LabVIEW 4.0, even for applications with a basic HTTP communication protocol. But you must make sure that the communication for one connection does not stall for any reason, because that would delay the handling of the other connections too: they are of course serviced sequentially. If an error occurs, the connection refnum is closed and removed from the array.
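For readers who think more easily in a text language, the array-of-connections pattern described above can be sketched in Python. This is only an illustration of the idea, not LabVIEW code: `service_connections` and `handle_message` are hypothetical names, and the sockets are assumed to have been put in non-blocking mode by the listen loop so that one quiet connection cannot hold up the others.

```python
import socket

def service_connections(connections, handle_message):
    """One pass over all open connections; returns the connections still alive."""
    alive = []
    for conn in connections:
        try:
            data = conn.recv(4096)       # non-blocking: raises if nothing is waiting
        except BlockingIOError:
            alive.append(conn)           # nothing to read yet, keep the connection
            continue
        except OSError:
            conn.close()                 # error: close it and drop it from the list
            continue
        if not data:                     # peer closed the connection
            conn.close()
            continue
        handle_message(conn, data)       # must return quickly, or other connections wait
        alive.append(conn)
    return alive
```

The communication loop would call this repeatedly, appending any newly accepted connections to the list between passes.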

The other way is to create a VI that handles the entire communication for one connection and ends on an error or when it receives a quit command. Make this VI reentrant and then launch it through VI Server as a new instance, passing it the refnum of the newly received connection from the listen loop. Then use the Run method to start it and let it operate as an independent thread.

For all of these, you should be able to find an example in the Example Finder by searching for TCP.
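The second approach, one independent handler per connection, maps roughly onto a thread per connection in a text language. A minimal Python sketch follows, assuming a made-up protocol in which the server acknowledges each message and `QUIT` ends the session; `handle_connection` and `serve` are hypothetical names, not part of any LabVIEW example.

```python
import socket
import threading

def handle_connection(conn):
    """Runs all communication for one connection; ends on error or a quit command."""
    with conn:
        while True:
            try:
                data = conn.recv(4096)
            except OSError:
                break
            if not data or data.strip() == b"QUIT":
                break
            conn.sendall(b"ACK " + data)

def serve(listener, stop_event):
    """Listen loop: hands each new connection to its own handler thread."""
    listener.settimeout(0.2)             # wake up regularly to check stop_event
    while not stop_event.is_set():
        try:
            conn, _addr = listener.accept()
        except socket.timeout:
            continue
        threading.Thread(target=handle_connection, args=(conn,), daemon=True).start()
```

Handlers are created only when a connection arrives, which is the behavior the C++ server in the question has.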

-

Sending a result from one while loop to another TCP communication loop

Hi all

I have 2 VIs:

1. Pattern matching (using the Grab method), placed inside a while loop. This VI works correctly and returns a string in a specific format (position and angle of the object).

2. A TCP server that always listens on a specific port; if it receives a correct command string, it sends back the string from VI 1 above (position and angle).

The 2 VIs work if they are kept as separate VIs. But I want to combine them into a single VI, and then I get a problem: pattern matching no longer works (the video shows only the first frame, then stops).

I tried putting the two while loops in parallel, and putting the TCP while loop inside the pattern matching while loop. Neither worked.

Please see my attached VI and the TCP module.

What is the solution for this requirement?

Thank you all!

What I would do is run your two VIs at the same time. Given that you only need to send the latest result, store the result in a global variable. Your pattern matching VI writes the result to the global variable on each iteration of its loop, and the TCP module reads the global variable when it receives the right command and then sends it.
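The "latest value" idea in this answer can be sketched in Python as a small shared holder that one loop writes and the other reads. Everything here is illustrative: `LatestResult`, `matching_loop`, `handle_command` and the `GET_POSE` command string are hypothetical stand-ins for the pattern matching loop, the TCP loop and the real command.

```python
import threading

class LatestResult:
    """Holds only the most recent value, like a global variable guarded by a lock."""
    def __init__(self, initial=""):
        self._lock = threading.Lock()
        self._value = initial

    def write(self, value):
        with self._lock:
            self._value = value

    def read(self):
        with self._lock:
            return self._value

def matching_loop(latest, frames):
    # Stand-in for the pattern matching loop: publish each result as it is produced.
    for position, angle in frames:
        latest.write(f"{position[0]},{position[1]},{angle}")

def handle_command(latest, command):
    # Stand-in for the TCP loop: answer only the expected (hypothetical) command.
    return latest.read() if command == "GET_POSE" else None
```

Because the two loops only share the holder, neither one waits on the other, which is what keeps the video from freezing while the server waits for a client.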

-

TCP Communicator Active & Passive cannot communicate with a graph

Hello

I send and receive data with TCP Communicator Active & Passive.

I have changed them and can now show data (fixed values) and a graph.

My Active part works. But I can't send from the Passive one...

confused ha... hahahs.

-

How to prevent a TCP connection from being closed when the VI that opened it terminates

Hello everyone.

I'm developing an application in LabVIEW 2012 based on servers and clients that communicate over TCP.

When the server/client opens a TCP connection, it starts an asynchronously running "Connection Handler", to which the connection reference is passed so that all communication happens there. It all works very well.

I have a situation where a client connection handler can be informed of another, 'new' server. I would like it to open the connection (to check that the server is actually valid) and then pass this connection reference to the main client code, which spawns a new connection handler. This avoids locking up the main client code with a longish timeout if the 'new' server is not really accepting connections.

The problem is that if the connection handler that opened the connection to the 'new' server is stopped, it seems to destroy the reference it opened. This means that the other connection handler, which has been happily chatting with the 'new' server, finds its TCP communication closed (I get an error code 1 on an input).

I created an example to illustrate the issue, which should be used as follows:

1. Run server.vi - it will listen for a connection on the port specified on its block diagram.

2. Run CH Launcher.vi - it will open a connection to the server and pass the TCP reference to an instance of Connection Handler.vi, which it starts.

3. The connection handler should send data to the server.

4. Stop CH Launcher.vi.

5. Connection Handler.vi will report an error.

Any suggestion would be appreciated.

Cheers

John

Do not open and close the TCP connection in a subVI. Do this in the main VI.

-

Hello

I am trying to change TCP Communicator - Active.vi so that it outputs only a single string instead of concatenated strings. At the moment the program just concatenates strings. I am looking for only the latest string from the port, so when it receives string 2 it must purge string 1.

String 1 arrives. (I have to perform certain string functions on it and get a result from it.)

String 2 arrives. (So when I get string 2, string 1 must have been cleared and only string 2 is left, so that I can perform the same operations on this string too.)

I tried but without success.

Thanks for your help, but I found an easier, practical solution. I just changed the TCP Read mode to CRLF. Now I get single complete strings directly from the port.
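What a CRLF read mode does can be approximated in a text language by buffering received bytes and releasing only complete lines. A minimal Python sketch, with the hypothetical helper name `split_crlf`:

```python
def split_crlf(buffer, chunk):
    """Append a received chunk; return (complete_lines, leftover_buffer)."""
    buffer += chunk
    *lines, rest = buffer.split(b"\r\n")   # the last piece is an unfinished line
    return lines, rest
```

For example, receiving `b"string1\r\nstri"` yields the complete line `b"string1"` and keeps `b"stri"` buffered until the rest of the second string and its CRLF arrive.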

THX

-

Good method to reset the TCP connection after a timeout error

I am building an application that communicates with a Modbus TCP device. If a communication error occurs, I would like to be able to reset the TCP communication. What I have is a control that raises an event when pushed. In that event case, I have a sequence that first closes the TCP connection and then opens a new one. My application starts and works very well. To test the reset function, I removed the Ethernet cable from the device and waited until a timeout occurred. I plugged the cable back in and pushed my reset control. Sometimes the reset works, but most of the time I get a timeout error from the TCP Open VI. After that, the only way I can re-establish communication is to quit my application and disable and then re-enable the network adapter. Then, when I restart my application, I have communication with my device again.

Any help on how I should reset my TCP connection would be appreciated.

Thank you

Terry

Terry S wrote:

I've attached an example VI (LV10) that shows just the TCP connection and reset. An error occurs when the TCP Open runs in the reset event case.

As written, your code should be fine. There is nothing inherently wrong with it. However, depending on the device you are communicating with, you may be trying to re-establish the connection too quickly after closing it. The device may not allow multiple connections and may need some time to clean things up on its end after you close a connection. As an experiment, try waiting a short time between the TCP Close and the TCP Open. If possible, you can also use Wireshark to see what is happening on the network. It may help diagnose the problem.
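The suggested experiment (close, wait a little, then open again) can be sketched in Python as follows. The function name `reconnect` and the default delay, attempt count and timeout are assumptions chosen for illustration; the right values depend on the device.

```python
import socket
import time

def reconnect(old_sock, host, port, settle_s=0.5, attempts=3, timeout_s=2.0):
    """Close the old connection, pause, then retry the open a few times."""
    if old_sock is not None:
        try:
            old_sock.close()
        except OSError:
            pass
    last_error = None
    for _ in range(attempts):
        time.sleep(settle_s)             # give the device time to release the old session
        try:
            return socket.create_connection((host, port), timeout=timeout_s)
        except OSError as exc:
            last_error = exc
    raise ConnectionError(f"could not reconnect to {host}:{port}") from last_error
```

If the open still fails after several spaced attempts, a packet capture is the next step, as the reply suggests.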

-

Failure modes in TCP WRITE?

I need help diagnosing a problem where TCP communication breaks down between my host (Windows) and a PXI (LabVIEW RT 2010).

The key questions are:

1... Are there cases where TCP WRITE, given a string of say 10 characters, writes more than zero and fewer than 10 characters to the connection? If so, what are those circumstances?

2... Is it risky to use a timeout value of 1 ms? A little thought suggests that I won't get a full 1000 µs timeout if the time base is 1 ms, but I don't know whether that is true on the PXI.

Background:

On the PXI system, I run a 100 Hz PID loop controlling an engine. I measure the speed and torque, and control the speed and the throttle. Along the way, I am sampling 200 channels of various things (analog, CAN, TCP instruments) at 10 Hz and sending lots of info to the host (200 chans * 8 bytes = 1600 bytes every 0.1 sec).

The host sends commands, the PXI responds.

The message protocol is a fixed header followed by a variable payload: a message is a fixed 3-byte header, consisting of a U8 OpCode and a U16 PAYLOAD SIZE field, followed by the payload. I flatten a structure to a string, measure its size, prepend the header and send it all as one TCP WRITE. On the receiving side I do two TCP READs: one for the header, then I unflatten the header, read the payload SIZE, and do another read for that many more bytes.

The payload can be zero bytes: a TCP READ with a byte count of zero is legal and will succeed without error.
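As an illustration of the header-plus-payload protocol described above, here is a minimal Python sketch. The big-endian byte order is an assumption (it matches how LabVIEW flattens data), and `pack_message`, `recv_exact` and `recv_message` are hypothetical names. The size check guards against a payload whose length does not fit in the U16 field.

```python
import struct

HEADER = struct.Struct(">BH")   # U8 opcode, U16 payload size, big-endian (assumed)

def pack_message(opcode, payload=b""):
    if len(payload) > 0xFFFF:
        raise ValueError("payload does not fit in a U16 size field")
    return HEADER.pack(opcode, len(payload)) + payload

def recv_exact(sock, count):
    """Read exactly count bytes; count == 0 returns b'' without touching the socket."""
    chunks = []
    while count > 0:
        chunk = sock.recv(count)
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        chunks.append(chunk)
        count -= len(chunk)
    return b"".join(chunks)

def recv_message(sock):
    # First read: the fixed 3-byte header. Second read: exactly `size` payload bytes.
    opcode, size = HEADER.unpack(recv_exact(sock, HEADER.size))
    return opcode, recv_exact(sock, size)
```

Without the size check, a 65537-byte payload would be announced as `65537 & 0xFFFF`, that is 1 byte, and the receiver would lose framing from that point on.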

A test begins by establishing a connection, configuring the system, and then sampling. The 10 Hz stream is displayed on the host screen at 2 Hz as numeric indicators, or perhaps some channels in a chart.

At some point the user starts RECORDING, and the 10 Hz data go into a queue to be written to a file later. Meanwhile the engine is driven through a prescribed cycle of target speed/torque points.

The recording lasts for 20 or in some cases 40 minutes (24000 samples), and then recording stops, but sampling does not. Data are still coming in and being charted. The user can then do some special operations, associated with calibration checks and leak checks, and those results are stored. Finally, they hit the DONE button and the whole mess is written to a file.

This has worked well for several years, but as the system grows (more devices, more channels, more code), a problem has arisen: the two ends sometimes get out of sync.

The test itself, and all the configuration before it, works perfectly. The measurement immediately after the test is fine. At some point after that, it goes south. The log shows the PXI sending results for operations that were not requested. The result data are garbage: numbers like 1.92648920e-299, which come from interpreting random bytes as a DBL.

Once the file has been written, the connection is closed; for the next test it is re-established and all is well again.

In hunting this down, I have triple-checked that all my senders MEASURE the size of the payload before sending it. Two possibilities have come up:

1... There is a message with a payload of more than 64 KB. If my sender were given a string of length 65537, it would convert that to a U16 value of 1 and the receiver would expect 1 byte. The receiver would then expect another header, but the rest of the data would arrive instead, and we are off the rails.

I don't think that is what is happening. Most messages have a payload of less than 20 bytes, the data block is 1600 or so, and I see no indication of such a thing happening.

2... The PXI is failing, in some circumstances, to send the entire message given to TCP WRITE. If it sends a header promising 20 more bytes but delivers only 10, the receiver sees the header and waits for 20 more. 10 arrive immediately, but then the header of whatever message comes NEXT would be interpreted as part of the payload of the first message, and we are off the rails.

Unfortunately, I'm not checking the error output of TCP WRITE, because it has never failed in my testing here (I know, twenty lashes for me).

It occurs to me that I was giving it a timeout value of 1 ms, since I am in a 100 Hz loop. Maybe I should have separated the TCP stuff into a separate thread. In any case, maybe I am not getting a full 1000 µs, due to clock resolution issues.

That would mean that TCP WRITE failed to get the data written before the timeout expired, but had written part of it.

I suspect, but the logs do not prove, that the point of failure is when they hit the DONE button. The overall CPU load on the PXI is 2 to 5%, but at that moment there are 12 to 15 DAQ managers being shut down, so the instantaneous CPU load is high. If that happens to coincide with an outgoing message, well, perhaps that is when the problem pops up. It doesn't happen every time.

So I repeat my two questions:

1... Are there cases where TCP WRITE, given a string of say 10 characters, writes more than zero and fewer than 10 characters to the connection? If so, what are those circumstances?

2... Is it risky to use a timeout value of 1 ms? A little thought suggests that I won't get a full 1000 µs timeout if the time base is 1 ms, but I don't know whether that is true on the PXI.

Thank you

If a TCP Write operation times out, it is possible that some data did in fact get placed in the buffer, and it will be read by the other side. This is why the TCP Write function has a bytes written output: to determine what was actually put in the buffer.

To account for this, you can proceed as follows:

1. Do another TCP Write and send only the part of the first packet that did not get through. Use bytes written with String Subset to get the remaining data.

2. Start with longer timeouts.

3. On a timeout, close the connection and force a reconnection, so that the partially written data is not processed by the other side.
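Options 1 and 3 above can be combined, and the following Python sketch shows one way to do it. Python's `socket.send` returns the number of bytes it accepted, which plays the role of the bytes written output here; `send_all` and `max_attempts` are hypothetical names chosen for this example.

```python
import socket

def send_all(sock, data, max_attempts=5):
    """Send data, resending only the unsent remainder after a short write or timeout."""
    view = memoryview(data)
    sent_total = 0
    for _ in range(max_attempts):
        try:
            sent_total += sock.send(view[sent_total:])   # may accept fewer bytes than offered
        except socket.timeout:
            continue                                     # nothing sent this attempt, retry
        if sent_total == len(data):
            return sent_total
    # Giving up mid-message leaves the stream out of sync, so drop the connection.
    sock.close()
    raise ConnectionError(f"only {sent_total} of {len(data)} bytes were sent")
```

The key point is that the header and payload are never abandoned halfway while the connection stays open: either the whole message goes out, or the connection is closed so the receiver cannot misread what follows.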

-

Modbus TCP read holding registers does not return the requested quantity

Background: I have a client using an ELAU motion system. It logs data in registers that they want to be able to read on a cRIO and match up with some analog FPGA data (I have digital handshaking going on for this).

LabVIEW 2010 SP1

cRIO-9074

I am using the NI Modbus.llb VI library to communicate with the other system. I can open the TCP communication without problems and actually get SOME registers, but not ALL the register data I want to read.

What I want to do is read 330 individual holding registers as U16 values. I know how the data are split to represent different lengths (i.e. most of the data items use 2 registers to represent a 32-bit number). I just want to read all of the individual registers and parse the data in another VI to convert it to other data types.

I supplied MB Ethernet Master Query (poly).vi with the starting address of the first register and a quantity of 330. The polymorphic instance selected is "Read Holding Registers". The array returned by this VI via 'Holding Registers' has only 74 elements, not the requested 330. I get no exception code and no error in LV. Is there some inherent limit on the number of holding registers that can be read?

I do not use the MBAP header input (not sure if it is necessary).

Thank you.

Simple solution, once I dug into the Modbus serial and Modbus/TCP protocol documentation found via Google.

The serial history of the Modbus functions brings a limitation that carries over to TCP: the maximum number of bytes in a data packet is only 256. So I was limited to approximately 125 registers at a time.

256 bytes is 2048 bits. With 16-bit registers, that gives a maximum of 128 registers. I went with 125, which also keeps the totals simple.
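Splitting the 330-register read into requests of at most 125 registers can be sketched in Python as below. The 125-register cap is the limit the Modbus application protocol sets for Read Holding Registers; the starting address 0 in the usage note and the name `register_chunks` are assumptions for illustration.

```python
MAX_REGISTERS_PER_READ = 125   # limit for Read Holding Registers (function 0x03)

def register_chunks(start_address, quantity, max_per_read=MAX_REGISTERS_PER_READ):
    """Split one large read into (address, count) requests that fit the limit."""
    chunks = []
    offset = 0
    while offset < quantity:
        count = min(max_per_read, quantity - offset)
        chunks.append((start_address + offset, count))
        offset += count
    return chunks
```

For 330 registers starting at address 0 this gives three requests of 125, 125 and 80 registers. As a side note, 330 registers are 660 bytes, and 660 modulo 256 is 148 bytes, or 74 registers. That matches the 74 elements seen in the question and would be consistent with a one-byte byte-count field wrapping around, although the thread does not confirm this.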

-

I have an embedded device server that has a set of 8 user-controllable pins. The command format for these pins requires a TCP message that is 9 bytes long, in hexadecimal.

I use TCP Communicator - Active.vi from the LV 7.1 examples to make a connection to the device server. There is no problem with the connection, but I'm unable to send the message in HEX format.

The VI always converts my input to ASCII, so the command fails. It also fragments the command bytes as I type them: when I inspect the resulting data with a network analyzer (Ethereal), I see that the VI has split the data bytes across several separate packets.

The command message I want to send is in this format: FF 00 00 00 FF 00 00 00 1B

Any suggestions to solve the problem?

Thank you

You must either set your string control to hexadecimal display mode, so that you know you are entering hexadecimal values rather than characters, or use a byte array and then use the Byte Array To String function. I would opt for the latter because it is easier to understand.

Note that the array is an array of U8 integers, formatted to display as hexadecimal with a minimum field width of 2 characters, padded with zeros on the left, and with the radix displayed. This makes it clearer that each value is hexadecimal.
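The difference between sending nine raw bytes and sending the ASCII text of their hex digits is easy to see in a text language. A small Python illustration, using the command from the question (the variable names are arbitrary):

```python
# The 9-byte command as raw byte values (what the device expects).
command = bytes([0xFF, 0x00, 0x00, 0x00, 0xFF, 0x00, 0x00, 0x00, 0x1B])

# The same bytes parsed from a hex string; spaces between pairs are ignored.
same_command = bytes.fromhex("FF 00 00 00 FF 00 00 00 1B")

# What goes on the wire if the text itself is sent as ASCII characters.
ascii_text = "FF 00 00 00 FF 00 00 00 1B".encode("ascii")

# Display each byte as two hex digits with the radix shown.
display = " ".join(f"0x{value:02X}" for value in command)
```

Here `command` and `same_command` are both 9 bytes long, while `ascii_text` is 26 bytes of characters such as `F` and space, which the device would not recognize as the command.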

-

Hi Experts

Greetings!

What is the default MSS defined in Cisco IOS for TCP communication to a host? In packet sniffer output I saw that the MSS is set to 536 even though the MTU of the link is 1500. Why does Cisco IOS not use 1460 [MTU minus the 20-byte IP and 20-byte TCP headers] as the default MSS for TCP communication, and what is the purpose of choosing a minimum MSS value of 536 by default?

Thanks in advance

Bava -.

There have been some assumptions made about using other than the default size for datagrams with some unfortunate results. HOSTS MUST NOT SEND DATAGRAMS LARGER THAN 576 OCTETS UNLESS THEY HAVE SPECIFIC KNOWLEDGE THAT THE DESTINATION HOST IS PREPARED TO ACCEPT LARGER DATAGRAMS. This is a long established rule. To resolve the ambiguity in the TCP Maximum Segment Size option definition the following rule is established: THE TCP MAXIMUM SEGMENT SIZE IS THE IP MAXIMUM DATAGRAM SIZE MINUS FORTY. The default IP Maximum Datagram Size is 576. The default TCP Maximum Segment Size is 536. https://Tools.ietf.org/html/rfc879 -
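The "minus forty" rule quoted above is simple arithmetic, shown here as a short Python check. It assumes IPv4 and TCP headers without options (20 bytes each); `mss_for` is a hypothetical helper name.

```python
IP_HEADER_BYTES = 20    # IPv4 header without options
TCP_HEADER_BYTES = 20   # TCP header without options

def mss_for(datagram_size):
    """Largest TCP payload that fits in one IP datagram of the given size."""
    return datagram_size - IP_HEADER_BYTES - TCP_HEADER_BYTES
```

With the default datagram size of 576 this gives 536, and with a 1500-byte MTU it gives the 1460 mentioned in the question.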

Cineware unusably slow, spinning wheel between everything I do. Connection/TCP problem?

Hi, I have problems with Cineware in AE CC 2014. If I create a new Cinema 4D file in a comp, the whole program becomes unusably slow, with a spinning wheel cursor for 5 to 20 seconds or so whenever I move the playhead or select anything in that comp, even things unrelated to the Cineware layer. It has never worked normally on this machine, although I have used it without problems on other machines at work. I don't get any error messages, and everything else works well.

I did a lot of research on the AE forums and found some people with the same kind of problem, but very few with any solution at all, and those few solutions do not work for me. From what I have read, it is probably a problem with the TCP communication between the two apps or with connection timeouts, but nothing I have tried so far has helped.

My specs:

- OS X Yosemite 10.10.3 (latest update) with QuickTime 10.4

- iMac, Retina 5K, 32 GB of memory

- After Effects CC 2014.2 (just installed 2015, briefly tried it and had the same problem)

- Cinema 4D Lite 16.038, as installed with AE and fully up to date.

As I suspect a TCP problem, changing the TCP port in the Cineware options seems the obvious thing to do, but I'm not sure which other ports to use: I scanned for ports with Network Utility, and the only open ones I can see have 5 digits, while the Cineware options only allow a 4-digit number! When I tested this earlier, I tried a few fairly random 4-digit ports (purging all memory/cache and restarting AE in between), with no luck.

I have not worked out how I could go about opening/forwarding a port, and I am not sure whether that is even something to do in OS X rather than in the settings of my router for connecting to the outside world...?

Other things I tried:

- Turning off Wi-Fi (as suggested here https://forums.adobe.com/thread/1877012) did not help

- ...nor did setting preference permissions (as in http://blogs.adobe.com/aftereffects/2014/06/permissions-mac-os-start-adobe-applications.html)

- Repaired permissions in Disk Utility

- Tried trashing the AE preferences and making a C4D file without altering any prefs; the same problem persists.

- The OS X firewall is already off, so there is nothing to allow (I tried turning it on, but it does not block anything), and I have no third-party security/firewall software.

- I don't have any third-party QuickTime components to try disabling, or video hardware to disconnect.

- I can't remember whether I tried logging in as root when I tried to fix this earlier; I will do so now and update this shortly

- Changing the TCP port in the Cineware options (as described above)

Let me know of any ideas; any info would be much appreciated. It would be amazing to actually be able to use Cineware at all!

Thank you!

Just to let you know, it seems that the latest Yosemite software update has fixed whatever the cause was! So I'm happy, but I still don't know exactly what the problem was.

In this other thread, someone reported that they had solved the same problem earlier by doing a clean install of Yosemite: CreativeCOW

-

I tried contacting a developer by the name of Wouter Verweirder about my work, based on his native extension, but have been unable to reach him. His native UDPSocket extension can be found here:

https://github.com/wouterverweirder/air-mobile-UDP-extension

I hope someone here can help me with this question:

I have reasons to write a native extension like his, but for TCP communication rather than UDP.

I am very familiar with the UDP and TCP networking protocols at the packet level, as well as at the source code level in several languages. I replaced his UDP components with their TCP counterparts where appropriate, but I get no communication at all.

To start from square one, I first made sure I could build his extension from the source code and then use the resulting ANE with my Android application for UDP communication, as he originally intended.

Then I simply added the following as a test at the end of his UDPSocketAdapter constructor (the second try block):

    public UDPSocketAdapter(UDPSocketContext context) {
        this.context = context;
        this.hasSentClose = false;
        try {
            channel = DatagramChannel.open();
            channel.configureBlocking(true);
            socket = channel.socket();
        } catch (SocketException e) {
        } catch (IOException e) {
        }
        theReceiveQueue = new LinkedBlockingQueue<DatagramPacket>();
        // Test code added below: open a TCP socket and send a short message.
        try {
            Socket tsocket = new Socket("192.168.2.23", 8137);
            OutputStream outStream = tsocket.getOutputStream();
            byte[] data = {'h', 'e', 'l', 'l', 'o', ' ', 'w', 'o', 'r', 'l', 'd'};
            outStream.write(data);
            outStream.flush();
            outStream.close();
            tsocket.close();
        } catch (IOException e1) {
            Log.put("I/O exception: \r\n" + Log.stack2string(e1) + "\r\n");
        }
    }

(Of course, I also imported the Socket and OutputStream classes.) After I rebuild the ANE and then recompile and run my application, not only do I not see my test TCP traffic in my sniffer, but all of his UDP functionality that worked before is now broken, and I have zero UDP communication!

The line of code in my catch block never sends anything to my log file (via my logging class), so I don't think my test code generates an exception. (And in fact, my test code works fine in another, entirely native Android app.)

Have I just missed something fundamental? I'd appreciate any suggestions anyone might have.

Thank you.

Finally, I worked out that the Socket object must be instantiated in a worker thread, and then TCP connections succeed.

For some reason, if an attempt is made to instantiate the socket in the main thread, all network communication of any kind from the native extension breaks.

I have no idea why that would be a problem for TCP but not UDP, as shown by the working native UDP extension on which I based my work, which instantiates a DatagramSocket in the main thread without problems.

I suspect it has something to do with TCP's connection-oriented behavior and the three-way handshake with the server that happens immediately when a Socket object is created, because the absence of that in UDP is the only functional difference between the two cases.

Maybe someone with a deep understanding of AIR native extensions has more specific information.
