Apple ID best practices

I help family members and others with their Apple products. Probably the number one problem revolves around Apple IDs. I have seen users do the following:

(1) share IDs among the members of the family, but then wonder why messages/contacts/calendar entries etc are all shared.

(2) have several Apple IDs willy-nilly associated with seemingly random devices. The Apple ID is not used for anything.

(3) forget passwords. They always forget passwords.

(4) is the one I don't really understand. They use an e-mail address from another system (gmail.com, hotmail.com, etc.) as their Apple ID. Invariably, they use a different password for their Apple ID than the one they use for that email account, so they are constantly confused about which account they are signing in to.

I have looked around for an article on best practices for creating and using an Apple ID, but could not find one. So I thought I would throw out a few suggestions. If anyone knows of a list or wants to suggest changes/additions, please feel free. Here are the best practices for normal circumstances, i.e. not corporate accounts etc.

1. Every person has exactly 1 Apple ID.

2. Do not share an Apple ID - share content.

3. Do not use an email address from another account as your Apple ID.

4. When you create a new Apple ID, don't forget to complete the secondary information at https://appleid.apple.com/account/manage. It is EXTREMELY important to fill in your rescue email and security questions.

5. The last step is to collect the information that you entered in a document, save it to your computer, AND print it and store it somewhere safe.

Suggestions?

I don't agree with no. 3. There is no problem with using a non-iCloud address as the primary ID; indeed, depending on where you set up your ID, you may have no choice but to.

Tags: iCloud

Similar Questions

-

Best practices Upgrade Path - Server 3 to 5?

Hello

I am attempting a migration and upgrade of a Profile Manager server. I currently run an older Mac mini with 10.9.5 and Server 3 with a large Profile Manager installation. I recently migrated the server itself from the old Mac mini to a late-2009 Xserve by cloning the drive. I am still double-checking everything, but it seems that the transition from the mini to the Xserve was successful and everything works as it should (just with improved performance).

My main question now is that I want to get this up to date software-wise and move to Server 5 and 10.11. I see a lot of documentation (including official Apple documentation) on best practices for upgrading from Server 3 to 4 and Yosemite, but can't find much on Server 5 and El Capitan, let alone going from 3 to 5. I understand that I'll probably have to buy Server.app again and that's fine... but should I be staging this, going from 10.9 to 10.10 and Server 4, making sure that all is well, and then jumping to 10.11 and Server 5? Or is it 'safe' (or OK) to jump straight from Server 3 to 5 (and 10.9.5 to 10.11.x)? Obviously, the App Store is happy to make the jump from 10.9 to 10.11, but once again, I am looking for best practices here.

I will of course ensure that all backups are up to date and make another clone just before, whichever way I take... but I was wondering if anyone has made the leap from 3 to 5 and had things (like Profile Manager) still work correctly on the other side?

Thanks for any info and/or guidance.

In your position I would keep the mini running Server 3, install El Capitan and Server 5 on the Xserve, and walk through setting up Server 5 by hand. Things that need to be 'migrated', such as Open Directory, can be handled by exporting from the mini and reimporting on the Xserve.

In my experience, OS X Server installations that were "migrated" always seem to end up with esoteric problems that are difficult to fix, and it's easier to adopt the procedure above than to lose a day trying.

YMMV

C.

-

Hello, I have a question about relinking assets in a website. I understand that the best way to batch-update assets is to keep them all in one folder. However, I have several large websites with hundreds of photos and other assets, and that does not seem like the best way to manage assets. For example, I have a client for whom I add 50-75 pictures to their site each year for a project. I do not delete the previous years' pictures; I just keep adding. It doesn't seem right to just keep adding assets to one huge asset folder.

But I have had my Documents folder moved a couple of times. Each time I put it back in exactly the same place on my hard drive as it was (long story; this time, Apple messed up my Documents folder by MOVING it to iCloud without my permission), and even though I put it back exactly where it was, Muse says all assets are missing and I need to manually relink each of them. I understand that, for this reason, everything should be in the same folder, but for larger sites, that doesn't seem like the best practice. Sometimes I have 20 or more assets in a folder and can relink those all at once, which is great, but I still have several hundred in a website spread across smaller folders.

Any ideas? I don't know why Muse is so finicky about moved files if I end up putting them back in the same place. I know that it is better to leave them where you originally placed them, but things happen.

Annette

ADusa says:

the best practice

Annette

don't move images from one computer to another because they are not the same images in the new system... even if you put them in the same place.

I just take the .muse file, then use Dreamweaver (or something like it) to download the images from the web page onto the new computer... new version of Muse has an active button fired but older versions of the muse is

-

What is the best practice for moving an image from one library to another library?

What is the best practice for moving an image from one Photos library to another Photos library?

Right now, I just export the image to the desktop, then remove the image from Photos. Then I open the other library and import the image from the desktop into Photos.

Is there a better way?

Yes - PowerPhotos is a better way to move images.

LN

-

Looking for the best non-Apple Thunderbolt display...

... for my 13-inch rMBP (mid-2014). I know that I probably won't be able to get the full Retina display. Looking at the 24- or 27-inch size.

Dan...

Try this article > http://dailytekk.com/2015/04/24/the-5-best-non-apple-27-inch-monitors-for-your-mac-thunderbolt-display-alternatives/

-

Bought an Apple Watch from Best Buy that had been returned. The original purchaser cannot be contacted. It is still synced to their account; how can I sync it to mine?

You cannot. Take it back to Best Buy and ask for a refund.

-

TestStand code/sequence sharing best practices?

I am the architect for a project that uses TestStand, Switch Executive and LabVIEW code modules to control automated testing on a number of UUTs that we make.

It's my first time using TestStand and I want to adopt best software practices for sharing a lot of code between my other software engineers, who will each be responsible for creating TestStand scripts for a single UUT. I've identified some 'functions' that will be common across all UUTs, such as connecting two points on our switching matrix and then taking a voltage measurement with our EMS to check if it meets the limits.

The gist of my question is: what is the TestStand equivalent of a LabVIEW library for sequence calls?

Right now, what I have done is create these common/generic sequences and place them in their own sequence file called "Common Functions.seq" as a pseudo-library. This "Common Functions.seq" file is never intended to be run as a script itself; rather, the sequences inside are called by another top-level sequence that is unique to one of our UUTs.

Is this a good practice, or is there a better way to compartmentalize common sequence calls?

It sounds like you are doing it correctly. I always remove MainSequence from there too, so it will trigger an error if someone tries to run it with a model. You can also go into the sequence file properties and disassociate it from any model.

I always equate a sequence to a VI and a sequence file to an lvlib. In this analogy, a step is a node on the diagram and local variables are wires.

They just need to include this sequence file library in their build (and all of its dependencies).

Hope this helps,

-

TDMS & DIAdem best practices: what happens if my signal has breaks/gaps?

I created a LV2011 datalogging application that stores a lot of data to TDMS files. The basic architecture is like this:

Each channel has these properties:

t0 = start time

dt = sampling interval

Channel values:

1D array of DBL values

After datalogging starts, I just keep appending to the channel values. If the size of the TDMS file goes beyond 1 GB, I create a new file and continue. The application runs continuously for days/weeks, so I get a lot of TDMS files.

It works very well. But now I need to change my system to allow data acquisition to be paused and resumed. In other words, there will be breaks in the signal (probably from 30 seconds to 10 minutes). I had originally considered storing two values for each data point, like an XY chart (value & timestamp). But I am opposed to this in principle because, in my view, it fills your hard drive unnecessarily (twice as much disk footprint for the same data?).

Also, I've never used DIAdem, but I want to ensure that my data can be easily opened and analyzed using DIAdem.

My question: are there some best practices for storing signals that pause/break like that? Could I just start a new record with a new start time (t0) and have DIAdem somehow "link" these signals, i.e. know that it is a continuation of the same signal?

Of course, I should install DIAdem and play with it. But I thought I would ask the experts about best practices first, as I have no knowledge of DIAdem.

Hi josborne;

Do you plan to create a new TDMS file whenever the acquisition stops and starts, or do you want to store multiple acquisition sections in the same TDMS file? The best way to handle the date/time offset is to store one waveform per channel per acquisition section and use the "wf_start_time" channel property that comes with TDMS waveform data, whether you are wiring an orange floating-point array or a brown waveform to the TDMS Write.vi. DIAdem 2011 can easily access the time offset when it is stored in this channel property (assuming that it is stored as a date/time and not as a DBL or a string). If you have only one acquisition section per TDMS file, I would certainly also add a 'DateTime' property at the file level. If you want to store several acquisition sections in a single TDMS file, I would recommend using a separate group for each acquisition section. Make sure that you store the following channel properties in the TDMS file if you want the information to flow naturally to DIAdem:

'wf_xname'

'wf_xunit_string'

'wf_start_time'

'wf_start_offset'

'wf_increment'

Brad Turpin

DIAdem Product Support Engineer

National Instruments
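The layout described in the answer above can be sketched in plain Python, since LabVIEW code is graphical. This is only a model of the structure, not a TDMS writer: the group names, the channel name "Voltage", the sample values, and the values given for 'wf_xname' and 'wf_xunit_string' are assumptions made for illustration. Each pause/resume starts a new group whose channel carries its own start time and increment, so no per-sample timestamps need to be stored.

```python
from datetime import datetime, timedelta

def make_section(start_time, increment_s, values):
    """Model one acquisition section: samples plus the waveform channel properties."""
    return {
        "properties": {
            "wf_start_time": start_time,   # kept as a date/time, not a DBL or string
            "wf_start_offset": 0.0,
            "wf_increment": increment_s,   # sampling interval in seconds
            "wf_xname": "Time",            # assumed value, for illustration only
            "wf_xunit_string": "s",        # assumed value, for illustration only
        },
        "values": list(values),
    }

def sample_times(section):
    """Rebuild each sample's timestamp from start time, offset and increment."""
    props = section["properties"]
    return [
        props["wf_start_time"]
        + timedelta(seconds=props["wf_start_offset"] + i * props["wf_increment"])
        for i in range(len(section["values"]))
    ]

# Hypothetical file layout: one group per acquisition section, same channel name in each.
file_layout = {
    "Section 1": {"Voltage": make_section(datetime(2012, 1, 1, 8, 0, 0), 0.5, [1.0, 1.1, 1.2])},
    "Section 2": {"Voltage": make_section(datetime(2012, 1, 1, 8, 5, 0), 0.5, [1.3, 1.4])},
}

# Numbers stored per layout: the waveform form keeps one value per sample,
# while a value-plus-timestamp (XY) form would keep two.
total_samples = sum(
    len(channel["values"]) for group in file_layout.values() for channel in group.values()
)
xy_numbers = 2 * total_samples
```

The five-minute gap between the two sections is visible only in their 'wf_start_time' properties, which is what keeps the footprint at roughly half that of the XY approach the question was worried about.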

-

Best practices to increase the speed of image processing

Are there best practices for efficient image processing that will improve overall performance? I need to do near-real-time image processing (threshold, filtering, particle analysis/cleaning, and measurements) at 10 frames per second. So far I am not satisfied with my cycle time, so I wonder if there are documented ways to speed up performance.

Hello

IMAQdx is only the driver; it is not directly related to image processing. IMAQ is the vision library. This function allows you to use multiple cores for IMAQ functions to decrease processing time, since image processing is the longest task for your computer.

Regards
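Before spreading work across cores, it can help to know which stage actually exceeds the budget: 10 frames per second leaves 100 ms per frame for all stages combined. The following is a minimal Python sketch of that measurement, not IMAQ code. The stage functions and the tiny list standing in for a frame are hypothetical placeholders.

```python
import time

def frame_budget_ms(frames_per_second):
    """Time available per frame, in milliseconds."""
    return 1000.0 / frames_per_second

def time_stages(stages, frame):
    """Run each (name, function) stage in order and record its duration in ms."""
    timings = {}
    data = frame
    for name, func in stages:
        start = time.perf_counter()
        data = func(data)
        timings[name] = (time.perf_counter() - start) * 1000.0
    return data, timings

def threshold(pixels, level=128):
    """Placeholder stage: binarize a flat list of pixel values."""
    return [1 if p >= level else 0 for p in pixels]

def count_foreground(binary):
    """Placeholder stage standing in for particle analysis and measurement."""
    return sum(binary)

frame = [0, 200, 130, 90, 255]  # stand-in for real image data
result, timings = time_stages(
    [("threshold", threshold), ("measure", count_foreground)], frame
)
budget = frame_budget_ms(10)
over_budget = sum(timings.values()) > budget
```

Whichever stage dominates `timings` is the first candidate for parallel execution or a cheaper algorithm.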

-

Best practices for reading .ini files

Hello LabVIEWers

I have a pretty big application that uses a lot of hardware communication with various devices. I created an executable because the software runs at multiple sites. Some settings are currently hardcoded; others I put in an .ini file, such as the camera focus. The thinking was that these kinds of parameters may vary from one site to another and can be set by a user in the .ini file.

I would now like to extend the application to support two different hardware versions of the key device (an atomic force microscope). I think it makes sense to do so using two versions of the .ini file. I intend to create two different .ini files, and a trained user could still adjust settings there, such as the camera focus, if necessary. The other settings they should not touch. I also intend to force the user to select an .ini file via a file dialog when starting the executable, unlike now, where the (only) .ini file is read in automatically. If no .ini file is specified, the application would stop. Does this use of .ini files make sense?

My real question now is how to handle reading the .ini file. My estimate is that 20-30 settings will be stored in the .ini file. I see two possibilities, but I don't know which is the better choice, or whether I'm missing a third:

(1) (current solution) I created a read VI in which I write all the .ini values to project global variables. All other VIs only read the global variables (no other writes), to avoid race conditions.

(2) I pass the path of the .ini file to the subVIs and read the values from the .ini file as needed. I can open it read-only.

What is the best practice? What is more scalable? Advantages/disadvantages?

Thank you very much

1. I recommend just using one configuration file. You just have a key to say which type of device is actually used. This will make things easier for the user, because they will not have to keep selecting the right file.

2. I would use the globals. There is no need to constantly open a file, get values and close it when the data is the same everywhere. And since it's just a one-time read at the start, globals are perfect for this.
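The two recommendations above (one configuration file with a device-type key, read once at startup) can be sketched in text form with Python's standard configparser, since LabVIEW code is graphical. The section names, key names and values below are hypothetical, not taken from the original application.

```python
import configparser
from types import MappingProxyType

# Hypothetical file contents: a single file, with one key naming the device version.
EXAMPLE_INI = """
[device]
type = afm_v2

[afm_v1]
camera_focus = 120

[afm_v2]
camera_focus = 135
"""

def load_settings(ini_text):
    """Parse the configuration once and return a read-only mapping of the active settings."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    device_type = parser["device"]["type"]
    if device_type not in parser:
        raise ValueError(f"no section for device type {device_type!r}")
    settings = {"device_type": device_type}
    settings.update(parser[device_type])  # values stay strings, as stored in the file
    return MappingProxyType(settings)

# Read once at startup; every other module only reads from SETTINGS.
SETTINGS = load_settings(EXAMPLE_INI)
```

Wrapping the result in a read-only view mirrors the "write once, read everywhere" use of globals: an accidental write from another part of the program raises an error instead of silently creating a race.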

-

Best practices on how to document code?

Hello

I tried searching the web for tutorials or examples, but could not find anything. Can anyone summarize some of their best practices for documenting LabVIEW code? I am talking about a fairly elaborate program, built with a state machine approach. It has many subVIs. Because it is important that other people can understand my code, I guess the documentation will be quite large, but NI does not yet have a tutorial for it. Maybe a suggestion?

Thank you for your time! This forum has been a valuable companion already!

Giovanni

PS: I'm using LabVIEW 8.5 btw

Giovanni,

Always:

Fill in the "Documentation" section in the VI Properties.

Add descriptions to controls via their properties.

Label long wires.

Label algorithms, giving descriptions.

Label any code that may cause confusion later.

Use Bundle By Name when working with clusters.

Add a descriptive label to loops and cases, describing the intent of the loop/case.

Follow a good style guide, as it will make the VI easy and intuitive to read.

I'm sure there are many others I can't think of...

I suggest you buy "The LabVIEW Style Book"; if you follow what this book teaches, you will produce good code that is easy to maintain.

Kind regards

Lucither

-

Best practices - distributing dynamically called VIs with LV2011

I'm distributing code that consists of a main program that calls existing (and future) VIs dynamically, but one at a time. The dynamically called VIs have no input or output terminals. They run one at a time, in a subpanel in the main program. The main program must maintain a reference to the dynamically loaded VI, so it can be sure that the dynamically loaded VI has stopped completely before loading and calling a replacement VI. These VIs do not use shared or global variables, but may have a few subVIs in common with the main program (it would be OK to duplicate these in the VI's distribution).

In this context, what are the best practices these days for distributing dynamically loaded VIs (and their dependencies)?

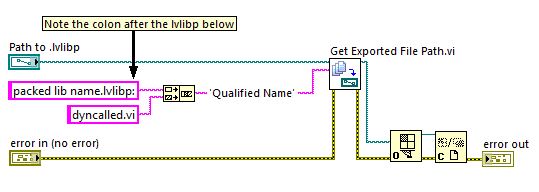

If I use a project library (.lvlib), it seems that I first have to build an .exe that contains the top-level VIs (the ones that are dynamically loaded), so that a separate .lvlib can be generated which includes their dependencies. The contents of this .lvlib and of a .lvlib containing the top-level VIs can then be merged to create a single .lvlib, and a packed library can be generated from it for distribution with the main .exe.

This seems much too involved (but necessary?)

My goal is to have one .exe for the main program and another container holding the dynamically called VIs and their dependencies. It seemed so straightforward when an .exe was really just an .llb a few years ago.

Thanks in advance for your comments.

Continuing the conversation with myself, here is the solution:

Runs like a champ. All dependencies are contained in the packed library and the dynamic call works fine.

-

Best practices for partitioning an HDD on Windows Server 2008 and Windows Server 2012

What is the best practice for partitioning an HDD on Windows Server 2008 and Windows Server 2012?

It could be worth asking at http://social.technet.microsoft.com/Forums/en-US/winservergen/threads as well, since you may get more answers there.

That said, I would say it very much depends on what you intend to do with the server, and of course on how much space you have available.

For a general server I would probably go with two volumes: the C:\ drive for the system files and a second volume for your data, for example E:\. I recommend at LEAST 30 GB for C:\, preferably 40-50 GB, since updates, patches, etc. will eat away at it over time and it's much easier to start big than to try to grow it later.

If you are running Terminal Services, you will probably need a larger C:\ volume, as a large part of the user profile data is stored there, so you can run out of space fairly easily.

As I said, it depends on what you do, how much space and how many disks you have available, etc.; there isn't really a one-size-fits-all answer.

-

Coding "best practices" question

I'm about to add several controls to my user interface, and the source code will grow accordingly. I would be interested in advice on good ways to break the code into multiple files.

I was thinking of having a source file (and a header file) for each control. Does this cause problems when editing and saving the .uir file? When I run the Code > Set Target File... command, it seems to change the target file for all objects, not only the one I am currently working on.

At the very least, I would like to have all my callback routines in a file other than the one that contains main(). Is this a good idea, a bad idea, or does it not matter? Is there anything special I need to know about this?

I guess what I'm asking is: how much freedom do I have when it comes to placing code in locations other than what the .uir editor seems to impose? Before I go down this path, I want to be sure that I'm not opening a can of worms.

Thank you.

I'm not so comfortable speaking of "best practices", maybe because I am partially a self-taught programmer.

Nevertheless, some concepts are clear to me: you are not limited in any way in how you divide your code into separate files. Personally, I have the habit of grouping panels that are used for a consistent set of functions (e.g. all the panels for test setup, all the panels for test execution/monitoring...) in a single UIR file and the related callbacks in a single source file, but this is not a rigid rule.

I have a few common callback functions that live in a separate source file; some of them, very commonly used in all of my programs, are included in my own instrument driver and installed on controls in code or in the UIR editor.

When you use the UIR Editor, you can use the Code > Set Target File... menu item to set the source file where generated code will go. This option can be changed at any time while developing, so ideally you could place a button on a panel, set a callback routine for it, set the target file, and then generate the code for this control only (Ctrl+G or the Code > Generate > Control Callbacks menu item). Until you change the target file, all generated code will go to the original target file, but you can move it to another source file afterwards.

-

Is it best practice to install Exchange Server and the domain controller on the same server?

Is it best practice to install Exchange Server and the domain controller on the same server, or to put them on separate servers?

Hello

Your Windows 7 question is more complex than what is typically answered in the Microsoft Answers forums. It is better suited for the audience on the TechNet site. Please post your question at the following link for assistance:

http://social.technet.Microsoft.com/forums

Maybe you are looking for

-

I tried to create a second user account, but it does not, he will go so far then go straight to a blue screen, then I have to Ctrl + alt + delete and then go and delete the account, I really need to understand this, because I really need a second acc

-

BlackBerry Smartphones please help me how to uninstall OS 5.0 on my BB 9700... HELP, HELP, HELP

People, I spent weeks now trying to upgrade my BB 9700 OS 6.0. I've done several security wipes (2) and even used BBSAK (even different versions) six times to get the OS 5.0 off my phone and as an OS 5.0 disease remains on the phone. The key to sol

-

DV7-w/WVista 64 bit-WIFI disabled, I can not turn

Hello I have a HP pavilion dv7 2177cl with window vista home premium, 64-bit. I was connected by cable to the internet in my friend's House when after a few minutes the WLAN appeared disabled. The switch only changed from disabled to off and vice ve

-

Substitution strings are not read

HelloI'm working on APEX 4.2.2 and Oracle 11 g.We ask a how to download attachments of a report query. We use a v ('APP_ALIAS') substitution string to the procedure, which is not playing. Is there a limitation that a procedure should be called from p

-

I need to add a time table for a gym to a Web site and the owners of the gym want to be able to modify content information as it changes regularly. A bit like this:http://www.clubwoodham.co.UK/timetable/main/StudioDoes anyone have ideas on how best t