PCI-6052E sampling frequency is limited

I'm trying to measure two channels in LabVIEW 8.2, using a PC with an NI PCI-6052E card that is capable of 333 kS/s. With two channels, each channel should be able to reach 333 kS/s / 2 = 166.5 kS/s. Every time I try to set the sampling frequency above 94339 Hz, I get the following warning and the VI no longer works.

Warning 200012 occurred at DAQmx Start Task.vi:1

Possible reasons:

Clock rate specified is greater than the maximum ADC conversion rate. ADC overrun errors are likely.

If I create a similar task in Measurement & Automation Explorer, I can easily measure two channels at 160 kHz without error. Above 166 kHz I start getting the same warning (200012). The code I use first calls DAQmx Create Channel.vi, then DAQmx Timing.vi, and finally DAQmx Start Task.vi. Everything works fine and I have taken measurements, but I can't go above a 94339 Hz sampling rate even though it should be possible.

I have looked into it and I can't understand what the issue is.
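The per-channel arithmetic in the question can be checked with a short sketch. It assumes the card multiplexes all channels through a single ADC, so the aggregate rate is shared equally between channels; the function name is illustrative and is not part of any NI API.

```python
def max_per_channel_rate(aggregate_rate_hz: float, n_channels: int) -> float:
    """Upper bound on the per-channel rate when one ADC is shared (assumed)."""
    if n_channels < 1:
        raise ValueError("n_channels must be at least 1")
    return aggregate_rate_hz / n_channels


limit = max_per_channel_rate(333_000, 2)
print(limit)             # 166500.0
print(94_339 <= limit)   # True: the failing rate is below the nominal limit
```

This only confirms that 94339 Hz is well under the nominal two-channel limit; it does not explain why the VI is being limited.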

Dear Voyn,

Thank you very much for your message on our forum. If you create a task for a single channel, can you get a higher sampling rate? Are you able to reproduce the same problem with an example from the Example Finder? Go to Hardware Input and Output > DAQmx > Analog Measurements > Voltage > Cont Acq&Graph Voltage-Int Clk.vi

You can select several channels there as well. If it shows the same behavior, which DAQmx driver version are you using?

Best regards


Similar Questions

-

PCI-6111 valid sampling frequencies

We have an old PCI-6111 card that we still use. A question came up regarding which sampling frequency settings are valid.

According to the manual, this card has base clocks of 20 MHz and 100 kHz. There is also a 24-bit divisor and (I'm not sure about this) a 2-bit multiplier. I assume these are used to scale the base clock to the appropriate frequency.

What sampling rates can be set on this card? It is specified at 5 MS/s, so that would be the upper limit. Can I set any arbitrary (integer) frequency below this? Or do I need to work out explicitly what it is capable of? With a 24-bit divider, this would imply that there is a wide range of frequencies that don't "work" on this card.

What happens when I select a frequency that is "bad"? Does it round up or round down?
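To make the divider question concrete, here is a minimal sketch. It assumes the sample clock is produced by dividing a base clock by an integer divisor of up to 24 bits, as the question supposes; whether the driver actually rounds a request up or down is not stated in this thread, so the function (a hypothetical helper, not an NI API) simply returns both neighbors.

```python
def neighboring_rates(timebase_hz: float, requested_hz: float, divisor_bits: int = 24):
    """Return (rate at or just below, rate at or just above) the requested rate,
    assuming rate = timebase / integer divisor."""
    max_div = 2 ** divisor_bits - 1
    exact = timebase_hz / requested_hz
    low_div = min(max(int(exact), 1), max_div)   # smaller divisor -> higher rate
    high_div = min(low_div + 1, max_div)         # larger divisor -> lower rate
    above = timebase_hz / low_div
    below = above if above == requested_hz else timebase_hz / high_div
    return below, above


print(neighboring_rates(20_000_000, 1_000_000))  # exact divisor of 20
print(neighboring_rates(20_000_000, 3_000_000))  # falls between divisors 6 and 7
```

Under this assumption, a request such as 3 MHz cannot be met exactly from a 20 MHz base clock, because it falls between 20 MHz / 7 and 20 MHz / 6.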

Hello Isaac, this is Paul with Applications Engineering at NI.

This device offers 2 simultaneously sampled analog inputs at 5 MS/s per channel; the 2 AI channels can each run at up to 5 MHz. You can only have one clock frequency for your AI tasks, but you can set this clock frequency anywhere from 1 kHz to 5 MHz. Note that most of our cards allow AI down to 1 Hz, but this one is different because it uses a pipelined ADC. In addition, since this card samples simultaneously, the two analog channels are read at exactly the same time (in parallel). Also, the AI value is a 12-bit number.

-

High speed continuous measurement of encoder with sampling frequency of 1 kHz

I am continuously measuring the position of a linear encoder using a PCI-6602 counter card, and I need to know how to set it up so that the counter runs at high speed but the data is inserted into the buffer at a frequency of 1 kHz. The encoder is attached to a hydraulic cylinder, and I am not concerned with recording events at high frequency, except to the extent that the count is thrown off considerably if the hardware does not run fast enough to detect all of the encoder pulses.

Right now, I think that the external sample clock signal (an internally routed counter pulse output) controls both the rate at which the hardware detects the encoder pulses and the rate at which it inserts data into the buffer. With a 100 pulse-per-inch encoder and a sampling frequency of 1 kHz, the final extended position of the cylinder is off by +/-0.15 inches, which is unacceptable.

I need to calculate a velocity from this information, so I would prefer not to use software-timed sampling to control this (it would also make the programming more difficult for other reasons, such as several asynchronous measurements). Any ideas on how to configure the hardware to count faster than the rate at which it inserts counts into the buffer?

OK, you're clearly on the right track here, so I will focus on some details.

1. How do you know that the +/-0.15" differences are *measurement* error rather than *motion* error? Why couldn't it be an accurate measurement of a motion that varies slightly from the nominal value?

2. I wonder about electrical noise and glitches that may produce false edges. The fact that the behavior was better when using a limited sampling rate (200 kHz) on the digital inputs may mean that some of these glitches were so short that they were never captured.

I have done a ton of work with the 6602 and encoders, and I can certainly confirm that the counting hardware is sensitive to edges at a few tens of MHz. (I know it is 80 MHz for edge counting, but I think I remember that it may be on the order of 20 to 40 MHz for encoders, to accommodate the extra signal propagation time of the quadrature decoding circuit.)

A small point of clarification. You are talking about the speed at which the counter "runs". The count value is a register whose value is changed entirely by the circuit, *independent* of the sampling frequency. Whether you sample with hardware-clocked buffered counting or with unbuffered software polling does not matter one bit to the circuitry that increments/decrements the count register. (In other words, I am completely convinced that you would get the same end position even if you took only 1 software-polled sample after the end of the move instead of sampling at 1 kHz all the way through.)

So, if the count value is off, it is because the circuit is detecting count-producing edges that shouldn't be there. Something you can try is to set up digital debounce filtering for the PFI input lines corresponding to the encoder inputs (Source and the others).

-Kevin P.
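Since the original poster wants a velocity from the 1 kHz buffered counts, the calculation can be sketched as below. It assumes one count per encoder pulse (100 counts per inch, no X2/X4 decoding) and evenly spaced hardware-timed samples; the function name and the sample data are hypothetical.

```python
def velocities_in_per_s(counts, counts_per_inch=100, sample_rate_hz=1000):
    """Finite-difference velocity between consecutive buffered count samples."""
    return [
        (later - earlier) * sample_rate_hz / counts_per_inch
        for earlier, later in zip(counts, counts[1:])
    ]


# Four consecutive samples of the count register, taken 1 ms apart (made-up data).
print(velocities_in_per_s([0, 1, 3, 6]))  # [10.0, 20.0, 30.0]
```

Because the count register keeps tracking edges between samples, the 1 kHz sample clock only sets the time resolution of this velocity estimate, not the accuracy of the position itself.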

-

PXI-6255 AI sampling frequency

Hello

We are using a simulated PXI-6255 device, and with the LabVIEW example it appears that we can achieve a sampling rate of 20 kHz on 50 channels without warnings or errors.

According to the spec, the maximum number of channels at 20 kHz should be approximately 37 (750000 / 20000 = 37.5).

Single channel: 1.25 MS/s

Multichannel: 750 kS/s (aggregate)

Is the simulation not representative of the real device, or does the real PXI-6255 support a higher rate than we expect?

Hi jharris66,

The driver does not limit the sampling frequency here, so a simulated device does not report any error in this case.

In this case, I think the 750 kHz limit comes from the multiplexer. I wouldn't expect to see error messages with the real device either, but your accuracy will probably not be to spec because you are not allowing enough settling time between channels.

The 1.25 MHz limit is the maximum ADC sampling frequency. You can probably exceed this number somewhat, but once you go too far beyond 1.25 MHz you will eventually receive a timing error from the hardware when the ADC cannot keep up.

Thus, the specification is still technically 750 kHz for multichannel acquisitions. You can probably run at a higher rate than this (even on real hardware), but I wouldn't say that it is supported.
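The numbers in this thread can be checked with a small sketch. The two limits are the ones quoted above (750 kS/s aggregate for multichannel, 1.25 MS/s for the ADC); the function names are illustrative only.

```python
MULTICHANNEL_LIMIT_HZ = 750_000   # aggregate multichannel spec quoted above
ADC_LIMIT_HZ = 1_250_000          # single-channel (ADC) limit quoted above


def max_channels(per_channel_hz: int, aggregate_limit_hz: int = MULTICHANNEL_LIMIT_HZ) -> int:
    """Largest whole number of channels that stays within the aggregate limit."""
    return aggregate_limit_hz // per_channel_hz


def aggregate_rate(n_channels: int, per_channel_hz: int) -> int:
    return n_channels * per_channel_hz


print(max_channels(20_000))                         # 37
total = aggregate_rate(50, 20_000)
print(total)                                        # 1000000
print(total > MULTICHANNEL_LIMIT_HZ, total <= ADC_LIMIT_HZ)  # True True
```

So 50 channels at 20 kHz is above the multichannel spec but still below the ADC limit, which is consistent with the reply's point that it may run without a hardware error while being out of spec for settling.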

Best regards

-

How to change the DAQmx sampling frequency

Hello

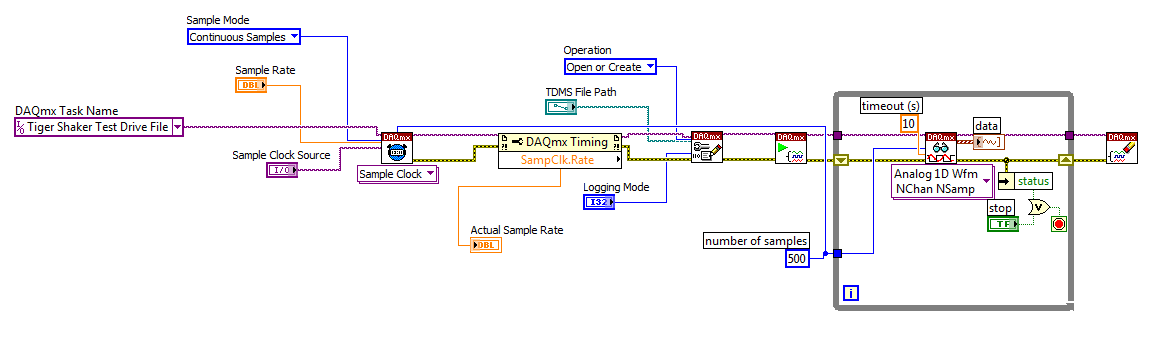

I'm trying to stream channels (21 acceleration and 1 voltage) using a DAQmx task, then write the data to a TDMS file. The program runs and writes to the TDMS file just fine. The issue I'm having is that I can't change the sampling frequency. I want to record at 500 samples/s, and I cannot get the "actual sampling rate" to change from 1651.61 samples/s. I tried using the sample clock to do this, without success. I also tried changing the "Timing" settings in the task, without any luck. Here is a screenshot of the VI I created. I've also attached a copy of the VI file. Any help would be greatly appreciated!

Thank you

Tony

You will need to provide the model of your device. You can also look in the datasheet/manual to see which sampling rates are actually supported. Some devices have limited rates.

-

Why is my PCI-6052E configured as both Traditional DAQ and DAQmx in MAX?

I have a three-year-old computer with 1 GB of RAM and a 3.00 GHz processor running Windows Server 2003. It was previously used for motion control experiments and has a 7333 motion control card and a PCI-6052E. Now all I want to do is sample data from a pressure sensor and a flow meter using the PCI-6052E, but for some reason this card is configured in MAX as both a DAQmx device and a Traditional DAQ (Legacy) device. Unfortunately, when I write the LabVIEW 8.2 VI, the only available data acquisition tools are DAQmx, and in MAX my two instruments are installed under Traditional DAQ.

If there is only one PCI-6052E card in the computer, why does MAX show a PCI-6052E called Dev3 under DAQmx and a PCI-6052E called Dev1 under Traditional DAQ? How can I fix this?

Cpesko,

I am glad to hear you have a few things working under Windows Server 2003. However, we do not support any software under this OS except what is listed here.

DAQmx and Traditional DAQ are 2 different drivers, and it is expected behavior that devices supported by both appear under the heading of each driver.

-

AI sampling frequency is incorrect for simulated ENET-9213, WLS-9213, and USB-9213

Hello

With simulated ENET-9213, WLS-9213, and USB-9213 devices, I am able to correctly get the AI sampling frequency of 1351 samples/s using DAQmxGetDevAIMaxSingleChanRate, which is incidentally above the spec'ed value of 1200 S/s.

However, when I create a task, add an AI voltage channel, and then query the task's sampling frequency, I get a sampling rate of only 9 samples/s. I tried the same code with other devices and got the sampling frequency corresponding to the device data sheet, so it seems that this problem is limited to 9213 devices.

Why does DAQmxGetSampClkMaxRate on the task return a sampling rate of only 9 S/s?

And why is the conversion rate from DAQmxGetAIConvRate only 18 S/s?

I enclose the test code which may be used to reproduce this problem.

Kind regards

whemdan

The MathWorks

Hello

When I tried this with a simulated USB-9213, I used the Sample Clock Max Rate property as well as the AIConvert:Max Rate property of the property node. I could see that for 1 channel I could go up to 675.67 S/s, and for 16 channels I could get 79.49 S/s per channel (which totals 1271 S/s, which is within the specifications). For both multichannel and single channel, I could get an AIConvert Max Rate of 1351.35.

Something that could be happening is that you are not explicitly setting the device to run in high-speed mode. You'll want to set the AI_ADCTimingMode channel property (Get/Set/Reset) to High Speed, and you should see much better results that way.

Something else to note: I am using DAQmx 9.0.
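The three figures quoted in the reply (1351.35, 675.67, and 79.49) happen to fit a simple pattern: the per-channel rate equals the convert rate divided by the number of channels plus one. The sketch below only reproduces that arithmetic. The "one extra conversion per scan" is an inference from the quoted numbers, not something stated in this thread or confirmed against NI documentation.

```python
def per_channel_rate(convert_rate_hz: float, n_channels: int, extra_conversions: int = 1) -> float:
    """Per-channel sample rate if each scan costs n_channels + extra conversions.

    extra_conversions=1 is an assumption chosen to match the figures in the reply.
    """
    return convert_rate_hz / (n_channels + extra_conversions)


AI_CONVERT_MAX_RATE = 1351.35  # value reported in the reply

print(per_channel_rate(AI_CONVERT_MAX_RATE, 1))    # close to the quoted 675.67
print(per_channel_rate(AI_CONVERT_MAX_RATE, 16))   # close to the quoted 79.49
```

If the same relation holds in the original poster's case, a 9 S/s sample rate with an 18 S/s convert rate on one channel would fit it too, just with a much slower convert rate than the high-speed mode gives.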

-

Cannot set the sampling frequency < 1609

Hello

We have recently upgraded to LV 8.6 and DAQmx 8.7 and now have a problem with data acquisition that uses the DAQmx API. For example, we have a cDAQ-9172 and a 9239 AI module. The device can be specified by the user, and a typical configuration could be a continuous acquisition, one sample at a time, at 10 Hz. After the upgrade, error 200279 "Attempted to read samples that are no longer available... overwritten" appeared shortly after the task was started. It turned out that the SampClk.Rate property is not affected by the value passed to DAQmx Timing.vi unless it is set > 1612.9; if you set 10, 100, 1000, or whatever, the sampling frequency will always be 1612.9 when you read it back from the timing property.

The buffer then of course overflows, but the question is why there is a minimum sampling frequency like this. Previously, it was fine to set an arbitrary value and the acquisition would run at that rate.

There are plenty of ways to work around this (reading faster, etc.), but it is strange that the behavior of the code can change from one version to the next like that...

/ Henrik

I see a flaw in your program: you have hardware timing and software timing in the same loop. The loop is limited by the software wait. (I think that is on purpose for the demonstration.)

I looked at the manual for the 9239, and on page 18 I noticed that the minimum input rate is 1.613 kS/s.

So that explains it; the only problem is that DAQmx Timing.vi does not return an error or a warning when you set a rate that is too low.

Tone
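The 1612.9 S/s floor can be reproduced with a short sketch. It assumes the module's data rates follow fs = (fM / 256) / n, with a 12.8 MHz internal master timebase fM and n = 1..31; that formula is my recollection of the 9239 documentation and should be checked against the manual. Coercing a too-low request up to the nearest supported rate is likewise an assumption, chosen because it matches the behavior described in the thread.

```python
MASTER_TIMEBASE_HZ = 12_800_000  # assumed internal master timebase


def supported_rates():
    """Data rates under the assumed formula fs = (fM / 256) / n, n = 1..31."""
    return [MASTER_TIMEBASE_HZ / 256 / n for n in range(1, 32)]


def coerce_rate(requested_hz: float) -> float:
    """Smallest supported rate at or above the request (or the maximum rate)."""
    rates = supported_rates()
    candidates = [rate for rate in rates if rate >= requested_hz]
    return min(candidates) if candidates else max(rates)


print(round(coerce_rate(10), 1))   # 1612.9
print(coerce_rate(50_000))         # 50000.0
```

To log at 10 Hz with such a module, one option is to acquire at a supported rate and average or decimate the samples in software.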

-

Setting the sampling frequency for analog output of a sine wave

Hello

I tried to do something very simple: using a PCI-6221 analog output to produce a 50 Hz sine wave. For this I used a VI to create the sine wave and various DAQmx VIs. But I have trouble understanding the principle of the virtual channel, and I think I am making an error in setting the sampling frequency and number of samples: once for the sine VI, and a second time for "DAQmx Timing". Can I use the same values for both of these VIs?

On my oscilloscope, with frequency = 50 Hz and sampling frequency = 1 kHz, I get a null signal. Then, depending on the two values, I get differently scaled signals. For example, with f = 1 Hz and sr = 10 kHz, I get a 0.7 Hz sine.

Make sure that the analog input task starts after the analog output task. By wiring the error wire first to the analog output Start Task and then to the analog input Start Task, you guarantee that the AI cannot start until after the AO has started.
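On the question of whether the waveform generator and the DAQmx timing should use the same sampling frequency, the sketch below illustrates why matching them matters. It is plain Python, not LabVIEW or DAQmx code, and the function names are illustrative: a buffer computed for one sample rate and played back at another comes out at a proportionally different frequency.

```python
import math


def sine_buffer(frequency_hz, sample_rate_hz, amplitude=1.0, cycles=1):
    """Samples of a sine wave computed for a given generation sample rate."""
    n_samples = round(cycles * sample_rate_hz / frequency_hz)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz)
        for i in range(n_samples)
    ]


def played_frequency(frequency_hz, generated_rate_hz, output_rate_hz):
    """Frequency seen at the output if the buffer is clocked out at output_rate_hz."""
    return frequency_hz * output_rate_hz / generated_rate_hz


buffer = sine_buffer(50, 1000)
print(len(buffer))                        # 20 samples per 50 Hz cycle at 1 kHz
print(played_frequency(50, 1000, 1000))   # 50.0 when both rates match
print(played_frequency(50, 1000, 2000))   # 100.0 when the output clock is twice as fast
```

The buffer should also hold a whole number of cycles if it is regenerated continuously; otherwise there is a discontinuity each time it wraps.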

-

How to acquire with NI-SCOPE at different sampling frequencies and record lengths?

I need to acquire data from 2 channels of an NI PXI-5114 card at two different high sampling frequencies at the same time. I also need to set 2 different record lengths. Is this possible?

I understand that 'Vertical' settings can be configured for individual channels because the 'niScope Configure Vertical' function has a 'channels' input with which we can assign the desired channel. But for horizontal settings such as "min sample rate" and "min record length", I could not find such an option to specify the channel. Are these settings then common to both channels?

I hope that the device is capable of simultaneous sampling and therefore channels can be configured individually to different sampling rate.

Hi AJ_CS,

Why do you need different sampling frequencies on separate channels of the same digitizer? What different sampling rates do you want?

But for horizontal settings such as "min sample rate" and "min record length", I could not find such an option to specify the channel. Are these settings then common to both channels?

You do not have an option to configure the horizontal settings on a channel-by-channel basis because this concept does not exist in the traditional use of a scope. Consistent with the IVI concept, a traditional benchtop oscilloscope has only one knob or set of knobs for setting the timing parameters of the unit. There is therefore no per-channel horizontal configuration on NI digitizers.

I hope that the device is capable of simultaneous sampling and therefore channels can be configured individually to different sampling rate.

Similar to a traditional benchtop oscilloscope, the device is capable of simultaneous sampling. But as mentioned above, the channels cannot be configured for different sampling frequencies.

However, you can discard data that you consider not relevant. For example, if you want CH0 at 100 MS/s and CH1 at 50 MS/s, acquire both at 100 MS/s and then throw away every other sample on CH1.

Alternatively, you can use separate digitizers (one channel on each digitizer) and configure them to sample at different rates. You can set different sampling frequencies on separate NI digitizers and even synchronize them with TClk.
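Discarding every other sample, as suggested above, is a one-line operation once the record has been fetched. This is a minimal sketch in plain Python with a hypothetical helper name; it simply drops samples and applies no anti-alias filtering.

```python
def keep_every(samples, factor):
    """Keep one sample out of every `factor`, starting with the first."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return samples[::factor]


ch1_at_100ms = [10, 11, 12, 13, 14, 15, 16, 17]   # made-up record at 100 MS/s
ch1_at_50ms = keep_every(ch1_at_100ms, 2)
print(ch1_at_50ms)   # [10, 12, 14, 16]
```

The same slicing gives different effective record lengths per channel, since a shorter record is just the first part of the longer one.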

-Andrew

-

How to acquire a signal at a very high sampling frequency

Hello everyone

My name is Luke Ho. I am trying to acquire a signal with LabVIEW (Sthelescope). The signal comes from an acoustic sensor, then goes through filters and amplifiers to fit the ADC range (0 - 5 V). The maximum frequency of the signal is 40 kHz.

According to the Nyquist theorem, I must sample the signal at 80 kHz or more.

Are there devices with a sampling frequency like that? Or is there another, better way? I used an Arduino before, but it only reached about 10 kHz.

I need your advice.

Thank you all and have a nice day.

holucbme wrote:

Thanks for your recommendation

But is it possible without USB data acquisition? It is quite expensive for me.

This is the cheapest option from NI. I tried to look for options from other companies, but most of what I found was either in the same price range or did not meet your sample rate requirement.

-

Xincrement does not agree with sampling frequency

I am using two PXI-5114 digitizers that are synchronized. I am trying to take 5 seconds' worth of data. I have sample rate = 1e3 and record length = 5e3. I get only about 1.3 seconds' worth of data. I looked at the actual record length and actual sample rate outputs, and they showed the same values as set on the front panel. I then looked at the wfm info output of niScope Multi Fetch Cluster.vi, and it had the same record length, but the Xincrement value is 262e-6. This fits with what I get. Any ideas?

Thanks in advance.

Hi AT1,

Thanks for posting. What you are seeing is due to the fact that the minimum sampling rate of the PXI-5114 is 3.81 kS/s. The digitizer will coerce any value less than 3.81 kS/s up to this value, so a record of 5000 samples takes 1.31 seconds to acquire. If you are looking to acquire 5 seconds' worth of data, I recommend increasing the record length to about 19,050 samples, which should be about 5 seconds' worth of data. Let me know if this would work for your application.

Kind regards

Joe S.
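The figures in this reply follow directly from the 3.81 kS/s minimum rate, as the sketch below shows. The minimum rate is the value quoted above; the helper names are illustrative and are not NI-SCOPE functions.

```python
import math

MIN_SAMPLE_RATE_HZ = 3810.0  # minimum rate quoted in the reply


def effective_rate(requested_hz: float) -> float:
    """Requested rate, coerced up to the minimum if it is too low."""
    return max(requested_hz, MIN_SAMPLE_RATE_HZ)


def record_duration_s(record_length: int, requested_hz: float) -> float:
    return record_length / effective_rate(requested_hz)


def record_length_for(duration_s: float, requested_hz: float) -> int:
    return math.ceil(duration_s * effective_rate(requested_hz))


print(round(record_duration_s(5000, 1000), 2))   # 1.31
print(record_length_for(5, 1000))                # 19050
print(1 / effective_rate(1000))                  # about 262e-6, matching Xincrement
```

Checking Xincrement against 1 / requested rate is a quick way to detect that a rate has been coerced.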

-

How to specify the sampling frequency? Must I use "Measurement & Automation Explorer"?

I am measuring analog input current using an NI cDAQ-9171 (single-slot USB chassis) and an NI 9207. I have NI-DAQmx and LabVIEW 2010 installed.

How can I specify the sampling frequency?

If I use Measurement & Automation Explorer to create the task, I can specify the rate (Hz) on the Configuration tab -> Timing Settings.

For NI data acquisition, is it mandatory to use Measurement & Automation Explorer?

If I don't want to use Measurement & Automation Explorer, how can I specify the rate (Hz)?

Hello

You can specify the sampling frequency with "DAQmx Timing.vi", located in the DAQmx function palette (read the context help on how to use it properly).

You do not have to use Measurement & Automation Explorer (MAX) to create a task.

A simple and quick way is to use the DAQ Assistant (same configuration as in MAX) to configure your measurement.

Another is to use DAQmx function blocks to manually build your application code.

In my experience the DAQ Assistant is ideal for simple tasks (a single measurement), as opposed to more complex measurements (synchronized analog and digital inputs).

I tend to use function blocks because they give you more freedom over code execution.

Note: You can also generate DAQmx code from a task configured in the DAQ Assistant.

Best regards

Matej

-

I have included my code as version 8.5 for those who have not yet upgraded to 8.6. I have also included some screenshots so that you can replicate the results I got. I hope that some signal processing guru can shed light on what I describe below.

This VI convolves the impulse response of a simulated servomotor, which is essentially a damped sine, with the input signal, which is a step function. The resulting convolved signal should be IDENTICAL to the step response of the motor, which is shown in RED on graph 1. As you can see, the convolution result in graph 2 shows the same frequency structure, but its magnitude is INCORRECT. As you can see in the 2 screenshots, the magnitudes differ by a factor of 2, and so does the sampling frequency of the waveform. Why the sampling frequency affects the scale is also very strange and disturbing.

I would appreciate any corrections and explanations so that I can trust the convolution for input waveforms other than just the step function.

OK, I think I have it working now. Your premise about the effect of sampling on the derivative is not the issue. What the sampling frequency does affect is the time base of the convolution. Because the convolution is discrete rather than continuous, the length of the array must be taken into account, and the sampling frequency must be consistent with this array length so that 1 second corresponds to 1 second. If the sampling frequency is 2 kHz and the array length is 1000, then a factor of 2 must be applied to get the correct time base. In addition, to account for the DC offset, the zero-frequency gain factor must be added to the convolved signal to get the correct magnitude.

Thanks for making me think more deeply.
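The dependence of the magnitude on the sampling frequency can be reproduced with a small sketch. It is not the poster's VI: it uses a simple decaying exponential as the impulse response instead of a damped sine, and it applies the standard approximation of the continuous convolution integral by a discrete sum multiplied by the sample period dt = 1/fs. Without that factor, doubling the sampling frequency roughly doubles the result.

```python
import math


def convolve(x, h):
    """Plain discrete convolution (full length)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def step_response(fs_hz, duration_s=1.0, scale_by_dt=True):
    """Step response of h(t) = exp(-t), computed by discrete convolution."""
    dt = 1.0 / fs_hz
    n = int(duration_s * fs_hz)
    h = [math.exp(-k * dt) for k in range(n)]   # assumed impulse response
    u = [1.0] * n                               # unit step input
    scale = dt if scale_by_dt else 1.0
    return [value * scale for value in convolve(u, h)[:n]]


expected = 1.0 - math.exp(-1.0)   # analytic step response near t = 1 s
for fs in (200, 400):
    scaled = step_response(fs)[-1]
    raw = step_response(fs, scale_by_dt=False)[-1]
    print(fs, scaled, raw, expected)
```

With the dt factor, both sampling frequencies land close to the analytic value; the unscaled results differ by about a factor of 2, as in the screenshots described above.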

-

Synchronization of the inputs and outputs with different sampling frequencies

I'm relatively new to LabVIEW. I have an NI myDAQ, and I am trying to accomplish the following:

Square wave output 10 kHz, duty cycle 50%.

Input sampling frequency of 200 kHz, synchronized with the output so that I get 20 analog input samples per square wave period and know which samples align with the high and low output of my square wave.

So far, I have used a counter to create the 10 kHz square wave, output on a digital output line. I tried to follow the document at (http://www.ni.com/white-paper/4322/en), but I'm not sure how to sample at a different rate than my clock pulse. It seems that this example is intended to sample one analog input per clock pulse. Is there a way to create a faster clock (200 kHz) in software and use it to synchronize the analog input acquisition as well as the slower 10 kHz square wave output generation?

I will eventually have to use the analog inputs to acquire data and an analog output channel to write data, so I need the square wave pulse output on a digital pin.

How could one do this in LabVIEW?

Hi Eric,

All subsystems (AI, AO, CTR) derive their clocks from the STC3, so they don't drift, but in order to align your AI sample clock with the pulse train that you generate on the counter, you'll want to trigger one task off the other. I would start with a few examples from the Example Finder > Hardware Input and Output > DAQmx. You can trigger the AI off the pulse train. For the counter, start with Gen Dig Pulse Train-Continuous.vi; you probably already use a VI like this to generate the 10 kHz pulse train. For AI, start with an example like Cont Acq&Graph Voltage-Ext Clk-Dig Start.vi; you'll want to use the internal clock, so just remove the "Clock Source" control and it will use the internal clock. From there, simply set the "Trigger Source" to either the PFI line the counter outputs on, or '/<device>/Ctr0InternalOutput', assuming that you are using counter 0. You'll want to make sure that the AI task is started before the counter task so that the AI is ready to trigger off the first pulse. They should be aligned at this point. For debugging, you can use DAQmx Export Signal to export the sample clock; you can then scope the PFI line and the pulse train to make sure that they are aligned.

Hope this helps,

Andrew S
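Once the AI task is triggered off the first pulse, mapping each sample to the high or low half of the square wave is simple index arithmetic. The sketch below assumes that sample 0 coincides with a rising edge and that there is no delay between the trigger and the first sample; the function names are illustrative.

```python
def samples_per_period(sample_rate_hz: int, square_wave_hz: int) -> int:
    """Number of AI samples per square wave period (must divide evenly)."""
    count, remainder = divmod(sample_rate_hz, square_wave_hz)
    if remainder:
        raise ValueError("sample rate is not an integer multiple of the square wave frequency")
    return count


def output_is_high(sample_index: int, n_per_period: int, duty_cycle: float = 0.5) -> bool:
    """True if the sample falls in the high part of the period (assumed alignment)."""
    return (sample_index % n_per_period) < duty_cycle * n_per_period


n = samples_per_period(200_000, 10_000)
print(n)                                           # 20
print([output_is_high(i, n) for i in range(n)])    # 10 True values, then 10 False
```

Samples taken right at an edge may catch the signal mid-transition, so in practice it can be safer to ignore the first sample or two after each edge.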
