Optimizing a LabVIEW VI

Hello

I want to optimize this code (HMI Pop Up Alpha KB).

This is a sample VI, but it is not very optimized.

This VI simulates an alphanumeric keyboard intended for use on a touchscreen PC. The input control can be set to standard or password display.

PS: I do not think I can do more to optimize it myself.

Thank you

Remove the timeout. Place the code that must run before the data entry loop (ready for this?...) in front of (i.e. outside and to the left of) the data entry loop. The error line lets you connect these pieces in series; then bring the error line into the data entry loop.

The error line is your friend: use it! Each subVI should have Error In and Error Out wired to the connectors at the bottom left and bottom right. Error lines should run through your code, and they should be used.

In HMI Pop Up Alpha KB, you have the Current VI's Path wired to Open VI Reference. The VI Server 'This VI' reference does, in my view, the same thing.

Don't use local variables. In HMI Pop Up Alpha KB, replace the tunnel carrying the text in the While loop with a shift register and wire the text indicator to it. It will then update automatically, eliminating the need for local variables (bad). Note that you need to run the text wire through the Event structure as well, so you can update it from the KB Driver when necessary.

Bob Schor

Tags: NI Software

Similar Questions

-

What display size is LabVIEW 2012 optimized for?

Hello

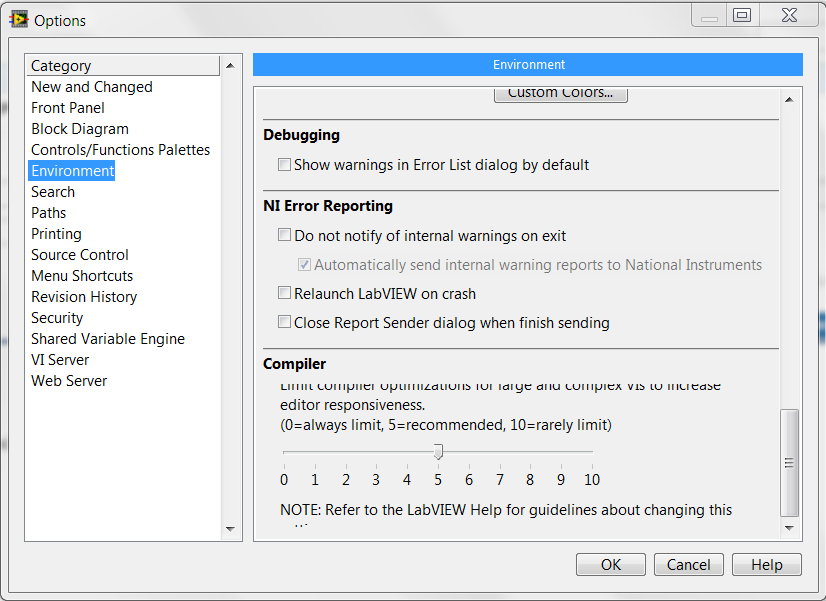

I have a desktop tower with a high-resolution display (1920 x 1080). After I installed LabVIEW 2012, some dialogs, e.g. Tools -> Options (see image), do not show all of their text completely.

Also, when I open a VI designed for a lower-resolution display, labels and controls overlap.

Can someone tell me whether this is due only to the display resolution, or also to LabVIEW? And what display size is LabVIEW 2012 optimized for?

Regards

Hi, I found the problem. The text size (DPI) in my Windows display settings had been set to 120 DPI. After I changed it to 96 DPI, text and labels returned to their normal positions.

-

Why is C code so much faster? (Image processing)

Hello!

I'm working on a larger application, but I reduced it to show you a problem I ran into at the beginning. I have attached all the files needed to run it.

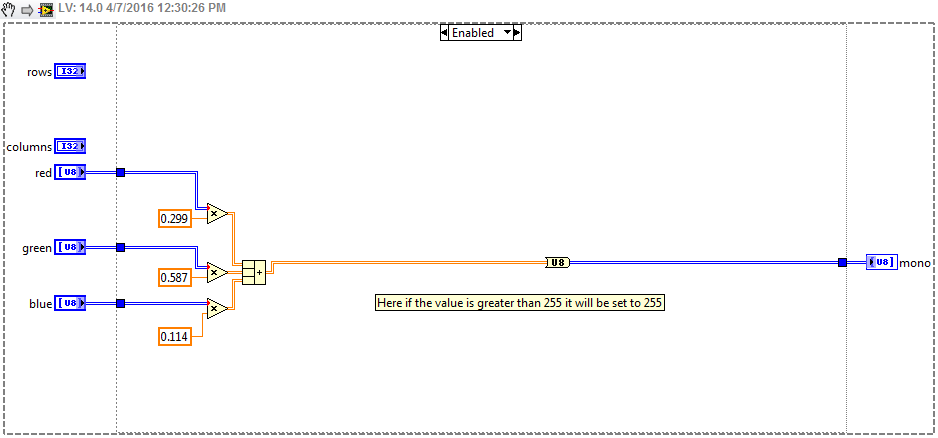

It is a simple rgb2monochrome algorithm that I implemented both in C code and in LabVIEW code. I do not know why the C code is 4 times faster.

In the C code I pass arrays by pointer, so I work on a given memory area. I thought LabVIEW might be slower because it creates unnecessary copies of arrays. I tried to solve this with the In Place Element Structure and with data value references, but with no effect.

I also tried changing tunnels to shift registers in some places (as I read in Optimizing LabVIEW Embedded Applications). I changed options in the subVIs' execution properties and I built an EXE to see whether it would be faster. Unfortunately I still cannot reduce the difference in execution time.

I know that my algorithm could be better optimized, but that isn't the main problem. Right now the two algorithms are implemented in the same way (you can check in the cpp file), so they should have similar execution times. I think I made a mistake in the LabVIEW code, maybe something with memory management?

And one more thing... Maybe there is nothing wrong with the LabVIEW code, but something is too good in the C code? It is a 64-bit library, implemented in the usual way without forcing parallelism. Moreover, I set the call to run in the UI thread in the CLFN. But perhaps the CPU nevertheless runs this function on multiple cores? I have 4 cores, so the difference in execution time would fit. But that's impossible, right?

I know it is said that LabVIEW is sometimes slower, but by approximately 15%, not 4 times. So I must have made a mistake... Does anyone know what kind?

Kind regards

ksiadz13

Well, here are a few things that make the LabVIEW code faster. There are other improvements that could be made, but I would need to run several tests to see whether they are better for your input data. First of all, I would set the VI to inline, not subroutine. Also, I'd work with arrays of data instead of scalar values. Also, you know the number of rows and columns because it is the size of the array, so why keep this information? Oh, and if you are working with loops you can try parallel For Loops to work on more than one processor at a time.

And also, I'm not sure that all this work is necessary at all. If you have a double and convert it to a U8, it will be 255 if the value was greater than that to start with. Attached is an updated version with several possible options to try.

Oh, and another improvement: why are you even unbundling the data in Unbundle_imageCPU1.vi? Why not work with the 2D array of Red Green Blue? I realize that this VI is called in both the LabVIEW and the C code options, but with LabVIEW, just working on the other data could make it faster overall, especially given that you bundle it again after you're done anyway.
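To illustrate the point about the U8 conversion, here is a minimal text-language sketch of a monochrome conversion that relies on a saturating cast instead of explicit range checks. It is not the poster's algorithm: the function names are hypothetical and the luma weights (ITU-R BT.601) are an assumption, since the thread does not say which weights were used.

```python
# Assumed luma weights (ITU-R BT.601); the thread does not state the ones used.
WEIGHTS = (0.299, 0.587, 0.114)


def to_u8(value):
    """Round to nearest and saturate into the 0..255 range of a U8."""
    return max(0, min(255, round(value)))


def rgb_to_mono(pixels):
    """Convert a 2D array of (r, g, b) tuples into a 2D array of U8 gray values.

    The whole array is processed in one pass; no per-pixel bounds
    bookkeeping is needed because to_u8 already saturates.
    """
    return [
        [to_u8(sum(w * c for w, c in zip(WEIGHTS, px))) for px in row]
        for row in pixels
    ]


if __name__ == "__main__":
    image = [[(255, 255, 255), (0, 0, 0)], [(10, 20, 30), (300, 300, 300)]]
    print(rgb_to_mono(image))
```

An out-of-range input such as (300, 300, 300) simply comes out as 255, which is the behaviour the answer describes for the double-to-U8 conversion.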

-

LabVIEW FPGA CLIP node compilation error

Hello NI,

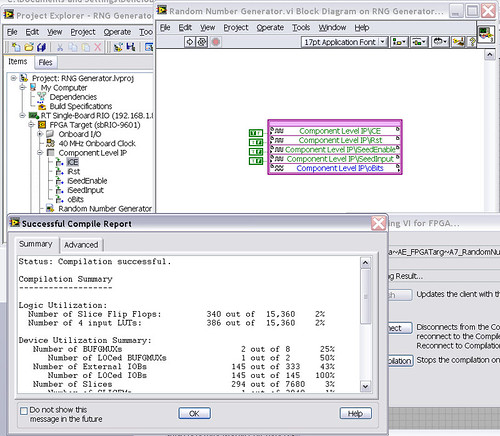

I am working on an application for my Single-Board RIO (sbRIO-9601) and I get a compile error when I try to compile my FPGA personality with the CLIP node. I have two .vhd files that I declare in my .xml file, and everything up to this point works great. I add the component-level IP to my project and then drag it to the VI I created under my FPGA target.

Within the FPGA personality, I essentially just add some constants to the CLIP inputs and indicators to the CLIP outputs, and attempt to save/compile. With this simple configuration, I get a compilation error (ERROR:MapLib:820 - Symbol LUT4... see the trim report for details on which signals were trimmed). If I go back to my VI and delete the indicators on the output (leaving the CLIP output pin connected to nothing), it compiles fine.

I've included screenshots, VHDL and LV project files. What could be causing an indicator of the output of my VI to force compilation errors?

Without the indicator attached to the CLIP output, compilation succeeds...

With the indicator attached to the CLIP output, compilation fails...

NI sbRIO-9601

LabVIEW 8.6.0

LabVIEW FPGA

Windows XP (32-bit, English)

No conflicting background processes (no Google Desktop, etc.).

Usually a "trimming" error suggests that some IP is missing. Often, a CLIP source file is missing or the path specified in the XML file is incorrect.

In your case I believe that there is an error in the XML declaration:

<FormatVersion>1.0</FormatVersion>

<HDLName>RandomNumberGenerator</HDLName>

<Path>urng_n11213_w36dp_t4_p89.vhd</Path>

<Path>fifo2.vhd</Path>

This tells LV FPGA to expect a top-level entity called "RandomNumberGenerator" defined in one of the two VHDL files. However, I couldn't find this entity in either file. If urng_n11213_w36dp_t4_p89 is the top-level entity, edit the XML to set the HDLName tag as follows instead:

<HDLName>urng_n11213_w36dp_t4_p89</HDLName>

Also, in your XML you define the 'oBits' CLIP output as a U32, whereas the VHDL port is defined as an 89-bit vector:

oBits: out std_logic_vector (89-1 downto 0)

These definitions must match, and the maximum width of a CLIP I/O vector is 32 bits, so you have to break oBits into three U32 outputs. I added the ports and changed your assignment logic as follows:

oBits1(31 downto 0) <= srcs(31 downto 0);

oBits2(31 downto 0) <= srcs(63 downto 32);

oBits3(31 downto 0) <= "0000000" & srcs(88 downto 64);

Both of these changes resulted in a successful compilation.
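The bit arithmetic behind splitting an 89-bit vector into three 32-bit outputs can be checked with a small sketch. This is only a software model of the slicing, not part of the CLIP; the function names are hypothetical, and the vector is modelled as a plain integer.

```python
MASK32 = 0xFFFFFFFF  # 32 one-bits


def split_89_bits(srcs):
    """Split an 89-bit value into three 32-bit words, low word first."""
    if not 0 <= srcs < (1 << 89):
        raise ValueError("srcs must fit in 89 bits")
    o_bits1 = srcs & MASK32          # bits 31 downto 0
    o_bits2 = (srcs >> 32) & MASK32  # bits 63 downto 32
    o_bits3 = (srcs >> 64) & MASK32  # bits 88 downto 64, top 7 bits are zero
    return o_bits1, o_bits2, o_bits3


def join_89_bits(o_bits1, o_bits2, o_bits3):
    """Reassemble the original 89-bit value on the host side."""
    return o_bits1 | (o_bits2 << 32) | (o_bits3 << 64)


if __name__ == "__main__":
    value = (1 << 89) - 1  # all 89 bits set
    words = split_89_bits(value)
    print([hex(w) for w in words])
    print(join_89_bits(*words) == value)
```

The third word carries only 25 meaningful bits (89 - 64), which is why seven zero bits are needed to pad it to 32.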

Note: The compiler only errors when you add the indicator because otherwise your CLIP code is optimized out of the design. If the IP is instantiated in a design but nothing is connected to its output, why should it consume any logic? Most of the time the FPGA compiler is smart enough to remove it.

-

IP Integration Node trouble in LabVIEW FPGA

Hi all

I am having trouble with the LabVIEW FPGA IP Integration option and was hoping someone could shed some light here.

I am using simple VHDL code for a one-bit 2:1 MUX in order to familiarize myself with IP integration for LabVIEW FPGA.

In the properties of the IP Integration Node, the syntax check says:

ERROR:HDLParsers:813 - "C:/NIFPGA/iptemp/ipin482231194540D2B0CC68A8AF0F43AAED/TwoToOneOneBitMux.vhd" Line 15. Enumerated value U is missing in select.

but I'm still able to compile. Once the node is created and wired, I get the Run arrow for the VI, but when I run it, I get a Code Generation Errors pop-up that says:

The selected object is only supported inside the single-cycle Timed loop.

Place a single cycle timed loop around the object.

The selected object in question is my IP integration node.

I added a timed loop around the node, but even though I am able to run the VI, nothing happens. The output does not light up regardless of the inputs.

I would say that I have tried everything, but I can't imagine what the problem might be at this point, given that everything compiles and the code is so simple.

I have attached both the VI and the VHDL code. Please let me know if you have any problems opening them with a different FPGA board.

Would be really grateful for the help,

Yusif Nurizade

Hey Yusif,

It looks like you enter the single-cycle Timed Loop and never leave it, so the Output indicator is never actually updated. Try wiring a True constant to the stop condition of the SCTL. Otherwise, you could move all the controls/indicators inside the SCTL and get rid of the outer While loop. If you take that approach, however, you may run into timing problems in larger designs unless you pipeline or otherwise optimize the code.

-

LabVIEW FPGA compile: Translate fails, then Translate runs again

Hello everyone,

I have a question about the process of compiling LV FPGA.

The context:

I am compiling an FPGA bitfile for the NI-5644R (Virtex-6 inside). The process is quite long (up to 7 hours, depending on how big our CLIP is). I am looking for any idea to speed up the compilation process.

The fact:

By analyzing the log file of a previous compilation, I noticed that the Translate stage is run twice, probably because the first run fails. Excerpts from the log file are pasted below.

Furthermore, it seems that the errors in the first Translate (at least some of them) are caused by commented-out lines in the NI-provided UCF file (RfRioFpga.ucf).

The question:

Why is the Translate step run twice (with the first run failing)? Would it not be quicker to run only the second, successful one? In other words, is the first, failing Translate step really necessary to build the FPGA binary?

Any ideas?

Thanks in advance!

Cheers,

Patrice

----

log file excerpt 1:

"...

NGDBUILD Design Results Summary:

Number of errors: 387

Number of warnings: 1443

Total REAL time to NGDBUILD completion: 2 hrs 16 min 45 sec

Total CPU time to NGDBUILD completion: 2 hrs 11 min 21 sec

One or more errors were found during NGDBUILD. No NGD file will be written.

Writing NGDBUILD log file "RfRioFpga.bld"...

Process "Translate" failed

..."

log file excerpt 2:

"...

NGDBUILD Design Results Summary:

Number of errors: 0

Number of warnings: 818

Writing NGD file "RfRioFpga.ngd"...

Total REAL time to NGDBUILD completion: 29 min 17 sec

Total CPU time to NGDBUILD completion: 27 min 33 sec

Writing NGDBUILD log file "RfRioFpga.bld"...

NGDBUILD done.

Process "Translate" completed successfully

..."

Hi Patrice,

It does seem strange that it performs this step twice (and I was worried when I first saw it), but there is a good reason for it. LabVIEW inserts constraints for the components it adds, but sometimes those components get optimized out by the compiler. When the compiler encounters a constraint that points to a component that no longer exists, it errors. LabVIEW works around this by running the Translate process twice. The first time, it may fail. LabVIEW then removes the constraints that failed and runs Translate again. Unfortunately there is no way around this.
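The two-pass behaviour described in this answer can be pictured with a toy model. This is only an illustration of the retry logic as the answer explains it, not how LabVIEW or the Xilinx tools are actually implemented; every name below (`translate`, `translate_with_retry`, the constraint dictionaries) is hypothetical.

```python
def translate(components, constraints):
    """Toy stand-in for the Translate step.

    Fails if any constraint points at a component that no longer exists,
    and reports which constraints caused the failure.
    """
    failing = [c for c in constraints if c["target"] not in components]
    return len(failing) == 0, failing


def translate_with_retry(components, constraints):
    """Run Translate once; on failure drop the failing constraints and retry."""
    ok, failing = translate(components, constraints)
    passes = 1
    if not ok:
        constraints = [c for c in constraints if c not in failing]
        ok, failing = translate(components, constraints)
        passes = 2
    return ok, passes, constraints


if __name__ == "__main__":
    # "dsp" was optimized out, so its constraint is stale.
    components = {"fifo", "clock"}
    constraints = [
        {"name": "loc_fifo", "target": "fifo"},
        {"name": "loc_dsp", "target": "dsp"},
    ]
    print(translate_with_retry(components, constraints))
```

In this model the first pass is what discovers which constraints are stale, which is why it cannot simply be skipped.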

-

I want to know the optimal photo size for the Apple calendar, particularly when there are several photos per month.

Thank you and best regards

pamabi

Photo calendars are 13 x 10.4 inches (33 x 26.4 cm).

Information about books, cards, and calendars ordered from Photos for OS X and iPhoto - Apple Support

The photos you use should be quite large, so that the pixel size is high enough to support at least 200 dpi, better 250 dpi.

So a full-size picture should be at least 2600 pixels wide, better 3250 pixels for 250 dpi.

If you want to have 3 pictures in a row, divide these numbers by three.
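The arithmetic in this answer is just print width times resolution, divided by the number of photos sharing a row. A minimal sketch, assuming the 13-inch calendar width quoted above and ignoring margins and gaps between photos (the function name is hypothetical):

```python
import math

CALENDAR_WIDTH_IN = 13.0  # calendar width in inches, from the answer above


def min_pixel_width(width_in, dpi, photos_per_row=1):
    """Smallest pixel width that still reaches `dpi` at the printed size."""
    return math.ceil(width_in * dpi / photos_per_row)


if __name__ == "__main__":
    for dpi in (200, 250):
        for per_row in (1, 3):
            px = min_pixel_width(CALENDAR_WIDTH_IN, dpi, per_row)
            print(f"{dpi} dpi, {per_row} per row: {px} px")
```

Rounding up rather than down keeps the result at or above the target dpi.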

-

Is there an optimal resolution for iCloud Photo Sharing

Is there an optimal resolution for iCloud Photos sharing on computers, iPad and Apple TV?

I want to scan my grandparents' photo album to share with my friends and family. I scanned a few test pages at 3200 x 2000 pixels. They look great on my Mac and on an iPad too. I want to future-proof them for 4K and newer televisions.

Is the size of 3200 x 2000 pixels too small, too large or OK?

If you transfer photos using iCloud shared albums (iCloud Photo Sharing), they will be transferred at a smaller size: the long edge will be at most 2048 pixels, unless they are panoramas.

I would scan the photos at the pixel size you want to keep for reference, so you can print them in any format you like, 4000 x 3000 pixels or more. When you share them with iCloud Photo Sharing they will be reduced anyway.
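To see what the 2048-pixel limit means for a given scan, the downscaling can be estimated as follows. This is a rough sketch: the function name is hypothetical, the exact rounding iCloud applies is an assumption, and the panorama exception mentioned above is not modelled.

```python
def shared_album_size(width, height, max_long_edge=2048):
    """Estimate the pixel size after the long edge is capped at max_long_edge."""
    long_edge = max(width, height)
    if long_edge <= max_long_edge:
        return width, height  # already small enough, left unchanged
    scale = max_long_edge / long_edge
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    for size in [(3200, 2000), (4000, 3000), (1600, 1200)]:
        print(size, "->", shared_album_size(*size))
```

Under these assumptions both a 3200 x 2000 and a 4000 x 3000 scan end up 2048 pixels wide when shared, so the larger scan only pays off for the archived originals and for printing.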

-

Do I need to import as optimized media in order to export my final product as optimized media?

Hello FCPX community!

Please let me know if my reasoning is correct here:

When I import footage as original and proxy, that means I can view the project in either of these two codecs while I'm editing. When I export the final result as Apple ProRes 422 or higher, the exported project will be viewable as optimized media.

I do not need to import as optimized media in order to export as optimized media. Importing as optimized media only lets me view the project as optimized media while I am editing.

Am I wrong?

I imported a bunch of footage into my project as original and proxy, and the project is now nearly complete. The problem is that if I need to transcode everything to optimized media, there is a lot of footage and it will take a long time, because I recorded several hours of 4K footage. I have an old Mac here at work...

Thank you for your time.

I do not need to import as optimized media in order to export as optimized media.

That is right. If you export a master file in ProRes, what you export is basically optimized media.

-

I've been using Microsoft Word for Mac (2011) for a while (on a MacBook Pro, 2010, OS X 10.11.5) and sometimes have problems with it freezing, especially when using extensive Track Changes on a document. I don't know whether this comes from limitations inherent in Word (I have talked to PC users who have exactly the same problem with Word), whether my computer is not fancy enough to run this software at a reasonable speed (CPU speed? RAM?), or whether my computer's hard drive is toast (the recent diagnosis of an Apple 'Genius'). Or maybe all three.

Whatever it is, I need a new computer, and one that can handle work on complex Word documents (sometimes more than one at a time) using the software's full Track Changes capabilities, without crashing or slowing to a turtle's pace. My questions:

1. Does MS Word inherently work less well on Mac than on PC, esp. when you do fancy stuff such as heavy Track Changes? (I would rather not go PC, but as a professional editor, I have to make running Word properly my number one priority.)

2. If there is hope for Word on Mac, what are the optimal system specifications? Microsoft says the minimum RAM for Word for Mac 2016 is 4 GB. If that's the minimum, should I get more than that? 6, 8, etc.? Furthermore, what about the speed and type of processor? (Assume the computer is used mainly for everyday work: simultaneous web browsing, e-mail, and editing a Word document or two. No gaming, video, or music streaming.)

For example, would it be crazy to expect MS Word for Mac to run smoothly on a MacBook Air with these specs:

Processor: 1.6 GHz dual-core Intel Core i5 (Turbo Boost up to 2.7 GHz) with 3 MB shared L3 cache

Memory: 4 GB of 1600 MHz LPDDR3 on-board memory

Storage: 256 GB of PCIe flash storage

Any general impressions or special knowledge you can share would be much appreciated!

I have Office 2016 for Mac on my MacBook Air, but I do not do anything complex with it. However, I would suggest that you post the same question on the Microsoft Office for Mac forum: http://answers.microsoft.com/en-us/mac/forum/macoffice2016?auth=1

-

Two iCloud libraries (Optimized and Original) on the same Mac

Hello

I have a 2015 MacBook Pro with 256 GB of storage, a 3 TB NAS drive, and a 700 GB iCloud Photo Library. Currently I have a local Photos library on the laptop with the Optimize Storage option enabled in Photos; the originals are in the cloud. Is it possible to have an additional library file with a complete copy (with the originals) of the same iCloud library on the NAS drive? The library on the laptop would store the optimized content and the library on the NAS would store the originals of the same iCloud library.

This way:

- I would have a backup of the full-size master files of the iCloud library on the NAS at home

- I would have quick access to the full-size versions of my files at home, which is especially useful with videos

- I would have access to the iCloud Photo Library on the laptop and other devices while out and online

- While at home, I could switch between libraries (optimized on the laptop, originals on the NAS), both with the same content.

Could you let me know whether there is any way to achieve this, please? And if so, how.

Cheers

None

1. A Photos library cannot be on a NAS; it has to be on a local disk in Mac OS Extended (Journaled) format, attached over a fast wired connection.

2. Only the System Photo Library synchronizes with iCPL (iCloud Photo Library), and you can have one system library per user. If you have two accounts on a single Mac, each with its own system library synchronizing with iCPL, then you could have a library with originals on a properly connected local external drive in one account and a locally optimized library in the other account.

LN

-

What would be the optimal OS to install on a 13" MacBook Pro 2009? Is El Capitan too advanced to install on such an old MacBook Pro? Thanks for any input on this!

El Capitan is the one to use. Clean the junk off your Mac first, and if you have less than 4 GB of RAM, increase it to 4 GB or more.

-

Has Firefox for Android not been optimized for 4.3?

After updating my Nexus 10 to 4.3 on Monday, everything works well except Firefox. I have both 22.0 and Beta installed, and the problems are identical in both: keyboard lag, characters not appearing as I type in the search/address bar, blank white screens even though the page is shown as finished loading, the previous page still showing even though the address bar shows that a new page was supposedly loaded, and ugly scrolling. The Chrome browser works perfectly, as it always has. I have rebooted several times with no joy. I have not uninstalled and reinstalled Firefox, and I don't know whether that would do any good. I will try that next, but I wonder whether there is a known issue with Firefox for Android not yet being optimized for 4.3.

Hi all:

I wrote a SUMO KB article on the Android 4.3 and Nexus 10 issues.

Cheers!

...Roland

-

Do optimized libraries remain optimized?

As I understand it, when you open an image on a device that has been set to Optimize Storage, the high-resolution photo is downloaded.

What happens then?

In order to keep the storage optimized, does it then remove the high-resolution version?

I guess so; otherwise you would fill your space with high-resolution images simply by looking at all your pictures.

The device will keep the downloaded image for a short time, but will remove it later, as storage is needed. How long it takes until the downloaded image is deleted depends on the amount of free storage you have.

-

Is the latest version of Firefox optimized for Yahoo

All I want to know is whether the latest version of Firefox is optimized for Yahoo.

As far as I know, Firefox is not "optimized" for specific web sites. If you see a version of Firefox that claims to be "optimized for Yahoo", it might be a custom flavor created by Yahoo, but that's just a guess.
