Hint or parameter to force physical reads

I'm using Oracle 11.2.0.3. I have a query that took 45 minutes the first time and 4 minutes on subsequent runs in the QC environment. In both cases it uses the same plan. If I run the query again a few days later, the first run takes a long time again. Most of the wait is on the INDEX RANGE SCAN step, on the "db file parallel read" event.

The same query runs in 2 minutes in a lower environment with a different plan. I used a hint to get that same plan in the QC environment. Now the query runs as expected, but I suspect it might slow down if the data is not cached. I do not have the privileges needed to flush the buffer cache.

Is there a trick or a setting I can use to force physical reads?

Which view will tell me whether a table is still cached in memory?

I'm not sure there is any parameter to force physical reads. In addition to the V$BH view, you can look at the output of SET AUTOTRACE as well: it shows whether physical reads are involved in the execution of the query. But to see the cached blocks themselves, the V$BH view is the useful one. I would also add that a query will almost certainly perform differently after its first run. That's why "warming up the buffer cache" is recommended: run the query at least twice before you start measuring its performance.

Aman...
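The warm-up advice above is easier to apply if every run is timed separately, so the first (cold-cache) run is reported apart from the later (warm-cache) runs. This is a minimal Java sketch under stated assumptions: the `Runnable` workload is a placeholder for whatever actually executes the query (for example a JDBC call), and `RunTimer`, `timeRuns` and `warmAverage` are hypothetical names, not part of any Oracle tool.

```java
import java.util.Arrays;

/**
 * Times each run of a workload separately so that the first (cold-cache) run
 * can be reported apart from the later (warm-cache) runs.
 * The workload is a placeholder (assumption): in practice it would execute the query.
 */
final class RunTimer {
    private RunTimer() {}

    /** Runs the workload the given number of times; returns each run's elapsed nanoseconds. */
    static long[] timeRuns(Runnable workload, int runs) {
        if (runs < 1) {
            throw new IllegalArgumentException("runs must be >= 1");
        }
        long[] elapsed = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            workload.run();
            elapsed[i] = System.nanoTime() - start;
        }
        return elapsed;
    }

    /** Average of every run after the first one, i.e. the warm-cache figure. */
    static double warmAverage(long[] elapsed) {
        if (elapsed.length < 2) {
            throw new IllegalArgumentException("need at least two runs");
        }
        return Arrays.stream(elapsed, 1, elapsed.length).average().getAsDouble();
    }
}
```

Element 0 of the returned array is the cold run (the 45-minute case in the question), while `warmAverage` summarises the re-runs that benefit from the cache.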

Tags: Database

Similar Questions

-

Force direct reads instead of the buffer cache

Hello

Is it possible, via a hint or something else, to force Oracle not to put the blocks read by a SQL statement into the buffer cache, and to read them directly from disk every time? This is a test question.

Thank you all

There is the hidden parameter _serial_direct_read.

The allowed values depend on the version:

before 11gR2: true or false

11gR2: true/false/always/never/auto

alter session set "_serial_direct_read"=true;

It works in any version >= 8i.

-

'physical read total multi block requests' wrong?

Hello

The statistic 'physical read total multi block requests' should count the number of I/O calls that read more than one block (doc here). So I expect it to be equal to the number of 'db file scattered read' waits (or 'direct path read' waits if serial direct-path reads are used).

But this isn't what I get in the following test case:

SQL> create table DEMO pctfree 99 as select rpad('x',1000,'x') n from dual connect by level <= 1000;

I get the V$FILESTAT statistics so that I can compute the difference at the end:

SQL> select phyrds,phyblkrd,singleblkrds from v$filestat where file#=6;

    PHYRDS   PHYBLKRD SINGLEBLKRDS

---------- ---------- ------------

      6291      96488         3286

I'm doing a full table scan on a 1000-block table, forcing buffered (non-direct-path) reads and enabling sql_trace with waits:

SQL> connect demo/demo

Connected.

SQL> alter session set "_serial_direct_read"=never;

Session altered.

SQL> alter session set events 'sql_trace wait=true';

Session altered.

SQL> select count(*) from DEMO;

  COUNT(*)

----------

      1000

SQL> alter session set events 'sql_trace off';

The session statistics show 30 I/O requests, but only 14 multiblock requests:

SQL> select name,value from v$mystat join v$statname using(statistic#) where name like 'phy%' and value>0;

NAME                                          VALUE

---------------------------------------- ----------

physical read total IO requests                  30

physical read total multi block requests         14

physical read total bytes                   8192000

physical reads                                 1000

physical reads cache                           1000

physical read IO requests                        30

physical read bytes                         8192000

physical reads cache prefetch                   970

However, I had 30 'db file scattered read' waits:

SQL> select event,total_waits from v$session_event where sid=sys_context('userenv','sid');

EVENT                                    TOTAL_WAITS

---------------------------------------- -----------

Disk file operations I/O                           1

log file sync                                      1

db file scattered read                            30

SQL*Net message to client                         20

SQL*Net message from client                       19

V$FILESTAT counts all of them as multiblock reads:

SQL> select phyrds-6291 phyrds,phyblkrd-96488 phyblkrd,singleblkrds-3286 singleblkrds from v$filestat where file#=6;

PHYRDS PHYBLKRD SINGLEBLKRDS

---------- ---------- ------------

30 1000 0

And this is confirmed by sql_trace:

SQL> column tracefile new_value tracefile

SQL> select tracefile from v$process where addr=(select paddr from v$session where sid=sys_context('USERENV','SID'));

TRACEFILE

------------------------------------------------------------------------

/u01/app/oracle/diag/rdbms/dbvs103/DBVS103/trace/DBVS103_ora_31822.trc

SQL> host mv &tracefile serial-direct-path.trc

SQL> host grep ^WAIT serial-direct-path.trc | grep read | nl

1 WAIT #139711696129328: nam='db file scattered read' ela= 456 file#=6 block#=5723 blocks=5 obj#=95361 tim=48370540177

2 WAIT #139711696129328: nam='db file scattered read' ela= 397 file#=6 block#=5728 blocks=8 obj#=95361 tim=48370542452

3 WAIT #139711696129328: nam='db file scattered read' ela= 449 file#=6 block#=5737 blocks=7 obj#=95361 tim=48370543216

4 WAIT #139711696129328: nam='db file scattered read' ela= 472 file#=6 block#=5744 blocks=8 obj#=95361 tim=48370543816

5 WAIT #139711696129328: nam='db file scattered read' ela= 334 file#=6 block#=5753 blocks=7 obj#=95361 tim=48370544276

6 WAIT #139711696129328: nam='db file scattered read' ela= 425 file#=6 block#=5888 blocks=8 obj#=95361 tim=48370544848

7 WAIT #139711696129328: nam='db file scattered read' ela= 304 file#=6 block#=5897 blocks=7 obj#=95361 tim=48370545370

8 WAIT #139711696129328: nam='db file scattered read' ela= 599 file#=6 block#=5904 blocks=8 obj#=95361 tim=48370546190

9 WAIT #139711696129328: nam='db file scattered read' ela= 361 file#=6 block#=5913 blocks=7 obj#=95361 tim=48370546682

10 WAIT #139711696129328: nam='db file scattered read' ela= 407 file#=6 block#=5920 blocks=8 obj#=95361 tim=48370547224

11 WAIT #139711696129328: nam='db file scattered read' ela= 359 file#=6 block#=5929 blocks=7 obj#=95361 tim=48370547697

12 WAIT #139711696129328: nam='db file scattered read' ela= 381 file#=6 block#=5936 blocks=8 obj#=95361 tim=48370548287

13 WAIT #139711696129328: nam='db file scattered read' ela= 362 file#=6 block#=6345 blocks=7 obj#=95361 tim=48370548762

14 WAIT #139711696129328: nam='db file scattered read' ela= 355 file#=6 block#=6352 blocks=8 obj#=95361 tim=48370549218

15 WAIT #139711696129328: nam='db file scattered read' ela= 439 file#=6 block#=6361 blocks=7 obj#=95361 tim=48370549765

16 WAIT #139711696129328: nam='db file scattered read' ela= 370 file#=6 block#=6368 blocks=8 obj#=95361 tim=48370550276

17 WAIT #139711696129328: nam='db file scattered read' ela= 1379 file#=6 block#=7170 blocks=66 obj#=95361 tim=48370552358

18 WAIT #139711696129328: nam='db file scattered read' ela= 1205 file#=6 block#=7236 blocks=60 obj#=95361 tim=48370554221

19 WAIT #139711696129328: nam='db file scattered read' ela= 1356 file#=6 block#=7298 blocks=66 obj#=95361 tim=48370556081

20 WAIT #139711696129328: nam='db file scattered read' ela= 1385 file#=6 block#=7364 blocks=60 obj#=95361 tim=48370557969

21 WAIT #139711696129328: nam='db file scattered read' ela= 832 file#=6 block#=7426 blocks=66 obj#=95361 tim=48370560016

22 WAIT #139711696129328: nam='db file scattered read' ela= 1310 file#=6 block#=7492 blocks=60 obj#=95361 tim=48370563004

23 WAIT #139711696129328: nam='db file scattered read' ela= 1315 file#=6 block#=9602 blocks=66 obj#=95361 tim=48370564728

24 WAIT #139711696129328: nam='db file scattered read' ela= 420 file#=6 block#=9668 blocks=60 obj#=95361 tim=48370565786

25 WAIT #139711696129328: nam='db file scattered read' ela= 1218 file#=6 block#=9730 blocks=66 obj#=95361 tim=48370568282

26 WAIT #139711696129328: nam='db file scattered read' ela= 1041 file#=6 block#=9796 blocks=60 obj#=95361 tim=48370569809

27 WAIT #139711696129328: nam='db file scattered read' ela= 300 file#=6 block#=9858 blocks=66 obj#=95361 tim=48370570501

28 WAIT #139711696129328: nam='db file scattered read' ela= 281 file#=6 block#=9924 blocks=60 obj#=95361 tim=48370571248

29 WAIT #139711696129328: nam='db file scattered read' ela= 305 file#=6 block#=9986 blocks=66 obj#=95361 tim=48370572021

30 WAIT #139711696129328: nam='db file scattered read' ela= 347 file#=6 block#=10052 blocks=60 obj#=95361 tim=48370573387

So I'm sure that I did 30 multiblock reads, but 'physical read total multi block requests' = 14.

Any idea why?

Thanks in advance,

Franck.

Couldn't leave it alone.

I ran some additional tests with different extent sizes, with and without ASSM.

It seems that my platform doesn't include 'db file scattered read' reads smaller than 128 KB in the multiblock request count (i.e. 16 blocks, given that I was using an 8 KB block size).

Regards

Jonathan Lewis
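Jonathan's observation can be checked against Franck's trace by parsing the raw WAIT lines and counting reads by size. This Java sketch assumes the raw 10046 trace format (`nam='db file scattered read' ... blocks=N`) and a threshold of 16 blocks (128 KB with an 8 KB block size); `ScatteredReadCounter` is a hypothetical helper, and the 128 KB cut-off is the platform-specific behaviour Jonathan reported, not a documented rule.

```java
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ScatteredReadCounter {
    // Captures the blocks= value of a raw 'db file scattered read' WAIT line.
    private static final Pattern SCATTERED_READ =
            Pattern.compile("nam='db file scattered read'.*\\bblocks=(\\d+)");

    private ScatteredReadCounter() {}

    /** Returns {number of reads, total blocks read, reads of at least minBlocks blocks}. */
    static long[] summarize(List<String> traceLines, int minBlocks) {
        long reads = 0;
        long blocks = 0;
        long largeReads = 0;
        for (String line : traceLines) {
            Matcher m = SCATTERED_READ.matcher(line);
            if (!m.find()) {
                continue; // not a scattered-read wait
            }
            int n = Integer.parseInt(m.group(1));
            reads++;
            blocks += n;
            if (n >= minBlocks) {
                largeReads++;
            }
        }
        return new long[] {reads, blocks, largeReads};
    }
}
```

Fed the 30 WAIT lines from Franck's trace with `minBlocks = 16`, it returns 30 reads and 1000 blocks in total, of which 14 reads (the 60- and 66-block ones) are at least 16 blocks: the same 14 reported by 'physical read total multi block requests'.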

-

Oracle trace: number of physical buffer reads from disk

Hi all

Until yesterday, I was under the impression that, in an Oracle trace file, the "number of physical buffers read from disk" should be reflected in the wait events section.

Yesterday we had this trace file (Oracle 11gR2):

call     count       cpu    elapsed       disk      query    current        rows

------- ------ -------- ---------- ---------- ---------- ---------- ----------

Parse        1      0.00       0.00          0          0          0           0

Execute      1      0.04       0.02          0         87          0           0

Fetch        9      1.96       7.81      65957     174756          0         873

------- ------ -------- ---------- ---------- ---------- ---------- ----------

total       11      2.01       7.84      65957     174843          0         873

Elapsed times include waiting on following events:

Event waited on                             Times   Max. Wait  Total Waited

----------------------------------------   Waited  ----------  ------------

SQL*Net message to client                       9        0.00          0.00

reliable message                                1        0.00          0.00

enq: KO - fast object checkpoint                1        0.00          0.00

direct path read                             5074        0.05          5.88

SQL*Net more data to client                     5        0.00          0.00

SQL*Net message from client                     9        0.01          0.00

********************************************************************************

We can see that the 65957 physical disk reads resulted in only 5074 'direct path read' waits. Normally, I would expect the number of physical disk reads to be reflected directly in the wait events section.

Is this normal? Why is that? Maybe because these disks are on a SAN that has a cache?

Best regards.

Carl

'direct path read' is a multiblock I/O operation; a single wait does not read just 1 block.
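The answer can be checked with the tkprof figures themselves: dividing the blocks in the `disk` column by the number of 'direct path read' waits gives the average number of blocks fetched per I/O call. A small Java sketch, assuming every block counted under `disk` was read by one of those waits (`BlocksPerRead` is a hypothetical name):

```java
import java.util.Locale;

final class BlocksPerRead {
    private BlocksPerRead() {}

    /** Average number of blocks fetched per I/O call. */
    static double average(long blocksRead, long readCalls) {
        if (readCalls <= 0) {
            throw new IllegalArgumentException("readCalls must be > 0");
        }
        return (double) blocksRead / readCalls;
    }

    public static void main(String[] args) {
        // Figures from the tkprof output above: disk = 65957, direct path read waits = 5074
        double avg = average(65957, 5074);
        System.out.println(String.format(Locale.ROOT, "%.1f blocks per direct path read", avg));
    }
}
```

This prints `13.0 blocks per direct path read`: on average each wait covered about 13 blocks rather than one.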

-

Sharing the fingerprint reader between the physical machine and a VM

I have a Lenovo W541 with a built-in fingerprint reader. How can I share it?

The physical machine, running Windows 8.1, already uses it. How can I also use it to log on to the OS in the VM?

Best regards

Paolo

Hi Paolo,

I also have a ThinkPad W541 and scratched my head over how to use the integrated fingerprint reader from, for example, Windows 10 running in a virtual machine, and then came across this thread.

After comparing the Device Manager on my VM with the one on the physical laptop, I saw that no "Biometric devices" entry appeared on the VM. Of course, I also checked the VM hardware settings dialog to see whether such a device could be added, but it could not.

So I thought I was stuck. But I found a solution that is at least sufficient for my needs. I noticed that among the icons at the bottom right of my running VM window (running Windows 10) there is an icon that is disabled, but when hovering over it with the mouse it reads "Synaptics Validity Sensors (WBF) (PID=0017)".

After right-clicking it and selecting "Connect (Disconnect from host)", a new "Unknown device" appeared in the Windows Device Manager. I expanded that tree item, clicked it, chose "Install driver" and voilà! Now my built-in fingerprint reader can be used when running Windows 10 under VMware Workstation!

It is not ideal, because it disables the fingerprint reader on the physical machine's OS for as long as the Windows 10 VM is running, but I can live with that. On the plus side, Windows (?) is smart enough to automatically re-enable the fingerprint reader on my physical machine as soon as the VM is closed/stopped.

I hope this helps!

-

Oracle Read-Ahead: not the same as prefetching, right?

Hello

I've been trying to understand Oracle's read-ahead mechanism, and I think I understand how it works, but I would like to confirm that I'm not mistaken.

From what I understood in the Oracle documentation, the Oracle read-ahead mechanism is mainly used for sequential access to data blocks, for example in operations such as table scans.

In this case, Oracle will, for example, use a physical operation called a scattered read.

The scattered read, in turn, is configured by the init.ora parameter db_file_multiblock_read_count. A multiblock read count of 60 will make the scattered read operation read 60 sequential data blocks from the drive, starting with the data block that was requested.

From what I understand, this is the basis of the read-ahead mechanism: it uses multiblock read operations rather than single-block operations.

If what I've said so far is correct, there are three things I'd like to know:

1.

These 60 blocks read by the scattered read: do they go directly into the database buffer cache? Or are they all loaded first into a lower-level cache, such as a "scattered read cache"?

For example, suppose the table scan requested page 1, and the scattered read retrieved database pages 1 to 60. I guess that only page 1 would be transferred to the buffer cache. Then later, when the table scan requests page 2, the buffer cache would fetch that page from the scattered read cache. Or is this completely wrong? And when the scattered read gets pages 1 to 60, does it automatically place the data pages in the buffer cache at random positions of the main buffer?

2.

With Oracle read-ahead, do table scans and other such physical operations still incur scattered read waits periodically? For example, every 60 pages the table scan faces another scattered read wait, right?

3.

Does read-ahead in Oracle anticipate which database pages a physical operator will need at a specific point in time? I assume the following does not occur:

While the table scan is currently on page 50, with another 10 pages left in the database buffer, a background process decides it's a good idea to request another 50 pages of data, so that the foreground thread processing the table scan never has to stop for read I/O.

That would be prefetching, and it is not an Oracle feature, is it?

Thank you for your understanding.

-------------------

I did a few tests where the size of the table used for the data transformation stayed the same (1 GB), but the time I take to process each row from the source table varied. The processing time per record increased considerably from one test to the next. But I noticed that the wait time of the data transformation stayed constant, even though the CPU time increased considerably. The increase in CPU time means the next buffer is requested less often, which should give the system more time to read ahead. Eventually, if read I/O were executed in anticipation, I could make the CPU time so large that the wait time would theoretically drop to 0. I know that is not going to happen. So I just wanted to be certain of how read-ahead works.

My best regards.

I don't know whether you mean reading several blocks at the same time, or the read-ahead cache features provided by some storage devices. But it seems to me you are talking about MBRC (multiblock read count), not the read-ahead cache provided by storage vendors.


1.


If my MBRC is 60, none of the blocks are in the buffer cache, and the table is larger than 60 blocks, then the 60 blocks are transferred to the buffer cache together. There is no such thing as a "scattered read cache" in the Oracle architecture.

2.

Yes. A 'db file scattered read' wait event means that another physical I/O was issued to fetch the blocks for the next records.

3.


Right, I don't think it is an Oracle feature, but some storage providers offer it. It is also known as read-ahead.
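To make the answer to question 2 concrete, here is a simplified Java model of how a full scan splits into multiblock reads. It assumes that each read fetches at most MBRC blocks and never crosses an extent boundary, and it ignores blocks already in the cache, ASSM space-management blocks and OS I/O size limits; `ScanReadPlanner` is a hypothetical name, and this is not Oracle's actual algorithm.

```java
import java.util.ArrayList;
import java.util.List;

final class ScanReadPlanner {
    private ScanReadPlanner() {}

    /**
     * Returns the size, in blocks, of each multiblock read for a scan over the
     * given extents (simplified model: reads are capped at mbrc and stop at
     * each extent boundary).
     */
    static List<Integer> readSizes(int[] extentBlocks, int mbrc) {
        if (mbrc < 1) {
            throw new IllegalArgumentException("mbrc must be >= 1");
        }
        List<Integer> sizes = new ArrayList<>();
        for (int extent : extentBlocks) {
            int remaining = extent;
            while (remaining > 0) {
                int n = Math.min(mbrc, remaining); // one scattered read
                sizes.add(n);
                remaining -= n;
            }
        }
        return sizes;
    }
}
```

For two 100-block extents and an MBRC of 60, `readSizes(new int[] {100, 100}, 60)` returns `[60, 40, 60, 40]`: under this model the scan waits on four separate scattered reads rather than on one continuous stream.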


I don't understand your last paragraph.

Regards

Anurag

-

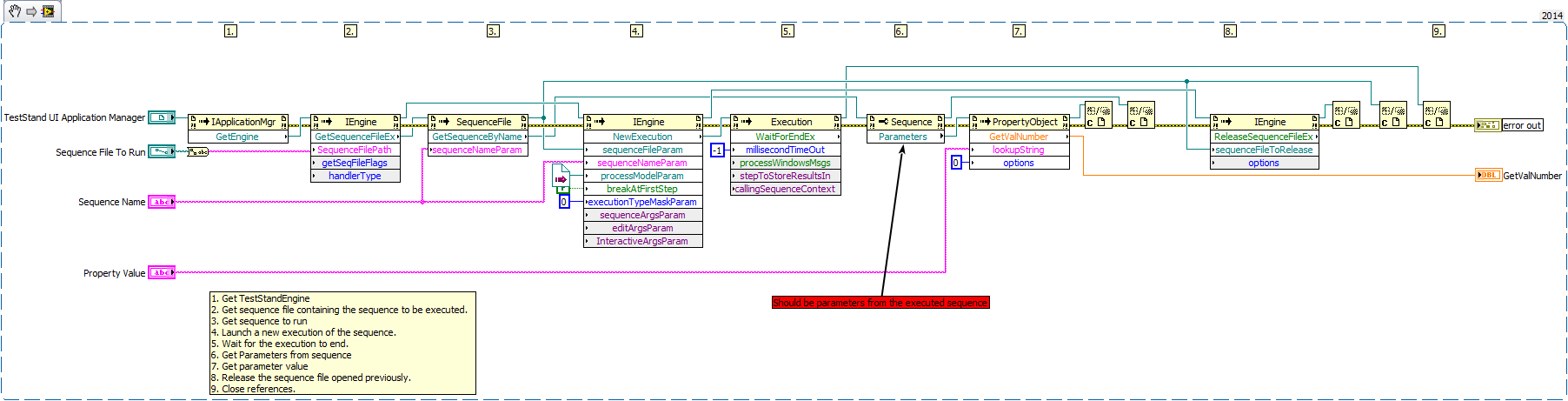

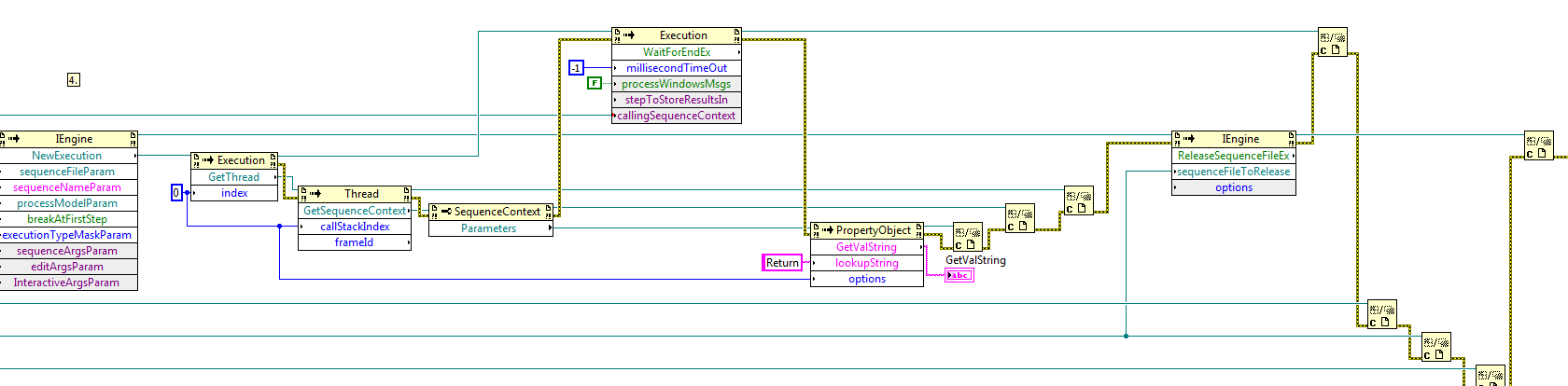

How to get the return parameter of a TestStand sequence run by LabVIEW

Hello

I execute a TestStand sequence from LabVIEW (essentially running "\Examples\TestStand API\Executing sequences using API\LabVIEW\Execute with no Model.vi process"). I need to get a parameter returned in 'Parameters' after the sequence has run. My problem is that I get the value as it is stored in the sequence, not the value after execution.

How?

Vagn

Vagn,

To obtain the values from the execution, you need access to the execution object. The following screenshot shows how to access it.

Please note that this VI is meant to be called by TestStand. DO NOT USE THIS VI IN A CUSTOM USER INTERFACE!

Thank you

Norbert

EDIT: This dummy code shows how to read the string parameter 'Return'. Each parameter must be read separately with the appropriate read function.

-

Prechecks and disadvantages of cursor_sharing = FORCE

Hi all

OS: ALL

DB: 11gR2

I tried searching the net, but couldn't find a satisfying answer. Before I set cursor_sharing = FORCE, what do I need to consider?

In addition, what are the downsides of setting this parameter to FORCE?

Thank you!

Your understanding is not correct.

Setting cursor_sharing to FORCE is a temporary workaround for when your application does not use bind variables. What happens is that, internally, Oracle takes each literal and automagically turns it into a bind variable for you, even when it should not be a bind variable (for example, when you use a constant that never changes in a query). There are two drawbacks that I know of (and have seen in real situations):

(1) You really do have an unchanging constant in a query, and turning it into a bind variable can lead Oracle to choose a suboptimal plan.

(2) Expressions such as SUBSTR(column, 1, 5) are transformed into SUBSTR(column, :"SYS_B_0", :"SYS_B_1"), which means that tools no longer know that the expression is 5 characters long. I've seen customer reports break because of this.

CURSOR_SHARING should serve as a temporary crutch while you fix your application to use bind variables.
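To illustrate drawback (2), here is a toy Java sketch of what literal replacement does conceptually. It is not Oracle's implementation: it only recognises single-quoted strings and unsigned numbers, ignores comments and many other SQL details, and `LiteralReplacer` is a hypothetical name. The `:"SYS_B_n"` placeholder style mirrors the system-generated bind names Oracle uses under `CURSOR_SHARING=FORCE`.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class LiteralReplacer {
    // A single-quoted string literal (with '' escapes) or a standalone unsigned number.
    private static final Pattern LITERAL =
            Pattern.compile("'(?:[^']|'')*'|\\b\\d+(?:\\.\\d+)?\\b");

    private LiteralReplacer() {}

    /** Replaces each literal with a numbered :"SYS_B_n" placeholder, left to right. */
    static String forceBinds(String sql) {
        Matcher m = LITERAL.matcher(sql);
        StringBuffer out = new StringBuffer();
        int n = 0;
        while (m.find()) {
            m.appendReplacement(out, Matcher.quoteReplacement(":\"SYS_B_" + n + "\""));
            n++;
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

For `select substr(name, 1, 5) from t where id = 42` it returns `select substr(name, :"SYS_B_0", :"SYS_B_1") from t where id = :"SYS_B_2"`, so the length 5 is no longer visible in the statement text.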

-

I use Adobe Reader and Adobe Acrobat (if there is any difference) and I have a Sony tablet. I use a PDF file as a lesson plan. Normally, when I just open a document, it automatically moves to 'Recent' in 'My Documents'. But when I close this particular document, I have to find it again in my Downloads and reopen it in Adobe, and the changes I made are obviously not there. How can I move an item into Recent myself? Help, please!

Hello

What is the operating system on your Sony tablet, Windows or Android? Which version of the operating system?

Are your documents stored on your device or in cloud storage (Adobe Document Cloud, Adobe Creative Cloud)?

Please note that the Recent section displays the local and cloud documents you have opened/read recently. Reader does not physically move or transfer local or cloud documents to the Recent section.

-

How to find the physical corruption

Hello

I ran the following command to check for physical corruption in my database:

backup validate check logical database;

After this command, I queried v$database_block_corruption:

12:04:47 SQL> select * from v$database_block_corruption;

     FILE#     BLOCK#     BLOCKS CORRUPTION_CHANGE# CORRUPTIO

---------- ---------- ---------- ------------------ ---------

         1      11477          2          228760588 LOGICAL

         1      11514          1          228760329 LOGICAL

12:05:09 SQL>

I'm trying to find out whether this is physical corruption or logical corruption.

I also used the dbv command to check my SYSTEM datafile (file# 1), and the output is as follows:

D:\>dbv file=D:\ORACLE\ORADATA\OPERA\SYSTEM01.DBf

DBVERIFY: Release 10.2.0.4.0 - Production on Mon Nov 18 11:56:23 2013

Copyright (c) 1982, 2007, Oracle. All rights reserved.

DBVERIFY - Verification starting : FILE = D:\ORACLE\ORADATA\OPERA\SYSTEM01.DBf

DBV-00200: Block, DBA 4205781, already marked corrupt

DBV-00200: Block, DBA 4205782, already marked corrupt

DBV-00200: Block, DBA 4205818, already marked corrupt

DBVERIFY - Verification complete

Total Pages Examined         : 168960

Total Pages Processed (Data) : 127180

Total Pages Failing   (Data) : 0

Total Pages Processed (Index): 25248

Total Pages Failing   (Index): 0

Total Pages Processed (Other): 2440

Total Pages Processed (Seg)  : 0

Total Pages Failing   (Seg)  : 0

Total Pages Empty            : 14092

Total Pages Marked Corrupt   : 3

Total Pages Influx           : 0

Highest block SCN            : 245793757 (0.245793757)

Since dbv checks for physical corruption, how can I confirm with RMAN whether this is physical corruption or only logical corruption? Thank you

The alert log says something like the following:

Sat Sep 14 00:22:50 2013

Errors in file d:\oracle\admin\opera\bdump\opera_p008_3916.trc:

ORA-01578: ORACLE data block corrupted (file # 1, block # 11478)

ORA-01110: data file 1: 'D:\ORACLE\ORADATA\OPERA\SYSTEM01.DBF'

ORA-10564: tablespace SYSTEM

ORA-01110: data file 1: 'D:\ORACLE\ORADATA\OPERA\SYSTEM01.DBF'

ORA-10561: block type 'TRANSACTION MANAGED DATA BLOCK', data object# 5121

ORA-00607: Internal error occurred while making a change to a data block

ORA-00600: internal error code, arguments: [kddummy_blkchk], [1], [11478], [6101], [], [], []

SAQ

Hello

DBVerify reports both logical and physical intra-block corruptions by default. A good starting point would be to go through the MOS note below to understand the difference.

Physical and Logical Block Corruptions. All you wanted to know about it. (Doc ID 840978.1)

Basically, you will not be able to read the data in physically corrupt blocks, whereas you may sometimes be able to read the data in logically corrupt blocks.

In addition, DBVerify reports DBV-200/201 errors for logical corruption.

HTH

Abhishek
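The DBA values in the DBV-00200 messages can be mapped back to file and block numbers, which ties the DBVerify output to the `v$database_block_corruption` rows and the ORA-01578 message. This Java sketch assumes the smallfile-tablespace layout (relative file number in the top 10 bits, block number in the low 22 bits; bigfile tablespaces encode it differently); inside the database, `DBMS_UTILITY.DATA_BLOCK_ADDRESS_FILE` and `DBMS_UTILITY.DATA_BLOCK_ADDRESS_BLOCK` do the same decoding. `DbaDecoder` is a hypothetical name.

```java
final class DbaDecoder {
    private static final int BLOCK_BITS = 22;
    private static final long BLOCK_MASK = (1L << BLOCK_BITS) - 1;

    private DbaDecoder() {}

    /** Relative file number: the bits above the low 22 bits. */
    static long relativeFile(long dba) {
        return dba >>> BLOCK_BITS;
    }

    /** Block number within the file: the low 22 bits. */
    static long block(long dba) {
        return dba & BLOCK_MASK;
    }

    public static void main(String[] args) {
        // DBAs reported by DBVERIFY above
        for (long dba : new long[] {4205781L, 4205782L, 4205818L}) {
            System.out.println("DBA " + dba + " -> file " + relativeFile(dba) + ", block " + block(dba));
        }
    }
}
```

It prints file 1 with blocks 11477, 11478 and 11514: the blocks listed in `v$database_block_corruption` (11477 with BLOCKS = 2, and 11514) and the block 11478 reported in the alert log.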

-

Can any name be used for a physical agent in the Physical Architecture > Agents node?

Hello

I am able to connect successfully to a standalone ODI agent that is installed on another machine (other than the ODI server machine). Can I use any name (for the Name parameter) for the physical agent to be created under the Physical Architecture > Agents node?

Please answer

Thank you

Sébastien

The test passes if you have an agent started on the same host with the same port, regardless of its name.

But in order to start an agent, it must be declared under that same name in the topology.

-

Number parameter in a custom scheduled task in R2

Gurus,

I developed a custom OIM 11gR2 scheduled task that has a number parameter as one of its inputs. I have defined this parameter as below in my eventhandler.xml:

<number-param required="true" helpText="Number of records to retrieve">Number of Records</number-param>

To read this value in the scheduled task code, I use this:

try {

String records = String.valueOf(attributes.get("Number of Records"));

System.out.println("Records " + records);

} catch (Exception e) {

e.printStackTrace();

}

I also tried the code below, with the same result:

try {

int records = Integer.parseInt((String) attributes.get("Number of Records"));

System.out.println("Records " + records);

} catch (Exception e) {

e.printStackTrace();

}

But my job fails with a NumberFormatException.

Can you please give me a code snippet to read a number parameter in a scheduled task?

Here's tested code for you:

import java.util.HashMap;

import oracle.iam.scheduler.vo.TaskSupport;

public class Test extends TaskSupport {

public void execute(HashMap attributes) throws Exception {

try {

// In this tested code the number parameter is read as a java.lang.Long, not a String

long rec = ((Long) attributes.get("Number of Records")).longValue();

print((int) rec);

} catch (Exception e) {

System.out.println("Exception 1: " + e.getMessage());

}

}

private void print(int a) {

System.out.println("a = " + a);

}

public HashMap getAttributes() {

return null;

}

public void setAttributes() {

}

}

-

log_archive_format with a physical standby

Oracle RDBMS: 11.2.0.2 (RAC) on RHEL 5.6

Do we need to set any particular value for the log_archive_format parameter when creating a physical standby database?

In our primary, the parameter value is log_archive_format='%t_%s_%r.dbf'. Do we need to change it to a different value, or can we leave it like that for the physical standby database?

A number of documents in various blogs include log_archive_format='%t_%s_%r.arc' along with all the other parameters required for creating a physical standby database. I am a bit confused; can someone please clarify?

Thank you

Hello;

Do we need to set any particular value for the log_archive_format parameter while creating a physical standby database? No.

In our primary the parameter value is log_archive_format='%t_%s_%r.dbf'. Do we need to change it to a different value, or can we leave it like that for the physical standby database? I like them to be the same, only because it makes gap checking easier. Yours seems to be the default for Linux.

SQL> show parameter log_archive_format

NAME                                 TYPE        VALUE

------------------------------------ ----------- ------------------------------

log_archive_format                   string      %t_%s_%r.dbf

SQL>

On all of mine, I don't set it and just let the default value apply.

Best regards

mseberg
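For reference, this is how the format variables expand into an archived log file name. The Java helper below is hypothetical (`ArchiveNameFormatter` is not an Oracle API) and only handles lowercase `%t` (thread), `%s` (log sequence) and `%r` (resetlogs ID); Oracle's uppercase `%T`/`%S` variants, which zero-pad the value, are not modelled.

```java
final class ArchiveNameFormatter {
    private ArchiveNameFormatter() {}

    /** Substitutes %t, %s and %r in a LOG_ARCHIVE_FORMAT-style pattern. */
    static String expand(String format, int thread, long sequence, long resetlogsId) {
        return format
                .replace("%t", Integer.toString(thread))
                .replace("%s", Long.toString(sequence))
                .replace("%r", Long.toString(resetlogsId));
    }
}
```

With made-up values, `expand("%t_%s_%r.dbf", 1, 1234, 987654321L)` returns `1_1234_987654321.dbf`; only the extension changes with the `.arc` pattern from the blogs, which is why matching formats on both sides mainly helps readability when comparing names during gap checks.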

-

Reading command-line parameters in Illustrator

Hello

Is it possible to read command-line parameters with the SDK when we start Adobe Illustrator?

For example, if I launch Illustrator from the command prompt using the following command:

C:\Program File\Adobe\..\Windows> Illustrator "C:\file.ai" "Hi This Is Param"

In the command above, the first parameter is the .ai file name and the second is my dummy parameter.

Now I want to read the second parameter with the SDK and use its value in my plugin.

Thank you

Hard

On Windows, you can use GetCommandLine

-

Compellent Replay Manager and VSS

Hi all

I am a new user of Replay Manager and I'm testing it in a sandbox environment.

I installed Replay Manager Services 7.0 on a physical Windows 2003 R2 x64 server and tried scheduling replays with the Replay Manager management tools: everything works perfectly.

But this server is also backed up by our company backup tool, Symantec Backup Exec 2010 R3. I noticed that whenever a VSS backup is initiated by Backup Exec, a consistent replay is taken on the HDS. Is this normal? Can we disable this behavior?

Thanks in advance,

Kind regards.

Teo.

Finally, I found the solution:

The Symantec Backup Exec agent installed on the Windows system has a default setting for choosing the VSS provider: this setting is 'Automatic selection'.

With automatic selection, the agent chooses the Compellent Replay VSS provider instead of the Microsoft VSS provider. So the Backup Exec job triggers a replay on the Compellent storage system!

Solution: change the job setting to force the 'Microsoft VSS provider' instead of 'Automatic selection'.

Maybe you are looking for

-

I am on OS X 10.6.8 and use it just for Logic. Apple tech support told me I can upgrade, but to back up my system to an external hard drive using Time Machine first. Wouldn't it be better to copy my HD to the external drive rather than use Time Machine? H

-

Windows 2008 R2 DC server / DHCP server. My workstations (Windows 10 and Windows 7) have connection problems: they get a limited connection. The error they get on the workstations is "one or more network protocols are missing"; it happens to mu

-

BlackBerry Priv smartphone does not receive Hub notifications unless the Hub app is open.

Strange... If I restart my phone (and don't open any apps), I get e-mail notifications (sound and LED) for the accounts in my Hub. But once I open the Hub and then close it (so it's no longer in my list of recent apps), I no longer receive notifications

-

Do CS5.5 programs work without problems on Windows 10?

-

If I upgrade from Lightroom 5 to CC, will I retain all current edits in LR, and will I keep my currently downloaded and purchased presets?