R series analog input range

Hi all

I'm starting my first application using RIO cards (two PCI-7833) for analog input and output.

My previous experience with DAQ systems was on PC, so I'm mainly familiar with DAQmx. It is my understanding that DAQmx is not used on these boards, so there are no DAQmx tasks to work with.

So my question is: how can I dynamically set the signal range of the analog inputs so that I get the best possible resolution?

What I mean is that in DAQmx, if I know the input signal is only 1 V peak to peak, I can specify this beforehand in the task.

Thank you!

OK, a little further digging shows the input range is fixed at +/-10 V. Is this correct?

Tags: NI Hardware

Similar Questions

-

Changing the analog input range on the PCI-6110

The analog signal I want to measure is 24 V, and the maximum for the PCI-6110 is 42 V. However, the analog input range that appears for the device under the Traditional NI-DAQ (Legacy) configuration is 10 V (the only selection). I'm using LabVIEW 7.1 and DAQmx 8.6, and there is no function that allows me to change the device's analog input range (please see the attached screenshot). How can I change the analog input range?

I think this is what you're looking for:

How do I set up an S Series data acquisition card's input level so that it does not clip?

You must set an appropriate gain so that the other voltage ranges are displayed.

In addition, you may want to post in the data acquisition forum rather than the LabVIEW forum in future, because chances are you will get better and faster responses there.

Good luck!

-

PCIe-634x independent analog input ranges

I have a PCIe-6343 and I want to be able to use different input ranges on different channels at the same time; more precisely, the customer should be able to select the range for each channel as needed. Ideally I could have AI 0 with -10 to 10, AI 1 with -5 to 5, AI 2 with -1 to 1, and AI 3 with -0.250 to 0.250. Does anyone know if this is possible? I tried passing an array for the minimum and maximum values, and it did not work.

The only active channels are the ones you decide to put in the array. The only ranges that are enabled are the ones you decide to place in their arrays. How you populate the arrays is up to you. I suggest a front panel where you can select one channel and its range, then click to add it to a list.

-

Input signal is clipped to +/-0.5 V when it is connected to an analog input

Hello

I'm having difficulty connecting an analog source to the analog inputs of my data acquisition device (USB-6215). The analog signal is the output of an operational amplifier through a 10 k resistor. I probed the signal at the amplifier output and it is 10 V peak; when I then move the probe to the other side of the 10 k resistor (the analog input terminal), the signal is clipped to +/-0.5 V. If I reduce the source amplitude to less than 0.5 V peak, there is no clipping.

Maybe the analog input range is set to +/-0.5 V, and that is causing this as a form of protection? I don't have LabVIEW to try changing the input range, as I am only doing the wiring.

The analog source is connected to AI 0 and the ground of the analog source is connected to AI GND.

Thanks in advance.

J

Joel-

Have you tried reading the data through the data acquisition device? If that's what you are trying to do, I'm curious what it reads.

If you have Measurement & Automation Explorer, go ahead and open a test panel for the device and see what your voltages are.

Let us know how it goes.

-

Setting the R Series analog input mode programmatically

Hello

I understand that the analog input mode for a 7854R series card can be set through the Project Explorer (RIO device setup).

I would like to know if there is any way to do this programmatically (as is the case for some cRIO AI modules).

Thanks in advance for the help!

Hey,

No, you cannot change it programmatically, only through the RIO device setup.

Christian

-

How to read the analog inputs of an R Series board (PXI-7851R)

Could you please guide me through the steps for reading the analog inputs of an R Series card? I am using a PXI-7851R as the FPGA target.

Have you looked at the examples provided with LabVIEW? There are examples showing how to read the analog inputs.

-

Analog input/output palette missing

Hello!

I have LV 8.6 installed on my PC with XP, and my card is a 6024E. I noticed that the analog input/output palette is missing. I tried searching for it but had no luck. Traditional NI-DAQ 7.4.4 is currently installed.

Try reinstalling from the device driver CD/DVD and make sure you have selected Traditional DAQ.

You say you installed NI-DAQ 7.4.4. But if that was done before you installed LabVIEW, LabVIEW will not see it.

-

NI USB-6212 BNC analog input impedance matching

I just ordered an NI USB-6212 BNC DAQ device (it should be delivered soon). I want to use it to measure HV signals with a 1/1000 high-voltage probe I have.

Now, the probe's datasheet (not a lot of info) says it has an input impedance of 100 MOhm. I suppose it consists of a simple resistive divider, and if the ratio is 1/1000, I would expect a 99.9 MOhm resistance in series with a 0.1 MOhm resistance. However, the datasheet also specifies that the probe is designed to be connected to an oscilloscope with an input impedance of 1 MOhm. As this input impedance is not very large compared to the low-side resistance of the divider, I guess the real resistance values in the probe are 99.9 MOhm and 0.11 MOhm (to obtain 99.9 and 0.1 MOhm when it is connected to the 1 MOhm oscilloscope).

Therefore, given that the analog input impedance of the USB-6212 is > 10 GOhm according to its datasheet, I expect to measure voltages about 10% higher than the true values when the probe is connected to the DAQ device. Does this assumption make sense?

What would be the best way to get around this? Do a calibration and correct the acquired values in LabVIEW code? Or should I add a precision 1 MOhm resistor in parallel with the DAQ input to lower its input resistance to the value expected by the probe?

Thanks for your help!
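
The loading estimate in the question can be checked with a few lines of arithmetic. The sketch below assumes a purely resistive divider (capacitance is ignored) with a 99.9 MOhm top resistor and a bottom resistor of 1/9 MOhm (about 0.111 MOhm), chosen so that the ratio is exactly 1/1000 into a 1 MOhm scope input. These component values and the function names are illustrative assumptions, not data from the probe datasheet.

```c
/* Parallel combination of two resistances (both in the same unit). */
double parallel(double a, double b)
{
    return a * b / (a + b);
}

/* Vout/Vin of a resistive divider whose bottom leg (r_bottom) is loaded
   by the input resistance of the measuring instrument (r_load).
   All resistances must use the same unit, e.g. MOhm. */
double divider_ratio(double r_top, double r_bottom, double r_load)
{
    double r_low = parallel(r_bottom, r_load);
    return r_low / (r_top + r_low);
}
```

With these assumed values, `divider_ratio(99.9, 1.0 / 9.0, 1.0)` gives 0.001 for the 1 MOhm scope, while a 10 GOhm load (`1.0e4` in MOhm) gives roughly 0.00111, i.e. a reading about 11% high, which is in line with the estimate in the question.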

Since you have a 1000:1 range, I guess you also need bandwidth (I have a TEK 6015A), so you need to match the input impedance, which is a complex value: you must consider not only the input resistance (1 M) but also the input capacitance. Scope probes usually have some trimming elements to match the probe to the scope input. RTFM of the probe.

BUT a more serious point is that your probe has a very high source resistance. If you look in the 6212 specification, you will find on page 2 a graph of error in ppm on a logarithmic scale, and even a 100 k source impedance is not shown on it.

So I'm afraid that a simple 1 M across the DAQ input will work only if you're measuring DC, and only if you use a single channel on the DAQ device. A workaround is a separate buffer amplifier with an input impedance matching your probe's specification and a low output impedance.

-

PXI-6254 DAQmx analog input full scale

Hello

I use a PXI-6254 board to read analog inputs. The channels are configured using DAQmx Create Channel.vi with the parameters below.

Input configuration: RSE

Min: 0

Max: 10

Units: Volt

I read the channel using DAQmx Read (U16 2D) with 1 sample. I expected the values below.

0 volts = data 0

10 volts = data 65535

but it gives 10 volts = data 31544. Please let me know why.

If I set up the channels with the settings below:

Input configuration: RSE

Min:-10

Max: 10

Units: Volt

It still reads the same values (0 volts = data 0, 10 volts = data 31544).

Please let me know how I can get 10 volts = 65535.

Thank you

Hi LVTestek,

The PXI-6254 does not have a 0 V to 10 V input range. The NI 625x specifications list the available input ranges:

Input range: ±10 V, ±5 V, ±2 V, ±1 V, ±0.5 V, ±0.2 V, ±0.1 V

When you set Min = 0 and Max = 10, DAQmx chooses the smallest input range that can measure signals between 0 V and 10 V without clipping. On the PXI-6254, the smallest input range that meets this criterion is the ±10 V range, where -10 V corresponds to -32768, 0 V corresponds to 0, and 10 V corresponds to 32767.

However, there is an additional complication: input ranges on M Series devices are slightly wider than nominal to accommodate software calibration. Otherwise, a device's gain error could reduce the effective input range, and offset error would shift the ends of the effective input range. So the [-10 V, 10 V] range on your PXI-6254 could be more like [-10.3 V, 10.4 V], and 10 V actually corresponds to 31544 rather than 32767. On another PXI-6254, 10 V could correspond to a different raw/unscaled value, such as 31539 or 31552.
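
The range selection described above can be sketched in a few lines. This is only an illustration of the "smallest range that covers Min and Max" rule using the nominal ranges quoted in this reply; the function names are hypothetical and this is not how the driver is implemented. The second function applies the nominal (uncalibrated) code-to-volts scaling, which is exactly the scaling that does not hold on real hardware.

```c
#include <stddef.h>

/* Nominal bipolar input ranges (in volts) quoted above for the NI 625x. */
static const double RANGES_V[] = {0.1, 0.2, 0.5, 1.0, 2.0, 5.0, 10.0};

/* Return the smallest +/-R range that covers [min_v, max_v],
   or -1.0 if no listed range is wide enough. */
double select_range(double min_v, double max_v)
{
    size_t n = sizeof RANGES_V / sizeof RANGES_V[0];
    for (size_t i = 0; i < n; ++i) {
        if (min_v >= -RANGES_V[i] && max_v <= RANGES_V[i])
            return RANGES_V[i];
    }
    return -1.0;
}

/* Nominal conversion of a signed 16-bit code to volts.
   Real devices need the calibrated scaling coefficients instead. */
double nominal_code_to_volts(int code, double range_v)
{
    return (double)code * range_v / 32768.0;
}
```

For Min = 0 and Max = 10, `select_range` returns 10.0, matching the ±10 V range. Applying the nominal scaling to the code 31544 gives about 9.63 V rather than 10 V, which shows why raw codes should not be scaled with nominal range limits.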

Another side effect of software calibration is that the data returned by the "raw" and "unscaled" flavors of the DAQmx Read VI are uncalibrated. This KB explains further: Is DAQmx Raw Data Calibrated or Scaled?

If you can modify your application to use one of the "scaled" (F64) flavors of the DAQmx Read VI, that should save a lot of effort. If not, could you explain why your program requires raw/unscaled readings? The right approach depends on the requirements. For example, if you want to save the data to a file and need to reduce the file size by using raw/unscaled data, configuring DAQmx to log data directly to a TDMS file may meet your needs. If you are updating an older application to work with DAQmx and M Series, a different approach may be more appropriate.

Brad

-

6008 analog input - invalid values

Hello

Does anyone know how the 6008 handles invalid analog input voltages? Specifically, what happens if the AI channel is configured for a 0 to 10 V range and a negative voltage, say -19.0 V, is applied to the analog input?

I use the C/C++ NI-DAQmx library. The call that I use to set up the A/D port is:

DAQmxErrChk(DAQmxCreateAIVoltageChan(taskHandle, AINPSTR, "", DAQmx_Val_Diff, 0.0, (float64)s_dMaxAnalogInputVoltage, DAQmx_Val_Volts, ""));

where: s_dMaxAnalogInputVoltage = 10.0;

and

DAQmxReadAnalogF64(taskHandle, 1, 1.0, DAQmx_Val_GroupByChannel, values, 5, &read, NULL);

to read the AI.

What will happen if an out-of-range input voltage is applied? I know that everything has an absolute maximum range, so I'm not talking about something like -60 to +60 volts.

Thank you

-Neil shore

These specifications are in the product datasheet; there is a link to it on the specifications tab of the product page.

And no, there is no error generated when the input is out of range. Scale the input properly so that this doesn't happen, or, if it does, make sure your software recognizes that the reading is higher than expected.
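
Since the driver does not flag an out-of-range input, the check has to live in the application. A minimal sketch of such a check, assuming that a reading at or very near a configured limit means the input may be saturated; the function name and the margin parameter are hypothetical and not part of NI-DAQmx:

```c
#include <stdbool.h>

/* True if `volts` lies within `margin_v` of either configured limit
   (or beyond it), which suggests the input may be saturated rather
   than a valid in-range reading. */
bool reading_is_suspect(double volts, double min_v, double max_v, double margin_v)
{
    return volts <= min_v + margin_v || volts >= max_v - margin_v;
}
```

For a channel configured for 0 to 10 V, each value returned by the read call could be passed through `reading_is_suspect(value, 0.0, 10.0, 0.01)` and logged or rejected when it returns true. The right margin depends on the device's noise and accuracy.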

-

Several analog inputs seem to affect each other (DAQ details: BNC-2120 and 6062E)

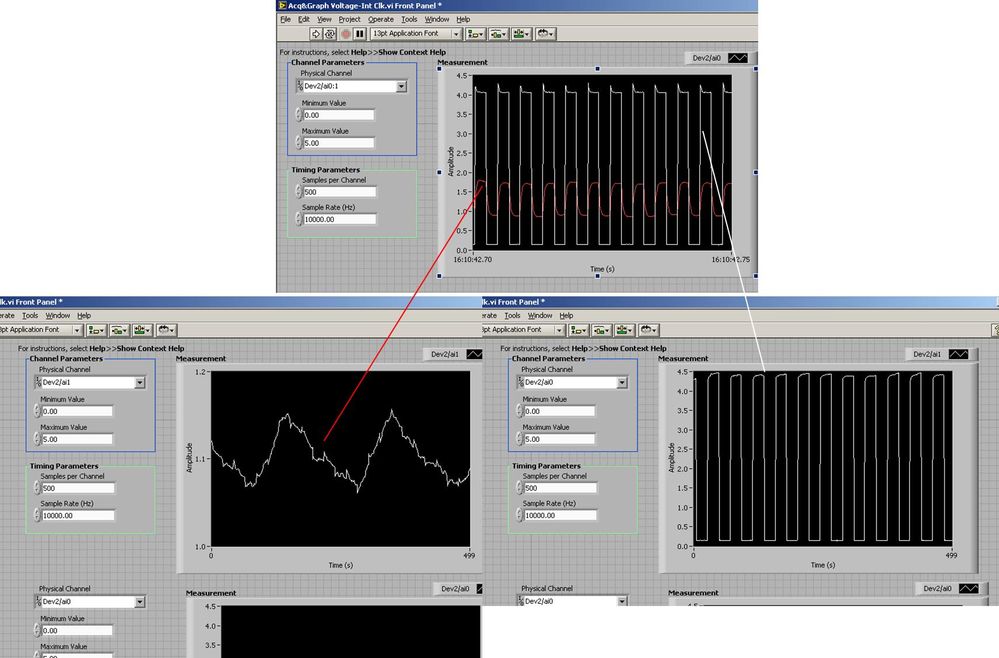

I use the BNC-2120 connected to a 6062E data acquisition card to record two analog inputs. One input is connected to ai0 and the other to ai1. The example VI "Acq&Graph Voltage-Int Clk" was used to measure the two inputs with the Read NChan NSamp VI (channels being dev2/ai0:1). The output is the top graph in the image. However, it seemed a bit strange to me that one of them should be modulated at a different frequency. When I record both inputs individually (the two lower graphs), they are indeed different from the inputs shown in the top graph.

Why would this be the case, and how can I overcome it to measure the real signals?

Thank you!

The E Series card takes the samples as quickly as possible. So, for example, if you have 16 analog input channels but you only read channels 0 and 1, the card will sample channels 0 and 1 right after each other and then wait 14 'ticks'. That short settling time is the origin of the ghosting.

I think you can get the card to wait a certain number of ticks with a property node. I have attached a screenshot. You can find the property node in the Functions palette > Measurement I/O > NI-DAQmx > Timing node. Expand the property node so there are two inputs. The properties are found by left-clicking on the node and going to More > AI Convert; set the Delay Units and Delay properties to the units and delay that you want.

If phase is important, the above is not the best option because it introduces a phase delay. If you need true simultaneous sampling, you will need different hardware. The S Series is all simultaneous sampling.

Or, rather than the Delay and Delay Units properties, try the Rate property, found under More > AI Convert > Rate.

If that does not work either, you can move the second signal source to, say, AI8 and connect everything in between to ground. Read those channels, but just ignore the data. That way the ADC will settle to ground before it reaches the second channel, so you should not see this ghosting on the other channel.

-

Problem with analog input precision on PCI-6111

Hello

I'm reading an analog signal that varies from 0 to 11 V using a PCI-6111 data acquisition card. The signal comes from a photomultiplier tube (PMT) that is part of a microscope setup, so it is very important that the resolution of the analog input be as fine as possible in order to generate quality images. According to the data sheet for the PCI-6111, the analog input resolution is 12 bits, which should correspond to a sensitivity of ~2.686 mV for my voltage range.

To test this, I set up an analog input task with a 0-11 V voltage range to read samples from an analog output, to which I wrote a simple waveform. Since the analog output resolution is 16 bits, I assumed that it would not limit the accuracy of this measurement. I have attached the VI I used for this measurement below. The analog input data are saved untruncated to a text file.

Analyzing these data, I found that the real input sensitivity is ~9.766 mV, corresponding to exactly 1126.4 voltage levels, or ~10 bits.

Is there a reason why the analog input resolution is so much lower than specified for the card? What are some ways I could improve the sensitivity of this measurement?

Best,

Keith

Sorry, when you mentioned the specs, I thought you already had them. Did they not come with your board?

-

Hello

The data acquired in LabVIEW from my USB-1208LS is accurate, but the analog input voltage values are quantized (there are only a few repeating values: 0.691, 0.696, 0.701, 0.696, 0.701, 0.691, 0.691, 0.691, etc.). I am measuring the voltages of four photocells, powered by the +5 V of the USB-1208LS. Each cell is measured on a different channel using differential input mode. Is it possible to fix the quantization of the data?

I don't know if it's a LabVIEW problem, but attached is my diagram, in case I'm not acquiring the voltage properly.

Thank you in advance.

On the +/-10 V input range, the resolution of this device is about 5 mV, which is exactly what you see. The resolution is found by taking the span of the total voltage range (20 V) and dividing by the number of steps or bins of the A/D converter over this range (2^12 = 4096). So, resolution = 20/4096 = 4.883 mV.

To get better resolution, you will need to use a smaller range (if your signal will fit into a smaller range) or get DAQ hardware with a higher resolution, for example a 16-bit or 24-bit converter.
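
The same calculation as a small helper, which makes it easy to compare ranges and converter widths. This is a sketch of the ideal step size only; the function name is hypothetical and it ignores noise and calibration.

```c
/* Ideal size of one ADC step in volts for a converter with `bits` bits
   (bits must be less than 64) spanning the range [min_v, max_v]. */
double lsb_volts(double min_v, double max_v, unsigned bits)
{
    return (max_v - min_v) / (double)(1ULL << bits);
}
```

`lsb_volts(-10.0, 10.0, 12)` gives about 4.883 mV, as above. A hypothetical +/-1 V range on the same 12-bit converter would give about 0.488 mV, and a 16-bit converter on +/-10 V about 0.305 mV.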

Lynn

-

PXI-6071E offset drift on the analog inputs

Hi, I have three PXI-6071E cards sitting in a PXI-1042 chassis that is controlled by a computer running Windows XP. The 6071Es are connected to SCB-100 breakout boxes that are wired over twisted pair to a panel of panel-mount female BNC connectors.

I noticed that all of my analog inputs drift around between -10 V and +10 V if they are not connected to anything forcing them to a certain voltage. This has always happened. We also see a bit of crosstalk between channels. For example, if I open a test panel in Measurement & Automation Explorer, I can watch the voltage read on the floating inputs drift through their full range, and changes to the signals on nearby channels show up on the channel I am measuring.

Is this just standard, expected behavior? Is there anything more I could do to minimize this drift and crosstalk? I am trying to reduce noise in my system, so I figure optimizing my DAQ could not hurt.

Thank you

With nothing connected to the high-impedance input, the drifting you see is quite normal. The front-end circuitry builds up a charge; the crosstalk is probably due to the input multiplexer (I did not check, but I think the 6071 has one) transferring the charge to the other channels when they are scanned.

Search the forum for ghosting; you will find related discussions.

-AK2DM

-

What is the minimum response time from analog input, through online DSP, to analog output?

Hello experts!

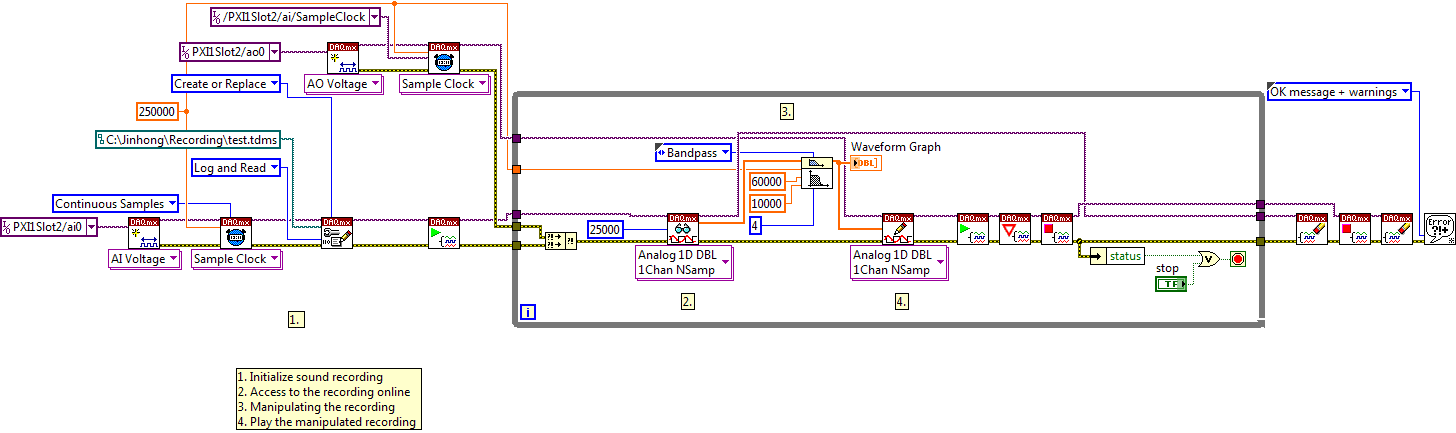

I want to know if it is possible to get a very short response latency (~1 ms) from sound recording (analog input), through online processing (online DSP), to sound presentation (analog output), using DAQmx programming. My NI system includes an NI PXIe-8135 controller, a PXIe-6358 multifunction DAQ, and a PXIe-5412 arbitrary signal generator. I also have access to the latest version of LabVIEW (2015).

My project is on auditory disturbances, which involves recording vocalizations, manipulating the recorded vocalizations, and then presenting the manipulated vocalizations. My current idea is to trigger the output right after reading the input samples using DAQmx Read. The DAQmx Read output is filtered online and then passed as input to DAQmx Write for analog output. For illustration, example code is presented below. Note that, for simplicity, the code for the trigger part is not presented here; that is something to work on in the future.

My question is whether the idea above could reach ~1 ms delay. Or do I have to rely on a totally different programming module, FPGA? I am very new to LabVIEW and to NI. After reading some documentation on FPGA, I realized that my current hardware cannot do this because I do not have FPGA signal processing hardware. Am I wrong?

Something that might be important to mention: I am sampling from a microphone array (approximately 16 microphones) at a very high sampling rate (250 kHz). Naturally, these recordings must be saved to hard disk. Here again, a single microphone is shown.

I have two concerns about whether my current approach could achieve my goal.

First, for the DAQmx Read function in step 2, I set the number of samples to read to 1/10 of the sampling rate. This is recommended by LabVIEW and seems necessary to avoid the buffer overflow that occurs when a smaller number is used. However, my concern regarding response latency is that this might already cause a delay of 100 ms, i.e. the time to collect these samples before reading. Is this true?

Secondly, every iteration of the while loop takes at least a few tens of milliseconds (~30 ms). Does this add a further 30 ms delay?
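
The first concern is simple arithmetic: a blocking read cannot return before the requested samples have been acquired. A rough sketch of that lower bound, assuming the delay is just the time needed to fill one block at the given rate and ignoring driver and loop overhead (the function names are hypothetical):

```c
/* Time in seconds needed to acquire one block of samples per channel. */
double block_latency_s(unsigned long samples_per_read, double sample_rate_hz)
{
    return (double)samples_per_read / sample_rate_hz;
}

/* Largest block size (samples per channel, rounded to nearest) that can
   be acquired within the given latency budget. */
unsigned long max_samples_for_latency(double latency_s, double sample_rate_hz)
{
    return (unsigned long)(latency_s * sample_rate_hz + 0.5);
}
```

At 250 kHz, reading 25000 samples per call (1/10 of the rate) takes `block_latency_s(25000, 250000.0)` = 0.1 s before any processing starts, and a 1 ms budget allows at most `max_samples_for_latency(0.001, 250000.0)` = 250 samples per read.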

Hey, I've never used and am not familiar with the hardware you have, so I can't help you there.

On the RT side, again I don't know about your hardware, but I used an NI myRIO-1900, which has a high-throughput personality for RT with which I can acquire audio at 44 kHz and process the data. Frame-based processing ultimately means processing a block of audio data gathered at a high sampling rate, with the number of samples as permitted by latency. Please check this.

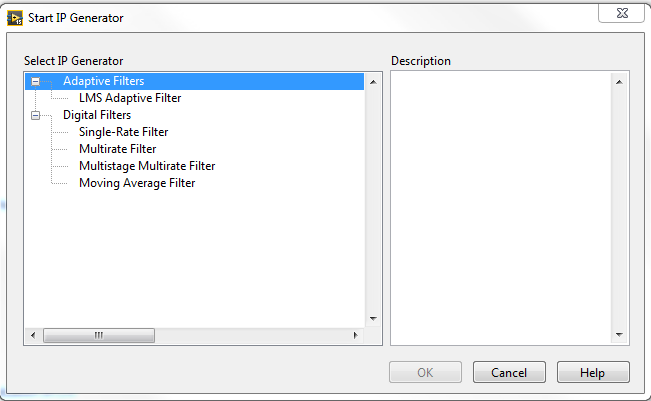

I lost about 2 weeks understanding that the host side does not work, and another 2 weeks understanding that even the RT side does not work for online (real-time) processing. Now, finally, I'm working on FPGA, where the sampling rate is 250 kHz (shared by multiple channels, of course).

The complex thing with FPGA is the coding. Please check whether the filter you want is listed below, as LabVIEW automatically generates code for some filters.

Most of them will work in a single SCTL, i.e. if your target has a 40 MHz clock, the algorithm will run in 25 ns. That's what I was looking for, I hope you

Cheers!
