Time manipulation

Hi guys,

I took a peek at some of the Video Copilot tutorials on time manipulation and have a question or two about After Effects and frame rates.

According to its documentation, the Panasonic camera I use can record at several frame rates; it has variable-speed capabilities. Footage recorded natively to the camera, straight to the card, is only about 192 Mb per second (24 MB), and I don't think that's very good. If I record to an external device, the same second comes to about 1880 Mb per second (235 MB). That's obviously much better, and it contains much more information to allow for more post work.

The problem is that, according to the documentation, you cannot record higher than 30 fps to an external recorder, because HD-SDI cannot carry the 1080p data rates above 30 fps. If I use the external device (a Samurai recorder), each frame is apparently embedded automatically as a field in a 1080/50i stream.

Of course, when I import directly into After Effects, I need to reinterpret the footage and select the appropriate field order (upper field first). The motion is then good enough as slow motion (when imported into a standard 25 fps composition, since I work in PAL land), without obvious motion problems (strobing or stutter). My question is this:

Is this the right way to go, or should I record standard 25 fps from the camera to the recorder (at a data rate of 1766 Mb per second, or 220.8 MB) and buy a third-party time-manipulation tool like Twixtor to do the slow-downs?

There's an interesting paragraph in the camera's documentation that I don't understand, and I'm not familiar with the software it mentions. If you'll allow me, I'd like to quote the paragraph (OK, I'll paraphrase) and see whether someone can explain it a little better:

"With post handling, you can get 1080/60p out of the AF100. The camera already records 60 progressive frames per second: when the unit is in 1080/24p or 1080/30p and a frame rate of 60 is selected, the camera records 60 discrete images every second. The file has a playback rate of 24p or 30p encoded in it, but what happens if you change that playback rate? Say you went into Cinema Tools and 'conformed' the footage to a timebase of 59.94p instead of 29.97p or 23.976p. Of course, it would become full, real-time 1080/60p footage: 60 fps played back at 60 fps is full-frame, full-quality 1080/60p. And of course the same applies to 50 Hz mode, giving you full 1080/50p."

The camera's settings in PAL land are PH 1080/50i, PH 1080/25p, PH 720/50p and PH 720/25p. Since 50i gives the better quality, the preceding paragraph confuses me a bit: how would I get 1080/50p out of it? Of course, I'm assuming the camera is set to 1080/25p with the frame rate set to 50 fps to get the result above. In any case, once again, perhaps this is not entirely an After Effects question, but any help would be greatly appreciated.

Stone

I know this isn't exactly what you're asking. If you're planning on doing slow motion, then record at the higher frame rate if you can. If you want the footage to play in real time, it must be interpreted at the same frame rate that was used for filming. If you want to change the speed, you need to understand what happens when you change the frame-rate interpretation. Let me explain.

If you place 10 seconds of 60p footage in a composition that is 20 fps, 24 fps or 99 fps, it will still be exactly 10 seconds long. If the footage frame rate is higher than the composition frame rate, some of the frames will be dropped. If it is lower than the composition frame rate, some of the frames will be duplicated. Motion artifacts from dropped frames are not as noticeable as the artifacts caused by duplicated frames. Interpolation and other techniques are available in After Effects to alleviate these problems. Third-party solutions like Twixtor can do a better job than the tools that come with AE, but may be unnecessary unless you plan to slow the footage way, way down.

Let's talk about progressive footage first. If the footage frame rate is higher than the frame rate of your production and you intend to use the built-in tools, the right technique is to interpret the footage at the composition frame rate. If your production is 24 fps, interpret the footage at 24 frames per second. If the composition is 30 fps or 29.97 fps, match those numbers. Ten seconds of 60p footage in a 30 fps composition would then play for 20 seconds, i.e. at 1/2 speed. Ten seconds of 60p footage in a 25 fps composition would play for 24 seconds. If you use the AE built-in tools, this is your best option no matter how you want to change the playback time.
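The arithmetic behind these interpretation examples can be checked with a few lines of Java. This is a minimal sketch, not anything After Effects exposes: it assumes every recorded frame is shown exactly once at the new rate (no frame blending or interpolation), and the class and method names are hypothetical. The last line mirrors the Cinema Tools paragraph: 50 discrete images per second conformed to 50 fps play back in real time.

```java
// Sketch: playback length when every recorded frame is shown exactly once
// at a new interpretation rate (no frames dropped, duplicated or blended).
final class FrameRateMath {
    private FrameRateMath() {}

    /** Seconds of playback for recordSeconds of footage shot at recordFps, interpreted at interpretFps. */
    static double playbackSeconds(double recordSeconds, double recordFps, double interpretFps) {
        double frames = recordSeconds * recordFps; // total discrete frames captured
        return frames / interpretFps;
    }

    /** Playback speed relative to real time: 1.0 is real time, 0.5 is half speed. */
    static double speedFactor(double recordFps, double interpretFps) {
        return interpretFps / recordFps;
    }

    public static void main(String[] args) {
        System.out.println(playbackSeconds(10, 60, 30)); // 20.0 (60p in a 30 fps comp)
        System.out.println(playbackSeconds(10, 60, 25)); // 24.0 (60p in a 25 fps comp)
        System.out.println(speedFactor(60, 30));         // 0.5 (half speed)
        System.out.println(playbackSeconds(10, 50, 50)); // 10.0 (50 images/s conformed to 50 fps)
    }
}
```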

If you use a third-party time-manipulation tool like Twixtor, then you must follow its instructions. Twixtor works just fine if you interpret the footage at its actual frame rate.

If the original footage is interlaced, that's another pair of sleeves. Is 50i footage really 50 fps with 100 fields per second, or only 50 fields per second? Is 60i footage really 60 fps with 120 fields per second, or is it 59.94 fields per second at 29.97 frames per second? Both cases exist. There is only one way to know for sure. Separate the fields and set the frame rate to your best estimate. Then create a new composition from the footage by dragging it onto the New Composition icon in the Project panel, or by selecting the footage and choosing New Comp from Selection. Then open the Composition Settings and double the composition frame rate (you can do the math in the field by typing *2 after the value that is there). Then step through the footage one frame at a time with the Page Up and Page Down keys. This will immediately show whether you have interpreted the frame rate correctly and whether the field order is correct.

I have not run specific tests on your camera or your external recording device, but in all likelihood your 50i footage is 50 fields per second and 25 frames per second. IOW, the correct interpretation is probably 25 fps with upper field first. Test to make sure.

Once you know exactly what you have, you have three options. My usual technique is to render this doubled-frame-rate comp to a production format, so I don't have to deal with interlaced footage in the rest of my production pipeline. The second is to use the doubled-frame-rate comp as a nested source for any time manipulation you do, making sure that "Preserve frame rate when nested or in render queue" is turned on. The third option is simply to use the correctly interpreted footage.

One last thing before I go. If you use a third-party solution, you must follow its instructions, especially when you use interlaced footage. The footage must be interpreted correctly for third-party solutions to work properly.

Tags: After Effects

Similar Questions

-

Problem: reinstalling Creative Cloud

Hi all

I have a pretty annoying problem... I had to uninstall Creative Cloud from my computer, and since then I can't seem to reinstall it.

After downloading the CC file from the Adobe site, I open the .dmg, double-click, enter my password since I'm on a Mac, and after that nothing ever happens :/

I tried it several times, and nothing changes...

If anyone has a solution, I'm really interested.

Thank you

Use a browser that allows cookies and pop-up windows, then contact Adobe support (available during PST business hours) and, when it is available, click "Still need help?": http://helpx.adobe.com/x-productkb/global/service-ccm.html

-

Hello

I've been programming in Java for 5 months. I'm now developing an application that uses tables to present information from a database. This is my first time working with tables in Java. I read Sun's Swing tutorial on JTable and more information on other sites, but they are limited to the table's syntax, not best practices.

So I settled on what I think is a good way to manage data in a table, but I don't know whether it is the best way. Here are the general steps I'm going through:

(1) I query the employee data from Java DB (with EclipseLink JPA) and load it into an ArrayList.

(2) I use this list to create the JTable, first converting it to an Object[][] that feeds a custom TableModel.

(3) Thereafter, if I need to get something from the table, I search the list and then use the resulting index to get it from the table. This works because I keep the rows in the same order in the table and in the list.

(4) If I need to add something to the table, I also add it to my list, and likewise if I need to remove or edit an item.

Is the technique I use a best practice? I'm not sure that always having to keep the table synchronized with the list is the best way to handle this, but I don't know how I would work with the table directly, for example to find an item efficiently or to sort the data, without going through a list first.

Are there best practices for working with tables?

Thank you!

Francisco

You should never update the list directly, except when you first create the list and add it to the TableModel. All later updates must be performed on the TableModel directly.

See the Row Table Model example (http://www.camick.com/java/blog.html?name=row-table-model) for this approach. Also, follow the link to BeanTableModel for a more complete example.
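A minimal sketch of the approach described above, assuming a hypothetical Employee row class in place of the EclipseLink entity: the TableModel wraps the list and is the only object that changes it, firing the standard AbstractTableModel events so an attached JTable repaints itself. This is not the Row Table Model class from the linked blog, just an illustration of the same idea.

```java
import java.util.ArrayList;
import java.util.List;
import javax.swing.table.AbstractTableModel;

// Hypothetical row type standing in for the JPA entity loaded from Java DB.
final class Employee {
    private final String name;
    private final String department;

    Employee(String name, String department) {
        this.name = name;
        this.department = department;
    }

    String name() { return name; }
    String department() { return department; }
}

// The model owns its rows; all later changes go through these methods,
// so there is no second list to keep in sync with the table.
class EmployeeTableModel extends AbstractTableModel {
    private static final String[] COLUMNS = {"Name", "Department"};
    private final List<Employee> rows;

    EmployeeTableModel(List<Employee> initialRows) {
        this.rows = new ArrayList<>(initialRows); // copy once, when the model is created
    }

    @Override public int getRowCount() { return rows.size(); }
    @Override public int getColumnCount() { return COLUMNS.length; }
    @Override public String getColumnName(int column) { return COLUMNS[column]; }

    @Override
    public Object getValueAt(int row, int column) {
        Employee e = rows.get(row);
        return column == 0 ? e.name() : e.department();
    }

    Employee getEmployee(int row) { return rows.get(row); }

    void addEmployee(Employee e) {
        rows.add(e);
        int last = rows.size() - 1;
        fireTableRowsInserted(last, last); // notifies any JTable listening to this model
    }

    void updateEmployee(int row, Employee e) {
        rows.set(row, e);
        fireTableRowsUpdated(row, row);
    }

    void removeEmployee(int row) {
        rows.remove(row); // remove by index
        fireTableRowsDeleted(row, row);
    }
}
```

With this in place, `new JTable(model)` displays the data, and `table.setAutoCreateRowSorter(true)` adds column sorting without a separate list; when sorting is on, convert a selected view row with `table.convertRowIndexToModel(viewRow)` before calling `getEmployee`.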

-

Game graphics/animation questions

I'm new to Flash, so I'm *not* aware of some seemingly obvious things, such as the existence of certain methods or the way the .fla interacts with the code. Please keep this in mind when you give explanations.

I was reading a tutorial, and it says you can reference symbols directly by their instance names. Is it possible to do that without timeline actions, using an external .as file instead? I tried, but I got a lot of "undefined" errors even though I imported the Stage class and so on. This is the site I was looking at: http://www.tutcity.com/view/keyboard-controls-in-as3.19742.html. However, it seems to require adding actions in Flash. I got it working when I used Flash actions as suggested, but I would like to keep the code only in .as files rather than depend on Flash actions, for better control. I don't know how to get the .as file to recognize the stage; I get the following errors:

1120: Access of undefined property stage.

1120: Access of undefined property keyHit.

Do I have to instantiate the stage or something? Shouldn't the .fla file know what the stage is? I tried import flash.display.* too and it didn't work. Here's my code:

    package code
    {
        import flash.events.*;
        import flash.display.Stage;
        import flash.display.MovieClip;
        import flash.ui.Keyboard;

        public class Paradox extends MovieClip
        {
            function keyHit(event:KeyboardEvent):void
            {
                var enemySpeed:uint = 5;
                switch (event.keyCode)
                {
                    case Keyboard.RIGHT:
                        MechDragon.x += enemySpeed;
                        break;
                    case Keyboard.LEFT:
                        MechDragon.x -= enemySpeed;
                        break;
                    case Keyboard.UP:
                        MechDragon.y -= enemySpeed;
                        break;
                    case Keyboard.DOWN:
                        MechDragon.y += enemySpeed;
                        break;
                }
            }

            stage.addEventListener(KeyboardEvent.KEY_DOWN, keyHit);
        }
    }

I don't understand the relationship between the .fla file and the .as file as far as animation goes. Is there a command to refresh, or a "next frame" command, or something else? Also, is there a command to get the current frame? I want to save a bunch of frames to a list or something (for time-manipulation stuff). I also want to be able to change the graphics according to what is happening. For example, if the character moves left, switch to the moving-left sprite; if the character moves up, switch to the moving-up sprite, etc. But I want it to stay bound to the same object, simply changing the image. Can I do that, or do I have to make lots of objects and mess around with switches for the graphics?

You can do it in different ways... just as in DirectX, you can draw everything yourself in Flash; see the Graphics class for this.

You can have all your different animations inside your player clip, on its timeline, and just go to the different frames of the clip that you need.

Or you can have various clips or bitmaps and swap them in as you need them.

> But is there a way to, for example, assign several different images to a symbol and then let the code tell Flash when to use which image (or image sequence)?

The best way to do that would be to use the timeline and go to the frames you need...

-

Hello

I have a custom dashboard with a TimeRange input. I want to display the report for a given date range (dateA) and for the range exactly one month before it (dateB). I created a script to calculate the value of dateB:

    package user._foglight.scripts;

    def startCal = Calendar.getInstance()
    startCal.setTime(timeRangeInput.getStart())
    startCal.add(Calendar.MONTH, -1)

    def endCal = Calendar.getInstance()
    endCal.setTime(timeRangeInput.getEnd())
    endCal.add(Calendar.MONTH, -1)

    timeRangeInput.getStart().setMonth(startCal.get(Calendar.MONTH))
    timeRangeInput.getStart().setYear(startCal.get(Calendar.YEAR) - 1900)
    timeRangeInput.getEnd().setMonth(endCal.get(Calendar.MONTH))
    timeRangeInput.getEnd().setYear(endCal.get(Calendar.YEAR) - 1900)

    return timeRangeInput

The problem is that whenever I change the TimeRange in the dashboard, dateB does not change; it keeps using the old value. Help, please.

OK, I solved my problem using this thread as a reference: http://en.community.dell.com/techcenter/performance-monitoring/foglight-administrators/f/4788/t/19552460#47657

So here is what I did:

I've created a script that will return the metadata 2:Custom slot

    Calendar calendar = Calendar.getInstance();
    def altTimeRange = timeRange.createTimeRange(calendar);

    Calendar startCalendar = Calendar.getInstance();
    startCalendar.setTime(altTimeRange.getStart());
    startCalendar.add(Calendar.MONTH, -1);

    Calendar endCalendar = Calendar.getInstance();
    endCalendar.setTime(altTimeRange.getEnd());
    endCalendar.add(Calendar.MONTH, -1);

    def range = endCalendar.getTime().getTime() - startCalendar.getTime().getTime();
    def altTimeRange1 = functionHelper.invokeFunction("system:wcf_common.9", range, startCalendar.getTime(), 0);

    return altTimeRange1;

With this script, you can assign the returned value to the common time-range input in the view.

But here is the trickiest part: if you assign the value in the primary inputs, the script is called only once, so no matter how many times you change the time range, the custom time range stays the same. I don't know whether this is a bug or not.

However, if you put the time range in the additional inputs, the script is called whenever the time range changes.

It took me a while to figure this out; hopefully it will help someone :)
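For anyone porting this logic elsewhere, here is the date arithmetic alone as a plain-Java sketch. It uses no Foglight API (timeRange, functionHelper and the system:wcf_common.9 function are Foglight-specific and not reproduced here), and the MonthShift class is hypothetical. Calendar.add(Calendar.MONTH, -1) already rolls the year back in January and clamps the day in short months, so separate setMonth/setYear(... - 1900) adjustments are not needed for the arithmetic itself.

```java
import java.util.Calendar;
import java.util.Date;
import java.util.GregorianCalendar;
import java.util.TimeZone;

// Sketch: shift a [start, end] range back by exactly one calendar month.
final class MonthShift {
    private MonthShift() {}

    static Date oneMonthEarlier(Date d, TimeZone zone) {
        Calendar cal = new GregorianCalendar(zone);
        cal.setTime(d);
        cal.add(Calendar.MONTH, -1); // rolls the year back in January, clamps Mar 31 to Feb 28/29
        return cal.getTime();
    }

    static Date[] shiftRangeBackOneMonth(Date start, Date end, TimeZone zone) {
        return new Date[] {oneMonthEarlier(start, zone), oneMonthEarlier(end, zone)};
    }

    public static void main(String[] args) {
        TimeZone utc = TimeZone.getTimeZone("UTC");
        Calendar c = new GregorianCalendar(utc);
        c.clear();
        c.set(2015, Calendar.JANUARY, 15);
        Date start = c.getTime();
        c.set(2015, Calendar.MARCH, 31);
        Date end = c.getTime();

        Date[] previous = shiftRangeBackOneMonth(start, end, utc);
        System.out.println(previous[0].toInstant()); // 2014-12-15T00:00:00Z
        System.out.println(previous[1].toInstant()); // 2015-02-28T00:00:00Z
    }
}
```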

-

Re: Manipulating the value at the time of insertion

Hello

I have a text field. When the user enters a value in the text field and presses the button to insert the record, I'd like to manipulate the value at the same time using a SQL function.

Where and how can I do this?

Thanks in advance.

I gave the answer; please re-read my post.

You could also do it in an after-submit computation on the item. What exactly do you want to do?

Thank you

Tony Miller

Webster, TX

-

How long must the Satellite Pro U200-10B be charged the first time?

Hello

How long should the Satellite Pro U200-10B laptop be charged the first time?

Kind regards

CC

Hi Christoph

That's easy. You must charge the laptop battery until the battery LED switches to green or blue. I don't know exactly which LED colors the U200 uses.

A hint: all the details should be in the user's manual, which is always preinstalled on Toshiba laptops.

There you will also find information about the battery and how to handle it.

Regards

-

External network drive: help getting it to work with Time Machine

I have been racking my brain on this subject for the last 2 days, because I have to move a ton of material off my laptop before it goes kaput (I posted about it yesterday in another thread).

Here's the plan... I think:

I want to move photos, movies (home movies, not DVDs) and the iTunes library from my MacBook Pro to an external HD attached to the wifi router, so I don't have to be hard-wired to access photos, music, etc. at home.

I want to continue using Time Machine to back up a) my laptop and b) the files moved to the external hard drive.

This will require a SECOND external drive.

My understanding is that this is possible only if the SECOND external drive is connected to an 802.11ac Apple AirPort; no other wifi router will work.

The AirPort could be connected by Ethernet to the existing Netgear wifi router that Comcast installed to run our home security system.

Is this possible? It is frying my brain.

Or...

Do I (a) get a 2 TB Time Capsule,

(b) plug it into the Netgear,

(c) load all my media onto it, and

(d) connect a 3 TB drive to the Time Capsule to back up what is on it and my laptop?

Does it work?

It kills me.

-

Good evening

First of all, I am fairly new to LabVIEW and NXT programming, so I hope this isn't a stupid question.

Right now, I have this little VI to help me move a robotic arm to an absolute position.

When I hit the Run button, the value of the control is read, the rotation sensor is read, and then that data gets manipulated to determine the direction and number of degrees of rotation for the NXT motor. Then it stops completely.

That is my problem: I need the VI to keep updating the position as the wheel turns, not just read one value and then get stuck.

Thanks in advance,

I'm sorry, it was a stupid question.

The ramp had been set to a constant, and that's why the loop never got past the motor move. Configuring the ramp from the settings below solves the problem.

"doh!"

Feel free to delete this thread, and sorry if it was a waste of everyone's time.

-

Streaming AO of a manipulated copy of AI: PCI-6115

I would like to output a manipulated copy of an analog input on an analog output, but I can't seem to get anywhere with the available examples. It may well be an impossible task with DAQmx.

Thanks, John, I was able to run it; this would certainly work if I actually had a continuous stream of data. I described my system incorrectly: there are synchronization issues that won't allow a delay between the AI and AO without errors. Still, it works as expected, which makes me wish I had a continuous application to use it on. No worries, I have a workaround that still lets me manipulate the data in real time using one AO channel and some hardware.

If there is a real-time solution to this problem, let me know.

-

Greetings,

I'm working on a data acquisition application that runs continuously and needs to record data every 10 seconds for a duration of 2 seconds.

For each recording interval, I have to create a new file with a sequence number in the file name (Test_1.txt, Test_2.txt, ...) and add the current date and time to the file header.

My question is: how can I do this with what I have so far?

The attached VI is a sketch of what I am trying to accomplish. The application also has DAQmx Read functions in the adjacent While Loop and a few data manipulations. Outside the main loop, I create and start the DAQmx tasks.

Thank you for your help.

Best regards

Matej

Hello

Figured this one out myself.

Here is the solution, if anyone needs something similar.

Best regards

Matej
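The bookkeeping described in this thread (a new numbered file per recording window, with a date/time header) is independent of LabVIEW, so here is a minimal Java sketch of just that logic. It assumes the 2-second window starts at the beginning of each 10-second interval and that elapsed time is measured from the start of acquisition; the class, method names and header format are hypothetical, and it does not touch DAQmx or write any files.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Sketch: record for RECORD_SECONDS at the start of every INTERVAL_SECONDS,
// writing each window to Test_1.txt, Test_2.txt, ... with a date/time header.
final class IntervalLogPlan {
    static final double INTERVAL_SECONDS = 10.0;
    static final double RECORD_SECONDS = 2.0;
    private static final DateTimeFormatter HEADER_FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    private IntervalLogPlan() {}

    /** True while elapsed time is inside the recording window of its interval. */
    static boolean isRecording(double elapsedSeconds) {
        return elapsedSeconds % INTERVAL_SECONDS < RECORD_SECONDS;
    }

    /** 1-based number of the interval (and file) that elapsedSeconds falls in. */
    static int fileIndex(double elapsedSeconds) {
        return (int) Math.floor(elapsedSeconds / INTERVAL_SECONDS) + 1;
    }

    static String fileName(int index) {
        return "Test_" + index + ".txt";
    }

    static String header(LocalDateTime timestamp) {
        return "Recorded: " + HEADER_FORMAT.format(timestamp);
    }

    public static void main(String[] args) {
        double t = 11.0; // 1 s into the second interval
        System.out.println(isRecording(t) + " " + fileName(fileIndex(t))); // true Test_2.txt
        System.out.println(header(LocalDateTime.of(2015, 3, 4, 5, 6, 7))); // Recorded: 2015-03-04 05:06:07
    }
}
```

In the acquisition loop, a file would be opened (and the header written) on the first iteration where `isRecording` turns true, and closed when it turns false.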

-

What is the minimum response time from analog input, through online DSP, to analog output?

Hello experts!

I want to know whether it is possible to get a very short response latency (~1 ms) from sound recording (analog input), through online processing (online DSP), to presentation of the processed sound (analog output) using DAQmx code. My NI system includes an NI SMU-8135 controller, an SMU-6358 multifunction DAQ and an SMU-5412 arbitrary waveform generator. I also have access to the latest version of LabVIEW (2015).

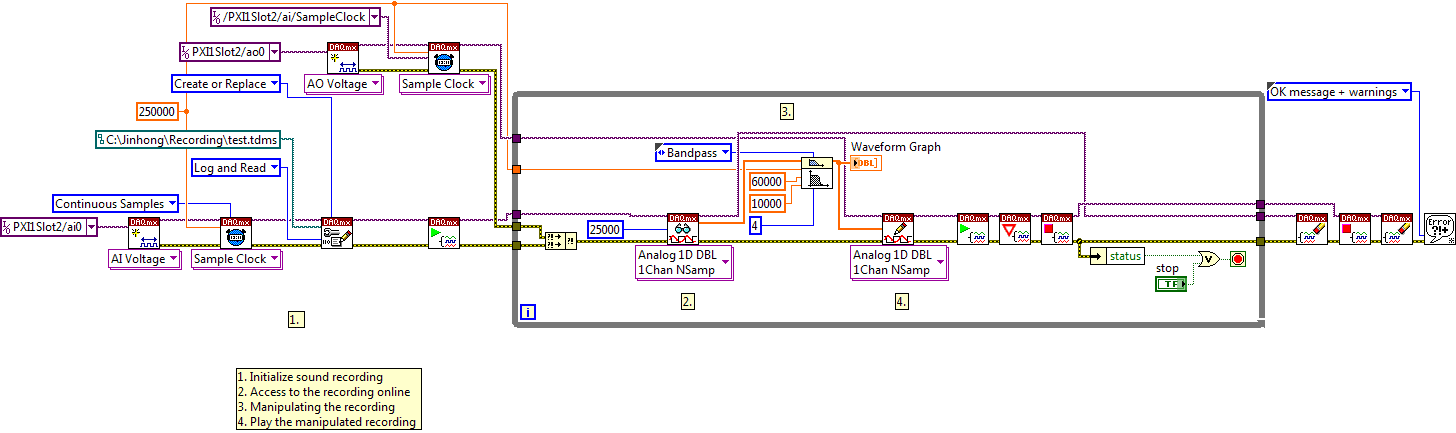

My project is on auditory perturbation, which involves recording vocalizations, manipulating the recorded vocalizations and then presenting the manipulated vocalizations. My current idea of how to achieve this is a triggered output voltage after reading the input with DAQmx Read. The DAQmx Read output is filtered online and then passed as the input to DAQmx Write for the analog output. For illustration, example code is shown below. Note that, for simplicity, the code for the trigger part is not shown here; that's something to work on in the future.

My question is whether the approach above can reach ~1 ms latency, or whether I have to rely on a totally different programming module, i.e. FPGA. I am very new to LabVIEW and to NI. After reading some documentation on FPGA, I realized that my current hardware cannot do this because I do not have FPGA signal-processing hardware. Am I wrong?

Something that might be important to mention: I'm testing with an array of microphones (approximately 16) at a very high sampling rate (250 kHz), which is technically very high speed. Naturally, these recordings must be saved to the hard drive. Again, only a single microphone is shown here.

I have two concerns about whether my current approach can achieve my goal.

First, for the DAQmx Read function in step 2, I set the number of samples to read to 1/10 of the sampling rate. LabVIEW recommends this, and it seems necessary to avoid the buffer overflow that occurs when a smaller number is used. However, my concern about response latency is that this alone might already cause a 100 ms delay, i.e. the time needed to collect these samples before reading. Is this true?

Second, every iteration of the While Loop takes at least a few tens of milliseconds (~30 ms). Is that inherently a 30 ms delay?

Hey, I've never used and am not familiar with the hardware you have, so I can't help you there.

On the RT side, again, I don't know your hardware, but I used an NI myRIO-1900, which has a specific high-speed personality for RT where I can acquire audio at 44 kHz and process the data. Since the processing ultimately works on the block of audio data you have gathered, the achievable latency depends on the sampling frequency and the number of samples per block; please check this.

I lost about 2 weeks figuring out that the host side does not work, and another 2 weeks figuring out that even the RT side does not work for online (real-time) processing. So now I'm finally working on FPGA, where the sampling rate is 250 kHz (shared by multiple channels, of course).

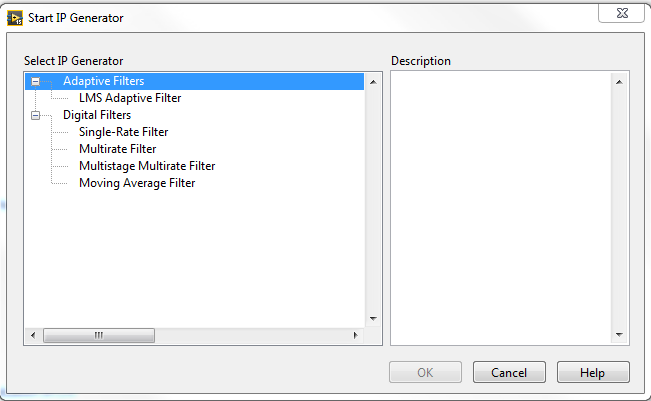

The hard part with FPGA is the coding; check whether the filter you want is among those below, since LabVIEW automatically generates code for some filters.

Most of them will run in a single SCTL (single-cycle Timed Loop), i.e. if your target has a 40 MHz clock, the algorithm will run in 25 ns. That's what I was looking for; I hope it helps you too.

See you soon... !
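The latency question in this thread comes down to simple arithmetic, sketched here in Java (class and method names are hypothetical). It supports the original concern: with a read size of 1/10 of the sample rate, each DAQmx Read cannot return until 100 ms of data has arrived, before any loop or processing time is added, while a 1 ms budget at 250 kHz leaves room for at most 250 samples per block (ignoring processing and output time). The last line reproduces the 40 MHz / 25 ns figure from the reply.

```java
// Sketch: latency floors implied by block size and clock rate.
final class LatencyMath {
    private LatencyMath() {}

    /** Milliseconds needed just to fill one read block of samplesPerRead at sampleRateHz. */
    static double blockLatencyMs(int samplesPerRead, double sampleRateHz) {
        return 1000.0 * samplesPerRead / sampleRateHz;
    }

    /** Largest block that fits inside a latency budget (ignores processing and output time). */
    static int maxSamplesForBudget(double budgetMs, double sampleRateHz) {
        return (int) Math.floor(budgetMs * sampleRateHz / 1000.0);
    }

    /** Duration of one clock cycle in nanoseconds. */
    static double clockPeriodNs(double clockHz) {
        return 1e9 / clockHz;
    }

    public static void main(String[] args) {
        double fs = 250_000.0;                                   // 250 kHz per channel
        System.out.println(blockLatencyMs((int) (fs / 10), fs)); // 100.0 (ms for a 1/10-second read)
        System.out.println(maxSamplesForBudget(1.0, fs));        // 250 (samples within 1 ms)
        System.out.println(clockPeriodNs(40e6));                 // 25.0 (ns per cycle at 40 MHz)
    }
}
```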

-

Execution time varies in image processing

Hello

I designed a VI to process eye images and calculate the center of the eye. However, I'm getting varying run times, sometimes 100 ms and sometimes > 200 ms. I used a Flat Sequence structure and timers to measure the VI's run time. I had a few parallel processes and forced sequential flow using error wires, but the problem remains. I thought it could be due to different input images, but running the same image again and again shows the same problem. Can someone tell me what the cause is?

Running code on a Windows system is NEVER deterministic.

That being said, writing the code so that all memory can be allocated in advance will be an important step toward improving performance.

Playing with priorities can also improve things, but you can easily make things worse if you use priorities the wrong way.

In addition, applications running in parallel compete for system resources (CPU, memory, interfaces, ...) in unpredictable ways and therefore lead to more jitter.

If you really have a hard limit, for example on loop-iteration jitter (e.g. jitter must not exceed 1 µs), the only way to address it is to move to RT or maybe even FPGA (depending on complexity vs. interfaces vs. knowledge/skills).

If determinism is required for the hardware I/O, then unless it is single-point (as in control loops), hardware-clocked I/O with packet-based data handling in the application is sufficient for 99.99999% of all applications.

Norbert

-

Hi all

I am a fairly new LabVIEW user and am trying to create a program that monitors the inputs of different CompactDAQ modules (9213, 9217), checks the sensors for errors and then uses the data for some final calculations like averaging. I've set up a small piece of code that includes the main functions I am trying to accomplish. Right now I'm fairly comfortable with setting up and assigning the channels, then passing the data into a While Loop that continuously pulls the data from these sensors.

I have the calculation VI complete and have filled in the channel UI inputs; now I'm working on the data manipulation and reading side. The 1D waveform array that comes out of the Read function is currently split into the components I want (channel name string and Y). It then passes this information out of the loop through a queue to the parallel loop below, which performs the error checks and the average calculations. A few things make this difficult. I showed 2 sensors in my example, but in the actual program there may be up to 400 inputs. The problem is that not all of these sensors will be used in every test the software is used for. Using the inputs in the test VI as an example: if I'm averaging these 2 inputs to create a common value, but in one test sensor 2 is not present, the program must recognize that fact and leave it out of the average.

So to get down to it, I want to create something that can look through the 1D waveform data, determine which channels the values belong to, perform the necessary functions on those numbers once they are identified, and then pass the results on to the VI that displays them as usable information for the user. Finding and sorting is giving me more trouble than I expected, which makes me think there must be an easier way.

Has anyone encountered this? Does my explanation of what I'm trying to do make sense? Let me know and I can clarify.
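One way to handle optional sensors is to look channels up by name and leave out any that are not present. Below is a minimal Java sketch of that logic, assuming each reading can be keyed by its channel name (as the name string split off the waveform suggests). The channel names, the "missing or NaN means absent" convention and the class itself are hypothetical.

```java
import java.util.Map;
import java.util.OptionalDouble;

// Sketch: average a group of channels, skipping channels absent from this test.
final class SensorAverage {
    private SensorAverage() {}

    /**
     * readings maps channel name to latest value; a channel missing from the map
     * (or reporting NaN) is treated as not present and is left out of the average.
     */
    static OptionalDouble averagePresent(Map<String, Double> readings, String... channels) {
        double sum = 0.0;
        int count = 0;
        for (String channel : channels) {
            Double value = readings.get(channel);
            if (value == null || value.isNaN()) {
                continue; // sensor not used in this test
            }
            sum += value;
            count++;
        }
        return count == 0 ? OptionalDouble.empty() : OptionalDouble.of(sum / count);
    }

    public static void main(String[] args) {
        Map<String, Double> thisTest = Map.of("TC1", 20.0, "TC2", 30.0);
        System.out.println(averagePresent(thisTest, "TC1", "TC2"));  // OptionalDouble[25.0]
        Map<String, Double> otherTest = Map.of("TC1", 20.0);          // sensor 2 not fitted
        System.out.println(averagePresent(otherTest, "TC1", "TC2")); // OptionalDouble[20.0]
    }
}
```

Returning an empty result when no channel in the group is present lets the caller show "no data" instead of a misleading zero.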

Functionally, I don't see anything wrong. With respect to efficiency, keep some general guidelines in mind. The issues I bring up will not affect your code now, since your arrays are very small, but they can start to fragment memory and slow down the software if your arrays get much larger and the software runs for a long period of time.

1. Try not to use Build Array. Whenever you use Build Array, LabVIEW creates a copy in memory. Try to initialize your arrays and replace subsets instead.

2. As much as you can, try not to index arrays or unbundle clusters multiple times. Try to perform as many tasks as possible in one shot (this was obvious in your second loop).
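The first tip is about memory allocation, and the difference can be illustrated outside LabVIEW. The Java sketch below is only an analogy (class and method names are hypothetical): both functions produce the same result, but the first allocates and copies a new array on every iteration, which is the cost the reply attributes to Build Array, while the second allocates once and overwrites elements in place, like Initialize Array followed by Replace Array Subset.

```java
import java.util.Arrays;

// Java analogy for the two LabVIEW patterns: growing an array inside a loop
// versus allocating once and overwriting elements.
final class PreallocateDemo {
    private PreallocateDemo() {}

    /** Like Build Array in a loop: every append allocates and copies a new array. */
    static double[] growByCopy(double[] samples) {
        double[] out = new double[0];
        for (double s : samples) {
            out = Arrays.copyOf(out, out.length + 1); // full copy on every iteration
            out[out.length - 1] = s * 2.0;
        }
        return out;
    }

    /** Like Initialize Array + Replace Array Subset: one allocation, then in-place writes. */
    static double[] preallocated(double[] samples) {
        double[] out = new double[samples.length];
        for (int i = 0; i < samples.length; i++) {
            out[i] = samples[i] * 2.0;
        }
        return out;
    }

    public static void main(String[] args) {
        double[] input = {1.0, 2.5, 4.0};
        System.out.println(Arrays.toString(growByCopy(input)));   // [2.0, 5.0, 8.0]
        System.out.println(Arrays.toString(preallocated(input))); // [2.0, 5.0, 8.0]
    }
}
```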

Other than that, it's looking good.

I made a few changes to your code and attached it. I wanted to give you a different take on it, in terms of being able to control inputs and outputs programmatically if you had a lookup table. I did not make the same changes to your second loop, but I can guide you through what to do if you want to go in that direction. Again, your original code is well done for someone who is new to LabVIEW, so don't feel you need to follow the new VI design. Just try to avoid Build Array and multiple indexing as a good programming habit.

-

An array manipulation problem

Hello:

I'm sorry for wasting your time if this is a basic manipulation. I am new to LabVIEW and I tried several methods; unfortunately none of them worked.

There are two arrays, a reference array and an experiment array. Suppose they are:

Array (R): 0 0 5 5 5 5 0 0 0 5 5 5 0 5

Array (E): 1 2 3 4 5 6 7 8 9 10 11 12 13 14

How can I get new subarrays of Array (E) whose elements correspond to the value 5 in Array (R)? For this example, the new subarrays would be:

Array (E1): 3 4 5 6

Array (E2): 10 11 12

Array (E3): 14

Hello!

Try this and let me know
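The attachment in the reply is not reproduced here, but the logic itself is short. Below is a minimal Java sketch of one approach (not necessarily what the attached VI does): walk both arrays together, open a run when R equals the target value, close it when the value changes, and copy the matching slice of E. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class RunExtractor {
    private RunExtractor() {}

    /** Returns one sub-array of e for each contiguous run where r[i] == target. */
    static List<int[]> runsWhere(int[] r, int[] e, int target) {
        if (r.length != e.length) {
            throw new IllegalArgumentException("arrays must have equal length");
        }
        List<int[]> runs = new ArrayList<>();
        int start = -1; // start index of the current run, or -1 when outside a run
        for (int i = 0; i <= r.length; i++) {
            boolean match = i < r.length && r[i] == target; // i == length closes a trailing run
            if (match && start < 0) {
                start = i;
            } else if (!match && start >= 0) {
                runs.add(Arrays.copyOfRange(e, start, i));
                start = -1;
            }
        }
        return runs;
    }

    public static void main(String[] args) {
        int[] r = {0, 0, 5, 5, 5, 5, 0, 0, 0, 5, 5, 5, 0, 5};
        int[] e = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14};
        for (int[] run : runsWhere(r, e, 5)) {
            System.out.println(Arrays.toString(run));
        }
        // prints [3, 4, 5, 6], then [10, 11, 12], then [14]
    }
}
```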

Maybe you are looking for

-

Any time Java is needed, for example on the Java check page, Chrome/Firefox/IE freeze and then crash, giving this information. I have reinstalled Java, Chrome and IE and reset Firefox. Problem event name: AppHangB1 Application Name: firefox.exe Application

-

Does Firefox offer a security download when it suspects a virus or Trojan horse? Is this legitimate or a virus attempt?

-

I can't get my ISP connection to open from my startup folder. Why not?

My ISP connection doesn't work from the startup folder. Why not? Please help; I have XP SP3.

-

Why won't my keyboard let me scroll to Safe Mode or boot from CD? And how do I fix this?

I use Windows XP Home Edition, and when I start my computer it won't let me press F8 to enter the Safe Mode menu. When I restart my computer I can get to the screen where it lets you choose to start in Safe Mode... but for some reason I can't scro

-

How do I remove HP Product Assistant, which keeps popping up on my computer?

How can I remove HP Product Assistant, which keeps showing up on my computer and will not uninstall?