Database is slow

How can I find out why the database is slow? A user has complained that the database is slow; how can I check which process is taking a long time?

select * from v$version;

Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production

PL/SQL Release 11.2.0.2.0 - Production

"CORE 11.2.0.2.0 Production"

TNS for HPUX: Version 11.2.0.2.0 - Production

NLSRTL Version 11.2.0.2.0 - Production

Hello,

Have you configured Enterprise Manager? Using EM is the best and easiest way to see what the problem is with your database.

Otherwise, if performance has been slow in the last few minutes, you can use Active Session History (ASH) reports to check the top queries. You will also see the top wait events in the same report.

If the performance problem has persisted over the last few hours, you had better check the AWR and ADDM reports. They give you better insight into whether there are performance problems. From the top wait events, you can see whether the bottleneck is inside the database or outside it.

If a query suddenly starts performing poorly, you can first try gathering statistics on the tables involved.

Kind regards

Rizwan Wangde

SR Oracle DBA.

http://Rizwan-DBA.blogspot.com

Tags: Database

Similar Questions

-

How do I know whether the web server or the APEX database is slow?

I want to test whether the web server is initially slow to load the page or the database is...

Does anyone know how? Or how to check that the HTTP server is configured properly?

Thank you

Dean

Hello,

When you turn on debugging and then check the debug information, you can see the time required for each step. This is the best option.

Alternatively, you can install the application on Oracle's website (the free online workspace) and try it there.

But when you do this, responses will take longer because of internet speed. Another way is to run the time-consuming SQL from your app directly as SQL, several times, and compare the timings against the application's time.

* If this answer is useful, please mark it.
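The last suggestion (run the page's SQL on its own and compare with the application's time) can be sketched as below. This is only an illustration: it uses Python's built-in sqlite3 as a stand-in database, and the `orders` table and `page_query` function are hypothetical names, not anything from the original application. Against a real system you would point `run_query` at your own connection.

```python
import sqlite3
import statistics
import time


def median_runtime(run_query, repeats=5):
    """Run the callable several times; return the median wall-clock time in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Stand-in database (assumption): replace with a connection to your own database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany(
    "INSERT INTO orders (amount) VALUES (?)",
    [(i * 1.5,) for i in range(10000)],
)


def page_query():
    # Hypothetical query copied from the slow page.
    return conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchall()


if __name__ == "__main__":
    print(f"median SQL time: {median_runtime(page_query):.6f} s")
```

If the median SQL time is a small fraction of the page's response time, the database is probably not the main cause and the web tier or network deserves a closer look.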

-

Database export running very slowly

Hello

I am exporting our Oracle 11g R2 server.

Why is it hanging at "exporting system procedural objects and actions"? It has already been stuck there for 5 hours.

C:\Documents and Settings\Administrator>exp system/manager@prod1 FULL=Y STATISTICS=NONE
Export: Release 11.1.0.6.0 - Production on Tue Apr 26 09:11:53 2011
Copyright (c) 1982, 2007, Oracle. All rights reserved.
Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production
With the Partitioning, OLAP, Data Mining and Real Application Testing options
Export done in WE8MSWIN1252 character set and UTF8 NCHAR character set
server uses UTF8 character set (possible charset conversion)
About to export the entire database ...
. exporting tablespace definitions
. exporting profiles
. exporting user definitions
. exporting roles
. exporting resource costs
. exporting rollback segment definitions
. exporting database links
. exporting sequence numbers
. exporting directory aliases
. exporting context namespaces
. exporting foreign function library names
. exporting PUBLIC type synonyms
. exporting private type synonyms
. exporting object type definitions
. exporting system procedural objects and actions

How can I count all the procedural objects and actions, since I don't know how big they are?

How can I speed up this part?

Thank you

Hi;

You are on 11g R2, so please use expdp. Please also see:

Master Note for Data Pump [ID 1264715.1]

Regards,

HELIOS

-

'The database is slow when it rains': what is the story?

I have heard it, but only as a single line. Can someone point me to a link where I can read the full version of this famous quote from Tom Kyte?

Thank you

I also heard Jonathan tell the story during the AIOUG conference in Hyderabad. You can read the story in Chapter 1 of Expert Oracle Practices: Oracle Database Administration from the Oak Table. See http://books.google.com/books?id=xzjw4hoeDegC&pg=PA6#v=onepage&q&f=false

The first chapter of this book has been reprinted in the May 2010 issue of the NoCOUG Journal beginning on page 13. See http://www.nocoug.org/Journal/NoCOUG_Journal_201005.pdf

Iggy

Published by: Iggy Fernandez 23 Sep, 2010 15:03

-

Connection to the database slows down when using OAS 10.1.2.3

We have a customized Oracle Forms application; the current production version is 10g (9.0.4.3).

We are trying to upgrade to 10.1.2.3.

I have installed Oracle 'Forms and Reports' 10.1.2.0.2 + patch 10.1.2.3 on the same server, in a separate Oracle home;

We use exactly the same tnsnames.ora and sqlnet.ora files in the production version and the new version. When I run sqlplus from the production Oracle home, it takes 3 seconds to connect to the database; when I run sqlplus from the new Oracle home against the same database, it takes 30 seconds.

What is the problem?

My sqlnet.ora file is

SQLNET.AUTHENTICATION_SERVICES = (NTS)

NAMES.DEFAULT_DOMAIN = WORLD

NAMES.DIRECTORY_PATH = (LDAP, TNSNAMES, ONAMES, HOSTNAME)

DEFAULT_SDU_SIZE = 8761

My tnsnames.ora file is

test.WORLD = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (COMMUNITY = TCP.WORLD)(PROTOCOL = TCP)(HOST = TEST)(PORT = 1521))) (CONNECT_DATA = (SID = TEST)(GLOBAL_NAME = TEST.WORLD)))

Thank you, Mary

Great, Mary!

Can you mark your question as answered, to show other users?

First of all... It was the wrong forum :)

Regards,

Maurice

-

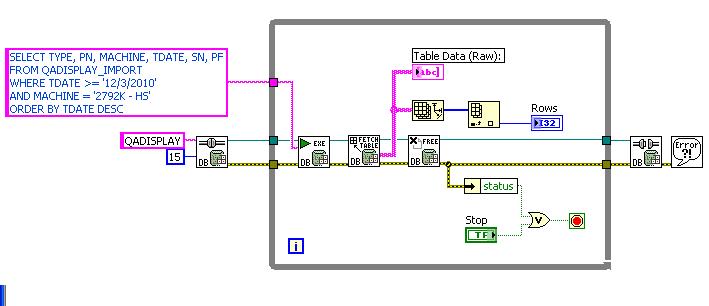

I use the DB toolset to acquire data from the server, and it is very slow. It takes almost 3 minutes to fetch the data using the toolset, but there is virtually no wait when I run the query in MS SQL Server Management Studio. I don't expect the toolset to be as fast as SSMS, but maybe a little closer... unless there is something wrong with my VI, in which case it's another story.

From searching the forum, this seems to be a known problem...

If anyone has an idea for speeding this up, please advise...

Thank you very much..

"Why is the database toolset slow to return data from the Table?"

http://digital.NI.com/public.nsf/allkb/862567530005F0A186256A33006B917A

(I suspect that the database variant-to-data conversion at the heart of the table fetch is the culprit. Try the normal recordset fetch, which outputs an array of variants.)

-

Dear friends,

The developers around me tell me that the database is slow (they use PL/SQL Developer and other tools).

How can I check if the database is actually causing the problem?

Thanks in advance.

Nith

I don't know why CKPT mentioned the listener's response time, since once the connection is established the listener is no longer in the picture. He is probably counting the time taken to establish the connection as well, which is why he believes that. Anyway, there is no way to get it unless you enable tracing for the listener, which would record the listener's activity. But I would rather not check that; I would take a Statspack/AWR report and see what comes out of it.

HTH

Aman...

-

Database jobs: load/cost on the database

Does the database get slower when new jobs are created in the database?

I understand that a job is a sort of timer that the database engine must keep running, a sort of loop checking whether the time has arrived to run the code. I think this loop could make the database slower, but not by much, I guess?

My database is very busy/loaded and I don't want to make it slower. I thought that maybe I should use a cron job at the operating-system level instead, so that the database does not get more load from handling jobs.

CharlesRoos wrote:

Does the database get slower when new jobs are created in the database?

It depends on several factors. Creating a job is simply a SQL update of the SYS.JOB$ table, and even a basic Oracle instance is capable of thousands of updates per second.

I understand that a job is a sort of timer that the database engine must keep running, a sort of loop checking whether the time has arrived to run the code. I think this loop could make the database slower, but not by much, I guess?

Not really. There is no timer as such. There are Oracle background processes that run jobs; these process the work (workload) specified in the job table.

My database is very busy/loaded and I don't want to make it slower. I thought that maybe I should use a cron job at the operating-system level instead, so that the database does not get more load from handling jobs.

That would be a big waste of time. (Whether an Oracle workload is run via cron using a dedicated server process or by an Oracle job process, the workload is the same and still needs to run.)

-

Maximum number of lead scoring models

Hello

Is there a maximum number of LS models that we can use?

We have LS models per campaign and I was wondering whether this could cause any problems.

Thank you very much

Factors that can affect performance:

- Size of the database (the larger the database, the slower the potential performance)

- Complexity of the lead score Profile or Engagement criteria and parameters (avoid using wildcards (*), because they can affect performance)

- Number of lead scoring models (the more lead score models, the slower the potential performance; it is recommended that no more than 3 models be enabled at any time)

For example, there may be major performance problems if there are 20 Profile and 20 Engagement criteria in a single model, especially if your database is larger than 1 million contacts. However, this may not be the case for a smaller database of 10K contacts. The same reasoning applies to the number of active lead score models: the size of the database and the complexity of the criteria and parameters can affect performance.

-

JDBC MaxCapacity in JDBCDataSourceRuntimeMBean?

According to https://docs.oracle.com/cloud/latest/as111170/WLSAR/weblogic/management/runtime/JDBCConnectionPoolRuntimeMBean.html, JDBCDataSourceRuntimeMBean replaced JDBCConnectionPoolRuntimeMBean.

In the older bean, I found MaxCapacity, which reports the value I would expect, as configured for the data source. I can't find a similar attribute on the new bean. I see several that seem appropriately named but do not reflect the value I expect when compared with the old bean's values. These attributes look promising:

Attribute: Description (from the Oracle documentation)

ActiveConnectionsHighCount: Highest number of active database connections in this instance of the data source since the data source was instantiated. Active connections are connections in use by an application.

CurrCapacityHighCount: Highest number of database connections available or in use (current capacity) in this instance of the data source since the data source was deployed.

HighestNumAvailable: Highest number of database connections that were idle and available to be used by an application at any time in this instance of the data source since the data source was deployed.

I have my data source set up with a maximum of 25 connections. When I check the old bean's MaxCapacity I find '25'; when I check the above attributes I find:

- ActiveConnectionsHighCount = 10

- CurrCapacityHighCount = 10

- HighestNumAvailable = 10

...on one server and...

- ActiveConnectionsHighCount = 16

- CurrCapacityHighCount = 16

- HighestNumAvailable = 7

Assuming that the maximum number of connections at any time across my two nodes is 25 (as I set on the data source), I'm trying to find ways to monitor the data via JMX and detect when I'm close to exceeding my connection availability. However, I see no obvious way to relate the data without knowing the maximum number of connections available.

Can I safely rely on the deprecated bean to get MaxCapacity?

Do I have to sum my HighestNumAvailable readings across all consumers of the data source (e.g. 25 - (10 + 7) = 8 remaining)? If so, this sounds tedious. I hope to find a counter for the data source at the domain level that gives me this detail.

I would appreciate any guidance on properly monitoring my data source usage.

Jon-Eric

I tried browsing the MBeans using WLST (I guess that might help a little), and here's what I found:

- JDBCDataSourceRuntimeMBean, as you said, does not include the MaxCapacity attribute

- The MaxCapacity attribute can be found under "JDBCSystemResources" (not a runtime attribute)

- Here's the full path in WLST: wls:/{DomainName}/serverConfig/JDBCSystemResources/{DATASOURCE_NAME}/JDBCResource/{DATASOURCE_NAME}/JDBCConnectionPoolParams/{DATASOURCE_NAME}

- Listing the contents/attributes there with the "ls()" command gives:

.
.
-r--   MaxCapacity   100
-r--   MinCapacity   1
.
.

So I guess you can use a non-runtime MBean to obtain the desired value.

I hope that is useful...

Kind regards

White
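Once MaxCapacity has been read from the configuration MBean as described above, the "am I near the limit" check is simple arithmetic. The sketch below is a minimal illustration in Python, not WebLogic code: the server names, the 0.8 threshold, and the idea of feeding it a current in-use count (for example from a runtime attribute such as ActiveConnectionsCurrentCount) are assumptions. It also assumes the limit is evaluated separately for each server's pool instance, which is worth verifying for your setup; if your limit is shared, sum the counts first.

```python
def pool_utilization(max_capacity, active_connections):
    """Fraction of the pool in use on one server (0.0 to 1.0)."""
    if max_capacity <= 0:
        raise ValueError("max_capacity must be positive")
    return active_connections / max_capacity


def servers_near_limit(max_capacity, active_by_server, threshold=0.8):
    """Names of servers whose in-use fraction is at or above the threshold."""
    return sorted(
        name
        for name, active in active_by_server.items()
        if pool_utilization(max_capacity, active) >= threshold
    )


if __name__ == "__main__":
    # Numbers taken from the question: MaxCapacity 25, high counts of 10 and 16.
    readings = {"server1": 10, "server2": 16}  # hypothetical server names
    print(servers_near_limit(25, readings))
    print(servers_near_limit(25, readings, threshold=0.6))
```

With the question's figures, neither server reaches 80% of 25, while the server at 16 connections crosses a 60% threshold.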

-

inmemory_size parameter in the CDB

I set the inmemory_size parameter to 200 MB, but after I bounced my database the value shown is 208 MB. Can you help me understand why it becomes 208 MB after the bounce?

sys@CDB1> alter system set inmemory_size = 200M scope = spfile;
System altered.
Elapsed: 00:00:00.09

sys@CDB1> show parameter inmemory_size
NAME                                          TYPE        VALUE
--------------------------------------------- ----------- -------------------------
inmemory_size                                 big integer 0

sys@CDB1> shutdown immediate;
Database closed.
Database dismounted.
ORACLE instance shut down.
ERROR:
ORA-12514: TNS:listener does not currently know of service requested in connect descriptor
Warning: You are no longer connected to ORACLE.

sys@CDB1> startup
SP2-0640: Not connected
sys@CDB1> exit

C:\Users\179818> set oracle_sid=CDB1
C:\Users\179818> sqlplus /nolog
SQL*Plus: Release 12.1.0.2.0 Production on Mon Mar 23 18:08:38 2015
Copyright (c) 1982, 2014, Oracle. All rights reserved.

idle> connect sys/oracle as sysdba
Connected to an idle instance.
idle> startup
ORACLE instance started.

Total System Global Area 1660944384 bytes
Fixed Size                  3046320 bytes
Variable Size             989856848 bytes
Database Buffers          436207616 bytes
Redo Buffers               13729792 bytes
In-Memory Area            218103808 bytes
Database mounted.
Database opened.

idle> connect sys/oracle@cdb1 as sysdba
Connected.
sys@CDB1> show parameter inmemory_area
sys@CDB1>
sys@CDB1> show parameter inmemory
NAME                                          TYPE        VALUE
--------------------------------------------- ----------- -------------------------
inmemory_clause_default                       string
inmemory_force                                string      DEFAULT
inmemory_max_populate_servers                 integer     2
inmemory_query                                string      ENABLE
inmemory_size                                 big integer 208M
inmemory_trickle_repopulate_servers_percent   integer     1
optimizer_inmemory_aware                      boolean     TRUE
sys@CDB1>

In addition to what others have said, I suggest that you set the in-memory value for each PDB.

If you do not, each PDB will inherit the CDB default, which means that any one PDB could consume all the available memory.

See the table in the doc

https://docs.Oracle.com/database/121/Admin/memory.htm#BABJEHAJ

In a multitenant environment, the setting of this parameter in the root is the setting for the entire multitenant container database (CDB). This parameter can also be set in each pluggable database (PDB) to limit the maximum size of the IM column store for each PDB. The sum of the PDB values can be less than, equal to, or greater than the CDB value. However, the CDB value is the maximum amount of memory available in the IM column store for the entire CDB, including the root and all PDBs. Unless this parameter is specifically set for a PDB, the PDB inherits the CDB value, which means that the PDB can use all of the available IM column store for the CDB.

You can also specify a compression level for objects that you plan to store in memory. The trade-off is performance versus space.
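On the 200 MB versus 208 MB question itself, a likely explanation (an assumption here, not something confirmed in this thread) is granule rounding: SGA components are allocated in whole granules, so a requested size is rounded up to the next multiple of the granule size. The sketch below assumes a 16 MB granule, which is plausible for an SGA of about 1.5 GB like the one in the startup output; the actual granule size on a given instance should be checked rather than taken from this example.

```python
MB = 1024 * 1024


def round_up_to_granule(requested_bytes, granule_bytes):
    """Round a requested size up to the next whole multiple of the granule size."""
    if granule_bytes <= 0:
        raise ValueError("granule_bytes must be positive")
    granules = -(-requested_bytes // granule_bytes)  # ceiling division
    return granules * granule_bytes


if __name__ == "__main__":
    # Assumed granule size of 16 MB; 200 MB needs 13 granules.
    allocated = round_up_to_granule(200 * MB, 16 * MB)
    print(allocated, allocated // MB)
```

Under that assumption, 200 MB needs 12.5 granules, which rounds up to 13 granules, or 208 MB. That matches the 218103808 bytes reported as the In-Memory Area at startup.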

-

How to count the log generated per hour?

Hi all

11.2.0.1

AIX 6.1

DESC v$archived_log

Name                  Null? Type
--------------------- ----- -------------
RECID                       NUMBER
STAMP                       NUMBER
NAME                        VARCHAR2(257)
DEST_ID                     NUMBER
THREAD#                     NUMBER
SEQUENCE#                   NUMBER
RESETLOGS_CHANGE#           NUMBER
RESETLOGS_TIME              DATE
RESETLOGS_ID                NUMBER
FIRST_CHANGE#               NUMBER
FIRST_TIME                  DATE
NEXT_CHANGE#                NUMBER
NEXT_TIME                   DATE
BLOCKS                      NUMBER
BLOCK_SIZE                  NUMBER
CREATOR                     VARCHAR2(7)
REGISTRAR                   VARCHAR2(7)
STANDBY_DEST                VARCHAR2(3)
ARCHIVED                    VARCHAR2(3)
APPLIED                     VARCHAR2(9)
DELETED                     VARCHAR2(3)
STATUS                      VARCHAR2(1)
COMPLETION_TIME             DATE
DICTIONARY_BEGIN            VARCHAR2(3)
DICTIONARY_END              VARCHAR2(3)
END_OF_REDO                 VARCHAR2(3)
BACKUP_COUNT                NUMBER
ARCHIVAL_THREAD#            NUMBER
ACTIVATION#                 NUMBER
IS_RECOVERY_DEST_FILE       VARCHAR2(3)
COMPRESSED                  VARCHAR2(3)
FAL                         VARCHAR2(3)
END_OF_REDO_TYPE            VARCHAR2(10)
BACKED_BY_VSS               VARCHAR2(3)

Can you help me with a query showing how much archive log or redo log is generated per hour, based on the view above?

I would like to show my boss why we have days when the database is slow. I will make the comparison that these slow days have many transaction updates.

Assume our application is not being investigated.

Thank you very much

pK

Comments from the AWR report:

=============

'Library cache lock' activity on the 21st.

1/ SQL AREA - Pct Miss is 59%, which is too high, and 5395 SQL statements were reloaded.

It seems that the SGA size is not adequate during the period when the number of connections is higher and the heaviest workload is running on the system.

2/ In 'Time Model Statistics', it reports parse times of 411 seconds and 315 seconds for one hour.

3/ Some queries are doing FULL scans, and direct reads on the 15th amounted to 737 GB, while on the 21st they were 399 GB; this has an impact on the I/O subsystem.

Table scans (long tables): 6566 in 1 hour, 1.82/s

Table scans (short tables): 14388 in 1 hour, 40/sec

These numbers are too high.

Given all this, the best approach would be:

First change:

=========

1/ Increase memory_target to 3 GB (current size is 2 GB). This should be the first test, to see how Pct Miss and parsing behave. Pct Miss should be close to zero.

Next change:

=========

2/ We need to understand more about the FULL scan queries, which may be a matter of statistics. Gather statistics with auto sampling, as Oracle recommends. Do not use a 100% sample size or any other fixed sample size to gather stats. If it is a vendor product, reach out to the vendor to find out whether they have a recommended approach for gathering statistics.

If the problem still persists, identify the top queries to minimize the load on the I/O subsystem. Remember that no OLTP system will work well when FULL scans are reading 737 GB of data in 1 hour; that could saturate the I/O subsystem and slow down processing.

Try the steps above one at a time to see whether each change has a positive effect on the application.

Thank you
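Returning to the original question about redo volume per hour: one rough approach is to treat each archived log as BLOCKS * BLOCK_SIZE bytes and sum those by the hour of COMPLETION_TIME. The sketch below does only the aggregation step in Python, over rows already fetched from v$archived_log. The query text, the `dest_id = 1` filter (intended to avoid counting the same log once per archive destination), and the sample rows are all assumptions for illustration, not output from the poster's system.

```python
from collections import defaultdict
from datetime import datetime

# Assumed query for fetching the input rows; adjust the filter to your setup.
QUERY = """
SELECT completion_time, blocks, block_size
FROM v$archived_log
WHERE dest_id = 1
"""


def redo_bytes_per_hour(rows):
    """Sum blocks * block_size per hour of completion_time.

    rows: iterable of (completion_time: datetime, blocks: int, block_size: int).
    Returns a dict mapping the start of each hour to the bytes archived in it.
    """
    totals = defaultdict(int)
    for completion_time, blocks, block_size in rows:
        hour = completion_time.replace(minute=0, second=0, microsecond=0)
        totals[hour] += blocks * block_size
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    # Hypothetical sample rows standing in for the query result.
    sample = [
        (datetime(2015, 3, 23, 9, 5), 1000, 512),
        (datetime(2015, 3, 23, 9, 40), 3000, 512),
        (datetime(2015, 3, 23, 10, 15), 2000, 512),
    ]
    for hour, total in redo_bytes_per_hour(sample).items():
        print(hour, total)
```

Comparing the hourly totals for a slow day against a normal day would show whether the slow periods coincide with heavier redo generation.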

-

Install the agent in several ORACLE_HOME / instances on a host

Hello

I would like to monitor, with OEM Grid 11 PSU6, some SAP (11g R2) databases running on a single Linux host.

Each database has its own ORACLE_HOME, oracle user and listener, sharing only the ORACLE_BASE (/oracle). Example:

$> ps -ef | grep smon

oraus1 2969 1 0 Feb06 ? 00:00:45 ora_smon_US1

oraus2 13078 1 0 04:30 ? 00:00:00 ora_smon_US2

oraus3 32371 1 0 04:10 ? 00:00:02 ora_smon_US3

What is the best setup for the EM agent?

A shared one, or one for each database (in different AGENT_HOMEs)?

Thank you.

P.

I am also monitoring several SAP DBs on individual hosts.

Install only one agent per host. I used an account dedicated to the agent ("oraagent"), installed in a separate directory tree (not under /oracle). As long as the agent user account belongs to all of the OS groups used by the SAP instances, things seem to work well.

A suggestion (although I use 12c): I had a lot more luck when each SAP database had its own, non-shared oraInventory (/oracle/US1/oraInventory, /oracle/US2/oraInventory and so on, each pointed to by that instance's $ORACLE_HOME/oraInst.loc). We had a few incorrect oraInventory entries caused by system file clones and database moves, and they confused 12c into making some incorrect associations. I have not run 11g to monitor SAP, so that might not be a problem for you.

Another suggestion: my SAP databases slowed to a crawl and started running out of temporary space as soon as I brought them into OEM and viewed the DB target page. See MOS Note 748251.1 about stats on X$KCCRSR if you have any problems there.

-

Hi all

Recently we migrated from 9.2.0.4 to 10.2.0.4, and database performance is slow in the newer version. Checking the alert log, we found this:

Thread 1 cannot allocate new log, sequence 1779
Checkpoint not complete
  Current log# 6 seq# 1778 mem# 0: /oradata/lipi/redo6.log
  Current log# 6 seq# 1778 mem# 1: /oradata/lipi/redo06a.log
Wed Mar 10 15:19:27 2010
Thread 1 advanced to log sequence 1779 (LGWR switch)
  Current log# 1 seq# 1779 mem# 0: /oradata/lipi/redo01.log
  Current log# 1 seq# 1779 mem# 1: /oradata/lipi/redo01a.log
Wed Mar 10 15:20:45 2010
Thread 1 advanced to log sequence 1780 (LGWR switch)
  Current log# 2 seq# 1780 mem# 0: /oradata/lipi/redo02.log
  Current log# 2 seq# 1780 mem# 1: /oradata/lipi/redo02a.log
Wed Mar 10 15:21:44 2010
Thread 1 advanced to log sequence 1781 (LGWR switch)
  Current log# 3 seq# 1781 mem# 0: /oradata/lipi/redo03.log
  Current log# 3 seq# 1781 mem# 1: /oradata/lipi/redo03a.log
Wed Mar 10 15:23 2010
Thread 1 advanced to log sequence 1782 (LGWR switch)
  Current log# 4 seq# 1782 mem# 0: /oradata/lipi/redo04.log
  Current log# 4 seq# 1782 mem# 1: /oradata/lipi/redo04a.log
Wed Mar 10 15:24:48 2010
Thread 1 advanced to log sequence 1783 (LGWR switch)
  Current log# 5 seq# 1783 mem# 0: /oradata/lipi/redo5.log
  Current log# 5 seq# 1783 mem# 1: /oradata/lipi/redo05a.log
Wed Mar 10 15:25 2010
Thread 1 cannot allocate new log, sequence 1784
Checkpoint not complete
  Current log# 5 seq# 1783 mem# 0: /oradata/lipi/redo5.log
  Current log# 5 seq# 1783 mem# 1: /oradata/lipi/redo05a.log
Wed Mar 10 15:25:27 2010
Thread 1 advanced to log sequence 1784 (LGWR switch)
  Current log# 6 seq# 1784 mem# 0: /oradata/lipi/redo6.log
  Current log# 6 seq# 1784 mem# 1: /oradata/lipi/redo06a.log
Wed Mar 10 15:28:11 2010
Thread 1 advanced to log sequence 1785 (LGWR switch)
  Current log# 1 seq# 1785 mem# 0: /oradata/lipi/redo01.log
  Current log# 1 seq# 1785 mem# 1: /oradata/lipi/redo01a.log
Wed Mar 10 15:29:56 2010
Thread 1 advanced to log sequence 1786 (LGWR switch)
  Current log# 2 seq# 1786 mem# 0: /oradata/lipi/redo02.log
  Current log# 2 seq# 1786 mem# 1: /oradata/lipi/redo02a.log
Wed Mar 10 15:31:22 2010
Thread 1 cannot allocate new log, sequence 1787
Private strand flush not complete
  Current log# 2 seq# 1786 mem# 0: /oradata/lipi/redo02.log
  Current log# 2 seq# 1786 mem# 1: /oradata/lipi/redo02a.log
Wed Mar 10 15:31:29 2010
Thread 1 advanced to log sequence 1787 (LGWR switch)
  Current log# 3 seq# 1787 mem# 0: /oradata/lipi/redo03.log
  Current log# 3 seq# 1787 mem# 1: /oradata/lipi/redo03a.log
Wed Mar 10 15:31:40 2010
Thread 1 cannot allocate new log, sequence 1788
Checkpoint not complete
  Current log# 3 seq# 1787 mem# 0: /oradata/lipi/redo03.log
  Current log# 3 seq# 1787 mem# 1: /oradata/lipi/redo03a.log
Wed Mar 10 15:31:47 2010
Thread 1 advanced to log sequence 1788 (LGWR switch)
  Current log# 4 seq# 1788 mem# 0: /oradata/lipi/redo04.log
  Current log# 4 seq# 1788 mem# 1: /oradata/lipi/redo04a.log

So my question is: should we increase the redo log size to get rid of the "Checkpoint not complete" message, and if so, what would be the optimum size for the redo log files?

Piyush

A redo log file should hold at least 20 minutes of data, so that a log switch happens about every 20 minutes.

That is the best practice; otherwise frequent log switching occurs, which increases I/O and waits.

The optimum size can be obtained by querying the OPTIMAL_LOGFILE_SIZE column of the V$INSTANCE_RECOVERY view.

Published by: adnanKaysar on March 11, 2010 17:03

-

Hello

I'm using a 5 MB SQLite database in my application; on first use of the app, the db is copied to the device's memory card.

The problem is that querying the db is very slow.

Suggestions for performance improvements?

Thank you!

you don't know.

1) Check which fields in your SELECT statement you are using in the WHERE / ORDER BY clauses

2) Use your favorite SQLite manager to create indexes on those fields in the table that the SELECT statement targets

3) Use prepared statements if you want to run a query in a loop, and just use bind to pass the parameters

Check http://docs.blackberry.com/en/developers/deliverables/8682/SQLite_overview_701956_11.jsp

http://today.Java.NET/article/2010/03/17/getting-started-Java-and-SQLite-BlackBerry-OS-50
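The three suggestions above can be illustrated with a small sketch. It uses Python's built-in sqlite3 module rather than the BlackBerry Java API from the question, purely so that it runs anywhere; the `items` table, its columns, and the index name are hypothetical. It creates an index on the column used in the WHERE clause, checks with EXPLAIN QUERY PLAN that the index is picked up, and reuses one parameterized statement in a loop.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, category TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO items (category, name) VALUES (?, ?)",
    [(f"cat{i % 10}", f"item{i}") for i in range(1000)],
)

# Steps 1 and 2: index the column that appears in the WHERE clause.
conn.execute("CREATE INDEX idx_items_category ON items (category)")

# Step 3: one statement text with a bound parameter, reused for every call.
SELECT_BY_CATEGORY = "SELECT name FROM items WHERE category = ? ORDER BY name"


def query_plan(connection, sql, params=()):
    """Return SQLite's plan description for a statement as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


def count_by_category(connection, categories):
    """Run the same parameterized query for each category."""
    return {
        c: len(connection.execute(SELECT_BY_CATEGORY, (c,)).fetchall())
        for c in categories
    }


if __name__ == "__main__":
    print(query_plan(conn, SELECT_BY_CATEGORY, ("cat0",)))
    print(count_by_category(conn, ["cat0", "cat3"]))
```

Checking the plan before and after creating the index is a quick way to confirm that a given query actually benefits from it.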
